Hello,

I'm trying to update my stack to OpenVINO 2022 to benefit from the latest features, and I'd like to use this update to gain some performance by running inference on multiple NCS2 sticks asynchronously.

However, I'm a bit stuck despite reading quite a lot of documentation on your website and in the community. I'm finding many examples of this for the 2021 version, but I can't manage to port them to the 2022 API 2.0.

The closest example I found is this one: https://github.com/openvinotoolkit/openvino/tree/master/samples/cpp/classification_sample_async but it doesn't handle multiple devices.

Would you have a very basic example of a model loaded and run in batches on more than one NCS2? That would be immensely helpful.

Best regards,

Greg

Hi Gaudibert,

Thanks for reaching out to us.

You can use multiple Intel® Neural Compute Stick 2 devices asynchronously as follows:

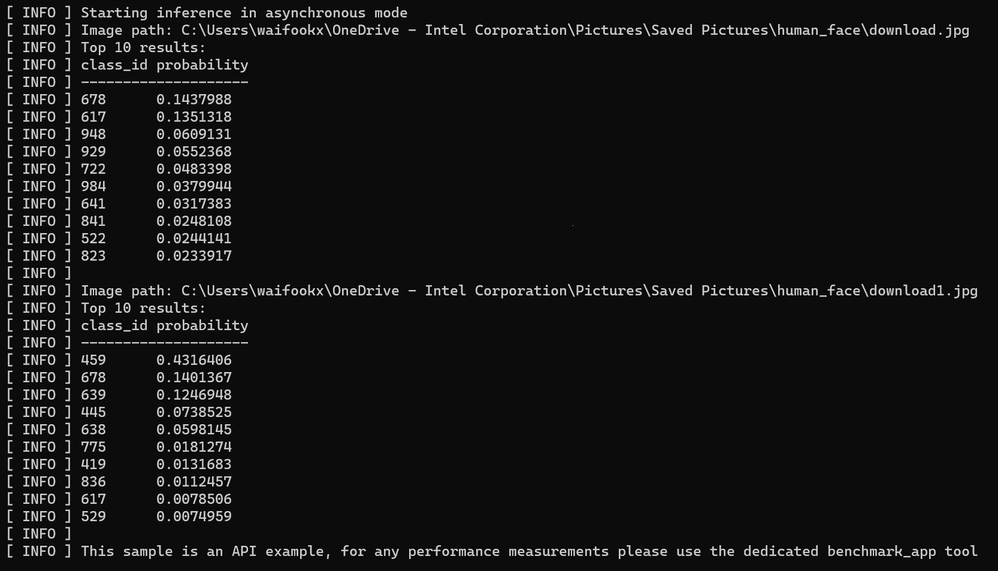

1. Run the Hello Query Device Python sample, located in the following directory, to list the available devices and their names:

cd <INSTALL_DIR>\samples\python\hello_query_device

python hello_query_device.py

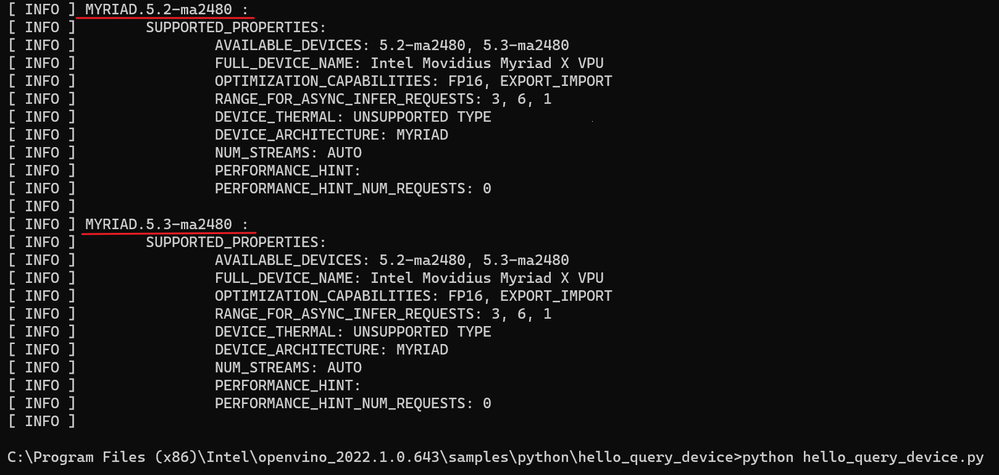

2. Use the Multi-Device plugin with the following command, passing the MYRIAD device names reported in step 1:

python classification_sample_async.py -m "<path_to_model>\alexnet.xml" -i "<path_to_input_1>" "<path_to_input_2>" -d MULTI:MYRIAD.5.2-ma2480,MYRIAD.5.3-ma2480
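If you are porting your own code rather than running the sample, the same pattern in the OpenVINO 2022 Python API (API 2.0) might look roughly like the sketch below. It compiles one model for a `MULTI:` device built from every `MYRIAD` entry in `Core().available_devices`, then submits inputs through `AsyncInferQueue` so the Multi-Device plugin can spread requests across the sticks. The helper names `build_multi_device` and `run`, the random FP32 input data, and the assumption of a single input with a static shape are all hypothetical choices for illustration, not part of the sample; please check the calls against the documentation for your installed 2022.x release.

```python
# Minimal sketch (OpenVINO 2022.x Python API 2.0): one model on several NCS2 sticks
# behind the MULTI plugin, fed asynchronously through AsyncInferQueue.
# Assumptions (hypothetical): the model has a single FP32 input with a static shape,
# and random data stands in for real preprocessed images.
import sys
from typing import Iterable, List


def build_multi_device(available: Iterable[str], prefix: str = "MYRIAD") -> str:
    """Join every device whose name starts with `prefix` into one MULTI device string."""
    sticks = [name for name in available if name.startswith(prefix)]
    if not sticks:
        raise RuntimeError(f"no {prefix} devices found")
    return "MULTI:" + ",".join(sticks)


def run(model_path: str, num_inputs: int = 8) -> List:
    # Imported here so build_multi_device can be used without OpenVINO installed.
    import numpy as np
    from openvino.runtime import AsyncInferQueue, Core

    core = Core()
    # e.g. "MULTI:MYRIAD.5.2-ma2480,MYRIAD.5.3-ma2480", as in the command above
    device = build_multi_device(core.available_devices)
    compiled = core.compile_model(core.read_model(model_path), device)

    results: List = [None] * num_inputs

    def on_done(request, index: int) -> None:
        # Copy the first output: the request's buffers are reused by later jobs.
        results[index] = next(iter(request.results.values())).copy()

    # jobs defaults to 0, which lets OpenVINO choose the optimal number of requests.
    queue = AsyncInferQueue(compiled)
    queue.set_callback(on_done)

    shape = list(compiled.input(0).shape)
    for i in range(num_inputs):
        data = np.random.rand(*shape).astype(np.float32)  # placeholder input
        # Waits only for an idle request, then starts it without waiting for the result.
        queue.start_async({0: data}, i)
    queue.wait_all()
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    outputs = run(sys.argv[1])
    print(f"{len(outputs)} results")
```

Saved under a hypothetical name such as `multi_ncs2_sketch.py`, it would be run as `python multi_ncs2_sketch.py "<path_to_model>\alexnet.xml"`. Letting MULTI distribute the requests avoids managing one compiled model per stick by hand.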

For more information, please refer to Running on multiple devices simultaneously and Multi-Device Plugin with the Intel® Neural Compute Stick 2.

Regards,

Wan

Hello Wan,

Thank you for your quick reply. That is exactly what I needed.

I should have looked inside the OpenVINO repository and not only online; sorry about that!

Cheers,

Greg

Hi Gaudibert,

Thanks for your question.

This thread will no longer be monitored since we have provided the requested information.

If you need any additional information from Intel, please submit a new question.

Best regards,

Wan
