I converted a PyTorch model with a custom-defined network to an OpenVINO model and ran inference.

However, the inference results of the PyTorch model and the OpenVINO model differ significantly.
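To make "differ significantly" measurable, it can help to flatten both outputs (the PyTorch tensor and the OpenVINO result) into lists and compare them element by element. The following is a minimal pure-Python sketch, assuming both outputs are already flattened to lists of floats; the function name and default tolerances are illustrative choices, not part of either library.

```python
from typing import Dict, Sequence, Union


def compare_outputs(reference: Sequence[float], candidate: Sequence[float],
                    rtol: float = 1e-3, atol: float = 1e-4) -> Dict[str, Union[float, bool]]:
    """Compare two flattened output vectors element by element."""
    if len(reference) != len(candidate):
        raise ValueError("outputs have different lengths")
    max_abs = 0.0
    all_close = True
    for r, c in zip(reference, candidate):
        diff = abs(r - c)
        max_abs = max(max_abs, diff)
        # Same criterion as numpy.allclose(reference, candidate): |r - c| <= atol + rtol * |c|
        if diff > atol + rtol * abs(c):
            all_close = False
    return {"max_abs_diff": max_abs, "all_close": all_close}


# Example: a tiny numeric difference versus a real mismatch.
print(compare_outputs([1.0, 2.0], [1.0, 2.00001]))
print(compare_outputs([1.0, 2.0], [1.0, 2.5]))
```

FP32 conversions usually agree to within small tolerances, so a large `max_abs_diff` tends to point at a preprocessing or input mismatch rather than numeric precision.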

I used the following command to convert the model:

python .\mo.py --input_model [path] --data_type FP32 --output_dir [path]

I would like to add some parameters to the model conversion. Which of the following parameters should I add?

- --input_shape

- --mean_values

- --scale_values

If I have to add mean_values and scale_values, please tell me how to calculate them.

My model takes an image and other information as inputs. Do the mean values and scale values only affect the image?
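The mean and scale values are not derived from the network itself; they should reproduce whatever normalization was applied to the images during training. If a model was trained with its own statistics rather than a published preset, one way to obtain them is to compute the per-channel mean and standard deviation of the training images in the same pixel range the IR will receive. A minimal sketch, assuming the pixels are available as (R, G, B) tuples in the 0-255 range; the function names are hypothetical:

```python
import math
from typing import List, Sequence, Tuple

Pixel = Tuple[float, float, float]  # one (R, G, B) pixel in the 0-255 range


def channel_mean_std(pixels: Sequence[Pixel]) -> Tuple[List[float], List[float]]:
    """Per-channel mean and population standard deviation over all pixels."""
    n = len(pixels)
    if n == 0:
        raise ValueError("no pixels given")
    means = [sum(p[c] for p in pixels) / n for c in range(3)]
    stds = [math.sqrt(sum((p[c] - means[c]) ** 2 for p in pixels) / n) for c in range(3)]
    return means, stds


def format_values(values: Sequence[float]) -> str:
    """Format values in the [v1,v2,v3] style used on the Model Optimizer command line."""
    return "[" + ",".join(f"{v:g}" for v in values) + "]"


# Toy data: one black and one white pixel.
means, stds = channel_mean_std([(0, 0, 0), (255, 255, 255)])
print("--mean_values=" + format_values(means))
print("--scale_values=" + format_values(stds))
```

The resulting standard deviations play the role of `--scale_values`, since the conversion divides by the scale after subtracting the mean.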


Hi 2Yu,

Thanks for reaching out to us.

Which specific PyTorch model are you using? The mean and scale values depend on the model. For example, check out the single-human-pose-estimation-0001 PyTorch model among the OpenVINO pre-trained models, which states the mean and scale values for the model. You can also find the Model Optimizer arguments for that PyTorch model in its model.yml file.

The mean values and scale values are applied per channel to the input image. Values are provided in (R, G, B) format, and they can be defined for a specific input of your model. The exact meaning and order of the channels depend on how your original model was trained. Therefore, these two parameters only affect your image.

Meanwhile, you can adjust the Model Optimizer conversion process by using the General Conversion Parameters.

Regards,

Aznie

Hi Aznie

Thank you for replying.

I want to convert the following model, which I originally created with PyTorch, into an IR model.

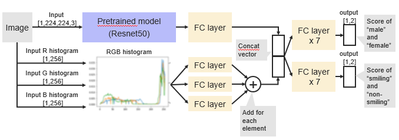

As shown in the figure below, I used ResNet-50, which is available on the official PyTorch website, for the pretrained part.

I added all the other FC layers myself.

This model requires four inputs.

The shape of each input is shown in the figure.

Detailed information about this model is shown here.

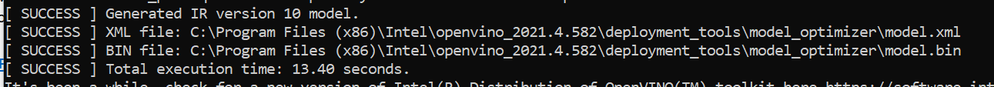

First, I converted the PyTorch model to an ONNX model.

Second, based on your advice, I used the following command to convert the ONNX model into an IR model:

python .\mo.py --input_model [path] --data_type FP32 --output_dir [path] --mean_values=[123.675,116.28,103.53] --scale_values=[58.395,57.12,57.375]
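The values in this command appear to be the standard ImageNet normalization used by torchvision's pretrained ResNet-50 (mean 0.485, 0.456, 0.406 and std 0.229, 0.224, 0.225 for inputs in [0, 1]) multiplied by 255, so that they apply to raw 0-255 pixels. A small sketch of that arithmetic, assuming the IR will be fed unnormalized 0-255 RGB images:

```python
from typing import List, Sequence

# torchvision's commonly used ImageNet statistics for inputs scaled to [0, 1]
IMAGENET_MEAN = [0.485, 0.456, 0.406]
IMAGENET_STD = [0.229, 0.224, 0.225]


def to_pixel_range(values: Sequence[float], max_pixel: float = 255.0) -> List[float]:
    """Rescale [0, 1]-range statistics to the 0-255 range of raw image pixels."""
    return [round(v * max_pixel, 4) for v in values]


mean_values = to_pixel_range(IMAGENET_MEAN)   # [123.675, 116.28, 103.53]
scale_values = to_pixel_range(IMAGENET_STD)   # [58.395, 57.12, 57.375]
print(f"--mean_values=[{','.join(f'{v:g}' for v in mean_values)}]")
print(f"--scale_values=[{','.join(f'{v:g}' for v in scale_values)}]")
```

These numbers only make sense for the image input; the other three inputs of this model would need their own treatment, or none.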

But the following error occurred:

[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.middle.AddMeanScaleValues.AddMeanScaleValues'>): Numbers of inputs and mean/scale values do not match.

For more information please refer to Model Optimizer FAQ, question #61. (https://docs.openvinotoolkit.org/latest/openvino_docs_MO_DG_prepare_model_Model_Optimizer_FAQ.html?question=61#question-61)

Could you please tell me how to convert the model?

If needed, I can send you an ONNX model with the network structure shown in the picture via email.

Regards,

2Yu

Hi 2Yu,

The error shows that the number of mean/scale values does not match the number of inputs. By the way, how did you convert your PyTorch model into ONNX format? I would advise you to use the script from OpenVINO; check out the Export PyTorch* Model to ONNX* Format documentation.

Meanwhile, I've validated that the public ResNet-50 model from the ONNX Model Zoo can be successfully converted into Intermediate Representation (IR) files. Model Optimizer command used: python mo.py --input_model "resnet50/model.onnx"

For further investigation on our side, you can share the ONNX model privately to my email:

noor.aznie.syaarriehaahx.binti.baharuddin@intel.com

Regards,

Aznie

Hi Aznie

Please let me put the model conversion issue on hold, because my OpenVINO inference script may be incorrect.
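If the script is the suspect, one pitfall worth ruling out (a common cause in general, not a diagnosis of this particular script) is normalizing the image twice: once in the inference script and once more inside an IR converted with --mean_values/--scale_values. The sketch below uses a single channel and the mean/scale pair from the command above to show how a second pass collapses all pixels to nearly the same value:

```python
from typing import List, Sequence


def normalize(pixels: Sequence[float], mean: float, scale: float) -> List[float]:
    """Per-channel normalization as described in this thread: (x - mean) / scale."""
    return [(x - mean) / scale for x in pixels]


raw = [0.0, 128.0, 255.0]        # raw pixels of one channel (0-255)
mean, scale = 123.675, 58.395    # values passed to Model Optimizer for the R channel

# Intended: the script feeds raw pixels and the IR normalizes once.
once = normalize(raw, mean, scale)

# Pitfall: the script already normalizes, then the IR normalizes again.
twice = normalize(normalize(raw, mean, scale), mean, scale)

print("once: ", [round(v, 4) for v in once])   # spread roughly from -2.1 to 2.2
print("twice:", [round(v, 4) for v in twice])  # all squeezed near -2.1
```

The converse also happens: if the IR was converted without these flags, the script must apply the normalization itself.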

Hi, @Aznie_Intel

By the way, what happens inside OpenVINO when I set --mean_values and --scale_values for model conversion?

Are these values used to normalize the data?

Regards,

2Yu

Hi 2Yu,

Yes. The mean_values and scale_values are used to normalize the input data, including for models with multiple inputs. --scale_values is the value that input values are divided by (scaling), and --mean_values is the value subtracted from input values before scaling (one value per channel).

If the model has multiple inputs and you want to provide mean/scale values, you need to pass those values for each input. More specifically, the number of value sets passed must be the same as the number of inputs of the model.
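Conceptually, the preprocessing added for these flags computes (x - mean) / scale separately for each channel of the input. A plain-Python sketch of that arithmetic for one image in CHW layout; the layout and function name are assumptions for illustration, and to our understanding Model Optimizer inserts equivalent subtract/multiply operations into the IR graph rather than running anything like this code:

```python
from typing import List

Image = List[List[List[float]]]  # one image in CHW layout: [channel][row][col]


def apply_mean_scale(image: Image, mean_values: List[float], scale_values: List[float]) -> Image:
    """Subtract the per-channel mean, then divide by the per-channel scale."""
    if not (len(image) == len(mean_values) == len(scale_values)):
        raise ValueError("need one mean and one scale value per channel")
    return [
        [[(px - m) / s for px in row] for row in channel]
        for channel, m, s in zip(image, mean_values, scale_values)
    ]


# Toy 3-channel image with a single row of two pixels per channel.
img = [[[10.0, 20.0]], [[30.0, 40.0]], [[50.0, 60.0]]]
print(apply_mean_scale(img, [10.0, 20.0, 30.0], [1.0, 2.0, 10.0]))
```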
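For a model with several inputs, such as the four-input model in this thread, the Model Optimizer help text also shows a per-input form that names the target input, for example `--mean_values data[255,255,255],info[255,255,255]`. The sketch below only assembles such a string. The input name `image` is hypothetical (use the actual input name from your ONNX model), and whether values for a single named input are accepted should be checked against the Model Optimizer documentation for your version.

```python
from typing import Dict, Sequence


def per_input_arg(flag: str, values_by_input: Dict[str, Sequence[float]]) -> str:
    """Build a Model Optimizer argument of the form name[v1,v2,...],name2[...]."""
    parts = []
    for name, values in values_by_input.items():
        joined = ",".join(f"{v:g}" for v in values)
        parts.append(f"{name}[{joined}]")
    return f"{flag} " + ",".join(parts)


# Hypothetical input name: only the image input gets normalization values.
print(per_input_arg("--mean_values", {"image": [123.675, 116.28, 103.53]}))
print(per_input_arg("--scale_values", {"image": [58.395, 57.12, 57.375]}))
```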

Regards,

Aznie

Hi, @Aznie_Intel

Thank you for your reply.

I now understand the role of --mean_values and --scale_values.

Regards,

2Yu

Hi 2Yu,

Glad to hear that. Since this issue has been resolved, this thread will no longer be monitored. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
