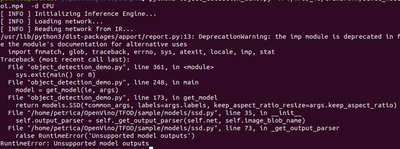

I have successfully converted my model, but I cannot run it: the demo fails with the error "unsupported model output".

I will attach the saved model and the parameters that I have used for conversion.

This is how I tested the model (using the sample file from the toolkit):

python3 object_detection_demo.py -m ./TFOD_40/transform/saved_model.xml -at ssd -i ./mitoi.mp4 -d MYRIAD

And this is how I converted my model:

mo_tf.py --saved_model_dir ./TFOD_40/saved_model --tensorflow_object_detection_api_pipeline_config ./TFOD_40/pipeline.config --output_dir ./TFOD_40/transform

Hi Petrica,

Thanks for reaching out to us.

The object_detection_demo expects a detection_out output with shape [1,1,N,7], where each detection has the format [image_id, label, conf, x_min, y_min, x_max, y_max]. You can refer to SSD with MobileNet V2, one of the validated models for the demo.

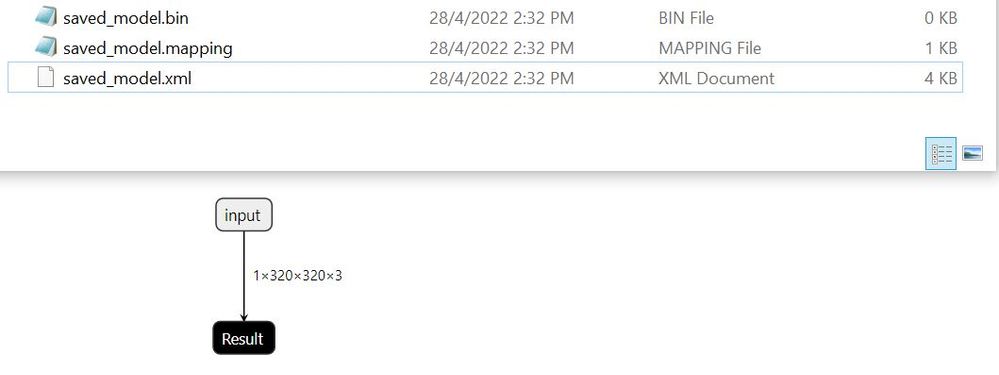
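To make the expected output layout concrete, here is a minimal Python sketch that checks a shape against [1,1,N,7] and unpacks each detection row in the order given above. The function names, the confidence threshold, and the sample values are assumptions made for illustration; this is not the demo's own code, and the demo's actual check may differ.

```python
def has_ssd_output_shape(shape):
    """Return True if `shape` looks like [1, 1, N, 7]."""
    return len(shape) == 4 and shape[0] == 1 and shape[1] == 1 and shape[3] == 7


def parse_detections(detection_out, conf_threshold=0.5):
    """Turn a [1, 1, N, 7] nested sequence into a list of dicts.

    Each row is [image_id, label, conf, x_min, y_min, x_max, y_max].
    The threshold default is an arbitrary value chosen for this sketch.
    """
    results = []
    for row in detection_out[0][0]:
        image_id, label, conf, x_min, y_min, x_max, y_max = row
        if conf < conf_threshold:
            continue  # skip low-confidence detections
        results.append({
            "image_id": int(image_id),
            "label": int(label),
            "conf": float(conf),
            "box": (float(x_min), float(y_min), float(x_max), float(y_max)),
        })
    return results


# Made-up output with N = 2 detections, for illustration only.
sample = [[[
    [0, 1, 0.9, 0.1, 0.2, 0.5, 0.6],
    [0, 2, 0.3, 0.0, 0.0, 0.2, 0.2],
]]]
detections = parse_detections(sample)
print(has_ssd_output_shape((1, 1, 2, 7)), detections)
```

A model whose output does not have this shape (for example, several separate output tensors) would fail a check like `has_ssd_output_shape`, which is consistent with the "unsupported model output" error.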

Besides, I also noticed that your IR files are empty, so you will need to go back to the conversion step. I was also unable to convert your model. In the previous discussion, you mentioned that you were able to convert the model with the same pipeline config file in the past. Could you tell me what has changed since then that might have led to the conversion failure?

Regards,

Peh

About two months ago I successfully converted the model with a similar pipeline config file. The only change is that I replaced this train_config argument:

    data_augmentation_options {
      random_horizontal_flip {
      }
    }

with the following parameters:

    data_augmentation_options {
      random_adjust_contrast {
      }
    }
    data_augmentation_options {
      random_adjust_saturation {
      }
    }
    data_augmentation_options {
      random_adjust_brightness {
      }
    }

Since then, I have not been able to convert the old model with the transformations config (.json) file. I probably passed another parameter to the conversion script, but I cannot remember exactly which one.

From your answer I understand that my model cannot be converted. Or is there a different way for me to use the Intel Neural Compute Stick with my model?

Hi Petrica,

You can refer to the parameters in the config files here.

Based on your current model (saved model format), the node names in your model do not match the node names in the JSON file. These node names are listed under "start_points" and "end_points" of the entry with "id: ObjectDetectionAPIProposalReplacement" in the JSON file.

Hence, converting your model without the transformation config file (JSON file) results in an empty IR.
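One way to see which node names fail to match is to compare the "start_points" and "end_points" in the JSON file against the node names of the model. The sketch below assumes the JSON file is a list of entries in which those two keys sit under an "instances" object; the helper name `missing_points`, the sample node names, and the inline JSON fragment are hypothetical and stand in for the real file and graph.

```python
import json


def missing_points(config, replacement_id, graph_node_names):
    """Return (key, name) pairs from the config that are absent in the graph."""
    missing = []
    for entry in config:
        if entry.get("id") != replacement_id:
            continue
        instances = entry.get("instances", {})
        if not isinstance(instances, dict):
            continue  # entries matched by scope keep a list of patterns here
        for key in ("start_points", "end_points"):
            for name in instances.get(key, []):
                if name not in graph_node_names:
                    missing.append((key, name))
    return missing


# Hypothetical fragment; real files contain more entries and attributes.
sample_config = json.loads("""
[
  {
    "id": "ObjectDetectionAPIProposalReplacement",
    "match_kind": "points",
    "instances": {
      "start_points": ["example/start_node"],
      "end_points": ["example/end_node"]
    }
  }
]
""")

# Hypothetical set of node names taken from the model's graph.
graph_nodes = {"example/start_node", "StatefulPartitionedCall"}

print(missing_points(sample_config,
                     "ObjectDetectionAPIProposalReplacement",
                     graph_nodes))
```

Any pair reported by this check names a node that the JSON file expects but the model does not contain.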

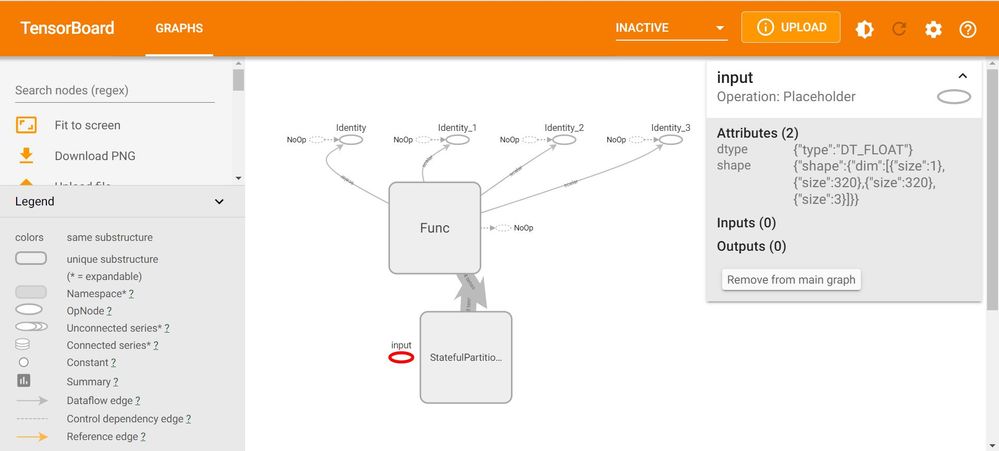
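Since the conversion command earlier in this thread does not pass a transformation config file, the following sketch shows how such a command could be assembled with the `--transformations_config` option of Model Optimizer. The JSON path is a placeholder, because the thread does not say which file fits this model, and the helper name `build_mo_command` is an assumption made for illustration. The sketch only builds and prints the command; it does not run it.

```python
import shlex


def build_mo_command(saved_model_dir, pipeline_config,
                     transformations_config, output_dir):
    """Assemble the mo_tf.py argument list without executing it."""
    return [
        "mo_tf.py",
        "--saved_model_dir", saved_model_dir,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--transformations_config", transformations_config,
        "--output_dir", output_dir,
    ]


command = build_mo_command(
    "./TFOD_40/saved_model",
    "./TFOD_40/pipeline.config",
    "<path_to_transformations_config>.json",  # placeholder, not a real file
    "./TFOD_40/transform",
)

# Print a shell-safe version of the command for copy and paste.
print(" ".join(shlex.quote(part) for part in command))
```

Keeping the arguments in a list makes it easy to swap the JSON file while retrying the conversion with different transformation configs.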

Besides, I also noticed that the input of your model is not connected to the rest of the graph, which looks unusual to me. Please verify on your side that your model is trained completely.

You can use Model Optimizer to visualize the input graph of the model in TensorBoard.

1. Dump the input graph of the model.

mo_tf.py --saved_model_dir=<saved_model> --tensorboard_logdir=<TENSORBOARD_LOGDIR>

2. Visualize the input graph of the model in TensorBoard.

tensorboard --logdir=<TENSORBOARD_LOGDIR>

3. Copy and paste the output URL in the browser.

Regards,

Peh

Hi Petrica,

Thank you for your question. If you need any additional information from Intel, please submit a new question as this thread is no longer being monitored.

Regards,

Peh
