
Hi,

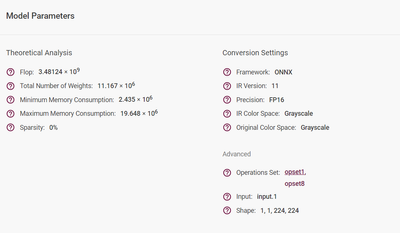

I have a custom model built on top of ResNet-18 (PyTorch) in ONNX format. The model loads successfully, and its input shape is [1,1,224,224] = [Batch, Channel, Height, Width].

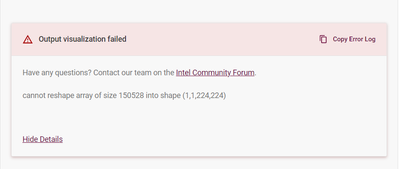

When creating the project, a validation dataset must be provided; however, the images are loaded with 3 channels even though grayscale images are given.

This results in an error at run time: the model expects [1,1,224,224], but each image is fed as [1,3,224,224].

224 x 224 x 3 = 150528 values per image, whereas the model accepts 224 x 224 x 1 = 50176.
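A quick NumPy sketch (not from the thread; the variable names are illustrative) of why the two shapes cannot be reconciled: copying a 3-channel image into a 1-channel batch slot raises the same `ValueError` that appears in the benchmark_app log of this thread.

```python
import numpy as np

# Shapes from the thread: the model expects 1 channel, the loaded image has 3.
model_input_shape = (1, 1, 224, 224)  # [N, C, H, W]
loaded_image_shape = (3, 224, 224)    # [C, H, W] after resizing

print(int(np.prod(loaded_image_shape)))     # 150528 values per image
print(int(np.prod(model_input_shape[1:])))  # 50176 values the model accepts

# Filling a batch slot with the 3-channel image fails: a size-3 axis
# cannot be broadcast into a size-1 axis.
batch = np.zeros(model_input_shape, dtype=np.float32)
image = np.zeros(loaded_image_shape, dtype=np.float32)
try:
    batch[0] = image
except ValueError as err:
    print(err)
```

Broadcasting can stretch a size-1 axis to a larger one, but never the reverse, so the image has to be reduced to one channel before it reaches the model.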

I am using the latest version of DL Workbench.


Hi Bansi,

Thanks for reaching out.

The model input blob for resnet-18-pytorch from Open Model Zoo consists of a single image of "1x3x224x224" in RGB order. From your model parameters information, your model input is "1x1x224x224".

How did you convert your PyTorch model to ONNX? Could you share the steps you used?

You may also share your ONNX file so I can test it on my end.

You can share it here or privately to my email: noor.aznie.syaarriehaahx.binti.baharuddin@intel.com

Regards,

Aznie


Hi Aznie,

Please find my workaround below. I found that the current Workbench has an issue automatically converting the input to 1 channel.

Older Version
-------------

I tested with this version of Workbench and did not face any issue:

`docker run -p 0.0.0.0:5665:5665 --name workbench -it openvino/workbench:2021.1`

I was able to profile with an RGB dataset and convert the model to INT8.

Latest Version
-------------

However, on the latest version, it fails when I create a project: the model is converted to FP32, but inference fails.

How do we tell OpenVINO, when creating the project, that the input image needs to be converted to grayscale?

I have set Original Color Space to Gray, but during inference it is unable to convert the RGB/gray image to a 1-channel input.

That said, when I tested accuracy by providing an accuracy config, inference ran successfully on RGB images.

The accuracy config:

```yaml
datasets:
  - annotation_conversion:
      converter: image_processing
      data_dir: $DATASET_PATH
    data_source: $DATASET_PATH
    metrics:
      - presenter: print_vector
        type: accuracy
    name: dataset
    preprocessing:
      - type: resize
        size: 224
      - type: rgb_to_gray
    subsample_size: 100%
```
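The `rgb_to_gray` step in this accuracy config is presumably why the accuracy run succeeds on RGB images. A minimal NumPy sketch of an equivalent conversion for a dataset image that is already resized to 224 x 224 follows; it assumes the standard ITU-R BT.601 luma weights (the ones OpenCV documents for RGB-to-gray) and is not Accuracy Checker's actual implementation. `rgb_hwc_to_gray_nchw` is a hypothetical helper name.

```python
import numpy as np

# ITU-R BT.601 luma weights, in R, G, B order (assumed; OpenCV uses these for RGB2GRAY).
BT601_RGB_WEIGHTS = np.array([0.299, 0.587, 0.114], dtype=np.float32)


def rgb_hwc_to_gray_nchw(image: np.ndarray) -> np.ndarray:
    """Convert an RGB image laid out as [H, W, 3] into a [1, 1, H, W] batch."""
    if image.ndim != 3 or image.shape[2] != 3:
        raise ValueError(f"expected [H, W, 3], got {image.shape}")
    # Matrix product over the last axis: a weighted sum of R, G, B -> [H, W].
    gray = image.astype(np.float32) @ BT601_RGB_WEIGHTS
    # Add the batch and channel axes the model expects: [N, C, H, W].
    return gray[np.newaxis, np.newaxis, :, :]


rgb = np.full((224, 224, 3), 128, dtype=np.uint8)
tensor = rgb_hwc_to_gray_nchw(rgb)
print(tensor.shape)  # (1, 1, 224, 224)
```

Running a conversion like this over the dataset before uploading would only help if the images are then stored and loaded as single-channel; if the tool decodes every file as 3-channel color, the channel count will still be 3.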

=================================================

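Here is my code to convert the model to ONNX:

```python
import torch

# IMAGE CONFIG
IMG_HEIGHT = 224
IMG_WIDTH = 224
IMG_CHANNEL = 1  # grayscale input: a single channel
BATCH = 1

# PyTorch uses NCHW layout: [batch, channels, height, width]
DUMMY_INPUT = torch.zeros(BATCH, IMG_CHANNEL, IMG_HEIGHT, IMG_WIDTH, dtype=torch.float, requires_grad=False)

# model_r (the trained model) and RESNET_18_PATH (output .onnx path) are defined elsewhere
torch.onnx.export(model_r, DUMMY_INPUT, RESNET_18_PATH, export_params=True)
```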
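One possible workaround, not confirmed in this thread and not a Workbench feature: since the latest Workbench feeds 3-channel images, the grayscale conversion can be folded into the model itself and the model exported with a 3-channel dummy input. `RgbToGrayWrapper` is a hypothetical helper; it assumes the channels arrive in RGB order (if the runtime supplies BGR, as OpenCV does by default, the weights would need to be reversed) and uses ITU-R BT.601 luma weights.

```python
import torch
import torch.nn as nn


class RgbToGrayWrapper(nn.Module):
    """Accept 3-channel NCHW input and feed a 1-channel model (hypothetical helper)."""

    def __init__(self, gray_model: nn.Module):
        super().__init__()
        self.gray_model = gray_model
        # BT.601 weights, shaped (1, 3, 1, 1) to broadcast over batch, height, width.
        weights = torch.tensor([0.299, 0.587, 0.114]).view(1, 3, 1, 1)
        # A buffer is saved with the model and exported as a constant.
        self.register_buffer("rgb_weights", weights)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # Weighted sum over the channel axis, keeping it: [N, 3, H, W] -> [N, 1, H, W].
        gray = (x * self.rgb_weights).sum(dim=1, keepdim=True)
        return self.gray_model(gray)


# Usage with a stand-in single-channel layer (not the real ResNet-18).
stand_in = nn.Conv2d(1, 4, kernel_size=3, padding=1)
wrapped = RgbToGrayWrapper(stand_in).eval()
out = wrapped(torch.zeros(1, 3, 224, 224))
print(out.shape)  # torch.Size([1, 4, 224, 224])
```

To export, one would pass `RgbToGrayWrapper(model_r)` and a `torch.zeros(1, 3, 224, 224)` dummy input to `torch.onnx.export` in place of `model_r` and `DUMMY_INPUT`. The trade-off is that the ONNX model then declares a [1,3,224,224] input, so every other pipeline feeding it must also supply 3 channels.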

=======================================================

ERROR LOG

```text
[setupvars.sh] OpenVINO environment initialized
2022-12-12 10:01:49.881943: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: :/opt/intel/openvino_2022.1.0.643/extras/opencv/python/cv2/../../bin:/opt/intel/openvino/extras/opencv/lib:/opt/intel/openvino/tools/compile_tool:/opt/intel/openvino/runtime/3rdparty/tbb/lib::/opt/intel/openvino/runtime/3rdparty/hddl/lib:/opt/intel/openvino/runtime/lib/intel64::/opt/intel/openvino_2022.1.0.643/extras/opencv/python/cv2/../../bin:/opt/intel/openvino/extras/opencv/lib:/opt/intel/openvino/runtime/lib/intel64:/opt/intel/openvino/tools/compile_tool:/opt/intel/openvino/runtime/3rdparty/tbb/lib:/opt/intel/openvino/runtime/3rdparty/hddl/lib
2022-12-12 10:01:49.881987: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.
/usr/local/lib/python3.8/dist-packages/defusedxml/__init__.py:30: DeprecationWarning: defusedxml.cElementTree is deprecated, import from defusedxml.ElementTree instead.
  from . import cElementTree
[NEW STAGE] 1;1
[RUN COMMAND] "benchmark_app" -m "/home/workbench/.workbench/models/4/original/resnet18_gcm.xml" -i "/home/workbench/.workbench/datasets/5" -d "CPU" -b "1" -nstreams "1" -t "20" --report_type "no_counters" --report_folder "/opt/intel/openvino_2022/tools/workbench/wb/data/profiling_artifacts/6/job_artifacts/1_1" -pc --exec_graph_path "/opt/intel/openvino_2022/tools/workbench/wb/data/profiling_artifacts/6/job_artifacts/1_1/exec_graph.xml"
[Step 1/11] Parsing and validating input arguments
[Step 2/11] Loading OpenVINO
[ WARNING ] PerformanceMode was not explicitly specified in command line. Device CPU performance hint will be set to THROUGHPUT.
[ INFO ] OpenVINO:
API version............. 2022.1.0-7019-cdb9bec7210-releases/2022/1
[ INFO ] Device info
CPU
openvino_intel_cpu_plugin version 2022.1
Build................... 2022.1.0-7019-cdb9bec7210-releases/2022/1
[Step 3/11] Setting device configuration
[Step 4/11] Reading network files
[ INFO ] Read model took 14.27 ms
[Step 5/11] Resizing network to match image sizes and given batch
[ INFO ] Network batch size: 1
[Step 6/11] Configuring input of the model
[ INFO ] Model input 'input.1' precision f32, dimensions ([N,C,H,W]): 1 1 224 224
[ INFO ] Model output '191' precision f32, dimensions ([...]): 1 4
[Step 7/11] Loading the model to the device
[ INFO ] Compile model took 130.03 ms
[Step 8/11] Querying optimal runtime parameters
[ INFO ] DEVICE: CPU
[ INFO ] AVAILABLE_DEVICES , ['']
[ INFO ] RANGE_FOR_ASYNC_INFER_REQUESTS , (1, 1, 1)
[ INFO ] RANGE_FOR_STREAMS , (1, 4)
[ INFO ] FULL_DEVICE_NAME , 11th Gen Intel(R) Core(TM) i7-1185G7 @ 3.00GHz
[ INFO ] OPTIMIZATION_CAPABILITIES , ['WINOGRAD', 'FP32', 'FP16', 'INT8', 'BIN', 'EXPORT_IMPORT']
[ INFO ] CACHE_DIR ,
[ INFO ] NUM_STREAMS , 1
[ INFO ] INFERENCE_NUM_THREADS , 0
[ INFO ] PERF_COUNT , True
[ INFO ] PERFORMANCE_HINT_NUM_REQUESTS , 0
[Step 9/11] Creating infer requests and preparing input data
[ INFO ] Create 1 infer requests took 0.07 ms
[ INFO ] Prepare image /home/workbench/.workbench/datasets/5/0.jpg
[ WARNING ] Image is resized from ((900, 1600)) to ((224, 224))
[ ERROR ] Image shape (3, 224, 224) is not compatible with input shape [1, 1, 224, 224]! Make sure -i parameter is valid.
Traceback (most recent call last):
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/benchmark/utils/inputs_filling.py", line 186, in get_image_tensors
    images[b] = image
ValueError: could not broadcast input array from shape (3,224,224) into shape (1,224,224)

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/benchmark/main.py", line 375, in run
    data_queue = get_input_data(paths_to_input, app_inputs_info)
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/benchmark/utils/inputs_filling.py", line 113, in get_input_data
    data[port] = get_image_tensors(image_mapping[info.name][:images_to_be_used_map[info.name]], info, batch_sizes_map[info.name])
  File "/usr/local/lib/python3.8/dist-packages/openvino/tools/benchmark/utils/inputs_filling.py", line 188, in get_image_tensors
    raise Exception(f"Image shape {image.shape} is not compatible with input shape {shape}! "
Exception: Image shape (3, 224, 224) is not compatible with input shape [1, 1, 224, 224]! Make sure -i parameter is valid.
[ INFO ] Statistics report is stored to /opt/intel/openvino_2022/tools/workbench/wb/data/profiling_artifacts/6/job_artifacts/1_1/benchmark_report.csv
```


Hi Aznie,

Please find my workaround below. I found that the current Workbench has an issue automatically converting the input to 1 channel.

Older Version
-------------

I tested with this version of Workbench and did not face any issue:

`docker run -p 0.0.0.0:5665:5665 --name workbench -it openvino/workbench:2021.1`

I was able to profile with an RGB dataset and convert the model to INT8.

Latest Version
-------------

However, on the latest version, creating a project fails when RGB is specified as the Original Color Space. Since the model was not trained on RGB, that is expected, but how do we tell OpenVINO to load images with 1 channel? Even when I provide black-and-white images, they are loaded with 3 channels.



Hi Bansi,

Thank you for reporting this issue.

I'm sorry you ran into this ONNX conversion issue in the latest DL Workbench. I reproduced the same issue with an ONNX model and have escalated it to the development team for further investigation. Please refer to the Release Notes for the latest bug fixes.

Regards,

Aznie


Hi Bansi,

This thread will no longer be monitored since we have provided the requested information. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
