
Greetings -

We're using OpenVINO for inference in a Windows/Intel environment where integrated GPUs are sometimes available and sometimes not. Our code has been randomly crashing on Windows/Intel machines without integrated GPUs, while working flawlessly on those with integrated GPUs.

The "crash" is actually an access violation, and according to WinDBG it occurs within MKLDNNPlugin(d).dll (on both release and debug builds). The problem, in addition to the crash itself, is that I get very little in the way of a useful stack trace because there is no PDB file for this module. In fact, about the only information I get from the crash is this output from WinDBG:

```
SYMBOL_NAME: MKLDNNPlugind+9401
MODULE_NAME: MKLDNNPlugind
IMAGE_NAME: MKLDNNPlugind.dll
STACK_COMMAND: ** Pseudo Context ** Pseudo ** Value: 18b5466b4a0 ** ; kb
FAILURE_BUCKET_ID: INVALID_POINTER_READ_c0000005_MKLDNNPlugind.dll!Unknown
OS_VERSION: 10.0.21296.1000
BUILDLAB_STR: rs_prerelease
OSPLATFORM_TYPE: x64
OSNAME: Windows 10
FAILURE_ID_HASH: {eb739792-a67d-fc42-0619-38b6284d9e66}
```

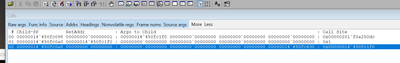

And this stack trace:

It's consistent across machines without integrated GPUs.

For what it's worth, the model we're running is a ResNet-50 from fastai (PyTorch), converted to ONNX and then run through the OpenVINO Model Optimizer.

My ultimate question is: how do I begin to debug this? I have no stack trace, the video frame on which the crash occurs appears random, and I'm at a loss as to identifying the ultimate cause. The only other "quirky" thing I can mention is that we're using C++/CLI (the .NET version of C++) to call into OpenVINO so we can access the code from C#. We're loading the model like this:

```cpp
void OpenVINOAccessor::LoadNetwork(int width, int height)
{
    marshal_context^ context = gcnew marshal_context();
    std::string networkPath(context->marshal_as<const char*>(_networkPath));
    std::string networkBinPath = networkPath.substr(0, networkPath.find_last_of('.')) + ".bin";

    // instantiate everything onto a separate data structure. MC++ doesn't support
    // or allow non-managed, non-pointer structures to be stored as private members due to
    // GC compaction rules. This skirts that.
    _openVino = new OpenVINOStructures();

    // instantiate "core": https://docs.openvinotoolkit.org/latest/classInferenceEngine_1_1Core.html
    _openVino->Core = new Core();
    _openVino->Network = _openVino->Core->ReadNetwork(networkPath, networkBinPath);

    // we are using a batch size of one for inference.
    _openVino->Network.setBatchSize(1);

    // get an input info structure to set properties on the input layer. getInputsInfo
    // also returns name information in case we have multiple inputs, allowing us to specify
    // the input later. This is known as "preparing the input blobs".
    // https://docs.openvinotoolkit.org/latest/classInferenceEngine_1_1ICNNNetwork.html#ac0d904dcfd039972e04923f1e0befbdd
    InputInfo::Ptr input_info = _openVino->Network.getInputsInfo().begin()->second;

    // dynamically set the input size
    SizeVector size{ (size_t) 1, (size_t) 1, (size_t) height, (size_t) width };
    TensorDesc tensor(Precision::FP32, size, InferenceEngine::Layout::NCHW);
    DataPtr dataPtr = std::make_shared<InferenceEngine::Data>("input", tensor);
    input_info->setInputData(dataPtr);
    _openVino->InputName = _openVino->Network.getInputsInfo().begin()->first;

    // now, prepare the "output blobs", i.e. the data format of what the network produces
    auto outputsInfo = _openVino->Network.getOutputsInfo();
    for (auto it = outputsInfo.begin(); it != outputsInfo.end(); ++it)
    {
        DataPtr output_info = it->second;
        // we are expecting float outputs
        output_info->setPrecision(Precision::FP32);
    }

    // load the network, specifying a GPU as the first option.
    // https://docs.openvinotoolkit.org/latest/classInferenceEngine_1_1Core.html#a7ac4bd8bc351fae833aaa0db84fab738
    try
    {
        _openVino->ExecutableNetwork = _openVino->Core->LoadNetwork(_openVino->Network, "GPU");
    }
    catch (const InferenceEngine::details::InferenceEngineException&)
    {
        // no usable GPU: fall back to the CPU (MKLDNN) plugin
        _openVino->ExecutableNetwork = _openVino->Core->LoadNetwork(_openVino->Network, "CPU");
    }

    // clean up the context used for string manipulation
    delete context;
}
```

And inference is done like this (please note that we've commented out the code that returns the output values and "spoofed" it with hard-coded values, since we identified that this wasn't part of the problem):

```cpp
System::Collections::Generic::Dictionary<System::String^, array<float>^>^ OpenVINOAccessor::Infer(array<unsigned short>^ frameData)
{
    System::Collections::Generic::Dictionary<System::String^, array<float>^>^ toReturn =
        gcnew System::Collections::Generic::Dictionary<System::String^, array<float>^>();

    // create an infer request
    // https://docs.openvinotoolkit.org/latest/classInferenceEngine_1_1InferRequest.html
    InferRequest inferRequest = _openVino->ExecutableNetwork.CreateInferRequest();

    // copy the managed data to non-managed memory for execution.
    float* frameDataPtr = new float[frameData->Length];
    for (int c = 0; c < frameData->Length; c++)
    {
        frameDataPtr[c] = (float)(frameData[c]);
    }

    // prepare the input blob. low-impact operation.
    // inspiration comes from http://docs.ros.org/en/kinetic/api/librealsense2/html/openvino-helpers_8h_source.html
    // and more specifically wrapMat2Blob
    auto inputShapes = _openVino->Network.getInputShapes();
    auto inputShape = inputShapes[_openVino->InputName];
    InferenceEngine::TensorDesc tDesc(InferenceEngine::Precision::FP32, inputShape, InferenceEngine::Layout::NCHW);

    // https://docs.openvinotoolkit.org/latest/ie__blob_8h.html#a2173cee0e7f2522ffbc55c97d6e05ac5
    // what this returns is a "shared pointer", a native C++ construct: https://en.cppreference.com/w/cpp/memory/shared_ptr
    // no memory is actually allocated; the blob just references frameDataPtr.
    // the blob does NOT take ownership of that buffer: it must stay valid until
    // inference completes, and it is freed by the delete[] further down.
    InferenceEngine::Blob::Ptr sharedPointer = InferenceEngine::make_shared_blob<float>(tDesc, frameDataPtr);
    sharedPointer->allocate();

    // set the input blob for the infer request
    // https://docs.openvinotoolkit.org/latest/classInferenceEngine_1_1InferRequest.html#a27fb179e3bae652d76076965fd2a5653
    inferRequest.SetBlob(_openVino->InputName, sharedPointer);

    // synchronously execute an inference
    inferRequest.Infer();

    //auto outputsInfo = _openVino->Network.getOutputsInfo();
    //std::map<std::string, DataPtr>::iterator it;
    //for (it = outputsInfo.begin(); it != outputsInfo.end(); it++)
    //{
    //    Blob::Ptr output = inferRequest.GetBlob(it->first);
    //    DataPtr output_info = it->second;
    //    auto const memLocker = output->cbuffer();
    //    size_t element_size = output->element_size();
    //    if (element_size != 4)
    //    {
    //        throw gcnew System::InvalidOperationException("expected 4-byte (FP32) output elements");
    //    }
    //    size_t num_outputs = output->size();
    //    System::String^ s = gcnew String(it->first.c_str());
    //
    //    auto tensor_array = gcnew array<float>(num_outputs);
    //    const float* output_buffer = memLocker.as<const float*>();
    //    for (int i = 0; i < num_outputs; i++)
    //    {
    //        tensor_array[i] = output_buffer[i];
    //    }
    //    toReturn[s] = tensor_array;
    //}

    delete[] frameDataPtr;

    array<float>^ a1 = gcnew array<float>(2);
    a1[0] = 1.0f;
    a1[1] = -3.25f;
    toReturn->Add("IsOtherPersonInRoom", a1);

    array<float>^ a2 = gcnew array<float>(2);
    a2[0] = -1.06f;
    a2[1] = -0.0197f;
    toReturn->Add("IsOutOfChair", a2);

    return toReturn;
}
```

The last thing to mention is that the crash occurs within the inferRequest.Infer() call.
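Two details of the input preparation in `Infer()` seem worth ruling out first. `make_shared_blob<float>(tDesc, frameDataPtr)` is called without a size, so the blob assumes the buffer covers every element described by `tDesc`. If the shape the network actually expects (an ImageNet-style ResNet-50 typically takes 3 channels) holds more elements than the 1×1×height×width frame that was copied, the CPU plugin could read past the end of the buffer; that would be consistent with an invalid pointer read in MKLDNNPlugin, but it is an unconfirmed hypothesis. Likewise, `std::map::operator[]` inserts an empty shape when a key is missing, so if `getInputShapes()` keys its entries by a name other than `InputName`, `inputShape` would silently be empty. Separately, the blob does not own `frameDataPtr`, so an exception thrown by `Infer()` leaks it. A minimal sketch follows, assuming the managed `array<unsigned short>^` has already been copied into a `std::vector<unsigned short>`; the helper names `ElementCount`, `CheckInputBuffer`, and `ToFloatBuffer` are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <stdexcept>
#include <string>
#include <vector>

// Number of elements described by a tensor shape such as {N, C, H, W}.
std::size_t ElementCount(const std::vector<std::size_t>& dims)
{
    return std::accumulate(dims.begin(), dims.end(), std::size_t{1},
                           std::multiplies<std::size_t>());
}

// Hypothetical guard: throws instead of letting the plugin read past the end
// of a buffer whose size does not match the tensor it is meant to hold.
void CheckInputBuffer(const std::vector<std::size_t>& dims, std::size_t bufferElements)
{
    // An empty shape can mean the lookup by input name found nothing.
    if (dims.empty())
    {
        throw std::invalid_argument("input shape is empty; check the input name");
    }
    const std::size_t expected = ElementCount(dims);
    if (expected != bufferElements)
    {
        throw std::invalid_argument("input buffer has " + std::to_string(bufferElements) +
                                    " elements, but the tensor expects " +
                                    std::to_string(expected));
    }
}

// Hypothetical helper: converts 16-bit frame samples to FP32. The vector owns
// its memory, so it is released even if inference throws; it must outlive any
// blob that wraps its data().
std::vector<float> ToFloatBuffer(const std::vector<unsigned short>& samples)
{
    std::vector<float> out;
    out.reserve(samples.size());
    for (unsigned short s : samples)
    {
        out.push_back(static_cast<float>(s));
    }
    return out;
}
```

Inside `Infer()`, one could call `CheckInputBuffer(inputShape, buffer.size())` and then pass `buffer.data()` and `buffer.size()` to the three-argument overload `InferenceEngine::make_shared_blob<float>(tDesc, ptr, size)`, keeping `buffer` alive until `Infer()` returns.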

Thanks for any advice.

---

Greetings,

We can see that you used a try/catch block when loading the network, so that the CPU serves as a fallback device whenever loading on the GPU fails.

That's quite a non-standard approach, especially considering that you only see this crash on machines where no GPU is present.

We suggest implementing this differently by separating the code paths as follows:

For GPU+CPU machines, we recommend using the HETERO plugin configured with the GPU as the primary device and the CPU as the fallback:

```cpp
InferenceEngine::Core core;
auto network = core.ReadNetwork("sample.xml");
auto executable_network = core.LoadNetwork(network, "HETERO:GPU,CPU");
```

For CPU-only machines, it would be:

```cpp
InferenceEngine::Core core;
auto network = core.ReadNetwork("sample.xml");
auto executable_network = core.LoadNetwork(network, "CPU");
```

Your code can then switch between these two paths based on whether a GPU device is present.
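The switch could be driven by `InferenceEngine::Core::GetAvailableDevices()`, which returns the names of the devices the installed plugins can see (for example `"CPU"` and `"GPU"`; with several GPUs the names may carry a suffix such as `"GPU.0"`). A minimal sketch, assuming only those names are needed to decide; `ChooseDevice` is a hypothetical helper that inspects the list, so it can be tested without OpenVINO installed.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical helper: chooses the device string to pass to Core::LoadNetwork
// from the list of device names reported as available.
std::string ChooseDevice(const std::vector<std::string>& availableDevices)
{
    for (const std::string& device : availableDevices)
    {
        // matches "GPU" as well as indexed names such as "GPU.0"
        if (device.rfind("GPU", 0) == 0)
        {
            // GPU present: GPU as the primary device, CPU as the fallback
            return "HETERO:GPU,CPU";
        }
    }
    // no GPU reported: use the CPU plugin only
    return "CPU";
}
```

In the accessor this might be used as `LoadNetwork(_openVino->Network, ChooseDevice(_openVino->Core->GetAvailableDevices()))`, which replaces the try/catch fallback with an explicit, up-front choice of device.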

Sincerely,

Iffa

---

Greetings,

Intel will no longer monitor this thread since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Sincerely,

Iffa
