Dear sirs,

I used the Accuracy Checker to evaluate the yolo-v3-tf INT8 model produced by POT, and its coco_precision is even higher than that of the yolo-v3-tf FP32 model. This is not what I expected.

My test environment is as follows:

- CPU: Intel Core i5-1145GRE

- OS: Ubuntu 20.04 LTS (Linux, 64-bit)

- OpenVINO version: 2021.3.394

- Python version: 3.8.5

- yolo-v3-tf: https://docs.openvinotoolkit.org/latest/omz_models_model_yolo_v3_tf.html

- IR model download: https://drive.google.com/file/d/1MiB5W-khMNRCSw6sB5tM5MvvUQWyLiJe/view

- COCO dataset annotation JSON file: https://drive.google.com/file/d/1CpEwYdtC0rdYabpiUjWT4rYvmASA5v9e/view?usp=sharing

Alternatively, instances_val2017.json is included in:

http://images.cocodataset.org/annotations/annotations_trainval2017.zip

- COCO dataset images (JPG): http://images.cocodataset.org/zips/val2017.zip

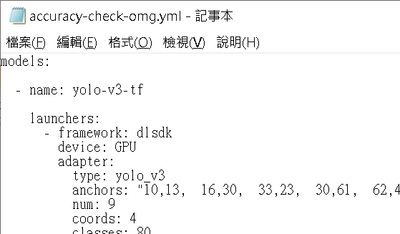

- YML config file: https://drive.google.com/file/d/1jpDutCxPP6htjIcaH2RoGJhIsAhOQhy4/view?usp=sharing

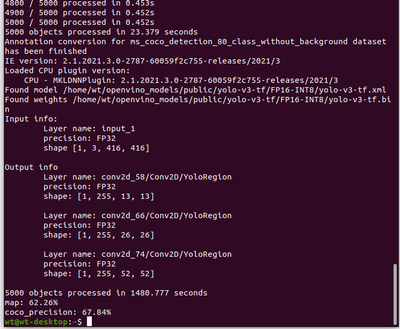

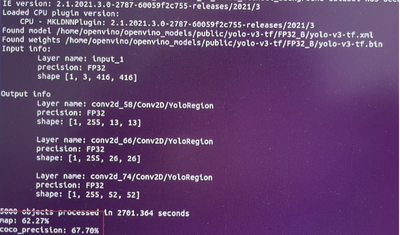

Running the Accuracy Checker on the yolo-v3-tf FP32 IR:

jackowt@jackowt:/opt/intel/openvino_2021/deployment_tools/open_model_zoo/tools/accuracy_checker$ accuracy_check -c accuracy-check-omg.yml -m /home/openvino/openvino_models/public/yolo-v3-tf/FP32 -s /home/openvino/coco_dataset

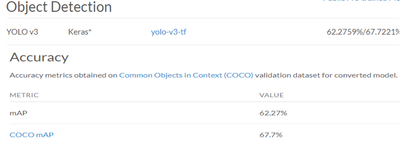

The result is the same as the published yolo-v3-tf accuracy.

Question 1:

Accuracy check for INT8:

coco_precision is 67.84%, which is higher than the FP32 result of 67.7%. This doesn't make sense to me.

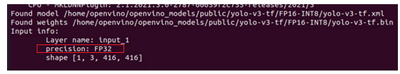

Question 2:

When running the accuracy check on the INT8 model, why does the input info show FP32 precision?

Question 3:

Why doesn't the GPU work?

Please help me to check.

Thanks for your support.

BR

Jacko Chiu

Hi Jacko Chiu,

Thank you for reaching out and sharing the files with us. We are currently investigating these issues and will get back to you.

Regards,

Syamimi

Hi Jacko Chiu,

Thank you for waiting.

This is the answer to your first question:

The accuracy value of the yolo-v3-tf model is validated using the mAP (mean average precision) metric, not coco_precision.

The mAP results of your replication, shown below, are consistent with our documentation: 8-bit computations (referred to as INT8) offer better performance than inference in higher precision (for example, FP32) because they allow more data to be loaded into a single processor instruction. Usually, the cost of this significant speedup is reduced accuracy.

FP32 mAP: 62.95%

INT8 mAP: 62.8%

Therefore, this reduction is expected, and you should not compare the models using coco_precision.
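For readers comparing the two metrics, it may help to see how a single AP number is obtained from a precision-recall curve. Accuracy Checker's map metric exposes an integral parameter with "11point" and "max" options; the sketch below implements both in plain Python on a toy curve. It assumes that "max" means all-point interpolation over the precision envelope (the Pascal VOC 2010+ convention) and is an illustration, not Accuracy Checker's own code; the function name is hypothetical.

```python
def average_precision(recall, precision, integral="max"):
    """Area under a precision-recall curve whose recall values are sorted ascending.

    integral="11point": Pascal VOC 2007 style, the mean of the maximum precision
    at recall >= 0.0, 0.1, ..., 1.0.
    integral="max": all-point interpolation over the monotone precision envelope
    (assumed here to correspond to the "max" option).
    """
    if len(recall) != len(precision):
        raise ValueError("recall and precision must have the same length")

    if integral == "11point":
        total = 0.0
        for i in range(11):
            point = i / 10
            candidates = [p for r, p in zip(recall, precision) if r >= point]
            total += max(candidates) if candidates else 0.0
        return total / 11

    if integral == "max":
        # Add sentinel points at recall 0 and 1.
        rec = [0.0] + list(recall) + [1.0]
        prec = [0.0] + list(precision) + [0.0]
        # Make precision non-increasing when read from right to left.
        for i in range(len(prec) - 2, -1, -1):
            prec[i] = max(prec[i], prec[i + 1])
        # Sum rectangle areas wherever recall changes.
        return sum(
            (rec[i + 1] - rec[i]) * prec[i + 1]
            for i in range(len(rec) - 1)
            if rec[i + 1] != rec[i]
        )

    raise ValueError(f"unknown integral type: {integral}")


if __name__ == "__main__":
    # Toy curve for illustration only, not data from this thread.
    recall = [0.5, 1.0]
    precision = [1.0, 0.5]
    print("11point:", average_precision(recall, precision, "11point"))
    print("max:", average_precision(recall, precision, "max"))
```

On this toy curve the two integration rules already give different values, which is one reason numbers produced by different AP definitions should not be compared directly.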

Second answer:

I will check this issue with the developer team and get back to you.

Third answer:

First, make sure that your environment supports the GPU plugin. Then try running the Accuracy Checker with the following command:

accuracy_check -c accuracy-check-omg.yml -m /home/openvino/openvino_models/public/yolo-v3-tf/FP32 -s /home/openvino/coco_dataset -td GPU
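If you want to script the CPU and GPU runs, a minimal sketch like the one below builds the accuracy_check argument list for each device. It only uses the -c, -m, -s, and -td options that appear in this thread, assumes the config, model, and dataset paths used above, and the helper name build_accuracy_check_cmd is hypothetical. Passing an argument list (rather than a pasted string) also avoids typographic dashes such as "–c", which a command-line parser would not recognize as options.

```python
import shutil
import subprocess


def build_accuracy_check_cmd(config, model_dir, source_dir, device="CPU"):
    """Return an argv list for accuracy_check (hypothetical helper).

    Uses plain ASCII hyphens for every option.
    """
    return [
        "accuracy_check",
        "-c", config,
        "-m", model_dir,
        "-s", source_dir,
        "-td", device,
    ]


if __name__ == "__main__":
    for device in ("CPU", "GPU"):
        cmd = build_accuracy_check_cmd(
            "accuracy-check-omg.yml",
            "/home/openvino/openvino_models/public/yolo-v3-tf/FP32",
            "/home/openvino/coco_dataset",
            device,
        )
        print(" ".join(cmd))
        # Only launch the tool if the accuracy_check entry point is on PATH.
        if shutil.which("accuracy_check"):
            subprocess.run(cmd, check=False)
```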

Regards,

Syamimi

Hi Syamimi,

Thanks for your response.

I have a few follow-up questions about your answer to Question 1.

Here is your response:

"The accuracy value of the yolo-v3-tf model is validated using the mAP (mean average precision) metric, not coco_precision."

Please also refer to this link: https://docs.openvinotoolkit.org/latest/openvino_docs_performance_int8_vs_fp32.html

In the table on that page, the metric name used for yolo_v3-tf is COCO mAP.

In the same table, I have seen that one of the metric names is mAP.

Question 1:

In your comment, the yolo-v3-tf model is validated using mAP. This is different from the COCO mAP listed for yolo_v3-tf in the linked table.

Question 2:

Are coco_precision and COCO mAP the same? Or are mAP and COCO mAP the same?

Question 3:

You say "yolo-v3-tf model is validated using the mAP (mean average precision) metric". Is this stated in any document? Why can it only be mAP and not coco_precision?

Thanks for your help.

BR

Jacko


Hi Jacko Chiu,

Thank you for waiting. Regarding question 2: this is not a bug. It is the default behaviour of the Inference Engine Python API to use FP32 as the input precision for data.

This was done for user convenience, because not all data types are platform-agnostic (e.g. FP16). The cast from FP32 to the precision actually used by the model happens inside the runtime.

Without this feature, users might need to manually pre-process data into the specific required precision, which might not be trivial in some cases.
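As an analogy for why FP32 is a convenient input type: Python has no native FP16 scalar, so producing half-precision data yourself takes extra work. The sketch below emulates an FP32-to-FP16 cast with the standard struct module's "e" (IEEE 754 half-precision) format. It only illustrates the kind of rounding such a conversion involves; it is not the Inference Engine's internal code, and the helper name is hypothetical.

```python
import struct


def cast_to_fp16(values):
    """Round each Python float to the nearest IEEE 754 half-precision value.

    Packing with the "e" format performs the rounding; unpacking returns the
    rounded values as ordinary Python floats.
    """
    packed = struct.pack(f"<{len(values)}e", *values)
    return list(struct.unpack(f"<{len(values)}e", packed))


if __name__ == "__main__":
    pixels = [0.1, 0.5, 123.456]
    # Values that are not exactly representable in FP16 come back slightly changed.
    print(cast_to_fp16(pixels))
```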

Regards,

Syamimi

Hi Jacko Chiu,

Question 1:

In your comment, the yolo-v3-tf model is validated using mAP. This is different from the COCO mAP listed for yolo_v3-tf in the linked table.

The link that you referred to [INT8 vs FP32 Comparison on Select Networks and Platforms - OpenVINO™ Toolkit (openvinotoolkit.org)] shows the absolute accuracy drop, calculated as the difference in accuracy between the FP32 representation of a model and its INT8 representation.

It is meant only for comparing model accuracy values across several platform configurations.

In this table, COCO mAP was chosen as the metric for comparing model accuracy across these configurations.
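As a small worked example of that definition, the sketch below applies it to the numbers reported in this thread (62.95% vs. 62.8% mAP, 67.7% vs. 67.84% coco_precision). The function name is hypothetical; a negative drop simply means the INT8 model scored higher on this validation set.

```python
def absolute_accuracy_drop(fp32_value, int8_value):
    """FP32 accuracy minus INT8 accuracy, in percentage points.

    Rounded to 4 decimals to hide floating-point noise from the subtraction.
    """
    return round(fp32_value - int8_value, 4)


if __name__ == "__main__":
    # Values quoted earlier in this thread (yolo-v3-tf, COCO val2017).
    results = {
        "map": (62.95, 62.8),
        "coco_precision": (67.7, 67.84),
    }
    for metric, (fp32, int8) in results.items():
        print(f"{metric}: drop = {absolute_accuracy_drop(fp32, int8):+.2f} pp")
```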

For OpenVINO model accuracy validation, all models are validated and tested using their respective metrics that can be found here: Trained Models - OpenVINO™ Toolkit (openvinotoolkit.org)

The accuracy metrics obtained on the COCO* validation dataset for the converted yolo-v3-tf model are shown here: yolo-v3-tf - OpenVINO™ Toolkit (openvinotoolkit.org)

Question 2:

Are coco_precision and COCO mAP the same? Or are mAP and COCO mAP the same?

In the COCO context, there is no difference between AP and mAP, as mentioned here: COCO - Common Objects in Context (cocodataset.org)

In the OpenVINO context, map and coco_precision are different metrics, as described in the link: https://docs.openvinotoolkit.org/latest/omz_tools_accuracy_checker_metrics.html
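In COCO's terminology, "AP" is already averaged over all object categories, which is why COCO treats AP and mAP as the same quantity. A minimal sketch of that category averaging, using made-up per-class AP values purely for illustration (the helper name is hypothetical):

```python
from statistics import mean


def mean_over_categories(per_class_ap):
    """Average per-category AP values; COCO reports this mean simply as "AP"."""
    if not per_class_ap:
        raise ValueError("need at least one category")
    return mean(per_class_ap.values())


if __name__ == "__main__":
    # Hypothetical per-class AP values, not results from this thread.
    per_class_ap = {"person": 0.70, "car": 0.60, "dog": 0.50}
    print(f"AP (= mAP in COCO terms): {mean_over_categories(per_class_ap):.3f}")
```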

- coco_precision - MS COCO Average Precision metric for keypoints recognition and object detection tasks. Supported representations: PoseEstimationAnnotation, PoseEstimationPrediction, DetectionAnnotation, DetectionPrediction.

- max_detections - max number of predicted results per image. If you have more predictions, the results with minimal confidence will be ignored.

- threshold - intersection over union threshold. You can specify one value or comma separated range of values. This parameter supports precomputed values for standard COCO thresholds (.5, .75, .5:.05:.95).

- map - mean average precision. Supported representations: DetectionAnnotation, DetectionPrediction.

- overlap_threshold - minimal value for intersection over union that allows to make decision that prediction bounding box is true positive.

- overlap_method - method for calculating bbox overlap. You can choose between intersection over union (iou), defined as the area of intersection divided by the union of the annotation and prediction box areas, and intersection over area (ioa), defined as the area of intersection divided by the area of the prediction box.

- include_boundaries - allows including boundaries in the overlap calculation. If it is True, the width and height of a box are calculated as max - min + 1.

- ignore_difficult - allows ignoring difficult annotation boxes in metric calculation. In this case, difficult boxes are the annotations filtered out at the postprocessing stage.

- distinct_conf - select only values for distinct confidences.

- allow_multiple_matches_per_ignored - allows multiple matches per ignored.

- label_map - the field in annotation metadata, which contains dataset label map (Optional, should be provided if different from default).

- integral - integral type for average precision calculation. Pascal VOC 11point and max approaches are available.
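The overlap_method and include_boundaries options described above can be illustrated with a short sketch. It follows the definitions in the list (IoU against the union of both boxes, IoA against the prediction box, +1 on width and height when boundaries are included) and assumes boxes given as (x_min, y_min, x_max, y_max). The helper name is hypothetical and this is not Accuracy Checker's own implementation.

```python
def box_overlap(annotation, prediction, method="iou", include_boundaries=False):
    """Overlap between two boxes given as (x_min, y_min, x_max, y_max).

    method="iou": intersection / union of both box areas.
    method="ioa": intersection / area of the prediction box.
    include_boundaries=True computes widths and heights as max - min + 1.
    """
    offset = 1 if include_boundaries else 0

    def area(box):
        return max(0, box[2] - box[0] + offset) * max(0, box[3] - box[1] + offset)

    inter_w = min(annotation[2], prediction[2]) - max(annotation[0], prediction[0]) + offset
    inter_h = min(annotation[3], prediction[3]) - max(annotation[1], prediction[1]) + offset
    intersection = max(0, inter_w) * max(0, inter_h)

    if method == "iou":
        union = area(annotation) + area(prediction) - intersection
        return intersection / union if union > 0 else 0.0
    if method == "ioa":
        pred_area = area(prediction)
        return intersection / pred_area if pred_area > 0 else 0.0
    raise ValueError(f"unknown overlap method: {method}")


if __name__ == "__main__":
    gt = (0, 0, 10, 10)
    pred = (5, 0, 15, 10)  # shifted half a box to the right
    print("iou:", box_overlap(gt, pred, "iou"))
    print("ioa:", box_overlap(gt, pred, "ioa"))
    print("iou with boundaries:", box_overlap(gt, pred, "iou", include_boundaries=True))
```

Under this sketch, a prediction would be counted as a true positive when the returned overlap is at least overlap_threshold.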

Question 3:

You say "yolo-v3-tf model is validated using the mAP (mean average precision) metric". Is this stated in any document? Why can it only be mAP and not coco_precision?

As mentioned in this link [yolo-v3-tf - OpenVINO™ Toolkit (openvinotoolkit.org)], yolo-v3-tf accuracy is validated using mAP and COCO mAP.

Examples of other object detection models that are validated specifically on coco_precision are ssd_mobilenet_v1_coco and faster_rcnn_inception_v2_coco.

Additional references:

https://towardsdatascience.com/map-mean-average-precision-might-confuse-you-5956f1bfa9e2

https://blog.roboflow.com/mean-average-precision/

Hope this clarifies.

Regards,

Syamimi

Hi Syamimi,

Thanks for your response.

I have a further question about coco_precision and COCO mAP.

First, you are right. In the COCO context, there is no difference between COCO AP and COCO mAP.

As mentioned in this link: [https://docs.openvinotoolkit.org/latest/omz_tools_accuracy_checker_metrics.html]

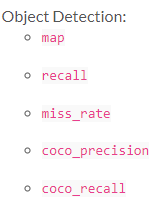

For object detection, OpenVINO supports only these metrics.

But in this link: [https://docs.openvinotoolkit.org/latest/omz_models_model_yolo_v3_tf.html]

you said "yolo-v3-tf accuracy is validated using mAP & COCO mAP."

Question 1:

COCO mAP is not in the list of supported metrics, so is yolo-v3-tf accuracy validated only using mAP?

Question 2:

Are COCO mAP and COCO precision the same in OpenVINO?

Thanks for your help!!

BR

Jacko

Hi Jacko Chiu,

Question 1:

COCO mAP is not in the list of supported metrics, so is yolo-v3-tf accuracy validated only using mAP?

All the supported metrics listed at https://docs.openvinotoolkit.org/latest/omz_tools_accuracy_checker_metrics.html are defined for comparing predicted data with annotated data and measuring quality with the OpenVINO accuracy_checker tool. You may use any available metric that matches your dataset representation, and optionally set the reference field for a metric.

As for the model itself, the converted yolo-v3-tf model was tested and validated using both the mAP and COCO mAP metrics before it was added to our OMZ repository.

Therefore, if you are referring to model validation, yolo-v3-tf is validated using these metrics, as per our documentation: yolo-v3-tf - OpenVINO™ Toolkit (openvinotoolkit.org)

Question 2:

Are COCO mAP and COCO precision the same in OpenVINO?

The coco_precision metric in accuracy_checker uses threshold values similar to those defined for the Common Objects in Context (COCO) validation dataset.

- coco_precision - MS COCO Average Precision metric for key points recognition and object detection tasks. Metric is calculated as a percentage. Direction of metric's growth is higher-better. Supported representations: PoseEstimationAnnotation, PoseEstimationPrediction, DetectionAnnotation, DetectionPrediction.

- max_detections - max number of predicted results per image. If you have more predictions, the results with minimal confidence will be ignored.

- threshold - intersection over union threshold. You can specify one value or comma separated range of values. This parameter supports precomputed values for standard COCO thresholds (.5, .75, .5:.05:.95).
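To make the threshold parameter concrete: the standard COCO range .5:.05:.95 stands for ten IoU thresholds (0.50, 0.55, ..., 0.95), and COCO's primary AP is the average of the AP computed at each of them. The parser below is a hypothetical helper showing that expansion; it assumes "start:step:end" is an inclusive range, and the exact string syntax Accuracy Checker accepts should be checked against its documentation.

```python
def parse_coco_thresholds(spec):
    """Expand a spec such as ".5", ".5, .75" or ".5:.05:.95" into IoU values.

    "start:step:end" is treated as an inclusive range (hypothetical syntax).
    """
    values = []
    for part in str(spec).split(","):
        part = part.strip()
        if ":" in part:
            start, step, end = (float(x) for x in part.split(":"))
            # Round the count and each value to avoid floating-point drift.
            count = int(round((end - start) / step)) + 1
            values.extend(round(start + i * step, 4) for i in range(count))
        else:
            values.append(float(part))
    return values


if __name__ == "__main__":
    print(parse_coco_thresholds(".5:.05:.95"))
    print(parse_coco_thresholds(".75"))
```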

Could you confirm whether you want to use the coco_precision value as your main reference rather than mAP?

If so, we can re-engage our development team to elaborate further on your initial Question 1: the INT8 coco_precision of 67.84% being higher than the FP32 value of 67.7%.

Regards,

Syamimi

Hi Syamimi,

Thanks for your response.

Let me summarize. Please help me confirm my understanding below.

First, COCO mAP is not in the list of supported metrics in OpenVINO, so yolo-v3-tf accuracy is validated only using mAP in OpenVINO. Is that right?

Second, as you mentioned before, yolo-v3-tf is tested and validated using both the mAP and COCO mAP metrics for the converted model prior to being added to your OMZ repository. => These are the validation metrics of the model itself before conversion, which have nothing to do with OpenVINO.

Third, all the metrics that OpenVINO supports are listed at [https://docs.openvinotoolkit.org/latest/omz_tools_accuracy_checker_metrics.html]. In other words, the metrics supported by OpenVINO do not include COCO mAP, so for yolo-v3-tf I can only focus on map.

Is my understanding above correct?

Thanks for your help!!

BR

Jacko Chiu

Hi Jacko Chiu,

First and foremost, we apologize for any confusion our previous replies may have caused.

Here are the answers to your follow-up questions:

Question 1: First, COCO mAP is not in the list of supported metrics in OpenVINO, so yolo-v3-tf accuracy is validated only using mAP in OpenVINO. Is that right?

The accuracy_checker tool lets you reproduce the validation pipeline and collect aggregated quality indicators on popular datasets, both for networks in their source frameworks and in OpenVINO™-supported formats.

As yolo-v3 is an object detection model, you can use any of these metrics to validate accuracy with the OpenVINO accuracy_checker tool (not only mAP), depending on your preferences.

Object Detection:

- map

- recall

- miss_rate

- coco_precision

- coco_recall

Question 2: These are the validation metrics of the model itself before conversion, which have nothing to do with OpenVINO.

You are correct. Our development team used these two metrics (mAP and COCO mAP) to calculate the accuracy before adding the model to the OMZ repository.

Question 3: In other words, the metrics supported by OpenVINO do not include COCO mAP, so for yolo-v3-tf I can only focus on map.

This is where our previous reply caused confusion.

As mentioned in Question 1, you can use any of the available object detection metrics to calculate accuracy. We think you can use coco_precision and specify its threshold parameter to follow the COCO mAP metric defined here: COCO - Common Objects in Context (cocodataset.org)

That said, mAP is the most commonly used metric for calculating model accuracy.

- coco_precision - MS COCO Average Precision metric for key points recognition and object detection tasks. Metric is calculated as a percentage. Direction of metric's growth is higher-better. Supported representations: PoseEstimationAnnotation, PoseEstimationPrediction, DetectionAnnotation, DetectionPrediction.

- max_detections - max number of predicted results per image. If you have more predictions, the results with minimal confidence will be ignored.

- threshold - intersection over union threshold. You can specify one value or comma separated range of values. This parameter supports precomputed values for standard COCO thresholds (.5, .75, .5:.05:.95).
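The max_detections rule quoted above amounts to a per-image cut-off by confidence. A minimal sketch, assuming detections are (label, confidence, box) tuples; the tuple layout and helper name are hypothetical, and the default of 100 mirrors the usual COCO evaluation limit rather than a confirmed Accuracy Checker default.

```python
def keep_top_detections(detections, max_detections=100):
    """Keep the max_detections highest-confidence predictions for one image.

    Each detection is a (label, confidence, box) tuple; predictions beyond the
    limit, i.e. those with the lowest confidence, are dropped.
    """
    ranked = sorted(detections, key=lambda det: det[1], reverse=True)
    return ranked[:max_detections]


if __name__ == "__main__":
    detections = [
        ("dog", 0.20, (10, 10, 50, 50)),
        ("person", 0.90, (0, 0, 40, 80)),
        ("car", 0.50, (60, 20, 120, 70)),
    ]
    for label, conf, _box in keep_top_detections(detections, max_detections=2):
        print(label, conf)
```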

Looking at your original question, the INT8 accuracy you obtained with coco_precision (67.84%) was higher than the FP32 result (67.7%), and I believe you want to understand this further.

Please confirm this so that I can re-engage our engineering team to elaborate further on the reasoning behind the result obtained.

Regards,

Syamimi

Hi Syamimi,

Thanks for your response.

Looking at your original question, the INT8 accuracy you obtained with coco_precision (67.84%) was higher than the FP32 result (67.7%), and I believe you want to understand this further.

Please confirm this so that I can re-engage our engineering team to elaborate further on the reasoning behind the result obtained.

Yes, please.

Thanks

BR

Jacko

Hi Jacko Chiu,

I have escalated this case to our development team and am still waiting for them to share their findings with us. We will get back to you soon.

Regards,

Syamimi

Hi Jacko Chiu,

Thank you for waiting. This is the answer from our development team:

We see the same behaviour in our internal test results for this model, and it is expected. Similar results have been observed for some other quantized models too, due to the reduced variance in the weight coefficients of the quantized model. This does not mean that the overall accuracy of the quantized model becomes better or worse on real-life data; it is just a metric on a validation dataset, which is always limited compared with the effectively unlimited range of real-life data.

Sometimes INT8 accuracy is better than FP32, which may indicate that the model is not well tuned or that the validation dataset is not optimal. For this particular model the difference is small, so our development team does not see a problem and has no concrete concerns about coco_precision.
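To give a feel for how small the perturbation from 8-bit quantization is, the sketch below applies symmetric per-tensor quantization to a few made-up weights and measures the rounding error. This is a generic illustration under our own assumptions (symmetric range, one scale per tensor, hypothetical helper name), not POT's actual algorithm. Each weight moves by at most half a quantization step, which is consistent with the development team's point that small metric differences in either direction can appear on a limited validation set.

```python
def fake_quantize_symmetric(weights, num_bits=8):
    """Quantize to signed integers with one per-tensor scale, then dequantize.

    Returns (dequantized_weights, scale). With round-to-nearest, every value
    moves by at most scale / 2.
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    dequantized = [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]
    return dequantized, scale


if __name__ == "__main__":
    # Made-up weights for illustration only.
    weights = [0.5, -0.25, 0.1, 0.0, 0.0137]
    dequantized, scale = fake_quantize_symmetric(weights)
    max_error = max(abs(a - b) for a, b in zip(weights, dequantized))
    print(f"scale = {scale:.6f}, max rounding error = {max_error:.6f}")
```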

Regards,

Syamimi

Hi Jacko Chiu,

This thread will no longer be monitored since we have provided a solution. If you need any additional information from Intel, please submit a new question.

Regards,

Syamimi
