C:\Intel\computer_vision_sdk_2018.5.445\deployment_tools\model_optimizer>python mo_tf.py --input_model=C:\Users\shishupalreddy\Desktop\frozen_inference_graph.pb --tensorflow_use_custom_operations_config C:/Intel/computer_vision_sdk_2018.5.445/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support_api_v1.7.json --tensorflow_object_detection_api_pipeline_config C:\Users\shishupalreddy\Desktop\pipeline.config --input_shape [1,600,600,3]

The other command I tried:

C:\Intel\computer_vision_sdk_2018.5.445\deployment_tools\model_optimizer>python mo_tf.py --input_model=C:\Users\shishupalreddy\Desktop\frozen_inference_graph.pb --tensorflow_use_custom_operations_config C:/Intel/computer_vision_sdk_2018.5.445/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json --tensorflow_object_detection_api_pipeline_config C:\Users\shishupalreddy\Desktop\pipeline.config --input_shape [1,600,1024,3]

The output I get:

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: C:\Users\Desktop\frozen_inference_graph.pb

- Path for generated IR: C:\Intel\computer_vision_sdk_2018.5.445\deployment_tools\model_optimizer\.

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: [1,224,224,3]

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: False

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Offload unsupported operations: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: C:\Users\Desktop\pipeline.config

- Operations to offload: None

- Patterns to offload: None

- Use the config file: C:/Intel/computer_vision_sdk_2018.5.445/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support_api_v1.7.json

Model Optimizer version: 1.5.12.49d067a0

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

[ WARNING ] The model resizes the input image keeping aspect ratio with min dimension 600, max dimension 1024. The provided input height 224, width 224 is transformed to height 600, width 600.

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

The graph output nodes "num_detections", "detection_boxes", "detection_classes", "detection_scores" have been replaced with a single layer of type "Detection Output". Refer to IR catalogue in the documentation for information about this layer.

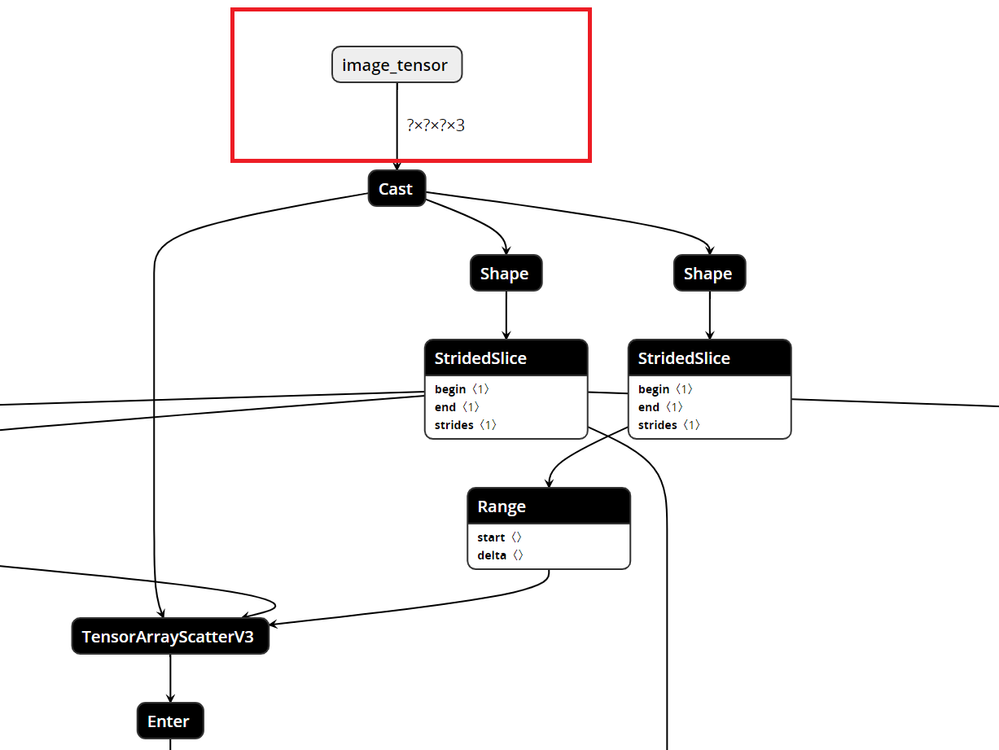

[ ERROR ] Cannot infer shapes or values for node "ToFloat_3".

[ ERROR ] NodeDef mentions attr 'Truncate' not in Op<name=Cast; signature=x:SrcT -> y:DstT; attr=SrcT:type; attr=DstT:type>; NodeDef: ToFloat_3 = Cast[DstT=DT_FLOAT, SrcT=DT_UINT8, Truncate=false](image_tensor_port_0_ie_placeholder). (Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary.).

[ ERROR ]

[ ERROR ] It can happen due to bug in custom shape infer function <function tf_native_tf_node_infer at 0x000002D88DA507B8>.

[ ERROR ] Or because the node inputs have incorrect values/shapes.

[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).

[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.

[ ERROR ] Stopped shape/value propagation at "ToFloat_3" node.

For more information please refer to Model Optimizer FAQ (<INSTALL_DIR>/deployment_tools/documentation/docs/MO_FAQ.html), question #38.

I have also attached the frozen_inference_graph.pb and pipeline.config files.

The above is the error I am facing, and I couldn't find a solution in the forum. We are using OpenVINO R5, and the model is faster_rcnn_inception_v2_coco. Could you please help us out?

I'm having the same issue!

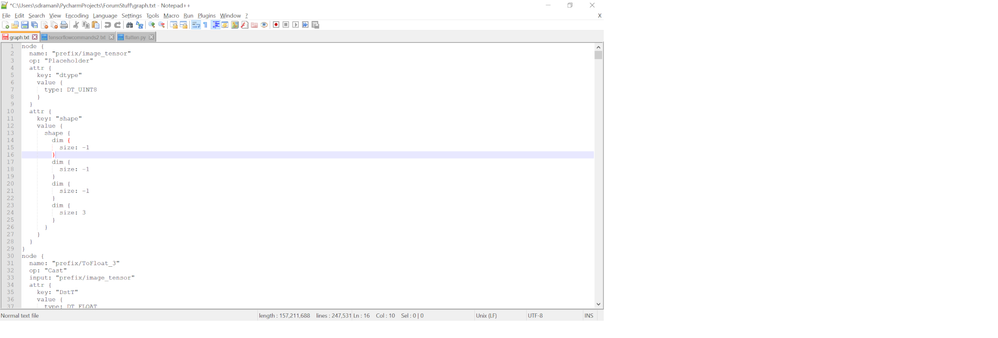

I used code similar to below to see the text version of frozen_inference_graph.pb.

    import tensorflow as tf

    def load_graph(frozen_graph_filename):
        # We load the protobuf file from the disk and parse it to retrieve the
        # unserialized graph_def
        with tf.gfile.GFile(frozen_graph_filename, "rb") as f:
            graph_def = tf.GraphDef()
            graph_def.ParseFromString(f.read())

        # Then, we import the graph_def into a new Graph and return it
        with tf.Graph().as_default() as graph:
            # The name var will prefix every op/nodes in your graph
            # Since we load everything in a new graph, this is not needed
            tf.import_graph_def(graph_def, name="prefix")
        return graph

    if __name__ == '__main__':
        mygraph = load_graph("C:\\<PATH>\\frozen_inference_graph.pb")
        tf.train.write_graph(mygraph, "./", "graph.txt")

What I noticed at the start of "graph.txt" (it turned out to be a HUGE file) just before ToFloat_3 was an input shape with dims -1, -1, -1, 3. Those -1 (which are OK in numpy) don't work for Model Optimizer. You must pass in a legitimate value for those dim attributes. Use the --input_shape argument to pass in the correct dimensions.
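
As a rough illustration of the point above, the sketch below refuses any shape that still contains -1 and assembles the `mo_tf.py` arguments with a fully specified `--input_shape`. The helper name `build_mo_args` and the short file names in the usage note are hypothetical; only the flags themselves are taken from the commands quoted in the original post.

```python
def build_mo_args(input_model, support_json, pipeline_config, input_shape):
    """Return an argument list for mo_tf.py with a fully specified input shape.

    Hypothetical helper: it only assembles flags quoted earlier in this thread.
    """
    dims = list(input_shape)
    # Every dimension must be a concrete positive integer; -1 is rejected.
    if len(dims) != 4 or any(not isinstance(d, int) or d <= 0 for d in dims):
        raise ValueError("input shape needs four positive integers, got %r" % (dims,))
    # No spaces inside the brackets, matching the commands in the original post.
    shape_arg = "[" + ",".join(str(d) for d in dims) + "]"
    return [
        "python", "mo_tf.py",
        "--input_model", input_model,
        "--tensorflow_use_custom_operations_config", support_json,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--input_shape", shape_arg,
    ]
```

For example, `build_mo_args("frozen_inference_graph.pb", "faster_rcnn_support.json", "pipeline.config", [1, 600, 1024, 3])` ends with `--input_shape [1,600,1024,3]`, while passing `[-1, -1, -1, 3]` raises `ValueError`.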

OP did specify the input shape.

From OP's pipeline.config file:

image_resizer {

keep_aspect_ratio_resizer {

min_dimension: 600

max_dimension: 1024

}

}

That would match the second run listed, correct?
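
To make the resizer settings concrete, here is a minimal sketch of the keep-aspect-ratio rule as I understand it: scale the image so its shorter side reaches `min_dimension`, unless that would push the longer side past `max_dimension`, in which case scale the longer side to `max_dimension` instead. The function name `keep_aspect_ratio_size` and the rounding are assumptions; the exact arithmetic inside Model Optimizer may differ.

```python
def keep_aspect_ratio_size(height, width, min_dimension=600, max_dimension=1024):
    """Approximate output (height, width) of a keep_aspect_ratio_resizer.

    Hypothetical helper; defaults mirror the pipeline.config quoted above.
    """
    # First try to bring the shorter side up (or down) to min_dimension.
    scale = min_dimension / min(height, width)
    # If the longer side would overshoot, cap it at max_dimension instead.
    if round(max(height, width) * scale) > max_dimension:
        scale = max_dimension / max(height, width)
    return round(height * scale), round(width * scale)
```

With these defaults, a 224 x 224 input maps to 600 x 600, which agrees with the warning in the original post's log, and a 600 x 1024 input is left unchanged.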

Please see the image below of the graph.txt output. Those -1s for shape of prefix/image_tensor are not allowed.

--input image_tensor

--input_shape [1,(height),(width),3]

I tried giving --input_shape with [1, 600, 1024, 3] as a parameter.

However, it gave me the same error. Could you please advise on how it should be done?

Thank you

Hello Shubha R,

I have successfully converted the frozen graph that I downloaded from http://download.tensorflow.org/models/object_detection/faster_rcnn_inception_v2_coco_2018_01_28.tar.gz, and its input shape is also -1, -1, -1, 3. The frozen_inference_graph.pb above has the same -1, -1, -1, 3 shape, yet it is not converted to .xml and .bin (the optimized IR). I am pasting snippets below for reference.

Thank you.

When I run the above code on my frozen inference graph, I get the following error:

Traceback (most recent call last):

File "/home/warrior/.local/lib/python3.5/site-packages/tensorflow/python/framework/importer.py", line 418, in import_graph_def

graph._c_graph, serialized, options) # pylint: disable=protected-access

tensorflow.python.framework.errors_impl.InvalidArgumentError: NodeDef mentions attr 'Truncate' not in Op<name=Cast; signature=x:SrcT -> y:DstT; attr=SrcT:type; attr=DstT:type>; NodeDef: prefix/ToFloat = Cast[DstT=DT_FLOAT, SrcT=DT_INT32, Truncate=false](prefix/Const). (Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary.).

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "export_pb_to_txt.py", line 19, in <module>

mygraph = load_graph("/home/warrior/kai/pipeline/frozen_inference_graph.pb")

File "export_pb_to_txt.py", line 14, in load_graph

tf.import_graph_def(graph_def, name="prefix")

File "/home/warrior/.local/lib/python3.5/site-packages/tensorflow/python/util/deprecation.py", line 432, in new_func

return func(*args, **kwargs)

File "/home/warrior/.local/lib/python3.5/site-packages/tensorflow/python/framework/importer.py", line 422, in import_graph_def

raise ValueError(str(e))

ValueError: NodeDef mentions attr 'Truncate' not in Op<name=Cast; signature=x:SrcT -> y:DstT; attr=SrcT:type; attr=DstT:type>; NodeDef: prefix/ToFloat = Cast[DstT=DT_FLOAT, SrcT=DT_INT32, Truncate=false](prefix/Const). (Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary.).

It looks like I am having a different issue. Any ideas would be appreciated!
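
The hint in the error text ("Check whether your GraphDef-interpreting binary is up to date with your GraphDef-generating binary") suggests the graph was exported by a newer TensorFlow than the one reading it, since the reader's `Cast` op does not know the `Truncate` attribute. Matching the TensorFlow versions is probably the cleanest fix. Where that is not possible, one possible workaround is to drop the unknown attribute before importing. The sketch below assumes that removing `Truncate=false` does not change behaviour; it is untested against the graphs in this thread, other nodes may carry further unknown attributes, and the names `strip_attr` and `rewrite_frozen_graph` are hypothetical.

```python
def strip_attr(graph_def, op_type="Cast", attr_name="Truncate"):
    """Delete attr_name from every node of type op_type; return how many changed."""
    changed = 0
    for node in graph_def.node:
        # node.attr behaves like a mapping from attribute name to value.
        if node.op == op_type and attr_name in node.attr:
            del node.attr[attr_name]
            changed += 1
    return changed


def rewrite_frozen_graph(src_path, dst_path):
    """Load a frozen graph, strip the attribute, and save it (TensorFlow 1.x API)."""
    # Imported lazily so strip_attr stays usable without TensorFlow installed.
    import tensorflow as tf

    graph_def = tf.GraphDef()
    with tf.gfile.GFile(src_path, "rb") as f:
        graph_def.ParseFromString(f.read())
    strip_attr(graph_def)
    with tf.gfile.GFile(dst_path, "wb") as f:
        f.write(graph_def.SerializeToString())
```

The rewritten file could then be passed to `load_graph` or to Model Optimizer in place of the original `frozen_inference_graph.pb`.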
