
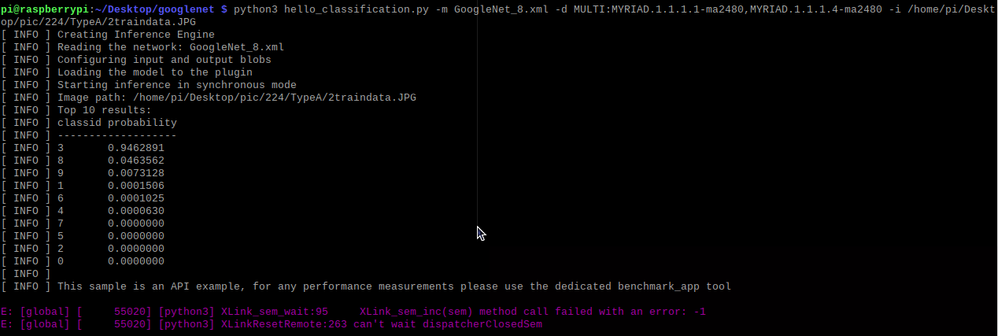

I am trying to run my neural network on an NCS2.

Although inference does produce a result, the following errors appear below the output:

```
E: [global] [ 69139] [python3] XLink_sem_wait:95 XLink_sem_inc(sem) method call failed with an error: -1
E: [global] [ 69139] [python3] XLinkResetRemote:263 can't wait dispatcherClosedSem
```

Has anyone encountered this kind of problem and has a solution?


Hi alexalex35,

Thanks for reaching out to us.

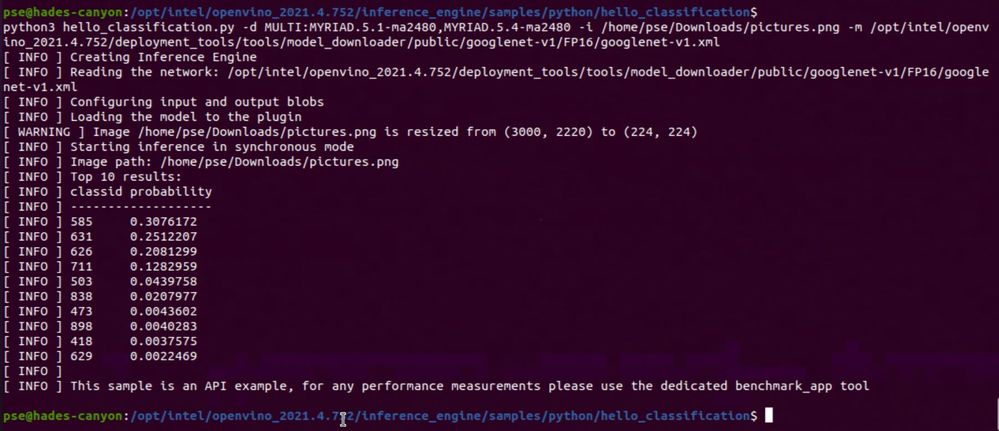

I am able to run Hello Classification Python sample with 2 Intel® Neural Compute Stick 2 (NCS2) without encountering your errors. I am using OpenVINO™ 2021.4.752 on my side.

The XLink library contains reimplemented POSIX functions for working with semaphores, e.g. sem_init. However, the MYRIAD plugin has not contained XLink since a certain revision, so the built-in system functions are called instead.

Hence, the error is triggered when the USB3 protocol is unable to complete the method call. As long as inference completes, you can treat these error messages as warnings.

Regards,

Peh


Hello Peh_Intel,

Thanks for your reply,

I would like to ask whether ignoring this warning message makes the inference time longer.

I ask because with a single stick, which does not produce this warning, inference is faster than with two sticks.


Hi alexalex35,

In this scenario, the warning message needs some attention, as it points to abnormal behavior of the MULTI plugin, similar to the previous Stack Overflow case.

Please try modifying the Hello Classification Python sample to configure the individual devices and create the Multi-Device on top of them:

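```python
# ie (IECore) and net (from ie.read_network) are already created in hello_classification.py
myriad1_config = {}
myriad2_config = {}

# Configure each NCS2 individually; names must match ie.available_devices exactly
ie.set_config(config=myriad1_config, device_name="MYRIAD.1.1.1.1-ma2480")
ie.set_config(config=myriad2_config, device_name="MYRIAD.1.1.1.4-ma2480")

# Create the Multi-Device on top of the two configured devices
exec_net = ie.load_network(network=net, device_name="MULTI", config={"MULTI_DEVICE_PRIORITIES": "MYRIAD.1.1.1.1-ma2480,MYRIAD.1.1.1.4-ma2480"})
```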
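Device names are matched as exact strings, so a stray space inside a name (for example "MYRIAD. 1.1.1.4-ma2480") would most likely not match any device. A minimal sketch of a helper that builds the MULTI_DEVICE_PRIORITIES value and rejects such names; the function name multi_device_priorities is hypothetical, and in real code the names would come from ie.available_devices.

```python
def multi_device_priorities(devices):
    """Join device names into a MULTI_DEVICE_PRIORITIES value.

    Leading/trailing whitespace is stripped; whitespace inside a name
    raises ValueError instead of being passed on to the plugin.
    """
    cleaned = []
    for name in devices:
        name = name.strip()
        if any(ch.isspace() for ch in name):
            raise ValueError(f"device name contains whitespace: {name!r}")
        cleaned.append(name)
    if not cleaned:
        raise ValueError("at least one device is required")
    return ",".join(cleaned)


# Example with the two device names used in this thread.
priorities = multi_device_priorities(["MYRIAD.1.1.1.1-ma2480", "MYRIAD.1.1.1.4-ma2480"])
print(priorities)  # MYRIAD.1.1.1.1-ma2480,MYRIAD.1.1.1.4-ma2480
```

With an IECore instance, the input list could be `[d for d in ie.available_devices if d.startswith("MYRIAD")]`, and the result passed as `config={"MULTI_DEVICE_PRIORITIES": priorities}`.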

Regards,

Peh


Hello Peh_Intel,

Thanks for your reply,

I will try it out and report back to you with any questions and findings.


Hello Peh_Intel,

After I added the following code to hello_classification.py:

```python
myriad1_config = {}
myriad2_config = {}

ie.set_config(config=myriad1_config, device_name="MYRIAD.1.1.1.1-ma2480")
ie.set_config(config=myriad2_config, device_name="MYRIAD.1.1.1.4-ma2480")

exec_net = ie.load_network(network=net, device_name="MULTI", config={"MULTI_DEVICE_PRIORITIES": "MYRIAD.1.1.1.1-ma2480,MYRIAD.1.1.1.4-ma2480"})
```

The following error appears when I run it.

The attached file is my modified hello_classification.py. Could you please help me to see what is wrong?


Hi alexalex35,

Thanks for pointing out the error.

I am sorry about my previous suggestion. I was not aware that multiple Intel® Neural Compute Stick 2 (NCS2) devices cannot be used in this way.

I just noticed that you're using OpenVINO™ on a Raspberry Pi. Which OpenVINO™ version are you using? Did you install the OpenVINO™ Toolkit from the Raspbian OS package or build it from open source?

Sincerely,

Peh


Hello Peh_Intel,

I tried the file at the following URL:

When I use multiple compute sticks, the throughput does increase, but my response time is not reduced and is sometimes even longer. Is it possible to reduce the response time by using multiple NCS2s?

I am using OpenVINO™ 2021.4.752 on my side.


Hi alexalex35,

Are you able to get around 183.15 FPS with 2 Intel® Neural Compute Stick 2 (NCS2) devices and 92.68 FPS with a single NCS2? I am sorry, but I do not quite understand. Could you please explain what you mean by response time?

Regards,

Peh


Hello Peh_Intel,

By response time I mean the time from starting hello_classification.py until the inference result is available. It is noticeably longer when I use two NCS2s.

So I followed this link and added timing code to my hello_classification.py to measure the response time.

I got 1.69 s for one NCS2 and 3.29 s for two. I think this result is abnormal.

In the compressed archive, I have provided the model I used, the required input image, and hello_classification.py. Could you run them to see whether you get the same problem?


Hi alexalex35,

Thanks for sharing all the required files with us.

I also get a longer response time when using 2 Intel® Neural Compute Stick 2 (NCS2) devices compared to a single NCS2.

I would say this result is expected. With the MULTI plugin, the measured time is longer because it includes the time needed to initialize both NCS2 devices, since timing starts from the beginning of the script.
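To see where the time actually goes, it helps to time model loading separately from inference instead of measuring the whole script. A minimal sketch using time.perf_counter; profile_phases, fake_load and fake_infer are hypothetical names, and the sleeps only simulate a slow load, they are not measurements from this thread.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(label, results):
    """Store the wall-clock duration of the enclosed block in results[label]."""
    start = time.perf_counter()
    try:
        yield
    finally:
        results[label] = time.perf_counter() - start


def profile_phases(load_fn, infer_fn, image):
    """Time model loading and one inference as separate phases.

    load_fn and infer_fn are hypothetical stand-ins; in hello_classification.py
    they would wrap ie.load_network(...) and exec_net.infer(...).
    """
    results = {}
    with timed("load", results):
        model = load_fn()
    with timed("infer", results):
        output = infer_fn(model, image)
    return output, results


# Usage with fake functions: loading is deliberately slower than inference.
def fake_load():
    time.sleep(0.2)  # stands in for device initialisation and model upload
    return "model"


def fake_infer(model, image):
    time.sleep(0.01)  # stands in for a single inference
    return f"{model}:{image}"


output, phases = profile_phases(fake_load, fake_infer, "cat.jpg")
for phase, seconds in phases.items():
    print(f"{phase}: {seconds:.3f} s")
```

Reporting the two phases separately makes it visible whether a slower MULTI run comes from initializing two devices or from the inference itself.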

Regards,

Peh


Hello Peh_Intel,

If I want to use multiple NCS2s, is there any way to run them other than setting ie.load_network to MULTI mode?

Can you describe in detail how MULTI works?

What can I do if I want to shorten the inference time?


Hi alexalex35,

The Multi-Device plugin automatically assigns inference requests to the available devices and executes them in parallel. To saturate an NCS2 and get maximum performance from it, it is recommended to assign 4 inference requests to it simultaneously. This means you should create 4 InferRequests per device; if you have 2 NCS2s, you should create 8 InferRequests.
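The "4 requests per device" rule is simple arithmetic, and a round-robin assignment shows why more devices raise throughput: more requests are in flight at once. A minimal sketch; plan_requests is a hypothetical helper, and the round-robin order is only an illustration, since the real MULTI plugin schedules requests itself based on which device is free.

```python
from itertools import cycle


def plan_requests(devices, requests_per_device=4):
    """Return the total number of InferRequests and a round-robin assignment.

    Illustrates the "4 requests per NCS2" guideline only; it does not
    reproduce the MULTI plugin's actual scheduling.
    """
    total = requests_per_device * len(devices)
    assignment = {device: [] for device in devices}
    for request_id, device in zip(range(total), cycle(devices)):
        assignment[device].append(request_id)
    return total, assignment


total, assignment = plan_requests(["MYRIAD.1.1.1.1-ma2480", "MYRIAD.1.1.1.4-ma2480"])
print(total)  # 8
print(assignment["MYRIAD.1.1.1.1-ma2480"])  # [0, 2, 4, 6]
print(assignment["MYRIAD.1.1.1.4-ma2480"])  # [1, 3, 5, 7]
```

With the 2021.x Python API, the request count would typically be passed as `num_requests=total` to `ie.load_network`, and the requests started asynchronously (for example with `exec_net.start_async(request_id=..., inputs=...)`) so that they actually overlap.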

You can try running Benchmark App with MYRIAD and with MULTI:MYRIAD.x-ma2480,MYRIAD.x-ma2480 using the same number of inference requests (-nireq 8). The throughput with the MULTI plugin is higher than with a single NCS2.

Alternatively, you can run Benchmark App with MYRIAD and with MULTI:MYRIAD.x-ma2480,MYRIAD.x-ma2480 using the same number of iterations (-niter 1000). The total duration with the MULTI plugin is shorter than with a single NCS2.
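The link between throughput and total duration is a simple division, which also shows why a single image is not answered faster. A worked sketch using the FPS figures quoted earlier in this thread; duration_seconds is a hypothetical helper, and the results are estimates derived from those figures, not new measurements.

```python
def duration_seconds(niter, fps):
    """Approximate wall-clock time to run `niter` inferences at a given throughput."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return niter / fps


# Throughput figures quoted earlier in this thread.
single = duration_seconds(1000, 92.68)   # one NCS2
multi = duration_seconds(1000, 183.15)   # MULTI with two NCS2s
print(f"single NCS2: {single:.2f} s, MULTI: {multi:.2f} s")
# single NCS2: 10.79 s, MULTI: 5.46 s

# MULTI sends each whole request to one device, so the latency of any single
# request stays roughly that of one NCS2; only the batch of 1000 finishes sooner.
```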

Regards,

Peh


Hello Peh_Intel,

I later found out that most of the time was spent loading the model onto the device.

Can I load the model only once and then feed in subsequent images without loading the model again?


Hi alexalex35,

Yes, and you can also generate a blob file from the XML file to reduce the load time:

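```python
from openvino.inference_engine import IECore

ie = IECore()
# path_to_xml_file / path_to_bin_file: paths to your IR model files
net = ie.read_network(model=path_to_xml_file, weights=path_to_bin_file)
exec_net = ie.load_network(network=net, device_name="MYRIAD")

# Export the executable network to a blob file
exec_net.export('<path>/model.blob')
```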

This generates a blob file, which you can then import with the import_network method:

```python
exec_net = ie.import_network(model_file='<path>/model.blob', device_name="MYRIAD")
```
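The blob export and the "load once" question combine naturally: import the cached blob if it exists, otherwise compile and export it, then reuse the same network for every image. A minimal sketch, assuming hypothetical callables compile_and_export, import_blob and infer that you would implement with the IECore calls above (ie.load_network plus exec_net.export, ie.import_network, and exec_net.infer); the fakes below only record which path was taken.

```python
import os
import tempfile


def get_executable_network(blob_path, compile_and_export, import_blob):
    """Import a cached blob if present; otherwise compile once and export it."""
    if os.path.exists(blob_path):
        return import_blob(blob_path)
    return compile_and_export(blob_path)


def classify_all(exec_net, images, infer):
    """Reuse one loaded network for every image instead of reloading per image."""
    return [infer(exec_net, image) for image in images]


# Usage with fakes standing in for the OpenVINO calls.
calls = []


def fake_compile_and_export(path):
    calls.append("compile")
    with open(path, "wb") as f:
        f.write(b"blob")  # pretend exec_net.export(path) wrote the blob
    return "compiled-net"


def fake_import(path):
    calls.append("import")
    return "imported-net"


with tempfile.TemporaryDirectory() as tmp:
    blob = os.path.join(tmp, "model.blob")
    first = get_executable_network(blob, fake_compile_and_export, fake_import)
    second = get_executable_network(blob, fake_compile_and_export, fake_import)

results = classify_all(second, ["a.jpg", "b.jpg"], lambda net, img: f"{net}:{img}")
print(calls)    # ['compile', 'import']
print(results)  # ['imported-net:a.jpg', 'imported-net:b.jpg']
```

Only the first run pays the compile cost; later runs import the blob, and within a run every image reuses the same executable network.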

Regards,

Peh


Hi alexalex35,

This thread will no longer be monitored since we have provided answers. If you need any additional information from Intel, please submit a new question.

Regards,

Peh
