Hi,

I am loading a model into OpenVINO using C++. Sample code:

    InferenceEngine::Core myCore;
    InferenceEngine::CNNNetwork myNetwork;
    myCore.SetConfig({ {CONFIG_KEY(CACHE_DIR), "./tmp_cache/"} });
    try {
        InferenceEngine::ExecutableNetwork myExecutable_Network = myCore.LoadNetwork(xmlpath, "GPU");
        // ...do some things after the load...
    }
    catch (const std::exception& e) {
        std::cout << "Unable to load model! " << e.what() << std::endl;
    }

The code compiles without errors. If I set the device to "CPU", it runs without errors. If I use "GPU", I see the following exception:

implementation_map for struct cldnn::broadcast could not find any implementation to match key

and the following without the try block:

Unhandled exception at 0x00007FF910584F69 in main.exe: Microsoft C++ exception: InferenceEngine::GeneralError at memory location 0x000000BABDAF50B0.

I am using this version of openvino:

openvino_2021.4.582

With:

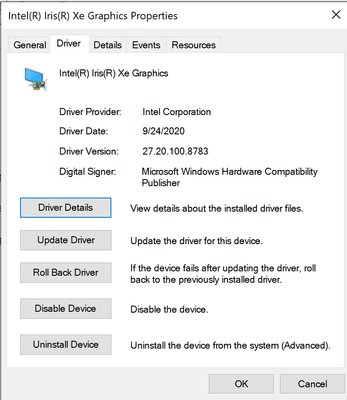

Intel Iris XE graphics, Windows 10

Any thoughts on how to solve this?
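Since the post reports that the same model loads on "CPU" but throws on "GPU", one possible stopgap is to try devices in order and keep the first one that loads. The following is a minimal sketch under that assumption, not a fix for the GPU error itself. The helper name `load_on_first_working_device` is hypothetical and is not part of OpenVINO; the actual load call is passed in as a callback so the helper only depends on the standard library.

```cpp
#include <functional>
#include <iostream>
#include <stdexcept>
#include <string>
#include <vector>

// Hypothetical helper (not an OpenVINO API): calls `load` for each device in
// order and returns the name of the first device for which it does not throw.
// Throws std::runtime_error if loading fails on every device.
std::string load_on_first_working_device(
    const std::vector<std::string>& devices,
    const std::function<void(const std::string&)>& load) {
    std::string errors;
    for (const auto& device : devices) {
        try {
            load(device);
            return device;
        } catch (const std::exception& e) {
            // Report the failure and move on to the next device.
            std::cerr << "Load failed on " << device << ": " << e.what() << '\n';
            errors += device + ": " + e.what() + "; ";
        }
    }
    throw std::runtime_error("No device could load the model: " + errors);
}

// Possible usage with the variables from the post (assumes `myCore` and
// `xmlpath` are already defined as shown there):
//   InferenceEngine::ExecutableNetwork net;
//   std::string used = load_on_first_working_device({"GPU", "CPU"},
//       [&](const std::string& d) { net = myCore.LoadNetwork(xmlpath, d); });
```

Passing the loader as a callback keeps the retry logic separate from the Inference Engine call, so the same helper works with whatever load call the application already uses.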

Hi Ct123,

Thanks for reaching out to us.

Could you please try the latest version of the Intel® Distribution of OpenVINO™ Toolkit, which is 2021.4.2 (2021.4.752)?

Besides that, are you able to run the Image Classification Demo Script with the GPU plugin?

On another note, could you please share your script and model with us for further investigation?

Regards,

Wan

Hi Wan,

I tried updating my driver to the recommended version and switching to the latest version of OpenVINO. This did not solve the issue.

I was able to run the demo with -d GPU without errors.

I have attached a sample script that was able to reproduce the error.

I have also attached the .xml file for my model. The .bin file is too large (245 MB). The forum restricts files to smaller than 71 MB.

If you need the bin file I will need another way to send it to you.

I will switch from release to debug and try to print a full stack trace.

Thanks,

ct123

My script was deleted, so here is the full version:

    #include <inference_engine.hpp>
    #include <iostream> //standard input and output
    #include <opencv2/opencv.hpp> //opencv functionality
    #include <time.h> //time functions
    #include <chrono> //time tracking
    #include <vector> //vector functionality
    #include <assert.h> //assert statements
    #include <fstream> //file access
    #include <string> //string operations
    #include <filesystem> //file system access
    #include <Windows.h> //windows system options

    using namespace InferenceEngine;

    int main()
    {
        //assigns input arguments
        std::string binpath = "./openvino_yolo/yolov4_1_3_608_608_static.bin";
        std::string xmlpath = "./openvino_yolo/yolov4_1_3_608_608_static.xml";
        int height = 608;
        int width = 608;

        InferenceEngine::Core mCore;
        mCore.SetConfig({ {CONFIG_KEY(CACHE_DIR), "./yolo_cache"} });
        InferenceEngine::ExecutableNetwork executableNetwork;
        InferenceEngine::InferRequest infer_request;

        // 3.load model to device
        executableNetwork = mCore.LoadNetwork(xmlpath, "GPU");

        // 4.create infer request
        infer_request = executableNetwork.CreateInferRequest();

        std::cout << "Done!\n";
        cv::waitKey(10000);
        std::cout << "Inference Complete...\n";
    }

Hi Ct123,

Thanks for sharing your full script with us.

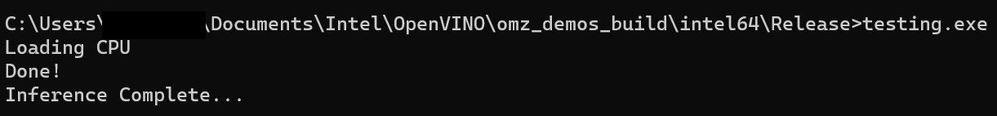

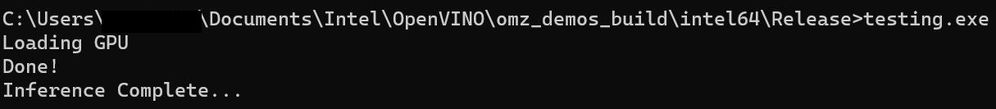

For your information, we can compile and run the application with yolo-v4-tf model successfully when using the CPU and the GPU plugin.

Load CPU Plugin:

Load GPU Plugin:

Could you please share your bin file with us? You can upload your bin file here.

Regards,

Wan

Hi,

I could not add a file to the Google Drive provided, so I made my own. Here are the links:

bin:

https://drive.google.com/file/d/1NInOk_A3QzW6NKDTDbbswXq9ubIo4YS3/view?usp=sharing

xml:

https://drive.google.com/file/d/13nM_pSuBDAt9nOHVZJe9SK8fpVpfyI1u/view?usp=sharing

Hopefully this helps.

Best,

ct123

Hi Ct123,

I also encountered the error "implementation_map for struct cldnn::broadcast could not find any implementation to match key" when running your model with the GPU plugin. However, no error occurs when running your model with the CPU plugin.

Thus, could you please share the following items with us for further investigation?

- Source model

- The command you used to convert your model to the Intermediate Representation

Regards,

Wan
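Because the error names `cldnn::broadcast`, it can help to check which layer types the IR actually contains before sharing the model. The sketch below counts layers per type in an IR .xml file. It assumes each `<layer ...>` opening tag sits on a single line and carries a `type="..."` attribute; it uses a line-by-line regex scan rather than a real XML parser, and the function name `count_layer_types` is hypothetical.

```cpp
#include <istream>
#include <map>
#include <regex>
#include <string>

// Hypothetical helper: counts how many <layer ...> tags of each type appear
// in an IR .xml stream. Assumes every <layer> opening tag is on one line.
std::map<std::string, int> count_layer_types(std::istream& ir_xml) {
    // Captures the value of the type attribute inside a <layer ...> tag.
    static const std::regex layer_re(R"re(<layer\b[^>]*\btype="([^"]+)")re");
    std::map<std::string, int> counts;
    std::string line;
    while (std::getline(ir_xml, line)) {
        for (std::sregex_iterator it(line.begin(), line.end(), layer_re), end;
             it != end; ++it) {
            ++counts[(*it)[1].str()];
        }
    }
    return counts;
}
```

To use it, open the .xml file with `std::ifstream`, pass the stream to the function, and look up the entry for "Broadcast" in the returned map.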

Hi Wan,

The original model comes from here:

GitHub - Tianxiaomo/pytorch-YOLOv4: PyTorch ,ONNX and TensorRT implementation of YOLOv4

The model is first converted to ONNX format using the following command in Python:

    Model Optimizer arguments:
    Common parameters:
        - Path to the Input Model: input_path\yolov4_1_3_608_608_static.onnx
        - Path for generated IR: output_path
        - IR output name: yolov4_1_3_608_608_static
        - Log level: ERROR
        - Batch: Not specified, inherited from the model
        - Input layers: Not specified, inherited from the model
        - Output layers: Not specified, inherited from the model
        - Input shapes: Not specified, inherited from the model
        - Mean values: Not specified
        - Scale values: Not specified
        - Scale factor: Not specified
        - Precision of IR: FP32
        - Enable fusing: True
        - Enable grouped convolutions fusing: True
        - Move mean values to preprocess section: None
        - Reverse input channels: False
    ONNX specific parameters:
    [ WARNING ] Failed to import Inference Engine Python API in: PYTHONPATH
    [ WARNING ] DLL load failed: Access is denied.
    [ WARNING ] Failed to import Inference Engine Python API in: C:\Program Files (x86)\Intel\openvino_2021\python\python3.7
    [ WARNING ] DLL load failed: Access is denied.
    [ WARNING ] Could not find the Inference Engine Python API. At this moment, the Inference Engine dependency is not required, but will be required in future releases.
    [ WARNING ] Consider building the Inference Engine Python API from sources or try to install OpenVINO (TM) Toolkit using "install_prerequisites.sh"
    Model Optimizer version: 2021.4.2-3974-e2a469a3450-releases/2021/4
    [ WARNING ] Using fallback to produce IR.
    [ SUCCESS ] Generated IR version 10 model.
    [ SUCCESS ] XML file: output_path\yolov4_1_3_608_608_static.xml
    [ SUCCESS ] BIN file: output_path\yolov4_1_3_608_608_static.bin
    [ SUCCESS ] Total execution time: 31.22 seconds.

I will upload the original and intermediate forms of the model to Google Drive tonight (China time).

Hi Wan,

The ONNX model is here:

https://drive.google.com/file/d/1QaAKSKo5q6FORjpM0erYV0bRYbkyMxG5/view?usp=sharing

and the PyTorch model is here:

https://drive.google.com/file/d/16_XvOj297DLlKGslRpn_tLf_BlM8Ytwn/view?usp=sharing

best,

ct123

Hi Ct123,

Thanks for your information.

I have reported this issue to our Engineering team, and we will update you as soon as possible.

Regards,

Wan

Hi Ct123,

Thanks for your patience.

According to the Engineering team, there are bugs in the GPU plugin that cause it not to recognize the broadcast operation.

We have submitted a request to fix broadcast in the GPU plugin. However, we cannot guarantee when this fix will be available in the OpenVINO™ toolkit.

On another note, we recommend using the yolo-v4-tf model available in the Open Model Zoo, if that model is suitable for your application.

Hope this information helps.

Regards,

Wan

Hi Wan,

Thanks for looking into this. Which original YOLOv4 implementation is the model in the Open Model Zoo based on? I need to train YOLOv4 with custom data.

Thanks,

ct123

Hi Ct123,

For your information, yolo-v4-tf was implemented in the Keras framework and converted to the TensorFlow framework. It was pre-trained on the Common Objects in Context (COCO) dataset with 80 classes. For details, see TF Keras YOLOv4/v3/v2 Modelset. For details on training YOLOv4 with a custom dataset, please refer to Quick Start.

Regards,

Wan

Hi Ct123,

Thanks for your patience.

For your information, this issue has been fixed in OpenVINO™ 2022.1, which will be available in March 2022.

Alternatively, you can build OpenVINO™ from source by cloning the master branch. Steps to build OpenVINO™ from source are available here.

Regards,

Wan
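Given that the fix is tied to 2022.1, an application could check at startup whether the installed runtime is new enough before selecting the GPU. The sketch below only parses a version string; it assumes the string starts with `<year>.<major>`, as in the Model Optimizer version line earlier in the thread (2021.4.2-3974-...). The name `version_at_least` is hypothetical. The string itself might come from `InferenceEngine::GetInferenceEngineVersion()->buildNumber`, though whether that field follows this format on a given build is an assumption to verify.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: returns true if `version` starts with "<year>.<major>"
// and that pair is at least min_year.min_major. Returns false when the string
// does not start with that pattern.
bool version_at_least(const std::string& version, int min_year, int min_major) {
    std::istringstream in(version);
    int year = 0;
    int major = 0;
    char dot = '\0';
    if (!(in >> year >> dot >> major) || dot != '.') {
        return false;
    }
    if (year != min_year) {
        return year > min_year;
    }
    return major >= min_major;
}
```

For example, `version_at_least(build_string, 2022, 1)` could decide whether to load on "GPU" or stay on "CPU", where `build_string` is whatever version string the application has available.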

Hi Ct123,

This thread will no longer be monitored since this issue has been resolved.

If you need any additional information from Intel, please submit a new question.

Regards,

Wan
