Hello,

Using this command:

python mo_tf.py --input_model frozen_inference_graph.pb --input_shape [1,600,600,3] --tensorflow_use_custom_operations_config deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json --tensorflow_object_detection_api_pipeline_config faster_rcnn_inception_v2_coco_2018_01_28_pipeline.config --reverse_input_channels

I have been able to successfully convert the publicly available faster_rcnn_inception_v2_coco_2018_01_28 model (http://download.tensorflow.org/models/object_detection/faster_rcnn_inception_v2_coco_2018_01_28.tar.gz).

Trace Log:

[ WARNING ] Use of deprecated cli option --tensorflow_use_custom_operations_config detected. Option use in the following releases will be fatal. Please use --transformations_config cli option instead

Model Optimizer arguments:

Common parameters:

- Path to the Input Model: frozen_inference_graph.pb

- Path for generated IR: /home/ubuntu/Downloads/models/

- IR output name: frozen_inference_graph

- Log level: ERROR

- Batch: Not specified, inherited from the model

- Input layers: Not specified, inherited from the model

- Output layers: Not specified, inherited from the model

- Input shapes: Not specified, inherited from the model

- Mean values: Not specified

- Scale values: Not specified

- Scale factor: Not specified

- Precision of IR: FP32

- Enable fusing: True

- Enable grouped convolutions fusing: True

- Move mean values to preprocess section: None

- Reverse input channels: False

TensorFlow specific parameters:

- Input model in text protobuf format: False

- Path to model dump for TensorBoard: None

- List of shared libraries with TensorFlow custom layers implementation: None

- Update the configuration file with input/output node names: None

- Use configuration file used to generate the model with Object Detection API: /home/acer/Downloads/faster_rcnn_inception_v2_coco_2018_01_28_pipeline.config

- Use the config file: /home/acer/deployment_tools/model_optimizer/extensions/front/tf/faster_rcnn_support.json

- Inference Engine found in: /opt/intel/openvino_2021/python/python3.6/openvino

Inference Engine version: 2.1.2021.3.0-2787-60059f2c755-releases/2021/3

Model Optimizer version: 2021.3.0-2787-60059f2c755-releases/2021/3

[ WARNING ] Model Optimizer removes pre-processing block of the model which resizes image keeping aspect ratio. The Inference Engine does not support dynamic image size so the Intermediate Representation file is generated with the input image size of a fixed size.

Specify the "--input_shape" command line parameter to override the default shape which is equal to (600, 600).

The Preprocessor block has been removed. Only nodes performing mean value subtraction and scaling (if applicable) are kept.

The graph output nodes have been replaced with a single layer of type "DetectionOutput". Refer to the operation set specification documentation for more information about the operation.

[ SUCCESS ] Generated IR version 10 model.

[ SUCCESS ] XML file: /home/acer/Documents/models/frozen_inference_graph.xml

[ SUCCESS ] BIN file: /home/acer/Documents/models/frozen_inference_graph.bin

[ SUCCESS ] Total execution time: 23.14 seconds.

[ SUCCESS ] Memory consumed: 583 MB.
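
The deprecation warning at the top of this log names --transformations_config as the replacement for --tensorflow_use_custom_operations_config. As a minimal sketch, the same conversion command could be assembled with the newer option as shown here. The helper name build_mo_command is hypothetical, the file paths are copied from the command above, and nothing is executed.

```python
import shlex
from typing import List


def build_mo_command(model: str, pipeline_config: str,
                     transformations_config: str) -> List[str]:
    """Assemble the mo_tf.py argument list (hypothetical helper, runs nothing)."""
    return [
        "python", "mo_tf.py",
        "--input_model", model,
        "--input_shape", "[1,600,600,3]",
        # Replacement named by the warning for the deprecated
        # --tensorflow_use_custom_operations_config option.
        "--transformations_config", transformations_config,
        "--tensorflow_object_detection_api_pipeline_config", pipeline_config,
        "--reverse_input_channels",
    ]


command = build_mo_command(
    model="frozen_inference_graph.pb",
    pipeline_config="faster_rcnn_inception_v2_coco_2018_01_28_pipeline.config",
    transformations_config=(
        "deployment_tools/model_optimizer/extensions/front/tf/"
        "faster_rcnn_support.json"
    ),
)

# Print a shell-safe version of the command for copy and paste.
print(" ".join(shlex.quote(arg) for arg in command))
```

Keeping the arguments in a list makes it easy to compare the flags that were passed with the values echoed back under "Model Optimizer arguments" in the log.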

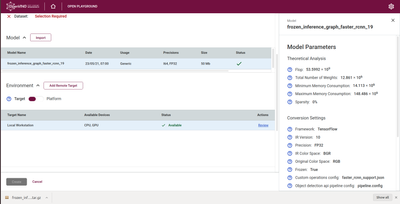

I have also been successful in converting the same model using the DL Workbench.

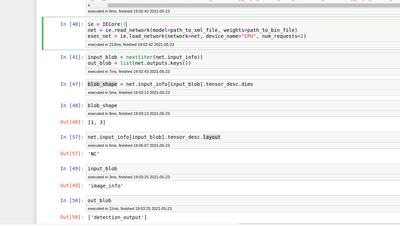

However, when I read and load the model, the input blob I see has only 2 dimensions, with layout 'NC'.

I have been able to work with other SSD-based models, so I am not sure what the issue is here. Could you please point out what the error might be?

I have also attached the converted files (.bin, .xml, and .mapping) for reference.

Hello,

Reading through previous posts, I was able to figure out that R-CNN models have two input tensors, namely:

['image_info', 'image_tensor']

of which I have to use image_tensor for inference.
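
As a rough sketch of how the two inputs can be told apart, the helper below picks the image input by its rank (4-D) and the auxiliary input by its rank (2-D). The function names and the concrete shapes are assumptions for illustration. In particular, the [1, 3] shape of image_info and its (height, width, scale) contents are taken from the Model Optimizer documentation for Faster R-CNN models, not from this thread, so it may be worth checking whether image_info also needs to be fed during inference.

```python
from typing import Dict, List, Optional, Sequence, Tuple


def split_faster_rcnn_inputs(
    input_shapes: Dict[str, Sequence[int]]
) -> Tuple[str, Optional[str]]:
    """Return (image_input_name, info_input_name) based on blob rank."""
    image_name = None
    info_name = None
    for name, shape in input_shapes.items():
        if len(shape) == 4:      # NCHW image blob
            image_name = name
        elif len(shape) == 2:    # NC blob, reported as 'NC' in the first post
            info_name = name
    if image_name is None:
        raise ValueError("no 4-D image input found")
    return image_name, info_name


def make_image_info(image_shape_nchw: Sequence[int],
                    scale: float = 1.0) -> List[List[float]]:
    """Build one (height, width, scale) row; this content is an assumption."""
    _, _, height, width = image_shape_nchw
    return [[float(height), float(width), float(scale)]]


# Assumed shapes: with the 2021.x Python API they could be read from
# net.input_info[name].input_data.shape after ie.read_network(...).
shapes = {"image_info": [1, 3], "image_tensor": [1, 3, 600, 600]}

image_name, info_name = split_faster_rcnn_inputs(shapes)
image_info = make_image_info(shapes[image_name])
print(image_name, info_name, image_info)
```

Both blobs would then go into a single request, for example exec_net.infer(inputs={image_name: image, info_name: image_info}), where image is the preprocessed NCHW array.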

The problem now is that during inference I am getting nonsensical values like these:

[array([[[[-1.9206988e-22, 4.5856091e-41, -1.9206988e-22,

4.5856091e-41, 2.4168914e-37, 0.0000000e+00,

2.4168914e-37],

[ 0.0000000e+00, 2.2271901e-37, 0.0000000e+00,

2.2271866e-37, 0.0000000e+00, 2.4318434e-37,

0.0000000e+00],

[ 2.4318398e-37, 0.0000000e+00, 2.3274243e-36,

0.0000000e+00, 2.3274214e-36, 0.0000000e+00,

6.1100523e-37],

I am really not sure what is going wrong here. Initially, I suspected that the result was related to reversing the input channels, but I have tried it both ways (with and without --reverse_input_channels) and I see the same result.

Please point me to the right resources if possible.

Hello Anuj Khandelwal,

Greetings to you.

The input blob you are seeing has the layout 'NC' because it has two dimensions. By default, the layout depends on the number of dimensions: C for 1-dimensional, NC for 2-dimensional, CHW for 3-dimensional, NCHW for 4-dimensional, and NCDHW for 5-dimensional. The default input layout can be changed to a preferred one using the setLayout() function. You can refer to the getLayout() function for details.
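
The rank-to-layout rule described above can be written down as a small lookup. This is only an illustrative sketch: the function name default_layout is hypothetical, and ranks not listed in this reply simply return None.

```python
from typing import Optional, Sequence

# Default layouts by number of dimensions, as listed in the reply above.
DEFAULT_LAYOUT_BY_RANK = {
    1: "C",
    2: "NC",
    3: "CHW",
    4: "NCHW",
    5: "NCDHW",
}


def default_layout(shape: Sequence[int]) -> Optional[str]:
    """Return the default layout name for a blob of the given shape."""
    return DEFAULT_LAYOUT_BY_RANK.get(len(shape))


# A 2-D blob such as image_info maps to 'NC'; a 4-D image blob maps to 'NCHW'.
# The concrete shapes here are assumptions for illustration.
print(default_layout([1, 3]))
print(default_layout([1, 3, 600, 600]))
```

Seen this way, an 'NC' layout only indicates that the blob being inspected is the 2-D input, not that the image input was converted incorrectly.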

Based on our observation, we find that you are using a different input_shape than the one provided in model_optimizer_args for faster_rcnn_inception_v2_coco. Can you share with us which demo you are using?

Sincerely,

Zulkifli

Hello @Zulkifli_Intel ,

As I mentioned earlier in this thread, I was able to get past the issue where the input blob had only 2 dimensions ('NC'). To reiterate, after conversion to IR format, the model has two input tensors, namely:

['image_info', 'image_tensor']

For inference, we need to use 'image_tensor' to provide images when making a request. I have trained a TensorFlow Faster R-CNN model on my own data. The model runs absolutely fine with the TensorFlow Object Detection API, but after conversion to OpenVINO I am getting poor results.

A snapshot of the (1, 100, 7) output vector is:

[array([[[[-1.9206988e-22, 4.5856091e-41, -1.9206988e-22,

4.5856091e-41, 2.4168914e-37, 0.0000000e+00,

2.4168914e-37],

[ 0.0000000e+00, 2.2271901e-37, 0.0000000e+00,

2.2271866e-37, 0.0000000e+00, 2.4318434e-37,

0.0000000e+00],

[ 2.4318398e-37, 0.0000000e+00, 2.3274243e-36,

0.0000000e+00, 2.3274214e-36, 0.0000000e+00,

6.1100523e-37],

I have also tested it with your object detection demo (Python) and I get the same incorrect output. It is not mentioned anywhere that this particular demo supports Faster R-CNN based models, but I believe it does, because the output layout for Faster R-CNN is the same as for SSD.
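
For reference, here is a minimal sketch of how rows of an SSD-style DetectionOutput blob are commonly interpreted. It assumes each row of 7 values is [image_id, label, confidence, x_min, y_min, x_max, y_max] with normalized coordinates, and that a negative image_id marks the end of valid detections. The function name and the sample rows are hypothetical, not taken from the model in this thread.

```python
from typing import Dict, List, Sequence


def parse_detections(rows: Sequence[Sequence[float]],
                     conf_threshold: float = 0.5) -> List[Dict[str, object]]:
    """Keep rows above the threshold, stopping at the first negative image_id."""
    detections = []
    for image_id, label, conf, x_min, y_min, x_max, y_max in rows:
        if image_id < 0:
            # Assumed end-of-detections marker.
            break
        if conf < conf_threshold:
            continue
        detections.append({
            "image_id": int(image_id),
            "label": int(label),
            "confidence": float(conf),
            "box": (x_min, y_min, x_max, y_max),  # normalized to [0, 1]
        })
    return detections


# Hypothetical rows: one confident detection, one weak one, then the marker.
sample_rows = [
    [0, 1, 0.875, 0.25, 0.25, 0.5, 0.75],
    [0, 3, 0.125, 0.0, 0.0, 0.5, 0.5],
    [-1, 0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0, 2, 0.99, 0.0, 0.0, 1.0, 1.0],  # after the marker, so it is ignored
]

detections = parse_detections(sample_rows)
print(detections)
```

Under these assumptions, rows like the ones in the snapshot above (confidences around 1e-37) would not produce any detection, which is consistent with the output blob not holding valid results.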

Hello Anuj Khandelwal,

The Object Detection Faster RCNN demo was removed from the Open Model Zoo (OMZ) from OpenVINO 2021.2 onwards, as indicated by the developers in GitHub PR 1858.

The reason is that, as a general approach, OMZ demos are validated with models available in OMZ. We can't guarantee that the model will work as expected, since it is not part of OMZ.

Additionally, you may notice in the original repository that the model was marked as deprecated a long time ago. We see no value in spending time on supporting a model that was abandoned even by its author.

Model's original repository: https://github.com/rbgirshick/py-faster-rcnn

Thus, using an unsupported demo for this model is not recommended, as it has not been validated by the development team. In line with the developers' response above, we recommend that you use the Intel OMZ faster_RCNN model for inference, as it was validated by Intel developers in the OpenVINO environment.

Sincerely,

Zulkifli

Hello Anuj Khandelwal,

This thread will no longer be monitored since we have provided information. If you need any additional information from Intel, please submit a new question.

Regards,

Zulkifli
