I tried deploying the following PyTorch module to OpenVINO. The model exports to ONNX and to OpenVINO IR, but when I try to compile and use it I get the error "RuntimeError: could not create a primitive".

Versions: I tried both openvino / openvino-dev 2022.2 and 2022.1, with onnx 1.11.0; Ubuntu 20.04; CPU: Intel(R) Core(TM) i7-7700 CPU @ 3.60GHz

    import torch
    from torch import nn


    class TemplateMatch(nn.Module):
        """
        Custom Template Matching layer.
        Uses normalized correlation to find the best match.
        (Can be easily modified to use other metrics.)

        This is a re-implementation of OpenCV's matchTemplate,
        with CCOEFF_NORMED metric.

        For testing of faster native deployment (Intel OpenVINO on CPU /
        integrated GPU).
        """

        def __init__(self, templates, masks,
                     padding=0,  # 'valid'  # 0; changed from earlier versions
                     device='cpu'
                     ):
            """
            Inputs
            ------
            template - (l2-normalized !) template (only masked region !),
                       torch.Tensor
            mask - binary mask of the same shape as template
            """
            super().__init__()
            self.template_shape = templates.shape
            in_ch, out_ch, k1, k2 = self.template_shape  # 1, nr_templates,
                                                         # (template size)
            self.template_sums = torch.sum(templates,  # .flatten(2, -1),
                                           # dim=-1).to(device)
                                           dim=(2, 3)).to(device)
            self.mask_sums = torch.sum(masks,  # .flatten(2, -1),
                                       # dim=-1).to(device)
                                       dim=(2, 3)).to(device)
            self.device = device

            # Fixed convolution with ones; used for normalization
            # NOTE: nn.Conv2d defaults to bias=True, so a randomly initialized
            # bias is added to the output unless bias=False is passed
            self.conv_norm = nn.Conv2d(in_ch, out_ch, (k1, k2),
                                       padding=padding)
            self.conv_norm.weight = nn.Parameter(data=masks,
                                                 requires_grad=False)
            # Leave default padding (zeros)
            self.correlation = nn.Conv2d(in_ch, out_ch, (k1, k2),
                                         padding=padding)
            # NOTE: template * mask should already be done before
            self.correlation.weight = nn.Parameter(data=templates,  # * masks
                                                   requires_grad=False)

        def forward(self, input_):
            # get (masked) norms for all blocks (mask is included in conv_norm
            # definition)
            # NOTE: padding must be 0 i.e. 'valid for this'
            # NOTE: masks * ... is not necessary (template is already masked)
            # h, w = self.template_shape[2:]
            sums = self.conv_norm(input_)  # / self.mask_sums
            centers = torch.div(sums, self.mask_sums)  # sums / self.mask_sums
            norms = torch.sqrt(self.conv_norm(
                # torch.square(input_))
                input_ * input_)
                - sums * 2. * centers
                + self.mask_sums * centers * centers  # torch.square(centers)
                )

            # Return correlation tensor
            # We only look at masked region implicitly (convolution kernel is
            # already masked)
            result = torch.div(
                self.correlation(input_) - self.template_sums * centers,
                norms)[0].flatten(1, -1)
            max_ind = torch.argmax(result, dim=-1)
            return result[:, max_ind], max_ind.to(torch.int32)
            # return torch.max(
            #     torch.div((self.correlation(input_) - self.template_sums * centers)
            #               , norms)[0].flatten(1, -1),  # remove batch dim
            #     dim=-1  # torch.tensor([0, 1])  # .to(self.device)
            # )  # , centers.shape
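The `norms` expression in `forward` relies on expanding the masked sum of squared deviations: for a window x, mask m and masked mean c = Σ(m·x) / Σm, the sum Σ m·(x − c)² equals Σ m·x² − 2c·Σ m·x + c²·Σ m. A minimal NumPy sketch that checks this identity on one random window is given here; the array sizes and variable names are illustrative assumptions, not taken from the posted model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical 5x7 image window and binary mask (sizes are arbitrary).
window = rng.normal(size=(5, 7))
mask = (rng.random(size=(5, 7)) > 0.3).astype(np.float64)

mask_sum = mask.sum()               # plays the role of self.mask_sums
masked_sum = (mask * window).sum()  # plays the role of conv_norm(input_)
center = masked_sum / mask_sum      # plays the role of centers

# Direct definition: masked sum of squared deviations from the masked mean.
direct = (mask * (window - center) ** 2).sum()

# Expanded form, term by term as in the `norms` expression of forward().
expanded = ((mask * window * window).sum()
            - masked_sum * 2.0 * center
            + mask_sum * center * center)

print(np.isclose(direct, expanded))
```

Floating-point cancellation can make the expanded form slightly negative for near-constant windows, so the argument of `torch.sqrt` may need clamping in practice. This is a general numerical caveat, not a diagnosis of the error reported in this thread.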

Hi Mf22,

Thanks for reaching out to us.

Did you encounter the same error when using your ONNX model without utilizing the OpenVINO™ toolkit?

Could you share the following information with us for replication purposes?

· PyTorch model

· ONNX model

· Intermediate Representation (IR)

· Source code to compile and use the IR with OpenVINO™ toolkit

· Source code to use the ONNX model without utilizing the OpenVINO™ toolkit

Regards,

Wan

Hi Mf22,

Thanks for your information.

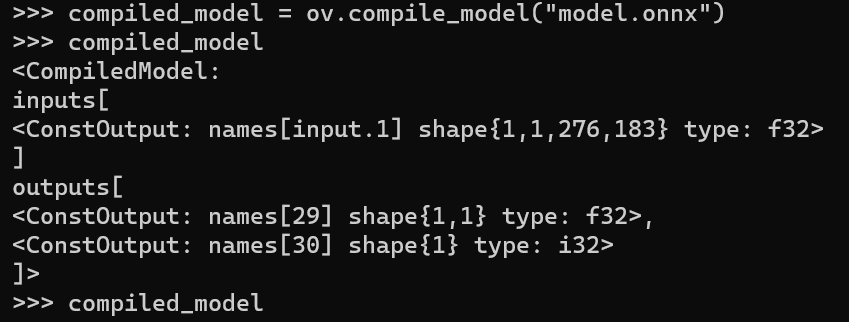

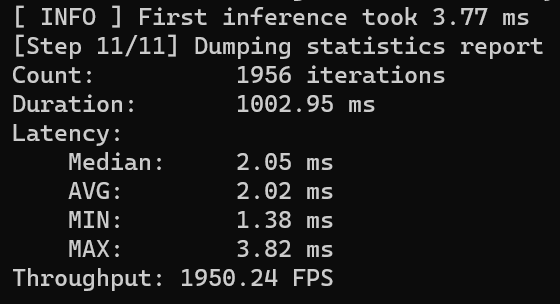

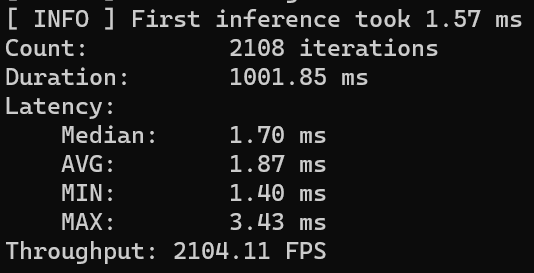

For your information, I’ve validated that your model compiles with the OpenVINO™ toolkit.

On another note, both the ONNX model and the IR work fine with the Benchmark Python Tool.

ONNX:

IR:

Could you please provide the steps to reproduce the error so that we can replicate it on our end?

Regards,

Wan

Hi @Wan_Intel ,

thank you for your feedback.

torch -> onnx:

    torch.onnx.export(
        model.cpu() if dynamic else model,  # --dynamic only compatible with cpu
        im.cpu() if dynamic else im,
        f,
        verbose=False,
        opset_version=opset,
        training=torch.onnx.TrainingMode.TRAINING if train else torch.onnx.TrainingMode.EVAL,
        do_constant_folding=not train,
        input_names=['images'],
        output_names=['output'],
        dynamic_axes={
            'images': {
                0: 'batch',
                2: 'height',
                3: 'width'},
            'output': {
                0: 'batch',
                1: 'anchors'}
        } if dynamic else None)

onnx -> OpenVINO IR:

    import os
    import subprocess

    import openvino.inference_engine as ie

    f = str(file_).replace('.pt', f'_openvino_model{os.sep}')
    cmd = f"mo --input_model {file_.with_suffix('.onnx')} --output_dir {f} --data_type {'FP16' if half else 'FP32'}"
    subprocess.check_output(cmd.split())  # export
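The conversion snippet builds the `mo` command as one string and splits it on whitespace, which would break if a path contained spaces. A small sketch of passing the arguments as a list instead is shown here; the helper name `build_mo_command` and the example paths are hypothetical, while the `mo` flags are the ones used in the post.

```python
from pathlib import Path


def build_mo_command(onnx_path, output_dir, half=False):
    """Return the Model Optimizer call as an argument list (no string splitting)."""
    return [
        "mo",
        "--input_model", str(onnx_path),
        "--output_dir", str(output_dir),
        "--data_type", "FP16" if half else "FP32",
    ]


# Hypothetical paths; a directory name with a space stays a single argument.
cmd = build_mo_command(Path("my models") / "model.onnx",
                       Path("my models") / "model_openvino_model")
print(cmd)
# The list can then be passed directly to subprocess.check_output(cmd).
```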

Output of `benchmark_app -m model.xml` is:

    [Step 1/11] Parsing and validating input arguments
    [ WARNING ] -nstreams default value is determined automatically for a device. Although the automatic selection usually provides a reasonable performance, but it still may be non-optimal for some cases, for more information look at README.
    [Step 2/11] Loading OpenVINO
    [ WARNING ] PerformanceMode was not explicitly specified in command line. Device CPU performance hint will be set to THROUGHPUT.
    [ INFO ] OpenVINO:
             API version............. 2022.1.0-7019-cdb9bec7210-releases/2022/1
    [ INFO ] Device info
             CPU
             openvino_intel_cpu_plugin version 2022.1
             Build................... 2022.1.0-7019-cdb9bec7210-releases/2022/1
    [Step 3/11] Setting device configuration
    [ WARNING ] -nstreams default value is determined automatically for CPU device. Although the automatic selection usually provides a reasonable performance, but it still may be non-optimal for some cases, for more information look at README.
    [Step 4/11] Reading network files
    [ INFO ] Read model took 5.35 ms
    [Step 5/11] Resizing network to match image sizes and given batch
    [ INFO ] Network batch size: 1
    [Step 6/11] Configuring input of the model
    [ INFO ] Model input 'images' precision f32, dimensions ([...]): 1 1 975 1714
    [ INFO ] Model output 'output' precision f32, dimensions ([...]): 1 1
    [ INFO ] Model output '30' precision i32, dimensions ([...]): 1
    [Step 7/11] Loading the model to the device
    [ ERROR ] could not create a primitive
    Traceback (most recent call last):
      File "/home/proto-touchpad/app/code/vindija/venv/lib/python3.8/site-packages/openvino/tools/benchmark/main.py", line 298, in run
        compiled_model = benchmark.core.compile_model(model, benchmark.device)
      File "/home/proto-touchpad/app/code/vindija/venv/lib/python3.8/site-packages/openvino/runtime/ie_api.py", line 266, in compile_model
        super().compile_model(model, device_name, {} if config is None else config)
    RuntimeError: could not create a primitive

Versions are as mentioned above: OpenVINO 2022.1, onnx 1.11.0.

    network = ie.read_model(model=model_file,
                            weights=Path(model_file).with_suffix('.bin'))
    executable_network = ie.compile_model(model=network,
                                          device_name="CPU"
                                          )

gives the same error as above.

The models are here: https://drive.google.com/drive/folders/1SBqaClQOiZRn3LU9zJYOa-FtbgTS9BeO?usp=sharing

Code used for inference:

    from time import time
    from pathlib import Path

    import numpy as np
    # from PIL import Image

    # OpenVINO
    # from openvino.inference_engine import (IECore as Core,
    #                                        # Tensor
    #                                        )
    # API v2.0
    from openvino.runtime import Core, Tensor
    import psutil
    # from memory_profiler import profile

    from config import POS

    range_w, range_h = POS[0]
    IMGSZ = (range_h[1] - range_h[0],
             range_w[1] - range_w[0])
    print(f'IMGSZ: {IMGSZ}')

    DTYPE = np.float32  # TODO: set np.float16?
    CACHE_DIR = Path.cwd().joinpath('openvino_cache')
    MODE = 0o771
    CACHE_DIR.mkdir(mode=MODE, parents=True,
                    exist_ok=True)


    class OpenVINOWrapper():
        """
        YOLO wrapper class for OpenVINO inference engine.
        """

        def __init__(self, model_files=None,
                     img_shape=IMGSZ,
                     batch_size=1,
                     dtype=DTYPE,  # np.float32,  # float16
                     cache_dir=CACHE_DIR,
                     device='cpu',
                     EXT='.onnx',  # '.xml',
                     num_threads=None):
            """
            YOLO wrapper, for OpenVINO inference.

            Inputs
            ------
            w - model file (.xml) or corresponding folder
            """
            assert model_files is not None, "Models must be provided."
            w, h = img_shape
            input_shape = (batch_size, 1, h, w)  # input is Sobel-gradient image
            self.dtype = dtype

            ie = Core()
            # ------------------------------------------------------------------------------
            available_devices = ie.available_devices
            print(f'AVAILABLE DEVICES: {available_devices}')
            # ------------------------------------------------------------------------------
            ie.set_property({'CACHE_DIR': str(cache_dir)})
            # ie.set_config(config={'cache_dir': str(cache_dir)},
            #               device_name="CPU")

            self.executable_networks = []
            self.output_layers = []
            for model_file in model_files:
                if not Path(model_file).is_file():  # if not *.xml
                    # get *.xml file from *_openvino_model dir
                    model_file = next(Path(model_file).glob(f'*{EXT}'))
                print(f'model file: {model_file}')
                # network = ie.read_network(model=model_file,
                #                           weights=Path(model_file).with_suffix('.bin'),
                #                           )
                # API v2.0
                network = ie.read_model(model=model_file,
                                        weights=Path(model_file).with_suffix('.bin'))
                print(f'NETWORK: {network}')
                # executable_network = ie.load_network(network,
                #                                      "CPU",
                #                                      num_requests=1)
                executable_network = ie.compile_model(model=network,
                                                      device_name="CPU"
                                                      )
                self.executable_networks.append(executable_network)
                self.output_layers.append(next(iter(executable_network.outputs)))

                nthreads = executable_network.get_property("INFERENCE_NUM_THREADS")
                nireq = executable_network.get_property("OPTIMAL_NUMBER_OF_INFER_REQUESTS")
                print(f'Num. threads for inference: {nthreads}\n'
                      f'Optimal number of infer requests: {nireq}')

            # Initialize i.e. declare batch
            self.input_shape = (len(model_files), *input_shape)
            self.batch = np.zeros(self.input_shape, dtype=self.dtype)

        @property
        def input_shapes(self):
            return [self.input_shape]  # * len(self.executable_networks)

        @property
        def inputs_memory(self):
            return self.batch

        # @profile
        def execute(self):  # , batch):
            """
            Inference.
            Use async execution.
            See:
            https://docs.openvino.ai/latest/openvino_docs_OV_UG_Infer_request.html#doxid-openvino-docs-o-v-u-g-infer-request

            Inputs
            ------
            -

            Outputs
            -------
            y - output tensor
            elapsed_time
            mem_info
            """
            st_time = time()
            # ---------------------------------------------------------------------
            # Infer requests for async inference?
            # TODO: check multiple networks implementation
            infer_requests = []
            # add sub-networks/models, see above, for every pin location
            for ind, executable_network in enumerate(self.executable_networks):
                infer_request = executable_network.create_infer_request()
                # infer_request = executable_network.requests[0]
                infer_request.set_input_tensor(Tensor(array=self.inputs_memory[ind], shared_memory=False))
                # input_blobs = infer_request.input_blobs
                # data = input_blobs["images"].buffer
                # Original I64 precision was converted to I32
                # assert data.dtype == np.int32
                # Fill the first blob ...
                # data = self.inputs_memory[ind]
                # infer_request.set_callback()
                # Run asynchronously and wait for result
                # infer_request.start_async()  # run_async()
                # result = infer_request.infer()
                infer_request.infer()
                # infer_requests.append(result)  # infer_request)
                infer_requests.append(infer_request)

            outputs = []
            for infer_request in infer_requests:
                # infer_request.wait()
                # Get output
                y = infer_request.get_output_tensor().data  # [self.output_layer]
                # Get output blobs mapped to output layers names
                # output_blobs = infer_request.output_blobs
                # y = output_blobs["output"].buffer
                # Original I64 precision was converted to I32
                # assert y.dtype == np.int32
                # Process output data
                outputs.append(y)  # infer_request.get_output_tensor()[self.output_layer])
            # ---------------------------------------------------------------------
            # y = self.executable_network(self.inputs_memory)[self.output_layer]
            elapsed_time = time() - st_time
            mem = psutil.virtual_memory()
            mem_info = [mem.free, mem.total]
            # return y, elapsed_time, mem_info
            return outputs, elapsed_time, mem_info

        # Define __enter__ and __exit__ just because engine will be used as context
        def __enter__(self):
            return self

        def __exit__(self, exc_type, exc_value, exc_tb):
            pass

Code used for inference with ONNX:

    from time import time
    from pathlib import Path

    import numpy as np
    # from PIL import Image
    import onnxruntime
    import psutil
    # from memory_profiler import profile

    from config import POS

    range_w, range_h = POS[0]
    IMGSZ = (range_h[1] - range_h[0],
             range_w[1] - range_w[0])
    print(f'IMGSZ: {IMGSZ}')

    DTYPE = np.float32


    class ONNXWrapper():
        """
        YOLO wrapper class for ONNX Runtime inference.
        """

        def __init__(self, model_files=None,
                     img_shape=IMGSZ,
                     batch_size=1,
                     dtype=DTYPE,  # np.float32,  # float16
                     num_threads=None):
            """
            YOLO wrapper, for ONNX Runtime inference.

            Inputs
            ------
            w - model file (.onnx) or corresponding folder
            """
            assert model_files is not None, "Models must be provided."
            w, h = img_shape
            input_shape = (batch_size, 1, h, w)  # input is Sobel-gradient image
            self.dtype = dtype

            self.networks = []
            for model_file in model_files:
                if not Path(model_file).is_file():  # if not *.onnx
                    # get *.onnx file from the model dir
                    model_file = next(Path(model_file).glob('*.onnx'))
                print(f'model file: {model_file}')
                # ONNX runtime
                providers = ['CPUExecutionProvider']  # ['CUDAExecutionProvider', 'CPUExecutionProvider']
                session = onnxruntime.InferenceSession(str(model_file),
                                                       providers=providers)
                # meta = session.get_modelmeta().custom_metadata_map  # metadata
                # if 'stride' in meta:
                #     stride, names = int(meta['stride']), eval(meta['names'])
                self.networks.append(session)

            # Initialize i.e. declare batch
            # No several models here
            self.input_shape = (len(model_files), *input_shape)
            self.batch = np.zeros(self.input_shape, dtype=self.dtype)

        @property
        def input_shapes(self):
            return [self.input_shape]  # * len(self.executable_networks)

        @property
        def inputs_memory(self):
            return self.batch

        # @profile
        def execute(self):  # , batch):
            """
            Inference.

            Inputs
            ------
            -

            Outputs
            -------
            y - output tensor
            elapsed_time
            mem_info
            """
            st_time = time()
            outputs = []
            print(f'net: {self.networks}')
            # ---------------------------------------------------------------------
            # add sub-networks/models, see above, for every pin location
            for ind, net in enumerate(self.networks):
                # ONNX runtime
                # io_binding = net.io_binding()
                # OnnxRuntime will copy the data over to the CUDA device if 'input' is consumed by nodes on the CUDA device
                # io_binding.bind_cpu_input(net.get_inputs()[0].name,
                #                           self.inputs_memory[ind])
                # io_binding.bind_output('output')  # [out_.name for out_ in net.get_outputs()])
                print(f'Running... Output names: {[out_.name for out_ in net.get_outputs()]}')
                # print(f'Input shape: {self.inputs_memory.shape, np.max(self.inputs_memory)}')
                out = net.run([out_.name for out_ in net.get_outputs()],
                              {net.get_inputs()[0].name:
                               self.inputs_memory[ind]})
                # net.run_with_iobinding(io_binding)
                # out = io_binding.copy_outputs_to_cpu()
                outputs.append(out)
            # ---------------------------------------------------------------------
            # y = self.executable_network(self.inputs_memory)[self.output_layer]
            elapsed_time = time() - st_time
            mem = psutil.virtual_memory()
            mem_info = [mem.free, mem.total]
            # return y, elapsed_time, mem_info
            return outputs, elapsed_time, mem_info

        # Define __enter__ and __exit__ just because engine will be used as context
        def __enter__(self):
            return self

        def __exit__(self, exc_type, exc_value, exc_tb):
            pass

Hi @Wan_Intel ,

thank you for the feedback.

I posted my answer several times, but it doesn't show up; I am not sure what is happening.

I uploaded the files here: https://drive.google.com/drive/folders/1SBqaClQOiZRn3LU9zJYOa-FtbgTS9BeO?usp=sharing

ONNX inference works (although it is very slow for some reason).
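Since the only observation so far is that ONNX inference is "very slow", it can help to separate one-off start-up cost from steady-state latency before comparing runtimes. A minimal timing sketch under that assumption follows; `time_call` and the dummy workload are hypothetical stand-ins for a call such as the wrappers' `execute()`.

```python
from statistics import median
from time import perf_counter


def time_call(fn, warmup=2, repeats=5):
    """Run fn a few times untimed, then return per-call timings in seconds."""
    for _ in range(warmup):
        fn()  # warm-up runs are discarded
    timings = []
    for _ in range(repeats):
        start = perf_counter()
        fn()
        timings.append(perf_counter() - start)
    return timings


def dummy_workload():
    # Placeholder for a real inference call (assumption for illustration).
    return sum(i * i for i in range(10_000))


timings = time_call(dummy_workload)
print(f"median: {median(timings):.6f} s over {len(timings)} runs")
```

Reporting the median of several timed runs rather than a single measurement makes the numbers less sensitive to background load on the machine.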

Hi Mf22,

Thanks for sharing your information with us.

I encountered the same error "RuntimeError: could not create a primitive" when using your ONNX model with Benchmark Python Tool.

Could you please check if the following code is the right way to create your custom model?

    import torch
    from torch import nn
    from torch.utils.data import DataLoader
    from torchvision import datasets
    from torchvision.transforms import ToTensor
    import cv2
    import numpy as np


    class TemplateMatch(nn.Module):
        """
        Custom Template Matching layer.
        Uses normalized correlation to find the best match.
        (Can be easily modified to use other metrics.)

        This is a re-implementation of OpenCV's matchTemplate,
        with CCOEFF_NORMED metric.

        For testing of faster native deployment (Intel OpenVINO on CPU /
        integrated GPU).
        """

        def __init__(self, templates, masks,
                     padding=0,  # 'valid'  # 0; changed from earlier versions
                     device='cpu'
                     ):
            """
            Inputs
            ------
            template - (l2-normalized !) template (only masked region !),
                       torch.Tensor
            mask - binary mask of the same shape as template
            """
            super().__init__()
            self.template_shape = templates.shape
            in_ch, out_ch, k1, k2 = self.template_shape  # 1, nr_templates,
                                                         # (template size)
            self.template_sums = torch.sum(templates,  # .flatten(2, -1),
                                           # dim=-1).to(device)
                                           dim=(2, 3)).to(device)
            self.mask_sums = torch.sum(masks,  # .flatten(2, -1),
                                       # dim=-1).to(device)
                                       dim=(2, 3)).to(device)
            self.device = device

            # Fixed convolution with ones; used for normalization
            self.conv_norm = nn.Conv2d(in_ch, out_ch, (k1, k2),
                                       padding=padding)
            self.conv_norm.weight = nn.Parameter(data=masks,
                                                 requires_grad=False)
            # Leave default padding (zeros)
            self.correlation = nn.Conv2d(in_ch, out_ch, (k1, k2),
                                         padding=padding)
            # NOTE: template * mask should already be done before
            self.correlation.weight = nn.Parameter(data=templates,  # * masks
                                                   requires_grad=False)

        def forward(self, input_):
            # get (masked) norms for all blocks (mask is included in conv_norm
            # definition)
            # NOTE: padding must be 0 i.e. 'valid for this'
            # NOTE: masks * ... is not necessary (template is already masked)
            # h, w = self.template_shape[2:]
            sums = self.conv_norm(input_)  # / self.mask_sums
            centers = torch.div(sums, self.mask_sums)  # sums / self.mask_sums
            norms = torch.sqrt(self.conv_norm(
                # torch.square(input_))
                input_ * input_)
                - sums * 2. * centers
                + self.mask_sums * centers * centers  # torch.square(centers)
                )

            # Return correlation tensor
            # We only look at masked region implicitly (convolution kernel is
            # already masked)
            result = torch.div(
                self.correlation(input_) - self.template_sums * centers,
                norms)[0].flatten(1, -1)
            max_ind = torch.argmax(result, dim=-1)
            return result[:, max_ind], max_ind.to(torch.int32)
            # return torch.max(
            #     torch.div((self.correlation(input_) - self.template_sums * centers)
            #               , norms)[0].flatten(1, -1),  # remove batch dim
            #     dim=-1  # torch.tensor([0, 1])  # .to(self.device)
            # )  # , centers.shape


    image = cv2.imread("download.jpg")
    image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
    print(image.shape)

    input_imagex = np.expand_dims(image.transpose(1,0), 0)
    print(input_imagex.shape)

    input_image = np.expand_dims(input_imagex.transpose(0, 1, 2), 0)
    print(input_image.shape)

    x = torch.Tensor(input_image)
    model = TemplateMatch(templates=x, masks=x)
    print(model)

    torch.save(model.state_dict(), "model.pth")
    print("Saved PyTorch Model State to model.pth")

    model.load_state_dict(torch.load("model.pth"))
    y = torch.randn(1, 1, 276, 183)
    torch_out = model(y)
    torch.onnx.export(model, y, "model.onnx")
    print("Saved model.pth to model.onnx")

Regards,

Wan
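In the script above, the grayscale image is turned into a 4-D tensor and used as both `templates` and `masks`. A short NumPy sketch of what the `transpose` and `expand_dims` calls do to the shape is given here; the 183x276 image size is an assumption chosen to match the `torch.randn(1, 1, 276, 183)` input in the script, and a zero array stands in for `download.jpg`.

```python
import numpy as np

# Stand-in for the grayscale image (assumed height 183, width 276).
image = np.zeros((183, 276), dtype=np.uint8)

# Swap the two image axes, then add a leading axis.
step1 = np.expand_dims(image.transpose(1, 0), 0)

# transpose(0, 1, 2) is the identity permutation; then add another leading axis.
step2 = np.expand_dims(step1.transpose(0, 1, 2), 0)

print(image.shape, step1.shape, step2.shape)
```

If the image really has this size, the template kernel covers the whole input, so an unpadded convolution produces a 1x1 output map.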

Hi Mf22,

Just wanted to follow up and see if the code above was able to resolve your issue. If you are still facing the same issue, please get back to us.

Regards,

Wan

Hi Mf22,

This thread will no longer be monitored since we have provided a suggestion.

If you need any additional information from Intel, please submit a new question.

Regards,

Wan
