- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Colleagues,

I'm running into an issue I never have before with OpenMP. I've set the environment variable OMP_NUM_THREADS to say 12, but then when I check "top" it only shows 500-600% CPU usage for the executable...

A couple of points:

- Machine Architecture: CPU - Dual Intel Xeon 2690's, 256 GB of RAM, Ubuntu Linux 16.04 LTS Server

- Compiler: ifort -v : ifort version 19.1.1.217

- Compiler and link Options for execution:

FFLAGS = -Ofast -xHost -qopenmp

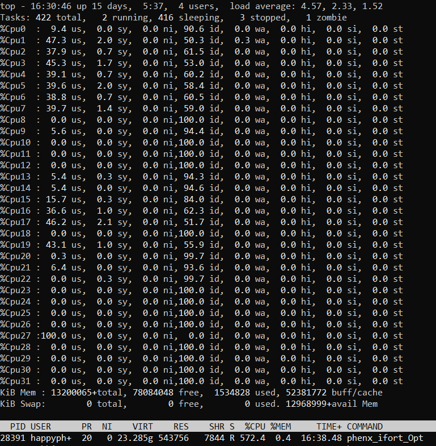

Screenshot from top:

What's odd is that when I've tried removing compiler optimizations, and just compile and link with "-qopenmp", it gives the same behavior. But when I remove all optimizations and compile and link with "-check all -qopenmp", it utilizes the full number of logical CPU's that are specified by OMP_NUM_THREADS.

I've been working on this all day, and can't seem to find any solutions. Any ideas?

Best Regards and thanks in advance.

Andrew

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

FWIW

The Intel recommended use of RANDOM_NUMBER in parallel applications is for the serial portion of the application to use the function to return an array of random numbers (called harvest). Then (not described in the documentation), in the parallel region, each thread to use a slice (or offset then stride) of the array. This may require a sufficiently large array for some simulation, however, periodically the array of random numbers could be replenished after a DO loop by MASTER (then barrier).

VTune is exceptionally good.

Jim Dempsey

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

With 256 GB of RAM, there is lots of potential for memory access delays. Increasing CPU usage with "-check all" suggests this is the case, as this increases the computation performed vs memory access rate.

Identifying opportunities to process arrays sequentially in memory, rather than striding across memory, could address this potential issue, as this would reduce the memory access rate. Memory access is page based, rather than word based. Essentially, referencing large arrays with a stride of 1 is preferable to a stride of n. Similarly, using syntax like Array(:,j) rather than Array(j,:) helps mitigate this possible cause.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Are you affinity pinning your OpenMP threads?

Try setting environment variables:

OMP_PLACES=cores

OMP_PROC_BIND=close (or experiment with =spread)

From your top command it appears that the serial portion of the application is consuming ~60% of the time (and is running on Cpu27).

IOW Cpu27 (master thread) is ~60% time in serial region + ~40% time in parallel region.

It looks like you will need to look at how to better utilize multiple threads.

In many such cases, the bottleneck is related to performing I/O. When this is the case, consider performing the I/O in a separate task (either prefetch and/or lazy write).

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you for responding John and Jim.

To address the points made:

- The program itself does not utilize 256 GB of RAM - That's just how much the host system has. The program utilizes somewhere between 1.5 GB - 5 GB, depending on the simulation geometry.

- One of the main reasons I have endeavored to write this code (radiation transport) was for efficiency purposes, so I have been meticulous regarding variable memory layout. Most variables that are intuitively 2-D or 3-D have been "re-mapped" to 1-D arrays. Those that are kept at 2-D, are referenced appropriates for FORTRAN.

- I've tried affinity pinning the OpenMP Threads to no avail. I've included the environment variables suggested with no change.

- Jim, your suggestion regarding time spent in the master thread taking about 60% of the time intrigued me, and I'm currently trying to see why that might be the case (setting up a VTune project). My code is setup so that within the parallel region, the master thread is not different than a slave thread:

!$OMP PARALLEL DEFAULT(SHARED)

ThreadPrivate Variable Allocation & initialization

!$OMP DO SCHEDULE(STATIC,CHUNKSIZE)

Thread Independent Work utilizing unchanged, shared memory

!$OMP END DO

!$OMP CRITICAL

Manual Reduction due to user defined variable use

!$OMP END CRITICAL

!$OMP END PARALLEL

So, I really don't know why the master thread would be spending more time than the others... Also, my code runs fine in gfortran will the following compiler settings:

-Ofast --fast-math -march=native -flto -fopenmp

I don't understand why the gfortran works but not the intel.... I really want to get the intel working... I'll report back after I've looked at the VTune output unless anyone has any other ideas..

Thanks again,

Andrew

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Try a little of your own instrumentation. Something similar to this:

!$OMP PARALLEL DEFAULT(SHARED) PRIVATE(iThread)

ThreadPrivate Variable Allocation & initialization

iThread = omp_get_thread_num()

tBegin = omp_get_wtime()

!$OMP DO SCHEDULE(STATIC,CHUNKSIZE)

Thread Independent Work utilizing unchanged, shared memory

!$OMP END DO NOWAIT ! *** add nowait clause

tWorkEnd[iThread] = omp_get_wtime()

!$OMP BARRIER ! *** satisfy implicit wait removed by NOWAIT

!$OMP CRITICAL

tCriticalBegin[iThread] = omp_get_wtime()

Manual Reduction due to user defined variable use

!$OMP END CRITICAL

tCriticalEnd[iThread] = omp_get_wtime()

timeWork[iThread] = tWorkEnd[iThread] - tBegin[iThread]

timeCriticalWait[iThread] = tCriticalBegin[iThread] - tWorkEnd[iThread]

timeCriticalEnd[iThread] = tCriticalEnd[iThread] - tCriticalBegin[iThread]

!$OMP END PARALLEL

! now report on per-thread timings

That is a starting suggestion.

I suspect you will find an inordinate amount of time spent timeCriticalWait[iThread].

Generally the barrier (implicit and explicit) wait time is less than the KMP_BLOCKTIME which defaults at 200ms. Your ~40% compute time indicates one of two possibilities:

The above loop barrier wait times exceeds the 200ms and continues through 60% of available time.

or

The sum of the time before and after the parallel region represents 60% of available time.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Okay, I found some time to further take a look at this and apply the timings as suggested by Jim. The output is as follows.

The # column specifies the thread id, "OpenMP" specifies timings using the OMP_get_wtime() function, and the CPU_TIME specifies timing using subroutine CPU_TIME(). The first set of values represent the time of the thread spent in the !$OMP Do Loop. The second Set represent the time spent waiting for the other threads to end before executing the CRITICAL section following the do loop. The third set represent the time spent in the CRITICAL section.

!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!! Time Spent in Different Code Regions by Thread History Do Loop # OpenMP CPU_TIME 0 6.4435426E+01 3.2933600E+02 1 2.4511222E+02 1.1178720E+03 2 2.5624229E+02 1.1639720E+03 3 2.6903399E+02 1.2099880E+03 4 2.5711092E+02 1.1674240E+03 5 2.5285332E+02 1.1504680E+03 6 3.0325177E+02 1.3288120E+03 7 3.0183117E+02 1.3245280E+03 8 3.0875257E+02 1.3376360E+03 9 3.0772239E+02 1.3364360E+03 10 3.0210662E+02 1.3255440E+03 11 3.0298542E+02 1.3280680E+03 Time Spent Waiting for Critical Reduction 0 2.4431700E+02 1 6.3640253E+01 2 5.2510267E+01 3 3.9718560E+01 4 5.1641703E+01 5 5.5899130E+01 6 5.5006089E+00 7 6.9214818E+00 8 1.1301041E-04 9 1.0298750E+00 10 6.6460960E+00 11 5.7674038E+00 Time Spent in Critical Reduction 0 5.3167343E-05 1 1.0013580E-05 2 1.7881393E-05 3 1.8119812E-05 4 1.5974045E-05 5 8.8214874E-06 6 1.1920929E-05 7 5.0067902E-06 8 2.9087067E-05 9 1.0013580E-05 10 6.9141388E-06 11 5.9604645E-06 ****************************** END PROJECTION LOOP

I don't know how useful the third set of values are, but the first and second set intrigue me. As noted before, the master thread gets full CPU utilization, while the other threads do no. What I found this time, is when the master thread finishes, that CPU shuts off - No other thread gets 100% CPU utilization after the master thread completes.

There is no I/O during the do loop; there is no computational difference between the master thread and the slaves; the parallel region encompasses about 95% of the runtime. I just don't understand why the master thread gets a full CPU, but the others don't. The gfortran compiler performs without any issues, but I really want the intel compiler to work.

Thanks again in advance.

Andrew

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Add to your current timing code a time capture at program start and a time capture just before your time spent report. Then include a print out of the difference in times. IOW the total runtime of the code up to the report. I do not know why the OpenMP time differs from the CPU_TIME. Doesn't matter as we are looking for relative time spent. I would use the OpenMP time alone. Alternatively I'd write a C function to get the __rdtsc() clock tick as this is as light-weight as it gets.

Your data collection seems to be in error.

master thread total DO loop time 64.43 seconds

master thread waiting for critical reduction 244.3 seconds (*** inside DO loop ***)

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Sorry I wasn't as clear. The second set of numbers represent the amount of time spent in the critical section following the !$OMP Do loop, but before !$OMP END PARALLEL. Those times do not include the amount of time spent in the Do Loop, only the critical section following. The do loop times are represented by the first set of numbers.

Does that clarify? Each thread has the same amount of work to perform. The master thread waited for longer the other threads longer than it took to perform the work. I hope that helps.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

What I suggested you measure is:

PROGRAM prgStart = omp_get_wtime() ... tFirstParallelStart = omp_get_wtime() !$omp parallel iThread = omp_get_thread_num() ! iThread is threadprivate !$omp end parallel tFirstParallelEnd = omp_get_wtime() tParallelStartupOverhead = tFirstParallelEnd - tFirstParallelStart ... ! serial code up to your parallel region that is timed ... ! timed parallel region ... ! serial code following parallel region that is timed prgEnd = omp_get_wtime() prgTime = prgEnd - prgStart ... ! report including prgTime END PROGRAM

You still have to explain why the first set of numbers (total time by each thread for entire DO loop) is less than the wait time for the critical section contained within the DO loop. Unless, of course, your system is running in Dr. Who's Tardis, one would expect the run time inside a part of the loop would be less than the entirety of the loop itself.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Thank you, Jim, and I apologize for my lack of clarity: physicist occupational hazard.

I'll try to be clear this time. The critical section is not within the Do Loop. It is contained immediately after the !$OMP END DO, but before !$OMP END PARALLEL.

Or am I misunderstanding you? I'll try you suggestion.

Andrew

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I understood where the critical section was located. I wanted you to add the total app runtime too.

Additionally, add a time capture immediately before the parallel region and immediately after the parallel region. Let's see what the difference of those produce.

What doesn't make sense is the time waiting for critical section.

The timeCriticalWait is not calculated correctly (my fault). Insert a new time capture between the barrier and the critical section. You can then produce the time spent at the barrier, and then using the new time capture, produce the correct value for the critical wait.

Some analysis:

thread 0 doWork time is ~64.4 seconds

threads 1:11 are ~245 to ~309 seconds

or thread 0 has about 1/4th the compute time as the other threads. Is there anything in your code that would account for this?

Note, if the wait time of thread 0 at the barrier exceeds the KMP_BLOCKTIME (default 200ms or 300ms), then the thread will be put to sleep, and then restarted. This will add additional latency and overhead.

Jim Dempsey

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Jim,

I wanted to personally thank you for your help! It was my pseudo-random generator. I have a modified implementation of an xorshift prng that is parallel robust, but I was mistakenly de-initializing the control variable that specifies which PRNG to use by including the control variable in a THREADPRIVATE clause. Without the control variable defined, my program defaults to the FORTRAN 90 intrinsic function RANDOM_NUMBER(). It appears Intel's implementation does not support parallel processing, while gfortran does. I think I remember that from when I was looking at which prng to utilize a few years ago, but it's hazy.

Needless to say, I now get the expected behavior after switching to the correct prng. I was able to get my code running in VTune to tell me why my Master Thread was running so much more efficiently. The slave threads were sleeping while waiting for RANDOM_NUMBER() to become available for use while a different thread was utilizing the function.

Again, thank you for helping me work through it. Educational experience.

Andrew

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

FWIW

The Intel recommended use of RANDOM_NUMBER in parallel applications is for the serial portion of the application to use the function to return an array of random numbers (called harvest). Then (not described in the documentation), in the parallel region, each thread to use a slice (or offset then stride) of the array. This may require a sufficiently large array for some simulation, however, periodically the array of random numbers could be replenished after a DO loop by MASTER (then barrier).

VTune is exceptionally good.

Jim Dempsey

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page