
Hello

I am testing Intel oneAPI Fortran 2021.7.0, the C compiler, and MKL 2022 on Windows 11 with my new laptop (i5-12450H). I am not an expert and am still learning parallel programming. Before moving on to SuperLU_MT, I have some questions about SuperLU 5.3.0 from GitHub (xiaoyeli):

1. Intel Fortran (on Windows) calls external routines by their uppercase names, while the C wrapper is compiled with lowercase names plus a trailing underscore. slu_Cnames.h contains options for several platforms: #ifdef ADD_, ADD__, UPCASE, and CRAY. Somehow I can't get the C compiler to use the existing header with uppercase names.

I tried

-DUPCASE and -Dlowercasename_=UPPERCASENAME

but neither worked. I had to edit slu_Cnames.h so that it defines only UPCASE; then it works.

So there is no way to do this with a compiler switch, without editing the header, right?

2. There are several compilers now: icx, icl, ifort, and ifx.

I think icx is the Clang-based one and icl is the classic one. Somehow icx doesn't recognize /Qpar.

What is the difference between ifort and ifx?

3. I tried to build SuperLU and its Fortran wrapper first, so I copied c_fortran_*.c into SuperLU's SRC folder,

since they use the same includes and the same C files. I compiled with icl *.c /c using the modified slu_Cnames.h (which contains only the uppercase names), built the library with xilib *.obj /out:superlu.lib, and copied that library to the FORTRAN folder.

ifort f77_main.f hbcode1.f superlu.lib /Qmkl:sequential /O3 works

f77_main < ..\EXAMPLE\g20.rua looks good

The OpenMP test behaves strangely. ifort test_openmp.f hbcode1.f superlu.lib /Qmkl:sequential /Qopenmp /O3 /Heap-arrays:0 /F:200000000 created test_omp.exe.

I ran.

set OMP_NUM_THREADS=2

test_omp.exe < ..\EXAMPLE\g20.rua

It seems to stall. It printed "before hbcode1" and "after hbcode1" and then just stopped.

/Heap-arrays:0 does not help. I need the stack option /F:200000000 to get it running; otherwise I get a stack overflow.

4. If I use a Ninja build, it stops when building the TESTING library.

In the SuperLU root: mkdir build, cd build, then

cmake -GNinja -DBLAS_VENDOR=Intel_lp64 -D"enable_blaslib_DEFAULT=OFF" -D"CMAKE_BUILD_TYPE=RELEASE" -D"BUILD_SHARED_LIBS=OFF" -D"enable_internal_blaslib=FALSE" -D"XSDK_ENABLE_Fortran=TRUE" ..

Running ninja then fails because it cannot find unistd.h. I can't find a proper unistd.h replacement.


Please consult the documentation on C interoperability and BIND(C).

The heap arrays option is /heap-arrays:0 (not /Heap-arrays:0)

The /F<n> option sets the stack size for the main thread, and not necessarily that of the spawned threads.

You may need to combine /F20000000 with the environment variable OMP_STACKSIZE=200M.

*** However, it is not advisable to have a 200MB stack per thread. Either use heap arrays or, preferably, explicitly allocate the (very) large arrays.

While running with 2 threads may not present a problem now, consider running with 32, 64, 128, ... threads later.
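To put rough numbers on this, here is a small C sketch of the arithmetic (C only because the SuperLU side of this thread is C; the calculation itself is language-neutral). It assumes the sizes from the test program (maxn = 10*1000, maxnz = 100*maxn), that values(maxnz) and b(maxn) are REAL*8 and listed as PRIVATE, and that each thread's private copies live on that thread's stack. The helper names are made up for illustration.

```c
#include <assert.h>

/* Array sizes assumed from the test program: maxn = 10*1000, maxnz = 100*maxn. */
enum { MAXN = 10 * 1000, MAXNZ = 100 * MAXN };

/* Stack bytes one thread needs for its PRIVATE copies of values(maxnz)
   and b(maxn), both REAL*8 (8 bytes per element). */
static long long private_bytes_per_thread(void)
{
    return 8LL * MAXNZ + 8LL * MAXN;
}

/* Total stack reserved for a team when every thread gets stack_mb
   megabytes (e.g. OMP_STACKSIZE=200M), taking 1M as 2^20 bytes. */
static long long team_stack_bytes(int nthreads, long long stack_mb)
{
    assert(nthreads >= 1 && stack_mb >= 1);
    return (long long)nthreads * stack_mb * 1024LL * 1024LL;
}
```

Under these assumptions each thread needs 8,080,000 bytes (about 7.7 MiB) just for its private copies, while a 200M stack per thread reserves 6,400 MiB at 32 threads and 25,600 MiB at 128 threads. Allocating the large arrays on the heap keeps the per-thread stack small no matter how many threads run.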

Jim Dempsey


Without BIND(C), the Fortran standard makes no representations whatsoever about the case or decoration of global symbols. Conventions for these vary across compilers and platforms. Intel Fortran on Windows upcases names, on Linux and Mac downcases. Regularizing this was one of the major goals of the C interoperability features added in Fortran 2003 - with BIND(C), the name is downcased (assuming NAME= not used) and the decoration is the same as the "companion C processor".

Please do NOT use command line switches to change these behaviors - use the standard language features designed for that purpose.
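A simplified C model of the conventions described above may help when reading "unresolved external" errors. It is a sketch under stated assumptions: it covers only three cases (uppercase for Intel Fortran on Windows without BIND(C); lowercase plus one trailing underscore, which is what Intel Fortran typically emits on Linux/macOS; lowercase for BIND(C) without NAME=), it models only the name body, and it ignores platform-level C decoration such as the leading underscore on 32-bit Windows. The decorate function and the enum names are hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <string.h>

/* Simplified external-name conventions (name body only). */
enum name_convention {
    CONV_UPCASE, /* Intel Fortran on Windows, no BIND(C) */
    CONV_ADD_,   /* lowercase + one trailing underscore, typical on Linux/macOS */
    CONV_BIND_C  /* BIND(C) without NAME=: lowercase, no extra suffix */
};

/* Hypothetical helper: writes the symbol a Fortran CALL of `name` would
   reference under `conv` into out (outsz bytes). Platform C decoration,
   such as a leading underscore on 32-bit Windows, is not modelled. */
static void decorate(const char *name, enum name_convention conv,
                     char *out, size_t outsz)
{
    size_t len = strlen(name);
    assert(outsz >= len + 2); /* room for a suffix and the terminator */

    for (size_t i = 0; i < len; ++i) {
        int ch = (unsigned char)name[i];
        out[i] = (char)(conv == CONV_UPCASE ? toupper(ch) : tolower(ch));
    }
    if (conv == CONV_ADD_)
        out[len++] = '_';
    out[len] = '\0';
}
```

For example, decorating "c_fortran_dgssv" with CONV_UPCASE gives C_FORTRAN_DGSSV, the symbol the Windows Fortran call looks for, whereas with a BIND(C) interface the C side only has to export c_fortran_dgssv, with no command-line switches involved.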


Thank you

Jack and Steve

I will try using BIND(C) later in the Fortran subroutine.

Jack, I tried the modified header and it ran successfully. However, the OpenMP test program stalls with more than 3 threads.

The gcc build ran successfully with 16 threads. OMP_STACKSIZE=200M helps a little, but it still gets stuck with 4 threads:

before hbcode1

after hbcode1

nthreads = 8

nsys = 6

n, nnz 400 1920

... stalled.

/heap-arrays:0 did not help. It needs a larger stack; even with /F:20000000 I still got a stack overflow. Maybe you can reproduce the problem.


>>however for the omp program test is stalled in threads > 3.

This is possibly due to the application consuming more virtual memory than the process can (or is permitted to) hold in physical memory. In this situation, the application enters paging mode (page fault, swap some pages out, swap other pages in, continue).

The i5-12450H has 12 hardware threads.

You mention a "new laptop" but do not say how much RAM it has (4GB, 8GB, 16GB).

>>/heap-arrays:0 not helped... still get stack overflow

I suggest you use /heap-arrays:0 /traceback with OMP_STACKSIZE undefined (IOW the default stack). Then run until the stack overflow occurs (adjust the thread count to force the error).

Then in the console window you should see the source file and line number last executed before the error. This should give you an idea of where the error is located.

Second thing to consider: is your code, either intentionally or inadvertently, using nested parallelism?

If so, then with 4 first-level threads, each calling a procedure that enters a nested parallel region, each first-level thread creates (or re-uses, the 2nd time) a 4-thread team. IOW your program now requires 4*4 (16) threads. And if the cause of this nesting happens to be in a recursive procedure, the next recursion will require 4*4*4 threads, the one after that 4*4*4*4 threads, ...

gcc may default to OMP_NESTED=false, whereas ifort/ifx may default to OMP_NESTED=true.
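The growth described above is the team size raised to the number of active nesting levels. A minimal C sketch, assuming every level requests the same team size and using the OpenMP rule that a parallel region nested deeper than the max-active-levels setting is inactive and runs on a single thread. nested_thread_count is a hypothetical helper, not an OpenMP API.

```c
#include <assert.h>

/* Hypothetical helper: total threads when `levels` nested parallel regions
   each request a team of `team` threads. Per the OpenMP rule, a region
   nested deeper than max_active_levels is inactive and runs on one thread,
   so only the first min(levels, max_active_levels) levels multiply. */
static long long nested_thread_count(int team, int levels, int max_active_levels)
{
    assert(team >= 1 && levels >= 0 && max_active_levels >= 0);
    int active = levels < max_active_levels ? levels : max_active_levels;
    long long total = 1;
    for (int level = 0; level < active; ++level)
        total *= team; /* every thread of the previous level forks a new team */
    return total;
}
```

With a team of 4 this gives 4, 16, 64, 256 for 1 to 4 active levels. Limiting active levels to 1 (for example with the OMP_MAX_ACTIVE_LEVELS=1 environment variable or a call to omp_set_max_active_levels(1)) keeps it at 4 regardless of how deep the nesting goes, which is one way to test whether nesting is the culprit.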

**** MKL 2022

Note: MKL internally uses OpenMP.

Typical usage is:

- Single-threaded application: link with the multi-threaded MKL.
- Multi-threaded application: link with the sequential (single-threaded) MKL.

Jim Dempsey


BTW (consider this)

When the threaded MKL library is used .AND. MKL_NUM_THREADS is .NOT. specified, MKL defaults to using the number of available hardware threads (12 in your case). Thus your "4-thread" application could be trying to run with 4*12=48 threads.
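The same arithmetic as a small C sketch, treating 4*12 as a worst case (MKL may reduce its own thread count at run time, so this is an upper bound, not a measurement). It also encodes the linking rule of thumb above. All three helper names are hypothetical.

```c
#include <assert.h>

/* Upper bound on runnable threads when each of app_threads OpenMP threads
   calls a threaded MKL routine that uses mkl_threads threads. */
static int total_threads(int app_threads, int mkl_threads)
{
    assert(app_threads >= 1 && mkl_threads >= 1);
    return app_threads * mkl_threads;
}

/* Ratio of runnable threads to hardware threads; above 1 means oversubscribed. */
static double oversubscription(int app_threads, int mkl_threads, int hw_threads)
{
    assert(hw_threads >= 1);
    return (double)total_threads(app_threads, mkl_threads) / hw_threads;
}

/* Rule of thumb: a multi-threaded application links the sequential MKL
   (1 thread per call); a single-threaded one lets MKL use the hardware threads. */
static int recommended_mkl_threads(int app_threads, int hw_threads)
{
    assert(app_threads >= 1 && hw_threads >= 1);
    return app_threads > 1 ? 1 : hw_threads;
}
```

For 4 application threads on 12 hardware threads, threaded MKL gives 48 threads (4x oversubscribed), while the sequential MKL gives 4 threads, one third of the machine.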

Jim Dempsey


I set MKL_NUM_THREADS=4 and OMP_NUM_THREADS=4; it still does not work and stalls.

I compile with: ifort test_omp.F hbcode1.f superlu.lib /Qmkl:sequential /Qopenmp /heap-arrays:0 /traceback /F:2000000

Here hbcode1.f is the matrix reader (it reads the matrix from a text file), and test_omp.F is the SuperLU test program that does the calculation. It calls c_fortran_dgssv.c to use the SuperLU library.

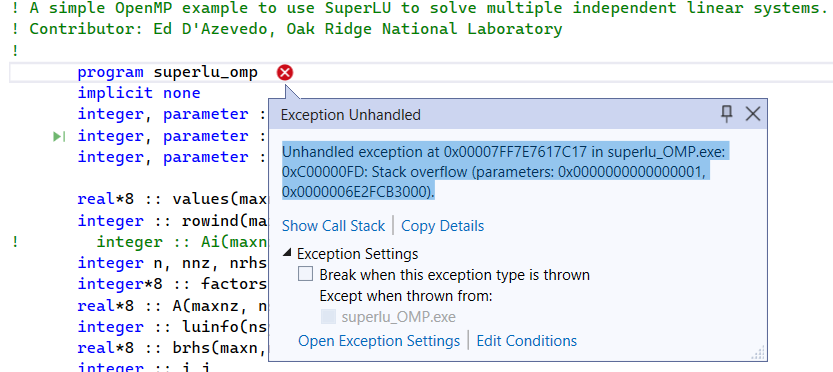

forrtl: severe (170): Program Exception - stack overflow

Image PC Routine Line Source

test_omp.exe 00007FF75A7C7A07 Unknown Unknown Unknown

test_omp.exe 00007FF75A70100D MAIN__ 4 test_omp.F

test_omp.exe 00007FF75A7B584E Unknown Unknown Unknown

test_omp.exe 00007FF75A7C7C10 Unknown Unknown Unknown

KERNEL32.DLL 00007FFAD768244D Unknown Unknown Unknown

ntdll.dll 00007FFAD868DFB8 Unknown Unknown Unknown

It looks like the stack overflow happens in the main program itself?

The SuperLU version is Release 5.3.0 from xiaoyeli/superlu on GitHub.

And here is test_omp.F:

! A simple OpenMP example to use SuperLU to solve multiple independent linear systems.

! Contributor: Ed D'Azevedo, Oak Ridge National Laboratory

!

program tslu_omp

implicit none

integer, parameter :: maxn = 10*1000

integer, parameter :: maxnz = 100*maxn

integer, parameter :: nsys = 6 !! 64

real*8 :: values(maxnz), b(maxn)

integer :: rowind(maxnz), colptr(maxn)

! integer :: Ai(maxnz, nsys), Aj(maxn, nsys) ! Sherry added

integer n, nnz, nrhs, ldb, info, iopt

integer*8 :: factors, lufactors(nsys)

real*8 :: A(maxnz, nsys)

integer :: luinfo(nsys)

real*8 :: brhs(maxn,nsys)

integer :: i,j

real*8 :: err, maxerr

integer :: nthread

!$ integer, external :: omp_get_num_threads

! --------------

! read in matrix

! --------------

print*, 'before hbcode1'

call hbcode1(n,n,nnz,values,rowind,colptr)

print*, 'after hbcode1'

nthread = 1

!$omp parallel

!$omp master

!$ nthread = omp_get_num_threads()

!$omp end master

!$omp end parallel

write(*,*) 'nthreads = ',nthread

write(*,*) 'nsys = ',nsys

write(*,*) 'n, nnz ', n, nnz

!$omp parallel do private(j)

do j=1,nsys

A(1:nnz,j) = values(1:nnz)

enddo

nrhs = 1

ldb = n

!$omp parallel do private(j)

do j=1,nsys

brhs(:,j) = j

enddo

! ---------------------

! perform factorization

! ---------------------

iopt = 1

!$omp parallel do private(j,values,b,info,factors)

do j=1,nsys

!$omp parallel workshare

values(1:nnz) = A(1:nnz,j)

b(1:n) = brhs(1:n,j)

!$omp end parallel workshare

info = 0

call c_fortran_dgssv( iopt,n,nnz, nrhs, values, rowind, colptr, &

& b, ldb, factors, info )

!$omp parallel workshare

A(1:nnz,j) = values(1:nnz)

brhs(1:n,j) = b(1:n)

!$omp end parallel workshare

luinfo(j) = info

lufactors(j) = factors

enddo

do j=1,nsys

info = luinfo(j)

if (info.ne.0) then

write(*,9010) j, info

9010 format(' factorization of j=',i7,' returns info= ',i7)

endif

enddo

! ---------------------------------------

! solve the system using existing factors

! ---------------------------------------

iopt = 2

!$omp parallel do private(j,b,values,factors,info)

do j=1,nsys

factors = lufactors(j)

values(1:nnz) = A(1:nnz,j)

info = 0

b(1:n) = brhs(1:n,j)

call c_fortran_dgssv( iopt,n,nnz,nrhs,values,rowind,colptr, &

& b,ldb,factors,info )

lufactors(j) = factors

luinfo(j) = info

brhs(1:n,j) = b(1:n)

enddo

! ------------

! simple check

! ------------

err = 0

maxerr = 0

do j=2,nsys

do i=1,n

err = abs(brhs(i,1)*j - brhs(i,j))

maxerr = max(maxerr,err)

enddo

enddo

write(*,*) 'max error = ', maxerr

! -------------

! free storage

! -------------

iopt = 3

!$omp parallel do private(j)

do j=1,nsys

call c_fortran_dgssv(iopt,n,nnz,nrhs,A(:,j),rowind,colptr, &

& brhs(:,j), ldb, lufactors(j), luinfo(j) )

enddo

stop

end program


Did you add the environment variables:

OMP_STACKSIZE=200M

KMP_STACKSIZE=200M

(though the KMP_STACKSIZE might not be needed)

Coding issues:

!$omp parallel do private(j,values,b,info,factors)

do j=1,nsys

!$omp parallel workshare

values(1:nnz) = A(1:nnz,j)

b(1:n) = brhs(1:n,j)

!$omp end parallel workshare

info = 0

call c_fortran_dgssv( iopt,n,nnz, nrhs, values, rowind, colptr, &

& b, ldb, factors, info )

!$omp parallel workshare

A(1:nnz,j) = values(1:nnz)

brhs(1:n,j) = b(1:n)

!$omp end parallel workshare

1) The two "!$omp parallel workshare" directives inside the loop contain "parallel". This instructs the compiler to generate a nested parallel region. If workshare were warranted (it is not), you would use "!$omp workshare" without the "parallel". I suspect that before you added the "!$omp parallel do ..." you had the "!$omp parallel workshare".

2) Because values and b are private, and j, the index of the parallel do, selects the slices of A and brhs, there is no need for any workshare construct: the work is already divided.

3) Non-allocatable local arrays in the main PROGRAM are treated as SAVE (static); since Fortran 2008 the standard makes variables declared in a main program implicitly SAVE. In other procedures such arrays are typically placed on the stack.
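For comparison, the corrected loop structure can be sketched in C (a language-neutral illustration of points 1 and 2, not the suggested Fortran code): one worksharing loop over the independent systems and no nested parallel region inside it. toy_solve is a hypothetical stand-in for c_fortran_dgssv that only divides b by the first matrix value, and the per-iteration malloc plays the role of the allocatable private arrays, so the large buffers live on the heap rather than on each thread's stack.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical stand-in for c_fortran_dgssv: divides b by values[0]. */
static void toy_solve(int n, const double *values, double *b)
{
    for (int i = 0; i < n; ++i)
        b[i] /= values[0];
}

/* A is nnz x nsys and brhs is n x nsys, both column-major as in Fortran.
   Returns the number of systems that could not get their work buffers. */
static int solve_all(int nsys, int n, int nnz, const double *A, double *brhs)
{
    assert(nsys >= 0 && n > 0 && nnz > 0);
    int failures = 0;

    /* One worksharing loop over independent systems; no nested
       parallel region inside the body. Ignored without OpenMP. */
    #pragma omp parallel for reduction(+ : failures)
    for (int j = 0; j < nsys; ++j) {
        /* Per-iteration heap buffers act like the private allocatable
           arrays: nothing large is placed on the thread's stack. */
        double *values = malloc((size_t)nnz * sizeof *values);
        double *b = malloc((size_t)n * sizeof *b);
        if (values != NULL && b != NULL) {
            memcpy(values, A + (size_t)j * nnz, (size_t)nnz * sizeof *values);
            memcpy(b, brhs + (size_t)j * n, (size_t)n * sizeof *b);
            toy_solve(n, values, b);
            memcpy(brhs + (size_t)j * n, b, (size_t)n * sizeof *b);
        } else {
            ++failures;
        }
        free(values);
        free(b);
    }
    return failures;
}
```

Compiled without OpenMP the pragma is ignored and the loop runs serially with the same result; with OpenMP enabled each thread handles whole systems, which is the division of work described in point 2.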

The untested suggested code is as follows:

! A simple OpenMP example to use SuperLU to solve multiple independent linear systems.

! Contributor: Ed D'Azevedo, Oak Ridge National Laboratory

!

program tslu_omp

implicit none

integer, parameter :: maxn = 10*1000

integer, parameter :: maxnz = 100*maxn

integer, parameter :: nsys = 6 !! 64

! convert the arrays using maxn and/or maxnz from static/stack to allocatables

real*8, allocatable :: values(:), b(:) ! allocate(values(maxnz), b(maxn))

integer, allocatable :: rowind(:), colptr(:) ! allocate(rowind(maxnz), colptr(maxn))

! integer :: Ai(:, :), Aj(:,

Note: when pasting a code sample, click the "..." icon to open the additional icons. Then click the </> icon to insert marked-up code/text, select Fortran markup, and paste in the code (edit if necessary).

Jim Dempsey


Thanks, Jim Dempsey.

The revised code worked. What was required:

1. Compile with "ifort superlu_OMP.f90 hbcode1.f superlu.lib /Qmkl:sequential /Qopenmp /heap-arrays:0 /F20000000".

The stack size must be set here with /F20000000; otherwise there is still a stack overflow, even with OMP_NUM_THREADS=1.

The allocatable variables in the modified code remove the stack overflow problem.

2. OMP_STACKSIZE=200M or KMP_STACKSIZE=200M must be set.

3. As you said, "!$omp parallel workshare" was the main problem; that is why it stalled or ended without a result.

Note: to test, set the OMP_NUM_THREADS environment variable to any value from 1 up to the maximum number of threads.

Ary.


By the way... It is required/presumed that c_fortran_dgssv be thread-safe
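What thread safety means here can be sketched with a toy example. This is hypothetical C, not SuperLU's implementation: it follows the same iopt protocol as the test program (1 = factor, 2 = solve, 3 = free) and keeps all per-system state in a heap object whose address travels through an integer handle, the role the INTEGER*8 factors variable appears to play in the test program. Because toy_dgssv uses no static or global data, concurrent calls with different handles do not interfere; hidden shared state in a real wrapper, or in anything it calls, would break that.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* Per-system state; one heap object per handle, nothing shared. */
struct toy_factors {
    double pivot;
};

/* Hypothetical 1x1 "solver": iopt 1 = factor a, 2 = solve for *b, 3 = free.
   The state's address is stored in *handle (an integer wide enough for a
   pointer). No static or global variables are used. */
static void toy_dgssv(int iopt, double a, double *b, intptr_t *handle, int *info)
{
    struct toy_factors *f;
    *info = 0;
    switch (iopt) {
    case 1: /* factor: allocate state owned by this handle only */
        if (a == 0.0) { *info = 1; return; } /* singular 1x1 matrix */
        f = malloc(sizeof *f);
        if (f == NULL) { *info = -1; return; }
        f->pivot = a;
        *handle = (intptr_t)(void *)f;
        break;
    case 2: /* solve with the factors stored behind this handle */
        f = (struct toy_factors *)(void *)*handle;
        assert(f != NULL && b != NULL);
        *b /= f->pivot;
        break;
    case 3: /* release the state and clear the handle */
        free((void *)*handle);
        *handle = 0;
        break;
    default:
        *info = -2; /* unknown iopt */
    }
}
```

Each loop iteration in the OpenMP test owns its own handle (lufactors(j)), which is what makes running the iterations in parallel safe, provided the real wrapper is equally free of shared state.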

Jim Dempsey


I just found out how to use the slu_Cnames.h that supports multiple platforms; it selects the naming convention with

#if (F77_CALL_C == UPCASE)

...

#endif

Just go to SRC, build the static SuperLU library, and also copy c_fortran_dgssv.c and the other c_fortran wrappers into SRC:

icl *.c /c /QaxCORE-AVX2 -DF77_CALL_C=UPCASE /Ox /Qpar

lib *.obj /OUT:libsuperlu.lib

This avoids the libucrt conflict I got when using CMake.

With UPCASE, calling c_fortran_dgssv from Intel Fortran works.
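The mechanism can be sketched in C. This is a simplified model of the selection described in the post, not a copy of SuperLU's slu_Cnames.h: the numeric values of ADD_ and UPCASE are assumptions, and the one-argument wrapper is a hypothetical reduced signature. The wrapper is written once under its lowercase_ name and the preprocessor renames it when the build passes -DF77_CALL_C=UPCASE. In this model, -DUPCASE on its own only (re)defines one of the selector constants without selecting it, which would be consistent with that switch having no effect.

```c
#include <assert.h>
#include <string.h>

/* Selector constants; the values are assumptions for this sketch. */
#define ADD_    0
#define UPCASE  3

/* Default: lowercase name with a trailing underscore, unless the build
   selects another convention, e.g. with -DF77_CALL_C=UPCASE. */
#ifndef F77_CALL_C
#define F77_CALL_C ADD_
#endif

#if (F77_CALL_C == UPCASE)
#define c_fortran_dgssv_ C_FORTRAN_DGSSV
#endif

/* Two-level stringification so the rename above is applied first. */
#define STR_(x) #x
#define STR(x) STR_(x)

/* Hypothetical reduced wrapper: written once under the lowercase_ name;
   the preprocessor decides which symbol the object file exports. */
void c_fortran_dgssv_(int *info)
{
    assert(info != NULL);
    *info = 0;
}

/* Name the wrapper actually has after preprocessing. */
static const char *wrapper_symbol(void)
{
    return STR(c_fortran_dgssv_);
}
```

Built without extra flags, wrapper_symbol() returns "c_fortran_dgssv_"; built with -DF77_CALL_C=UPCASE it returns "C_FORTRAN_DGSSV", the uppercase name Intel Fortran on Windows calls.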

In the meantime, perhaps someone could write a Fortran c_fortran_dgssv.f90 interface that uses BIND(C).
