Hello,

I observed that my program, which works fine with Open MPI, hangs on MPI_Iprobe with Intel MPI. I narrowed the problem down to the use of MPI_ANY_SOURCE. When I loop over all communicator ranks instead of using MPI_ANY_SOURCE, my program works!

Here is the minimal example:

/*
mpicc -Wall -std=c11 -o test-mpi mpi-probe-any-source-bug.c && mpirun -np 2 ./test-mpi
*/
#include "mpi.h"
#include <stdio.h>
#include <unistd.h>

void
testIprobe()
{
    int rank = 0;
    int communicatorSize = 0;
    MPI_Comm_rank( MPI_COMM_WORLD, &rank );
    MPI_Comm_size( MPI_COMM_WORLD, &communicatorSize );

    if ( rank > 0 ) {
        const int message = rank;
        const int tag = rank;
        MPI_Send( &message, 1, MPI_INT, /* target rank */ 0, tag, MPI_COMM_WORLD );
        printf( "Sending message %i with tag : %i\n", message, tag );
    } else if ( rank == 0 ) {
        int flag = 0;
        MPI_Status status;
        while ( flag == 0 ) {
            int rc;
            //rc = MPI_Iprobe( 1, /* tag */ 1, MPI_COMM_WORLD, &flag, &status ); /* WORKS after <4s */
            //rc = MPI_Iprobe( 1, MPI_ANY_TAG, MPI_COMM_WORLD, &flag, &status ); /* WORKS after <4s */
            //rc = MPI_Iprobe( MPI_ANY_SOURCE, /* tag */ 1, MPI_COMM_WORLD, &flag, &status ); /* WILL NEVER RETURN TRUE! */
            rc = MPI_Iprobe( MPI_ANY_SOURCE, MPI_ANY_TAG, MPI_COMM_WORLD, &flag, &status ); /* WILL NEVER RETURN TRUE! */
            printf( "After MPI_Iprobe, flag = %d, rc = %i\n", flag, rc );
            sleep( 1 );
        }

        printf( "Received from rank %d, with tag %d and error code %d.\n",
                status.MPI_SOURCE,
                status.MPI_TAG,
                status.MPI_ERROR );

        int message;
        MPI_Recv( &message, 1, MPI_INT, status.MPI_SOURCE, status.MPI_TAG, MPI_COMM_WORLD, MPI_STATUS_IGNORE );
    }
}

int main( int argc, char *argv[] )
{
    MPI_Init( &argc, &argv );

    char mpiVersion[MPI_MAX_LIBRARY_VERSION_STRING];
    int mpiVersionSize = 0;
    MPI_Get_library_version(mpiVersion, &mpiVersionSize);

    int rank = 0;
    MPI_Comm_rank( MPI_COMM_WORLD, &rank );
    if ( rank == 0 ) {
        printf( "MPI Version: %s\n", mpiVersion );
    }

    MPI_Barrier( MPI_COMM_WORLD );
    testIprobe();
    MPI_Finalize();
}

I tested it with every Intel MPI version installed on an HPC system, and it worked with all of them:

- Intel(R) MPI Library 2018 Update 1 for Linux* OS

- Intel(R) MPI Library 2018 Update 3 for Linux* OS

- Intel(R) MPI Library 2018 Update 4 for Linux* OS

- Intel(R) MPI Library 2018 Update 5 for Linux* OS

- Intel(R) MPI Library 2019 Update 7 for Linux* OS

Example output for a working run:

After MPI_Iprobe, flag = 0, rc = 0

Sending message 1 with tag : 1

After MPI_Iprobe, flag = 1, rc = 0

Received from rank 1, with tag 1 and error code 0.

I can only reproduce the bug on the locally installed version:

- Intel(R) MPI Library 2021.2 for Linux* OS

Printing roughly one line per second because of the sleep(1), this is the output after ~20s:

MPI Version: Intel(R) MPI Library 2021.2 for Linux* OS

After MPI_Iprobe, flag = 0, rc = 0

Sending message 1 with tag : 1

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

After MPI_Iprobe, flag = 0, rc = 0

^C[mpiexec@host] Sending Ctrl-C to processes as requested

[mpiexec@host] Press Ctrl-C again to force abort

After MPI_Iprobe, flag = 0, rc = 0

^C

This minimal example finishes successfully after replacing MPI_ANY_SOURCE with rank 1, as noted in the commented-out code lines.

As mentioned, it also works with MPI_ANY_SOURCE when compiled with:

- Open MPI v4.0.3, package: Debian OpenMPI, ident: 4.0.3, repo rev: v4.0.3, Mar 03, 2020

My local system runs Ubuntu 20.10. The HPC system runs: Linux 3.10.0 el7 x86_64.
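A minimal sketch of the workaround described at the start of this post: probe each rank explicitly instead of passing MPI_ANY_SOURCE. It is not the code from the original program. The names probe_each_rank, probe_one_fn, probe_flag_table, probe_mpi_rank, struct mpi_probe_ctx, and the USE_MPI macro are all made up for illustration. The round-robin cursor is an added assumption so that low ranks are not always served first; a plain loop from 0 to size-1 would match the description just as well. The probe is passed in as a callback only so that the scanning logic can be compiled and checked without an MPI installation.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical callback: returns nonzero if a message from `source` is pending. */
typedef int (*probe_one_fn)(int source, void *ctx);

/*
 * Probe ranks one at a time instead of using MPI_ANY_SOURCE.
 * Scanning starts at *cursor and wraps around. Returns the first rank with a
 * pending message and advances *cursor past it, or returns -1 if no rank
 * has a pending message.
 */
int probe_each_rank(int comm_size, int *cursor, probe_one_fn probe_one, void *ctx)
{
    assert(cursor != NULL && comm_size > 0);
    assert(*cursor >= 0 && *cursor < comm_size);

    for (int i = 0; i < comm_size; ++i) {
        const int source = (*cursor + i) % comm_size;
        if (probe_one(source, ctx)) {
            *cursor = (source + 1) % comm_size;
            return source;
        }
    }
    return -1;
}

/* Stand-in probe for a dry run without MPI: ctx points to an array of
 * per-rank "message pending" flags. */
int probe_flag_table(int source, void *ctx)
{
    const int *pending = ctx;
    return pending[source];
}

#ifdef USE_MPI /* hypothetical macro; define it when building with mpicc */
#include <mpi.h>

struct mpi_probe_ctx {
    MPI_Comm comm;
    int tag;           /* a specific tag or MPI_ANY_TAG */
    MPI_Status status; /* filled in by the probe that matched */
};

/* MPI-backed probe: one MPI_Iprobe call with an explicit source rank. */
int probe_mpi_rank(int source, void *ctx)
{
    struct mpi_probe_ctx *c = ctx;
    int flag = 0;
    MPI_Iprobe(source, c->tag, c->comm, &flag, &c->status);
    return flag;
}
#endif
```

On rank 0, the polling loop of the minimal example could call probe_each_rank( communicatorSize, &cursor, probe_mpi_rank, &ctx ) once per iteration and, when the result is >= 0, receive using ctx.status.MPI_SOURCE and ctx.status.MPI_TAG. Each call issues up to communicatorSize probes, so this is a workaround for small communicators rather than a general replacement for MPI_ANY_SOURCE.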

Hi,

Thanks for reaching out to us.

We tried running the same sample code at our end but we didn't face any such behavior.

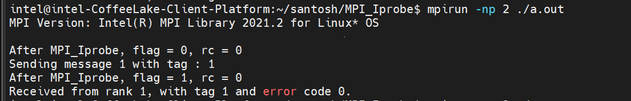

Please find the below screenshot:

So could you please provide us the command that you used to compile & run the sample using Intel MPI?

Thanks & Regards,

Santosh

My steps were as follows:

wget https://registrationcenter-download.intel.com/akdlm/irc_nas/17764/l_HPCKit_p_2021.2.0.2997_offline.sh

bash l_HPCKit_p_2021.2.0.2997_offline.sh -a -s --eula=accept --install-dir=/opt/intel/oneapi/ --intel-sw-improvement-program-consent=decline --components=intel.oneapi.lin.mpi.devel

source /opt/intel/oneapi/mpi/latest/env/vars.sh -i_mpi_library_kind=debug

mpicc -Wall --std=c11 -o test-mpi test-mpi.c

mpirun -np 2 ./test-mpi

The installation command is not literally what I ran, since I installed it manually with the CLI, but I'm pretty sure it matches the effective configuration I used.

Hi,

>>"mpicc -Wall --std=c11 -o test-mpi test-mpi.c"

We see that you are using the mpicc compiler wrapper.

Please try Intel's compiler wrapper "mpiicc" and let us know if you still face the issue.

An example command:

mpiicc -o test-mpi test-mpi.c

Thanks & Regards,

Santosh

Using mpiicc (icc (ICC) 2021.2.0 20210228) changes nothing.

These were my steps:

bash l_HPCKit_p_2021.2.0.2997_offline.sh -a -s --eula=accept --install-dir="/opt/intel-oneapi" --intel-sw-improvement-program-consent=decline --components=intel.oneapi.lin.mpi.devel:intel.oneapi.lin.dpcpp-cpp-compiler-pro

source "/opt/intel-oneapi/mpi/latest/env/vars.sh"

source "/opt/intel-oneapi/compiler/latest/env/vars.sh"

mpiicc --version

icc (ICC) 2021.2.0 20210228

Copyright (C) 1985-2021 Intel Corporation. All rights reserved.

mpiicc -Wall -o test-mpi test-mpi.c && mpirun -np 2 ./test-mpi

I also tested the same steps with the same Intel MPI version (I used the same installer) on some other systems:

- Ubuntu 20.04 x86_64 using AMD Ryzen 9 3900X: BUG with mpicc (gcc 8.4.0) and mpiicc

- Ubuntu 20.04 using Lenovo T14s: BUG with mpicc and mpiicc

- Ubuntu 20.04 using Lenovo T14 AMD Ryzen 7 PRO 4750U: BUG with mpicc and mpiicc

- Linux 3.10.0 el7 using Intel(R) Xeon(R) CPU E5-2680 v3: WORKS with mpicc (gcc Red Hat 4.8.5) and mpicc (gcc 10.2.0) and mpiicc

I don't want to state the obvious difference between the systems that show the bug and the one that works. But even if the hardware is a factor, it shouldn't lead to such weird bugs. There should be some sanity checks in the installer or somewhere else. This cost me quite some time. Then again, I did see the warning "Your system is not supported" or something similar in the installer, but I did not expect such weirdly specific problems...

Also, it would be interesting to actually know whether the hardware is the problem, instead of just assuming it, and what exactly goes wrong! I would be really glad if this got a bugfix anyway.

The bug also does not appear with:

- Linux 3.10.0 el7 using AMD EPYC 7702: WORKS with mpicc (GCC 10.2.0)

“Thank you for your inquiry. We offer support for hardware platforms that the Intel® oneAPI product supports. These platforms include those that are part of the Intel® Core™ processor family or higher, the Intel® Xeon® processor family, the Intel® Xeon® Scalable processor family, and others which can be found here – Intel® oneAPI Base Toolkit System Requirements, Intel® oneAPI HPC Toolkit System Requirements, Intel® oneAPI IoT Toolkit System Requirements

If you wish to use oneAPI on hardware that is not listed at one of the sites above, we encourage you to visit and contribute to the open oneAPI specification - https://www.oneapi.io/spec/”

This thread will no longer be monitored by Intel. If you need any more information, please post a new question.
