
Hello everyone,

The error is as follows:

Abort(1094543) on node 63 (rank 63 in comm 0): Fatal error in PMPI_Init: Other MPI error, error stack:

MPIR_Init_thread(649)......:

MPID_Init(863).............:

MPIDI_NM_mpi_init_hook(705): OFI addrinfo() failed (ofi_init.h:705:MPIDI_NM_mpi_init_hook:No data available)

forrtl: error (78): process killed (SIGTERM)

Image PC Routine Line Source

cp2k.popt 000000000C8CF8FB Unknown Unknown Unknown

libpthread-2.17.s 00002AC15DB4E5D0 Unknown Unknown Unknown

libpthread-2.17.s 00002AC15DB4D680 write Unknown Unknown

libibverbs.so.1.0 00002AC26A741E29 ibv_exp_cmd_creat Unknown Unknown

libmlx4-rdmav2.so 00002AC26B255355 Unknown Unknown Unknown

libmlx4-rdmav2.so 00002AC26B254199 Unknown Unknown Unknown

libibverbs.so.1.0 00002AC26A7401C3 ibv_create_qp Unknown Unknown

libverbs-fi.so 00002AC26A2E2017 Unknown Unknown Unknown

libverbs-fi.so 00002AC26A2E2CA0 Unknown Unknown Unknown

libverbs-fi.so 00002AC26A2D8FD1 fi_prov_ini Unknown Unknown

libfabric.so.1 00002AC1698A1189 Unknown Unknown Unknown

libfabric.so.1 00002AC1698A1610 Unknown Unknown Unknown

libfabric.so.1 00002AC1698A22AB fi_getinfo Unknown Unknown

libfabric.so.1 00002AC1698A6766 fi_getinfo Unknown Unknown

libmpi.so.12.0.0 00002AC15EF18EB6 Unknown Unknown Unknown

libmpi.so.12.0.0 00002AC15EF0DD1C MPI_Init Unknown Unknown

libmpifort.so.12. 00002AC15E65ECFB MPI_INIT Unknown Unknown

cp2k.popt 000000000366B30E message_passing_m 747 message_passing.F

cp2k.popt 000000000139B7BA f77_interface_mp_ 234 f77_interface.F

cp2k.popt 000000000043CCA5 MAIN__ 198 cp2k.F

The attachment has the debug details.


Hi,

It looks like you were using the sockets provider. Could you please try the TCP provider? You can set FI_PROVIDER=tcp to select it.

e.g. export FI_PROVIDER=tcp
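If you would rather not change the whole shell session, the variable can be scoped to a single launch. The following is only a minimal sketch: the helper name `run_with_provider` and the `./my_app` placeholder are hypothetical, and `FI_PROVIDER` is the libfabric variable mentioned above.

```shell
#!/bin/sh
# Hypothetical helper: run one command with FI_PROVIDER set only for that
# command, leaving the environment of the calling shell untouched.
run_with_provider() {
    provider="$1"
    shift
    env FI_PROVIDER="$provider" "$@"
}

# Usage (assumes mpirun is on PATH; ./my_app is a placeholder):
#   run_with_provider tcp mpirun -n 4 ./my_app
```

This makes it easy to try several providers one after another without a stale `export` from an earlier attempt influencing the next run.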

Please provide a sample reproducer so we can test it on our end.

Also, please share the mpirun command line you used and your environment details (interconnect, total number of nodes, OS).

Regards

Prasanth


Thanks a lot for the reply.

My system is CentOS 7.6. The cluster has 64 compute nodes in total, and I am running on 2 nodes in parallel. I run CP2K with the command: mpirun -n 128 cp2k.popt -i cp2k.inp 1>cp2k.out 2>cp2k.err

This is the output of fi_info:

[root@k0203 ~]# fi_info

provider: verbs;ofi_rxm

fabric: IB-0x18338657682652659712

domain: mlx4_0

version: 1.0

type: FI_EP_RDM

protocol: FI_PROTO_RXM

provider: verbs

fabric: IB-0x18338657682652659712

domain: mlx4_0

version: 1.0

type: FI_EP_MSG

protocol: FI_PROTO_RDMA_CM_IB_RC

provider: verbs

fabric: IB-0x18338657682652659712

domain: mlx4_0-rdm

version: 1.0

type: FI_EP_RDM

protocol: FI_PROTO_IB_RDM

provider: verbs

fabric: IB-0x18338657682652659712

domain: mlx4_0-dgram

version: 1.0

type: FI_EP_DGRAM

protocol: FI_PROTO_IB_UD

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: UDP

fabric: UDP-IP

domain: udp

version: 1.1

type: FI_EP_DGRAM

protocol: FI_PROTO_UDP

provider: sockets

fabric: 172.25.0.0/16

domain: eno1

version: 2.0

type: FI_EP_MSG

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 172.25.0.0/16

domain: eno1

version: 2.0

type: FI_EP_DGRAM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 172.25.0.0/16

domain: eno1

version: 2.0

type: FI_EP_RDM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 10.25.0.0/16

domain: ib0

version: 2.0

type: FI_EP_MSG

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 10.25.0.0/16

domain: ib0

version: 2.0

type: FI_EP_DGRAM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 10.25.0.0/16

domain: ib0

version: 2.0

type: FI_EP_RDM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 192.168.122.0/24

domain: virbr0

version: 2.0

type: FI_EP_MSG

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 192.168.122.0/24

domain: virbr0

version: 2.0

type: FI_EP_DGRAM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 192.168.122.0/24

domain: virbr0

version: 2.0

type: FI_EP_RDM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 127.0.0.0/8

domain: lo

version: 2.0

type: FI_EP_MSG

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 127.0.0.0/8

domain: lo

version: 2.0

type: FI_EP_DGRAM

protocol: FI_PROTO_SOCK_TCP

provider: sockets

fabric: 127.0.0.0/8

domain: lo

version: 2.0

type: FI_EP_RDM

protocol: FI_PROTO_SOCK_TCP

provider: shm

fabric: shm

domain: shm

version: 1.0

type: FI_EP_RDM

protocol: FI_PROTO_SHM
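With a listing this long, it can help to reduce it to the distinct provider names and to test for one provider in particular. This is a minimal sketch that assumes the `provider:` line format shown above; the function names `list_providers` and `has_provider` are hypothetical.

```shell
#!/bin/sh
# Print each distinct provider name found in fi_info output read from stdin.
list_providers() {
    awk '$1 == "provider:" { print $2 }' | LC_ALL=C sort -u
}

# Succeed if the named provider appears in fi_info output read from stdin.
# A layered entry such as "verbs;ofi_rxm" matches either of its components.
has_provider() {
    list_providers | tr ';' '\n' | grep -Fqx "$1"
}

# Usage (assumes fi_info is on PATH):
#   fi_info | list_providers
#   fi_info | has_provider verbs && echo "verbs is listed"
```

For the listing above, `list_providers` would print UDP, shm, sockets, verbs and verbs;ofi_rxm, one per line.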


Hi

With export FI_PROVIDER=tcp the job runs normally, but performance is significantly worse. I want to use RDMA, so I tried export I_MPI_DEVICE=rdma:ofa-v2-ib0, and the error above occurred.


Hi,

From the fi_info output, we can see that you have mlx and verbs as providers, which suggests you are using InfiniBand.

Please set FI_PROVIDER=mlx and I_MPI_FABRICS=shm:ofi for better performance over RDMA.

Let us know if you face any issues.

Regards

Prasanth


Hi, happy New Year.

Following your suggestion, I added export FI_PROVIDER=mlx and export I_MPI_FABRICS=shm:ofi, but a new error occurred:

"Abort(1094543) on node 45 (rank 45 in comm 0): Fatal error in PMPI_Init: Other MPI error, error stack:

MPIR_Init_thread(649)......:

MPID_Init(863).............:

MPIDI_NM_mpi_init_hook(705): OFI addrinfo() failed (ofi_init.h:705:MPIDI_NM_mpi_init_hook:No data available)

"


Hi,

Could you please set FI_LOG_LEVEL=debug and I_MPI_DEBUG=10 and send us the debug logs?

e.g. export FI_LOG_LEVEL=debug

export I_MPI_DEBUG=10
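Debug output at this level can be large, so it is convenient to capture it in a file that can be attached. A minimal sketch, in which the helper name `run_with_debug` and the log file name are hypothetical:

```shell
#!/bin/sh
# Hypothetical helper: run a command with both debug variables set,
# saving stdout and stderr together in the given log file.
run_with_debug() {
    logfile="$1"
    shift
    env FI_LOG_LEVEL=debug I_MPI_DEBUG=10 "$@" > "$logfile" 2>&1
}

# Usage (assumes mpirun is on PATH; command taken from earlier in the thread):
#   run_with_debug debug.log mpirun -n 128 cp2k.popt -i cp2k.inp
```

Because the variables are set only for the wrapped command, later runs in the same shell are not slowed down by debug logging.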

Regards

Prasanth


Hi

This is the log with debug mode enabled


Hi,

You might be using older InfiniBand hardware. Please check whether all the transports that mlx requires are installed.

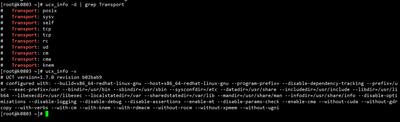

Please refer to this article (Improve Performance and Stability with Intel® MPI Library on...) for the transports that mlx requires. Run ucx_info -d | grep Transport and let us know which transports you have.

The minimum required UCX version is 1.4. Please check your UCX version with ucx_info -v.
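The version comparison itself can be scripted. This is a minimal sketch with a hypothetical helper name, `version_at_least`. The thread does not show the exact output format of `ucx_info -v`, so the sketch takes the version string as an argument instead of parsing it.

```shell
#!/bin/sh
# Succeed if version $1 (e.g. "1.8.0") is at least version $2 (e.g. "1.4").
# Compares major, minor, and patch numerically; missing fields count as 0.
version_at_least() {
    awk -v have="$1" -v want="$2" 'BEGIN {
        split(have, h, ".")
        split(want, w, ".")
        for (i = 1; i <= 3; i++) {
            a = h[i] + 0
            b = w[i] + 0
            if (a > b) exit 0
            if (a < b) exit 1
        }
        exit 0
    }'
}

# Usage: copy the version reported by ucx_info -v into the first argument.
#   version_at_least 1.8.0 1.4 && echo "UCX is new enough"
```

The comparison is numeric per field, so 1.10 is correctly treated as newer than 1.4, which a plain string comparison would get wrong.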

Also, if possible, please update to the latest version of Intel MPI (2019 Update 9).

Regards

Prasanth


Hi

I'm sorry for the late reply; I was busy with work.

These are the results I found.


Hi,

From the transports available on your system, we can see that the dc transport is missing.

You can follow the steps mentioned in this article - Improve Performance and Stability with Intel® MPI Library on.... Let us know if you face any errors.

Do you see the same error with the verbs provider? (Check with FI_PROVIDER=verbs.)
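A check like the one for the missing dc transport can be automated. This is a minimal sketch under two assumptions: that `ucx_info -d` prints each transport on a line ending in `Transport: <name>`, and that the names passed as arguments match the printed names exactly. The helper name `missing_transports` is hypothetical, and the list in the usage line is illustrative rather than an official requirement list.

```shell
#!/bin/sh
# Print each required transport (given as arguments) that does not appear
# in "ucx_info -d" output read from stdin.
# Assumption: transports appear on lines of the form "...Transport: <name>".
missing_transports() {
    available=$(awk '/Transport:/ { print $NF }' | LC_ALL=C sort -u)
    for t in "$@"; do
        printf '%s\n' "$available" | grep -Fqx "$t" || printf '%s\n' "$t"
    done
}

# Usage (assumes ucx_info is on PATH; the names listed are illustrative):
#   ucx_info -d | missing_transports dc rc ud
```

An empty result means every name passed on the command line was found in the output.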

Regards

Prasanth


Hi

Thank you very much. I will run more detailed tests and let you know if there is any progress.


Hi,

Let us know if the given workaround in the above-mentioned article works for you.

Regards

Prasanth


Hi

No error is reported with FI_PROVIDER=verbs. Thank you very much.

However, cross-node efficiency is very low, and I am still looking for the cause.


Hi,

Have you tried the mlx provider with the given workaround? mlx would be recommended over verbs.

Regards

Prasanth


Hi,

We haven't heard back from you. Let us know if your issue isn't resolved.

Regards

Prasanth


Hi,

I am closing this thread assuming your issue has been resolved. If you require additional assistance from Intel, please start a new thread. Any further interaction in this thread will be considered community only.

Regards

Prasanth
