I installed the oneAPI Base Toolkit and HPC Toolkit on our HPC cluster (Rocky 8). The hard disk is shared by a management node and several compute nodes (24 cores per node).

The environment was set with:

source /opt/ohpc/pub/compiler/intel/oneapi/setvars.sh

Programs are run by submitting jobs from the management node with PBS Pro.

The test code is:

program hello_world

include 'mpif.h'

integer ierr, num_procs, id, namelen

character *(MPI_MAX_PROCESSOR_NAME) procs_name

!real::start,stops

logical::master

!double precision::MPI_Wtime()

call MPI_INIT ( ierr )

call MPI_COMM_RANK (MPI_COMM_WORLD, id, ierr)

call MPI_COMM_SIZE (MPI_COMM_WORLD, num_procs, ierr)

call MPI_GET_PROCESSOR_NAME (procs_name, namelen, ierr)

!start=MPI_Wtime()

master=id.eq.0

write(*,'(A24,I2,A3,I2,A14,A15)') "Hello world from process", id, " of", num_procs, &

" processes on ", procs_name

!stops=MPI_Wtime()

!write(*,*)stops

if(master)then

write(*,*)0

endif

call MPI_FINALIZE ( ierr )

end program

The program ran successfully on one node when the job was submitted with the following script:

#!/bin/bash -x

#PBS -N MPITEST

#PBS -l select=1:ncpus=24

#PBS -l walltime=240:00:00

#PBS -j oe

#PBS -k oed

cd $PBS_O_WORKDIR

rm -f hostfile hostfile2

touch hostfile hostfile2

cat $PBS_NODEFILE | sort | uniq >> hostfile2

sort hostfile2 > hostfile

rm -f hostfile2

mpirun -n 24 -hostfile hostfile ./a.out > ./out1

The output looks like:

Hello world from process 1 of24 processes on cn5

Hello world from process 5 of24 processes on cn5

Hello world from process 7 of24 processes on cn5

Hello world from process11 of24 processes on cn5

Hello world from process 0 of24 processes on cn5

......
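
The hostfile steps in the job script above (touch, sort, uniq, sort again, remove the temporary file) can probably be condensed into a single `sort -u`. The sketch below is a suggestion and not part of the original post: `build_hostfile` is a hypothetical helper name, and it assumes `$PBS_NODEFILE` lists one hostname per line, possibly repeated.

```bash
#!/bin/bash
# build_hostfile NODEFILE OUTFILE
# Hypothetical helper: writes each distinct hostname from NODEFILE to OUTFILE,
# sorted, one per line (same result as `sort | uniq`).
build_hostfile() {
    sort -u "$1" > "$2"
}

# Inside a PBS job this would be called as:
#   build_hostfile "$PBS_NODEFILE" hostfile
# Demonstration with a stand-in node file so the snippet runs anywhere:
demo_nodefile=$(mktemp)
demo_hostfile=$(mktemp)
printf 'cn6\ncn5\ncn6\ncn5\n' > "$demo_nodefile"
build_hostfile "$demo_nodefile" "$demo_hostfile"
cat "$demo_hostfile"    # prints cn5 then cn6
rm -f "$demo_nodefile" "$demo_hostfile"
```

Because the output file is overwritten rather than appended to, the `rm -f` and `touch` steps are no longer needed.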

However, this simple program failed to run on two nodes with the following job script:

#!/bin/bash -x

#PBS -N MPITEST

#PBS -l select=2:ncpus=24

#PBS -l walltime=240:00:00

#PBS -j oe

#PBS -k oed

cd $PBS_O_WORKDIR

rm -f hostfile hostfile2

touch hostfile hostfile2

cat $PBS_NODEFILE | sort | uniq >> hostfile2

sort hostfile2 > hostfile

rm -f hostfile2

mpirun -n 48 -hostfile hostfile ./a.out > ./out1

The output:

+ mpirun -genv I_MPI_DEBUG=20 -n 48 -hostfile hostfile ./a.out

[mpiexec@cn5] check_exit_codes (../../../../../src/pm/i_hydra/libhydra/demux/hydra_demux_poll.c:117): unable to run bstrap_proxy on cn6 (pid 11558, exit code 256)

[mpiexec@cn5] poll_for_event (../../../../../src/pm/i_hydra/libhydra/demux/hydra_demux_poll.c:159): check exit codes error

[mpiexec@cn5] HYD_dmx_poll_wait_for_proxy_event (../../../../../src/pm/i_hydra/libhydra/demux/hydra_demux_poll.c:212): poll for event error

[mpiexec@cn5] HYD_bstrap_setup (../../../../../src/pm/i_hydra/libhydra/bstrap/src/intel/i_hydra_bstrap.c:1061): error waiting for event

[mpiexec@cn5] HYD_print_bstrap_setup_error_message (../../../../../src/pm/i_hydra/mpiexec/intel/i_mpiexec.c:1031): error setting up the bootstrap proxies

[mpiexec@cn5] Possible reasons:

[mpiexec@cn5] 1. Host is unavailable. Please check that all hosts are available.

[mpiexec@cn5] 2. Cannot launch hydra_bstrap_proxy or it crashed on one of the hosts. Make sure hydra_bstrap_proxy is available on all hosts and it has right permissions.

[mpiexec@cn5] 3. Firewall refused connection. Check that enough ports are allowed in the firewall and specify them with the I_MPI_PORT_RANGE variable.

[mpiexec@cn5] 4. pbs bootstrap cannot launch processes on remote host. You may try using -bootstrap option to select alternative launcher.

(END)

I would appreciate any advice or help from the community. Thanks in advance.

Additional Information:

1. Previously, I installed Open MPI 4.1, and the program ran successfully with Open MPI on both one node and two nodes.

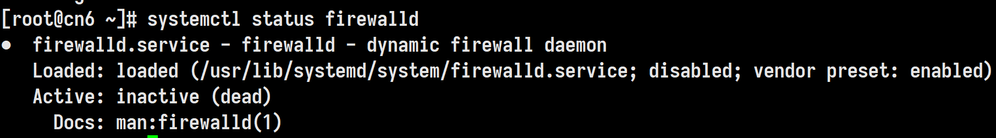

2. I searched earlier discussions in this community and followed the suggested checks; for example, the firewalls on the management node and the compute nodes are inactive.

3. I can ssh to the compute nodes (for example, cn5 and cn6) from the management node without a password. I can also ssh from cn5 to cn6 without a password, and vice versa.

4. I also tried "set I_MPI_DEBUG=20" and "set FI_LOG_LEVEL=debug", but they produced no additional output.
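
A note on item 4, offered as an assumption rather than a confirmed diagnosis: in bash, `set NAME=value` does not create an environment variable. It only replaces the shell's positional parameters, so a child process such as `mpirun` would never see the setting. The sketch below contrasts `set` with `export`; `show_debug_level` is a hypothetical helper that prints what a child process inherits.

```bash
#!/bin/bash
# Hypothetical helper: prints the I_MPI_DEBUG value a child process inherits,
# or "unset" if the variable is not in the child's environment.
show_debug_level() {
    sh -c 'echo "${I_MPI_DEBUG:-unset}"'
}

unset I_MPI_DEBUG

# In bash, `set NAME=value` only assigns the positional parameter $1.
set I_MPI_DEBUG=20
show_debug_level        # child sees: unset

# `export` places the variable in the environment of child processes.
export I_MPI_DEBUG=20
show_debug_level        # child sees: 20
```

Even with the variables exported, debug output may still be absent if the launch fails before any rank starts, as the bootstrap error above suggests.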

Hi,

Thanks for reaching out to us.

Could you please confirm which version of Intel MPI Library you are using?

Use the command below to get the Intel MPI Library version:

source /opt/intel/oneapi/setvars.sh

mpirun --version

Could you please let us know the interconnect hardware (for example, InfiniBand or Intel Omni-Path) and the OFI provider (for example, mlx, psm2, psm3, or tcp) you are using for running the program on two nodes?

To get a full list of environment variables available for configuring OFI, run the following command:

fi_info -e

Could you please try using the below command and share with us the complete debug log?

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -bootstrap ssh -n 48 -ppn 24 -hostfile hostfile ./a.out

If the problem still persists, then could you please provide us with the cluster checker log using the given command below?

Make sure to set up the oneAPI environment before using the cluster checker.

source /opt/intel/oneapi/setvars.sh

clck -f <nodefile>

Thanks & Regards,

Santosh

Thank you very much for your reply and suggestion.

1. Could you please confirm which version of Intel MPI Library you are using?

A:

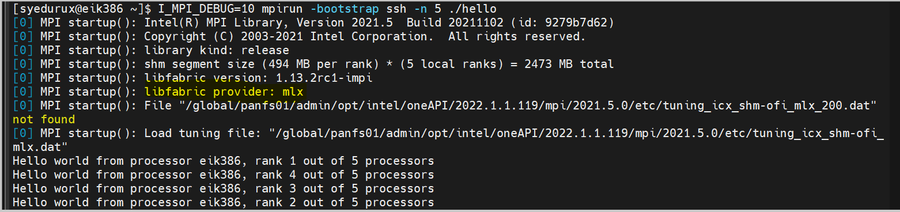

Intel(R) MPI Library for Linux* OS, Version 2021.5 Build 20211102 (id: 9279b7d62)

Copyright 2003-2021, Intel Corporation.

2. Could you please let us know the interconnect hardware (Infiniband/ Intel Omni-Path) / OFI provider(mlx/psm2/psm3) you are using for running the program on two nodes?

A: I'm not familiar with the interconnect hardware or the OFI provider. Could you please provide more instructions on how to get this information?

In our HPC, the management node and the compute nodes are interconnected via switches and network cables.

The compute nodes are within the LAN of the management node. The IP information for the management node's LAN interface is:

eno1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.1.1.254 netmask 255.255.255.0 broadcast 10.1.1.255

inet6 fe80::ae1f:6bff:fe63:c096 prefixlen 64 scopeid 0x20<link>

ether ac:1f:6b:63:c0:96 txqueuelen 1000 (Ethernet)

RX packets 3185215 bytes 242709913 (231.4 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3345803 bytes 1209306101 (1.1 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

device memory 0xfb200000-fb27ffff

The IP information for a compute node is:

eno1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.1.1.6 netmask 255.255.255.0 broadcast 10.1.1.255

inet6 fe80::ae1f:6bff:fe27:11f2 prefixlen 64 scopeid 0x20<link>

ether ac:1f:6b:27:11:f2 txqueuelen 1000 (Ethernet)

RX packets 152575 bytes 57344848 (54.6 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 144812 bytes 15970213 (15.2 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

device memory 0xc7120000-c713ffff

The output of "fi_info -e" is attached below (fi_info.txt).

3. Could you please try using the below command and share with us the complete debug log?

A: I tried this command and found that the program ran successfully with "-bootstrap ssh" on two nodes (select=2:ncpus=24).

However, when I changed the parameters to select=3:ncpus=24 and -np 36, the program failed: the job did not finish for a long time, whereas a normal test completes within 5 seconds. The debug log is attached (MPITEST.txt).

#PBS -l select=3:ncpus=24

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -bootstrap ssh -n 36 -hostfile hostfile ./a.out

Then I set -np to 48 and to 72, and both tests were successful, which surprised me. The debug log for "-np 72" is attached (MPTEST-72.txt).

I also checked which nodes were used. The program ran on the same nodes (cn5-cn7) in all tests.

Therefore, the open questions are now:

- Why did the program fail with select=3:ncpus=24 and -np 36, and how can this be solved?

- The program runs successfully on two nodes with "-bootstrap ssh". Is there a more convenient way to solve this problem? Passing this option every time may cause difficulties when compiling large software.

4. If the problem still persists, could you please provide us with the cluster checker log using the given command below?

A: I tried this checker, and got the following output.

Running Analyze

SUMMARY

Command-line: clck -f hostfile

Tests Run: health_base

Overall Result: No issues found

-----------------------------------------------------------------------------------------------------------------------------------------------------

3 nodes tested: cn[5-7]

3 nodes with no issues: cn[5-7]

0 nodes with issues:

-----------------------------------------------------------------------------------------------------------------------------------------------------

FUNCTIONALITY

No issues detected.

HARDWARE UNIFORMITY

No issues detected.

PERFORMANCE

No issues detected.

SOFTWARE UNIFORMITY

No issues detected.

See the following files for more information: clck_results.log, clck_execution_warnings.log

Hi,

Thanks for providing the details and debug logs.

Intel® MPI Library supports the mlx, tcp, psm2, psm3, sockets, verbs, and RxM OFI providers. As mentioned in my previous post, the command below lists the environment variables available for configuring the OFI provider.

fi_info -e

For more information regarding OFI providers, please refer to the link below:

>>"this program failed (this job didn't finish for long time, within 5 seconds for normal test). The debug log is attached (MPITEST.txt)"

As we cannot see much debug output in the attachment "MPITEST.txt" you provided, could you please confirm whether your program hangs when you use -np 36?

Could you please try the commands below:

source /opt/intel/oneapi/setvars.sh

export VT_CHECK_TRACING=on

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -bootstrap ssh -check_mpi -n 36 -ppn 12 -hostfile hostfile ./a.out

Let us know if it resolves your issue. If the issue still persists, provide us with the complete debug log using the above command.
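
The `-n 36 -ppn 12` pair above follows from dividing the total rank count evenly across the three nodes. A minimal sketch of that arithmetic, assuming an even split is wanted; `ranks_per_node` is a hypothetical helper, not an Intel MPI command.

```bash
#!/bin/bash
# ranks_per_node TOTAL_RANKS NODE_COUNT
# Hypothetical helper: prints the per-node rank count for an even split,
# or fails if TOTAL_RANKS is not divisible by NODE_COUNT.
ranks_per_node() {
    total=$1
    nodes=$2
    if [ "$nodes" -le 0 ] || [ $((total % nodes)) -ne 0 ]; then
        echo "cannot split $total ranks evenly over $nodes nodes" >&2
        return 1
    fi
    echo $((total / nodes))
}

ranks_per_node 36 3   # prints 12, matching -n 36 -ppn 12
ranks_per_node 48 2   # prints 24, matching -n 48 -ppn 24
ranks_per_node 72 3   # prints 24, one rank per core on 24-core nodes
```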

Thanks & Regards,

Santosh

Thank you very much for your reply.

1. I tried the following commands, but they didn't work. I have decided to shelve this issue (the program cannot run with -n 36) for now.

source /opt/intel/oneapi/setvars.sh

export VT_CHECK_TRACING=on

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -bootstrap ssh -check_mpi -n 36 -ppn 12 -hostfile hostfile ./a.out

2. Now the program can run on multiple nodes with "-bootstrap ssh". Is there any configuration I can apply to the oneAPI package to avoid passing this additional parameter when running a program?

3. I understand that Intel® MPI Library supports mlx, tcp, psm2, psm3, and the other OFI providers you listed, but I don't know how to confirm which one is used by our HPC.

The command "fi_info -e" prints a series of lines prefixed with the "#" symbol (see the earlier attachment "fi_info.txt"). How can I confirm the provider?

Hi,

>>" Now, the program can run on multi nodes with "-bootstrap ssh". Is there any configuration I can do on the ONEAPI package to avoid using this additional parameter when running a program?"

Could you please try the below commands?

export I_MPI_HYDRA_BOOTSTRAP=ssh

I_MPI_DEBUG=30 mpirun -n 48 -ppn 24 -hostfile hostfile ./a.out

Please let us know if the above commands work for running the program on multi nodes.
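
To avoid typing the setting for every job, one option is to apply it once wherever the oneAPI environment is initialized, for example in a job script or shell profile after `setvars.sh` is sourced. The sketch below is an assumption about how that could be arranged, not an Intel-documented procedure: `default_bootstrap` is a hypothetical helper that sets `I_MPI_HYDRA_BOOTSTRAP` to `ssh` only when the variable is not already set, so individual jobs can still override it.

```bash
#!/bin/bash
# Hypothetical helper: use ssh as the Hydra bootstrap launcher unless the
# caller already chose one. I_MPI_HYDRA_BOOTSTRAP is the variable named in
# the reply above; the "default only if unset" policy is an illustrative choice.
default_bootstrap() {
    : "${I_MPI_HYDRA_BOOTSTRAP:=ssh}"
    export I_MPI_HYDRA_BOOTSTRAP
}

default_bootstrap
echo "bootstrap launcher: $I_MPI_HYDRA_BOOTSTRAP"
```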

>>" But I don't known how to confirm which one is used by our HPC."

We can check the OFI provider by debugging using I_MPI_DEBUG as below. Check the libfabric provider field in the debug log.

From the above debug screenshot, we can confirm that we are using mlx as OFI provider (libfabric provider: mlx). Similarly, you can check the OFI provider being used at your HPC cluster.

If needed, we can change this provider using the "FI_PROVIDER=<arg>" environment variable explicitly.

The command "fi_info -e" gives you the list of environment variables available for configuring the OFI provider. Refer to the attached file fi_info.txt.

As we can see from the fi_info.txt file, the available providers on my HPC cluster are verbs, tcp, sockets, psm3, mlx, and shm.

So I can set FI_PROVIDER to any one of these values, where <arg> is one of verbs, tcp, sockets, psm3, mlx, or shm:

export FI_PROVIDER=<arg>

Similarly, you can try changing the FI_PROVIDER if needed.
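
To read the provider out of a saved debug log rather than by eye, one could search for the `libfabric provider` field mentioned above. A minimal sketch: `provider_from_log` is a hypothetical helper, and the sample log line is an assumed shape used only for demonstration (real `I_MPI_DEBUG` output may carry different prefixes).

```bash
#!/bin/bash
# provider_from_log LOGFILE
# Hypothetical helper: prints the first value that follows
# "libfabric provider:" in LOGFILE, or fails if the field is absent.
provider_from_log() {
    value=$(sed -n 's/.*libfabric provider: *\([^ ]*\).*/\1/p' "$1" | head -n 1)
    [ -n "$value" ] && echo "$value"
}

# Demonstration with a made-up log line:
demo_log=$(mktemp)
echo '[0] MPI startup(): libfabric provider: mlx' > "$demo_log"
provider_from_log "$demo_log"   # prints mlx
rm -f "$demo_log"
```

Redirecting a run's output to a file (for example, appending `> debug.log 2>&1` to the `mpirun` command above) and passing that file to the helper would apply it to a real job.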

Thanks & Regards,

Santosh

Hi,

We haven't heard back from you. Is your issue resolved? If yes, then could you please confirm whether to close this thread from our end? Else, please get back to us.

Thanks & Regards,

Santosh

Hi,

We assume that your issue is resolved. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Santosh
