I was measuring the overheads of using SGX. I had read that memory accesses incur significant overhead once the size of the data being accessed approaches the L3 cache size, because cache lines have to be encrypted and decrypted during random accesses [1].

So I created a random read/write micro-benchmark to see the impact for myself. It contains the following function, which writes to random positions of an array. An identical function also runs inside the enclave via an ECALL, except that there the random number "r" is generated with sgx_read_rand.

    void rand_write(){
        for(int i=0; i<N; i++){
            int r = rand() % N;   /* random index into the global array */
            arr[r] = r*(r/2);     /* write an arbitrary value at that index */
        }
        return;
    }

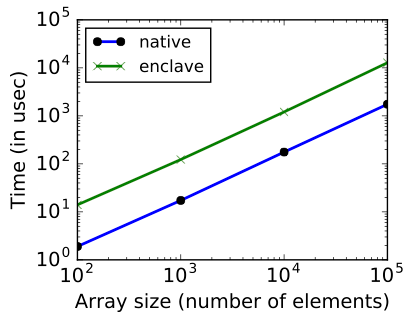

The micro-benchmark creates a global integer array of 'N' integers (the x-axis of the graph) and executes 'N' random writes on it, as shown in the function above. The time taken (the y-axis of the graph) is the average time of this function over 1000 runs, both inside the enclave and as a native C function. Native random writes are about 7 times faster. I observe similar behaviour for random reads. Sequential reads and writes, however, take pretty much the same time in the enclave and in the native application.

I am running the SGX application in hardware debug mode on an Intel Xeon E3-1230 v5.

What is the reason behind this slowdown in enclaves? Is there some other overhead? With such small int arrays, L3 cache misses shouldn't be the reason. Or perhaps I am doing something wrong?
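For readers who want to reproduce the native side of this measurement, here is a minimal sketch of such a harness. It is not the original benchmark code: the names `now_ns`, `avg_time_ns`, `struct bench`, and `rand_write_kernel` are hypothetical, the array is passed in rather than global, and unsigned arithmetic is used so the stored value wraps instead of overflowing.

```c
#ifndef _POSIX_C_SOURCE
#define _POSIX_C_SOURCE 199309L   /* for clock_gettime under strict -std= modes */
#endif
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>
#include <time.h>

/* Hypothetical benchmark context: the array and its length N. */
struct bench {
    unsigned *arr;
    size_t n;
};

/* Monotonic clock reading in nanoseconds. */
static double now_ns(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec * 1e9 + (double)ts.tv_nsec;
}

/* N random writes, with the index drawn by rand() inside the loop,
   mirroring the access pattern described in the post. */
static void rand_write_kernel(void *p) {
    struct bench *b = p;
    for (size_t i = 0; i < b->n; i++) {
        size_t r = (size_t)rand() % b->n;
        b->arr[r] = (unsigned)r * (unsigned)(r / 2);
    }
}

/* Average time in nanoseconds of one call to fn(ctx), over `runs` calls. */
static double avg_time_ns(void (*fn)(void *), void *ctx, int runs) {
    assert(runs > 0);
    double start = now_ns();
    for (int i = 0; i < runs; i++)
        fn(ctx);
    return (now_ns() - start) / runs;
}
```

Calling `avg_time_ns(rand_write_kernel, &b, 1000)` for each array size gives one point per size. Note that the timed region includes the cost of drawing the random index, not only the memory write.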

Reference:

[1] Arnautov, Sergei, et al. "SCONE: Secure Linux Containers with Intel SGX." 12th USENIX Symposium on Operating Systems Design and Implementation (OSDI). 2016.

Decrypting the data that comes from EPC is a relatively fast operation. However, if you're accessing EPC, the Memory Encryption Engine (MEE) needs to perform additional memory reads to bring in certain parts of the integrity tree that are needed to verify the confidentiality, integrity, and freshness of the data the enclave is accessing in the first place. To avoid significant overheads, the MEE has a cache where counters, versions and tags of the integrity tree are stored.

The benefits of the MEE cache depend on how an enclave accesses EPC. In the worst case, an access to EPC memory may require reading all levels of the MEE tree. It looks like this is what you are seeing.

Have a look at this blog, which references a paper that explains the MEE design trade-offs: A Memory Encryption Engine Suitable for General Purpose Processors.

Note that the "native" build links against different libraries than the enclave does. Although your analysis seems reasonable, I'd also compare an enclave running in HW mode with one running in simulation mode. That way the code being compared is the same (same libraries).

Thank you for the prompt reply and the reference. It was useful to get an insight into how the MEE works.

After reading that whitepaper, it was clear to me that the MEE overheads shouldn't be significant as long as the data is mainly read from cache instead of EPC. In my case the largest array had 100,000 elements, which takes about 400 KB of space, so frequent L3 cache misses shouldn't be a problem.

The problem was that I was calling sgx_read_rand() inside the for loop to generate the random number. It turns out that sgx_read_rand() takes about 7 times longer than the pseudo-random rand() call that I was using in the native application. This was the sole cause of the large overhead I was observing.
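One way to keep the random number generator out of the measurement is to generate all indices before the timed region, so that only the memory writes are timed. The sketch below illustrates the idea in native C. The names `fill_indices` and `rand_write_pregen` are hypothetical, and this is not the code I actually ran. Inside an enclave, the index buffer could presumably be filled with a single sgx_read_rand() call over the whole buffer, followed by reducing each value modulo n (an assumption, not shown here).

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Untimed setup: fill idx[0..n) with random indices in [0, n).
   Natively this uses rand(); an enclave would use its own source. */
static void fill_indices(uint32_t *idx, size_t n) {
    assert(n > 0 && n <= UINT32_MAX);
    for (size_t i = 0; i < n; i++)
        idx[i] = (uint32_t)rand() % (uint32_t)n;
}

/* Timed region: n writes at the pre-generated positions.
   No random number generator call happens in this loop. */
static void rand_write_pregen(unsigned *arr, const uint32_t *idx, size_t n) {
    for (size_t i = 0; i < n; i++) {
        uint32_t r = idx[i];
        arr[r] = r * (r / 2u);   /* unsigned, so large products wrap */
    }
}
```

The index buffer is read sequentially, which adds some sequential reads to the timed loop, but these cost the same order in both the enclave and the native build according to the measurements above.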
