
Hi,

I have a frozen FP32 TensorFlow model that I want to quantize to meet the following requirements:

- Input layer: signed 16-bit integer (INT16)
- Matrix weights: signed 8-bit integer (INT8)
- Bias weights: signed 32-bit integer (INT32)
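The arithmetic behind these requirements can be sketched as follows. This is a minimal illustration, assuming symmetric per-tensor quantization (zero point 0) and the common convention that the INT32 bias uses the product of the input and weight scales so it can be added directly to the integer accumulator. The helper names (`symmetric_scale`, `quantize`, `dense_int`) are hypothetical and are not part of Intel Neural Compressor or TensorFlow.

```python
# Sketch only: symmetric per-tensor quantization (zero point = 0) is assumed.
# All function names here are hypothetical illustrations, not a library API.

def symmetric_scale(values, num_bits):
    """Scale that maps the largest |value| onto the largest signed integer."""
    qmax = 2 ** (num_bits - 1) - 1          # 32767 for INT16, 127 for INT8
    max_abs = max(abs(v) for v in values)
    return max_abs / qmax if max_abs > 0 else 1.0


def quantize(values, scale, num_bits):
    """Round to the nearest integer and clamp to the signed num_bits range."""
    qmin, qmax = -(2 ** (num_bits - 1)), 2 ** (num_bits - 1) - 1
    return [max(qmin, min(qmax, round(v / scale))) for v in values]


def dense_int(x, weights, bias):
    """y = W x + b with INT16 input, INT8 weights and INT32 bias."""
    s_x = symmetric_scale(x, 16)
    s_w = symmetric_scale([w for row in weights for w in row], 8)
    s_b = s_x * s_w                          # bias shares the accumulator scale
    x_q = quantize(x, s_x, 16)
    w_q = [quantize(row, s_w, 8) for row in weights]
    b_q = quantize(bias, s_b, 32)
    # Integer multiply-accumulate. Python ints never overflow; on real hardware
    # the accumulator must be wide enough for the layer's fan-in.
    acc = [sum(xi * wi for xi, wi in zip(x_q, row)) + bi
           for row, bi in zip(w_q, b_q)]
    return [a * s_b for a in acc]            # dequantize back to float


x = [0.5, -1.0, 2.0]
W = [[0.1, 0.2, -0.3], [0.4, -0.5, 0.6]]
b = [0.05, -0.1]
print(dense_int(x, W, b))  # close to the FP32 result [-0.7, 1.8]
```

Giving the bias the scale `s_x * s_w` means it needs no rescaling before being added to the integer products, which is one reason a 32-bit bias is the usual choice in such schemes.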

After quantization, however, the input layer is still FP32. Is there a way to meet these requirements with Intel Neural Compressor?

Regards,

Anand Viswanath A



Hi Anand,

Thank you for posting in Intel Communities.

Quantization replaces floating-point (FP32) arithmetic with integer (INT8) arithmetic. Lower-precision data reduces memory footprint and bandwidth, which can accelerate inference.

INT8 computations can outperform FP32 computations because more values fit into a single vector register, so one instruction processes more data: a 512-bit register, for example, holds 64 INT8 values but only 16 FP32 values. Smaller data types also mean less data movement between memory and the processor.

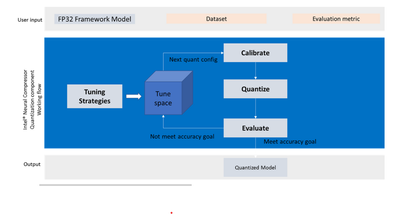
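To make the FP32-to-INT8 mapping concrete, here is a minimal sketch of affine (scale plus zero point) quantization in plain Python. It assumes a per-tensor INT8 range of [-128, 127]; the function names are illustrative only and are not Intel Neural Compressor APIs. The `array` module is used just to compare storage sizes.

```python
from array import array


def affine_params(lo, hi, qmin=-128, qmax=127):
    """Scale and zero point mapping the float range [lo, hi] onto [qmin, qmax]."""
    lo, hi = min(lo, 0.0), max(hi, 0.0)   # include 0 so 0.0 maps to an exact integer
    scale = (hi - lo) / (qmax - qmin) or 1.0
    zero_point = round(qmin - lo / scale)
    return scale, zero_point


def quantize(xs, scale, zp, qmin=-128, qmax=127):
    """FP32 -> INT8: divide by the scale, round, shift by the zero point, clamp."""
    return [max(qmin, min(qmax, round(x / scale) + zp)) for x in xs]


def dequantize(qs, scale, zp):
    """INT8 -> FP32 approximation of the original values."""
    return [(q - zp) * scale for q in qs]


weights = [-0.9, -0.1, 0.0, 0.4, 1.2]
scale, zp = affine_params(min(weights), max(weights))
q = quantize(weights, scale, zp)
restored = dequantize(q, scale, zp)

fp32 = array("f", weights)   # C float: 4 bytes per element on common platforms
int8 = array("b", q)         # signed char: 1 byte per element
print(q, restored)
print(len(fp32) * fp32.itemsize, len(int8) * int8.itemsize)  # FP32 storage is 4x INT8
```

Each restored value differs from the original by at most half a quantization step (`scale / 2`), which is the precision traded for the smaller, faster integer representation.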

Intel Neural Compressor provides an automatic, accuracy-driven quantization tuning flow: it converts quantizable layers to INT8 and lets users control the trade-off between model accuracy and performance.

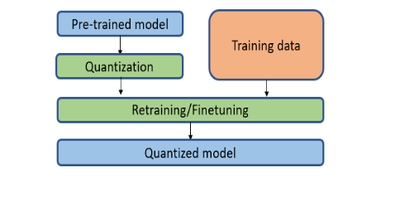
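The accuracy-driven idea can be illustrated with a toy greedy fallback loop: quantize every layer, then revert to FP32 the layer whose fallback recovers the most accuracy, repeating until an accuracy target is met. This is a simplified sketch of the concept, not Intel Neural Compressor's actual tuning strategy; `tune`, the layer names, the penalty values and the 1% relative-loss target are all hypothetical, and `evaluate` stands in for running the model on a validation set.

```python
def tune(layers, evaluate, baseline_acc, max_relative_loss=0.01):
    """Toy accuracy-driven tuning: start all-INT8, fall back layers greedily."""
    target = baseline_acc * (1 - max_relative_loss)
    config = {name: "int8" for name in layers}
    while evaluate(config) < target:
        candidates = [n for n, dtype in config.items() if dtype == "int8"]
        if not candidates:
            break  # every layer is already FP32; the target cannot be met
        # Try each single-layer fallback and keep the one with the best accuracy.
        best = max(candidates, key=lambda n: evaluate({**config, n: "fp32"}))
        config[best] = "fp32"
    return config


# Hypothetical accuracy model: each INT8 layer costs a fixed accuracy penalty.
penalty = {"conv1": 0.002, "conv2": 0.015, "fc": 0.001}


def evaluate(config):
    return 0.90 - sum(penalty[n] for n, dtype in config.items() if dtype == "int8")


print(tune(penalty, evaluate, baseline_acc=0.90))
# {'conv1': 'int8', 'conv2': 'fp32', 'fc': 'int8'}
```

Here only the most accuracy-sensitive layer (`conv2`) falls back to FP32, while the rest stay INT8 for performance.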

Intel® Neural Compressor Quantization Working Flow
====================================================

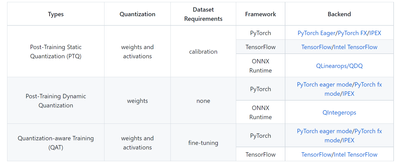

Quantization methods include the following three types:

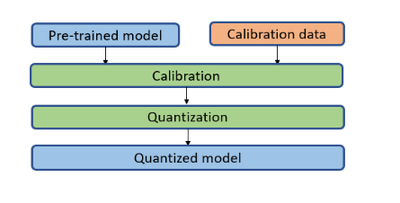

Post-Training Static Quantization
---------------------------------

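Quantizes an already trained model. It requires an additional pass over a representative (calibration) dataset, and only the activations are calibrated: their value ranges are recorded during this pass, whereas weight ranges are known directly from the trained weights.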

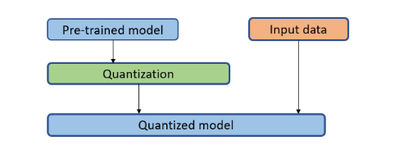
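A minimal sketch of the calibration step, assuming symmetric per-tensor INT8 and simple min/max range tracking (real tools may offer other calibration algorithms). The activation scale is computed once from the calibration batches and then frozen, so values outside the calibrated range saturate at inference time. All names are illustrative.

```python
def calibrate(batches):
    """Record the min and max activation values seen over the calibration data."""
    lo, hi = float("inf"), float("-inf")
    for batch in batches:
        lo, hi = min(lo, min(batch)), max(hi, max(batch))
    return lo, hi


def static_scale(batches, qmax=127):
    """Symmetric INT8 scale derived once from the calibrated range."""
    lo, hi = calibrate(batches)
    return max(abs(lo), abs(hi)) / qmax


def quantize(xs, scale, qmin=-128, qmax=127):
    return [max(qmin, min(qmax, round(x / scale))) for x in xs]


calibration_batches = [[0.1, -0.4, 0.8], [1.0, -0.2, 0.3], [-0.9, 0.5, 0.0]]
scale = static_scale(calibration_batches)   # frozen after calibration

# At inference the same scale is reused; 2.0 was never seen during
# calibration, so it saturates at 127.
print(quantize([0.25, 2.0], scale))  # [32, 127]
```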

Post-Training Dynamic Quantization
----------------------------------

Multiplies input values by a scaling factor and rounds the result to the nearest integer. The scale factor for activations is determined dynamically from the data range observed at runtime: weights are quantized ahead of time, while activations are quantized on the fly during inference.
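The key difference from static quantization is where the activation scale comes from. In this minimal sketch of a dynamically quantized linear layer (symmetric per-tensor INT8 assumed; `DynamicQuantLinear` and its helpers are hypothetical), the weight scale is fixed when the layer is built, while the activation scale is recomputed from each input's observed range on every call.

```python
def scale_for(xs, qmax=127):
    """Symmetric scale mapping the largest |value| in xs onto qmax."""
    max_abs = max(abs(x) for x in xs)
    return max_abs / qmax if max_abs else 1.0


def quant(xs, scale, qmax=127):
    return [max(-qmax - 1, min(qmax, round(x / scale))) for x in xs]


class DynamicQuantLinear:
    """Linear layer y = W x with dynamically quantized activations."""

    def __init__(self, weight_rows):
        # Offline step: weights are quantized once, ahead of inference.
        self.w_scale = scale_for([w for row in weight_rows for w in row])
        self.w_q = [quant(row, self.w_scale) for row in weight_rows]

    def __call__(self, x):
        # Runtime step: the activation scale comes from this input's own range.
        x_scale = scale_for(x)
        x_q = quant(x, x_scale)
        # Integer dot products, then a single rescale back to float.
        acc = [sum(a * b for a, b in zip(row, x_q)) for row in self.w_q]
        return [v * self.w_scale * x_scale for v in acc]


layer = DynamicQuantLinear([[0.6, -0.25], [1.0, 0.75]])
print(layer([0.3, 0.4]))   # approximately [0.08, 0.6]
print(layer([3.0, 4.0]))   # a 10x larger input gets a 10x larger scale
```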

Quantization-aware Training (QAT)
---------------------------------

Quantizes the model during training. It typically provides higher accuracy than post-training quantization, but QAT may require additional hyperparameter tuning and can take longer to deploy.
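A common mechanism behind QAT is "fake quantization": the forward pass rounds values to the INT8 grid and immediately dequantizes them, so the loss sees the rounding error, while the backward pass uses the straight-through estimator (STE) and treats rounding as the identity. The toy sketch below fits a single weight `w` so that `fake_quant(w) * x` matches `y = 0.3 * x`; the fixed scale of 0.01, the learning rate and the function names are assumptions for illustration only.

```python
def fake_quant(x, scale, qmin=-128, qmax=127):
    """Forward: quantize to the INT8 grid, then dequantize back to float."""
    return max(qmin, min(qmax, round(x / scale))) * scale


def ste_grad(x, scale, qmin=-128, qmax=127):
    """Backward (straight-through estimator): gradient 1 inside the
    representable range, 0 where the value would be clipped."""
    return 1.0 if qmin * scale <= x <= qmax * scale else 0.0


scale, lr = 0.01, 0.1
data = [(x, 0.3 * x) for x in (0.5, 1.0, 2.0)]   # target weight is 0.3
w = 0.0
for _ in range(200):
    wq = fake_quant(w, scale)
    # d(loss)/d(wq) for the mean squared error, then chain through the STE.
    grad_wq = sum(2 * (wq * x - y) * x for x, y in data) / len(data)
    w -= lr * grad_wq * ste_grad(w, scale)

print(fake_quant(w, scale))  # the INT8 grid point nearest 0.3
```

Because training already sees the quantized weight, the learned `w` settles on a value that is accurate after quantization, rather than being rounded only after training ends.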

Please refer to the links below:

https://github.com/intel/neural-compressor/blob/master/docs/bench.md

https://github.com/intel/neural-compressor/blob/master/docs/Quantization.md

I hope this clarifies your doubts.

Thanks

Rahila


Hi,

We have not heard back from you. Please let me know whether we can go ahead and close this case.

Thanks


Hi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks
