
Hi, I have written this program to compute PageRank.

It compiles and runs on the CPU, but I cannot run it on the GPU because of this error:

terminate called after throwing an instance of 'sycl::_V1::compile_program_error'

what(): The program was built for 1 devices

Build program log for 'Intel(R) UHD Graphics [0x9a60]':

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

-11 (PI_ERROR_BUILD_PROGRAM_FAILURE)

Aborted
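For readers hitting the same log: the message says the kernel contains double-precision arithmetic, which this GPU reports it does not support. One likely source in the listing below is the literal `1.0` in `(1.0 - damping) / numNodes`. In C++ an unsuffixed floating literal has type `double`, so the expression is promoted to `double` even though `damping` is a `float`. The plain C++ sketch below (no SYCL needed) checks this at compile time; `teleport_term` and `teleport_term_via_double` are hypothetical helpers that isolate that term of the update.

```cpp
#include <cassert>
#include <type_traits>

// An unsuffixed floating literal such as 1.0 has type double, so mixing it
// with a float promotes the whole expression to double.
static_assert(std::is_same_v<decltype(1.0 - 0.85f), double>);
// The f suffix keeps the arithmetic in single precision.
static_assert(std::is_same_v<decltype(1.0f - 0.85f), float>);
// Dividing a float by an int stays float (the int is converted to float).
static_assert(std::is_same_v<decltype((1.0f - 0.85f) / 4), float>);

// Hypothetical helper: the teleport term (1 - damping) / numNodes, all in float.
float teleport_term(float damping, int numNodes) {
    assert(numNodes > 0);  // avoid division by zero
    return (1.0f - damping) / static_cast<float>(numNodes);
}

// Same term as written in the original kernel: 1.0 is a double, so the
// subtraction and division happen in double before narrowing to float.
float teleport_term_via_double(float damping, int numNodes) {
    assert(numNodes > 0);
    return static_cast<float>((1.0 - damping) / numNodes);
}
```

Writing the term as `(1.0f - damping) / numNodes`, as the last reply in this thread does, keeps the kernel in single precision; any other unsuffixed literal or `double` variable used inside the kernel would need the same treatment.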

I suspect this will also be a problem when I run it on an FPGA, which, by the way, I cannot get the program to select.

This is the command I ran:

qsub -I -l nodes=1:fpga_compile:ppn=2 -d .

Then I ran the compiled executable, but the selected device was the CPU again.
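A likely reason the FPGA branch still ends up on the CPU is in the device-selection code below: `ext::intel::fpga_selector d;` inside the `if` block declares a *new* variable named `d` that shadows the outer `device d`, so the outer `d` is never assigned and `queue q(d);` uses the default-constructed device. The sketch shows the scoping rule in plain C++, using a hypothetical `Device` struct as a stand-in for `sycl::device`; in the SYCL code the fix is an assignment to the outer variable, such as the `d = device(ext::intel::fpga_emulator_selector());` used in a later reply.

```cpp
#include <cassert>
#include <string>

// Hypothetical stand-in for sycl::device; only the name matters here.
struct Device {
    std::string name = "default device";
};

// Mirrors the original pattern: the inner declaration creates a NEW
// variable d that shadows the outer one and dies at the closing brace.
std::string select_shadowed(int device_selected) {
    Device d;
    if (device_selected == 3) {
        Device d;         // shadows the outer d
        d.name = "FPGA";  // modifies only the inner object
    }
    return d.name;        // the outer d is unchanged
}

// Mirrors the fix: assign to the existing outer variable instead.
std::string select_assigned(int device_selected) {
    Device d;
    if (device_selected == 3) {
        d = Device{"FPGA"};  // like d = device(<FPGA selector>)
    }
    return d.name;
}
```

In the SYCL program the outer `device d;` is default-constructed, which in SYCL 2020 means the device chosen by `default_selector_v`; on a node without an FPGA or GPU that is typically the CPU.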

Here's the main code:

#include <sycl/sycl.hpp>

#include <sycl/ext/intel/fpga_extensions.hpp>

// #include <oneapi/mkl/blas.hpp>

#include <cmath>

#include <chrono>

#include <iostream>

#include <vector>

#include "guideline.h"

#include "print_vector.h"

#include "print_time.h"

#include "read_graph.h"

#include "flatVector.h"

using namespace sycl;

int main(int argc, char* argv[]){

// Check Command Line

if(argc < 6){

// NOT ENOUGH PARAMS BY COMMAND LINE -> PROGRAM HALTS

guideline();

}

else{

// Command Line parsing

int device_selected = atoi(argv[1]);

std::string csv_path = argv[2];

float threshold = atof(argv[3]);

float damping = atof(argv[4]);

int verbose;

try{verbose = atoi(argv[5]);}

catch (exception const& e) {verbose = 0;}

device d;

// Select the acceleration platform

if(device_selected == 1){

d = device(cpu_selector_v); //# cpu_selector_v selects a cpu device

}

if(device_selected == 2){

try {

d = device(gpu_selector_v); //# gpu_selector_v selects a gpu device

} catch (exception const& e) {

std::cout << "Cannot select a GPU\n" << e.what() << "\n";

std::cout << "Using a CPU device\n";

d = device(cpu_selector_v); //# cpu_selector_v selects a cpu device

}

}

if(device_selected == 3){

ext::intel::fpga_selector d;

}

// Queue

queue q(d);

std::cout << "Device : " << q.get_device().get_info<info::device::name>() << "\n"; // print the selected device

// Reading and setup Time Calculation

auto start_setup = std::chrono::steady_clock::now();

// Graph Retrieval by csv file

std::vector<std::vector<int>> graph = Read_graph(csv_path);/*Sparse Matrix Representation with the description of each Edge of the Graph*/

std::vector<int> flatGraph = flatten<int>(graph);

// Calculation of the # Nodes

int numNodes = countNodes(graph);

// Calculation of the Degree of each node

std::vector<int> degreesNodes = getDegrees(graph, numNodes+1);

auto end_setup = std::chrono::steady_clock::now();

// Setup Execution Time print

std::cout << "TIME FOR SETUP" << "\n";

print_time(start_setup, end_setup);

// Check Print

//printVector<int>(degreesNodes);

//Creation of Initial and Final Ranks' vectors of PageRank [R(t); R(t+1)]

std::vector<float> ranks_t(numNodes, (float)(1.0/ (float)(numNodes)));

std::vector<float> ranks_t_plus_one(numNodes, 0.0);

// PageRank Execution Time calculation

auto start = std::chrono::steady_clock::now();

buffer<int> bufferEdges(flatGraph.data(),flatGraph.size());

buffer<float> bufferRanks(ranks_t.data(),ranks_t.size());

buffer<int> bufferDegrees(degreesNodes.data(),degreesNodes.size());

buffer<float> bufferRanksNext(ranks_t_plus_one.data(),ranks_t_plus_one.size());

float distance = threshold + 1;

int graph_size = flatGraph.size();

while (distance > threshold) {

q.submit([&](handler &h){

accessor Edges(bufferEdges,h,read_only);

accessor Ranks(bufferRanks,h,read_only);

accessor Degrees(bufferDegrees,h,read_only);

accessor RanksNext(bufferRanksNext,h,write_only);

h.parallel_for(range<1>(numNodes),[=] (id<1> i){

RanksNext[i] = (1.0 - damping) / numNodes;

int index_node_i;

int index_node_j;

for (int j = 0; j<graph_size;j++) {

index_node_i = 2 * j;

index_node_j = index_node_i + 1;

if (Edges[index_node_j] == i) {

RanksNext[i] += damping * Ranks[Edges[index_node_i]] / Degrees[Edges[index_node_i]];

}

}

});

});

distance = 0;

for (int i = 0; i < numNodes; i++) {

distance += (ranks_t[i] - ranks_t_plus_one[i]) * (ranks_t[i] - ranks_t_plus_one[i]);

}

distance = sqrt(distance);

ranks_t_plus_one = ranks_t;

}

auto end = std::chrono::steady_clock::now();

// PageRank Results Printing

if(verbose == 1){printVector(ranks_t_plus_one);}

std::cout<<std::endl<<std::endl<<std::endl;

std::cout<<"Final Norm:\t"<<distance<<std::endl;

// PageRank Execution Time Printing

std::cout << "TIME FOR PAGERANK" << "\n";

print_time(start, end);

}

return 0;

}

Can anyone help me? Thank you in advance!


A small edit to the code, since I noticed some conceptual errors in how I wrote the PageRank algorithm:

q.submit([&](handler &h){

accessor Edges(bufferEdges,h,read_only);

accessor Ranks(bufferRanks,h,read_only);

accessor Degrees(bufferDegrees,h,read_only);

accessor RanksNext(bufferRanksNext,h,write_only);

h.parallel_for(range<1>(numNodes),[=] (id<1> i){

RanksNext[i] = (1.0 - damping) / numNodes;

int index_node_i;

int index_node_j;

for (int j = 0; j<graph_size;j+=2) {

index_node_i = j;

index_node_j = j + 1;

if (Edges[index_node_j] == i) {

RanksNext[i] += damping * Ranks[Edges[index_node_i]] / Degrees[Edges[index_node_i]];

}

}

});

}).wait();

Also, from other resources online, I found a way to list the available oneAPI DevCloud nodes with this command:

pbsnodes | grep -B4 gpu

It is useful for finding nodes with Gen9 GPUs, which support double precision. Then I submit the job with the following command:

qsub -I -l nodes=<node_with_gen9_GPU>:ppn=2 -d .

It works, but the program throws this exception after printing the expected output:

Device : Intel(R) UHD Graphics P630 [0x3e96]

TIME FOR SETUP

Elapsed time in nanoseconds: 9672748170 ns

Elapsed time in microseconds: 9672748 µs

Elapsed time in milliseconds: 9672 ms

Elapsed time in seconds: 9 sec

terminate called after throwing an instance of 'sycl::_V1::runtime_error'

what(): Native API failed. Native API returns: -1 (PI_ERROR_DEVICE_NOT_FOUND) -1 (PI_ERROR_DEVICE_NOT_FOUND)

Aborted

How can I solve these issues? Could they also be a problem when porting this program to an FPGA?

Thank you in advance!


Hi,

Thank you for posting in Intel Communities.

Have you tried performing the RanksNext calculation with the int data type inside parallel_for?

We compiled your source code and did not face any issues. Could you please provide print_vector.h and the runtime arguments you were passing?

Thanks and Regards,

Pendyala Sesha Srinivas


Here's print_vector.h:

#include <iostream>

#include <vector>

template <typename T>

void printVector(const std::vector<T>& vector_like_var){

for(int i = 0; i < vector_like_var.size(); i++){

std::cout<< "element " <<i+1 << " of vector:\t" << vector_like_var[i] <<std::endl;

}

}

And here's the command line I used:

./<executable_name> 2 <csv_path> 3e-5 0.85 0

I can't share the CSV files because they are quite large, but any CSV containing a list of node pairs should work.
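A side note on how these arguments are read in the listing above: `atoi` and `atof` never throw (on malformed input they return 0), so the `try`/`catch` around `atoi(argv[5])` can never trigger. If a fallback for a bad verbose flag is wanted, `std::stoi` does throw `std::invalid_argument` or `std::out_of_range`. A minimal sketch; `parse_verbose` is a hypothetical helper, not part of the original program.

```cpp
#include <cassert>
#include <stdexcept>
#include <string>

// Hypothetical helper: parse the verbose flag, falling back to 0 when the
// argument is not a valid int. Unlike atoi, std::stoi reports errors by throwing.
int parse_verbose(const char* arg) {
    assert(arg != nullptr);  // argv entries below argc are never null
    try {
        return std::stoi(arg);
    } catch (const std::invalid_argument&) {
        return 0;  // no digits at all, e.g. "abc"
    } catch (const std::out_of_range&) {
        return 0;  // value does not fit in an int
    }
}
```

Called as `int verbose = parse_verbose(argv[5]);`, this would replace the `try`/`catch` block around `atoi`.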


Hi,

Could you please try doing the whole calculation in the kernel with the int data type and let us know the results?

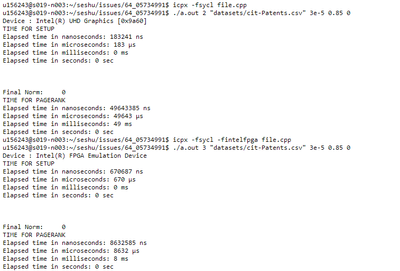

I am able to run the executable with a sample CSV file on GPU and FPGA after changing the source file. Please see the screenshot above for details.

If this does not resolve your issue, could you please send a sample CSV file? It would greatly help our investigation.

Thanks and Regards,

Pendyala Sesha Srinivas


Hi! Thanks for the reply. Unfortunately, the algorithm belongs to a problem domain where I'm constrained to non-integer data types.

I managed to shrink the dataset a little. It's just a collection of graph edges, each described by a pair of nodes separated by a comma (",").
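For anyone trying to reproduce this without the original files: the format described here (one edge per line, two node IDs separated by a comma) can be read into the flattened layout the kernel indexes, with the source at `Edges[j]` and the destination at `Edges[j + 1]`, using only the standard library. This is a minimal sketch, not the author's `Read_graph`/`flatten` helpers (which are not shown in the thread); `read_flat_edges` is hypothetical, and it assumes there is no header row and that node IDs are integers.

```cpp
#include <cassert>
#include <istream>
#include <sstream>
#include <string>
#include <vector>

// Hypothetical reader: parses lines of the form "src,dst" and returns the flat
// vector {src0, dst0, src1, dst1, ...}. Blank or malformed lines are skipped.
std::vector<int> read_flat_edges(std::istream& in) {
    std::vector<int> flat;
    std::string line;
    while (std::getline(in, line)) {
        if (line.empty()) continue;
        std::istringstream fields(line);
        int src = 0, dst = 0;
        char comma = 0;
        if (fields >> src >> comma >> dst && comma == ',') {
            flat.push_back(src);
            flat.push_back(dst);
        }
    }
    assert(flat.size() % 2 == 0);  // always whole (src, dst) pairs
    return flat;
}
```

With a file, the same function works on `std::ifstream csv(csv_path);`.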

Anyway, just to try it, I compiled using int data types and still got the same results.

Here are the error logs when running on the GPUs:

<myUser>@s019-n010:~/HPCA_FINAL_PROJECT$ qsub -I -l nodes=s001-n234:ppn=2 -d .

qsub: waiting for job 2194177.v-qsvr-1.aidevcloud to start

qsub: job 2194177.v-qsvr-1.aidevcloud ready

########################################################################

# Date: Tue 14 Feb 2023 02:21:49 PM PST

# Job ID: 2194177.v-qsvr-1.aidevcloud

# User: <myUser>

# Resources: cput=75:00:00,neednodes=s001-n234:ppn=2,nodes=s001-n234:ppn=2,walltime=06:00:00

########################################################################

<myUser>@s001-n234:~/HPCA_FINAL_PROJECT$ ./BUFFER_PageRank 2 "datasets/cit-Patents.csv" 3e-05 0.85 0

Device : Intel(R) UHD Graphics P630 [0x3e96]

TIME FOR SETUP

Elapsed time in nanoseconds: 9587884043 ns

Elapsed time in microseconds: 9587884 µs

Elapsed time in milliseconds: 9587 ms

Elapsed time in seconds: 9 sec

terminate called after throwing an instance of 'sycl::_V1::runtime_error'

what(): Native API failed. Native API returns: -1 (PI_ERROR_DEVICE_NOT_FOUND) -1 (PI_ERROR_DEVICE_NOT_FOUND)

Aborted

The run above was on a node with Gen9 GPUs, which I picked as the first available one listed by the command pbsnodes | grep -B4 gpu.

The run below is what I get with the usual qsub -I -l nodes=1:gpu:ppn=2 -d .

<myUser>@s019-n010:~/HPCA_FINAL_PROJECT$ ./BUFFER_PageRank 2 "datasets/cit-Patents.csv" 3e-05 0.85 0

Device : Intel(R) UHD Graphics [0x9a60]

TIME FOR SETUP

Elapsed time in nanoseconds: 6535755467 ns

Elapsed time in microseconds: 6535755 µs

Elapsed time in milliseconds: 6535 ms

Elapsed time in seconds: 6 sec

terminate called after throwing an instance of 'sycl::_V1::compile_program_error'

what(): The program was built for 1 devices

Build program log for 'Intel(R) UHD Graphics [0x9a60]':

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for sycl::_V1::detail::RoundedRangeKernel<sycl::_V1::item<1, true>, 1, main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)>'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

error: Double type is not supported on this platform.

in kernel: 'typeinfo name for main::'lambda'(sycl::_V1::handler&)::operator()(sycl::_V1::handler&) const::'lambda'(sycl::_V1::id<1>)'

error: backend compiler failed build.

-11 (PI_ERROR_BUILD_PROGRAM_FAILURE)

Aborted

Hi,

Please find below the modified source file, which compiled and ran without errors on CPU, GPU, and FPGA.

#include <sycl/sycl.hpp>

#include <sycl/ext/intel/fpga_extensions.hpp>

#include <cmath>

#include <chrono>

#include <iostream>

#include <vector>

#include "guideline.h"

#include "print_vector.h"

#include "print_time.h"

#include "read_graph.h"

#include "flatVector.h"

using namespace sycl;

using namespace std;

int main(int argc, char* argv[])

{

// Check Command Line

if(argc < 6)

{

// NOT ENOUGH PARAMS BY COMMAND LINE -> PROGRAM HALTS

guideline();

}

else{

// Command Line parsing

int device_selected = atoi(argv[1]);

std::string csv_path = argv[2];

float threshold = atof(argv[3]);

float damping = atof(argv[4]);

int verbose;

try{verbose = atoi(argv[5]);}

catch (std::exception const& e) {verbose = 0;}

device d;

// Select the acceleration platform

if(device_selected == 1)

{

d = device(cpu_selector_v); //# cpu_selector returns a cpu device

}

if(device_selected == 2)

{

try

{

d = device(gpu_selector_v); //# gpu_selector returns a gpu device

} catch (std::exception const& e)

{

std::cout << "Cannot select a GPU\n" << e.what() << "\n";

std::cout << "Using a CPU device\n";

d = device(cpu_selector_v); //# cpu_selector returns a cpu device

}

}

if(device_selected == 3)

{

d = device(ext::intel::fpga_emulator_selector());

}

queue q(d);

std::cout << "Device : " << q.get_device().get_info<info::device::name>() << "\n"; // print the selected device

// Reading and setup Time Calculation

auto start_setup = std::chrono::steady_clock::now();

// Graph Retrieval by csv file

std::vector<std::vector<int>> graph = Read_graph(csv_path);/*Sparse Matrix Representation with the description of each Edge of the Graph*/

std::vector<int> flatGraph = flatten<int>(graph);

// Calculation of the # Nodes

int numNodes = countNodes(graph);

// Calculation of the Degree of each node

std::vector<int> degreesNodes = getDegrees(graph, numNodes+1);

auto end_setup = std::chrono::steady_clock::now();

// Setup Execution Time print

std::cout << "TIME FOR SETUP" << "\n";

print_time(start_setup, end_setup);

// Check Print

//printVector<int>(degreesNodes);

//Creation of Initial and Final Ranks' vectors of PageRank [R(t); R(t+1)]

std::vector<float> ranks_t(numNodes, (float)(1.0/ (float)(numNodes)));

std::vector<float> ranks_t_plus_one(numNodes, 0.0);

std::vector<float> ranksDifferences(numNodes, 0.0);

// PageRank Execution Time calculation

auto start = std::chrono::steady_clock::now();

buffer<int> bufferEdges(flatGraph.data(),flatGraph.size());

buffer<float> bufferRanks(ranks_t.data(),ranks_t.size());

buffer<int> bufferDegrees(degreesNodes.data(),degreesNodes.size());

buffer<float> bufferRanksNext(ranks_t_plus_one.data(),ranks_t_plus_one.size());

buffer<float> bufferRanksDifferences(ranksDifferences.data(),ranksDifferences.size());

float distance = threshold + 1;

int graph_size = flatGraph.size();

std::cout<<"graph_size = "<<graph_size<<"\n";

std::cout<<"damping = "<<damping<<"\n";

std::cout<<"numNodes = "<<numNodes<<"\n";

int T = 1;

while (distance > threshold) {

q.submit([&](handler &h){

accessor Edges(bufferEdges,h,read_only);

accessor Ranks(bufferRanks,h,read_only);

accessor Degrees(bufferDegrees,h,read_only);

accessor RanksNext(bufferRanksNext,h,read_write);

accessor RanksDifferences(bufferRanksDifferences,h,read_write);

auto out = stream(1024, 256, h);

h.parallel_for(range(numNodes), [=](auto i)

{

RanksNext[i] = (1 - damping) / numNodes;

int index_node_i;

int index_node_j;

for (int j = 0; j<graph_size;j+=2)

{

index_node_i = j;

index_node_j = j + 1;

if (Edges[index_node_j] == i)

{

RanksNext[i] = RanksNext[i] + damping * Ranks[Edges[index_node_i]] / Degrees[Edges[index_node_i]];

}

}

RanksDifferences[i] = (RanksNext[i] - Ranks[i]) * (RanksNext[i] - Ranks[i]);

});

}).wait();

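distance = 0.0;

{

// Host accessors wait for the kernel and make its results visible on the host;
// the std::vectors backing the buffers are only guaranteed to be updated when the buffers are destroyed.

host_accessor Differences(bufferRanksDifferences, read_only);

host_accessor Next(bufferRanksNext, read_only);

host_accessor Current(bufferRanks, write_only);

for (int i = 0; i < numNodes; i++)

{

distance = distance + Differences[i];

Current[i] = Next[i]; // R(t) <- R(t+1) for the next iteration

}

}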

distance = sqrt(distance);

std::cout<< "Time:\t" << T << "\tEuclidean Distance:\t" << distance << std::endl;

T=T+1;

}

auto end = std::chrono::steady_clock::now();

// PageRank Results Printing

if(verbose == 1){

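host_accessor FinalRanks(bufferRanks, read_only); // ranks_t is only guaranteed to be updated when bufferRanks is destroyed

for(int i = 0;i<numNodes;i++){

std::cout<<"Final Vector " << i<< "-th component:\t"<<FinalRanks[i]<<std::endl;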

}

}

std::cout<<std::endl<<std::endl<<std::endl;

std::cout<<"Final Norm:\t"<<distance<<std::endl;

// PageRank Execution Time Printing

std::cout << "TIME FOR PAGERANK" << "\n";

print_time(start, end);

}

return 0;

}
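To check device results (for instance when GPU and CPU outputs differ, as reported later in the thread), the per-iteration update in this kernel can be mirrored by a small sequential C++ reference. This is a sketch under stated assumptions: a flat edge list `{src, dst, ...}` as in the kernel, out-degrees indexed by node ID, and node IDs in `[0, numNodes)`. `pagerank_step` and `l2_distance` are hypothetical helpers, and they reproduce the kernel's formula rather than a full PageRank with dangling-node handling.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One PageRank step with the same formula as the kernel:
//   next[i] = (1 - damping) / n + damping * sum over edges (src -> i) of ranks[src] / degrees[src]
// edges is flat: edges[j] = source, edges[j + 1] = destination.
std::vector<float> pagerank_step(const std::vector<int>& edges,
                                 const std::vector<float>& ranks,
                                 const std::vector<int>& degrees,
                                 float damping) {
    const int n = static_cast<int>(ranks.size());
    std::vector<float> next(ranks.size(), (1.0f - damping) / n);
    for (std::size_t j = 0; j + 1 < edges.size(); j += 2) {
        const int src = edges[j];
        const int dst = edges[j + 1];
        assert(degrees[src] > 0);  // a node with an out-edge has out-degree >= 1
        next[dst] += damping * ranks[src] / degrees[src];
    }
    return next;
}

// Euclidean distance between two rank vectors, used as the convergence test.
float l2_distance(const std::vector<float>& a, const std::vector<float>& b) {
    assert(a.size() == b.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const float d = a[i] - b[i];
        sum += d * d;
    }
    return std::sqrt(sum);
}
```

Iterating over the edges once (instead of scanning every edge for every node, as the kernel does) gives the same sums for each node, in the same edge order; small float differences against a device run can still appear, since the device compiler may contract or reorder operations.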

Hope this resolves your issue.

Thanks and Regards,

Pendyala Sesha Srinivas


Hi, thanks for the reply.

I just tried it on a GPU and still got the same error as above:

terminate called after throwing an instance of 'sycl::_V1::runtime_error'

what(): Native API failed. Native API returns: -1 (PI_ERROR_DEVICE_NOT_FOUND) -1 (PI_ERROR_DEVICE_NOT_FOUND)

Aborted

I think I may be doing something wrong at compile time.

Could you share the exact commands you ran in the terminal, so I can check whether I'm doing things correctly?

Here's what I do:

If I'm working from a terminal:

ssh devcloud

Otherwise, if I'm working in a DevCloud Jupyter notebook:

qsub -I

cd <FolderProject>

icpx -fsycl main.cpp -o BUFFER_PageRank

*FOR CPU*

./BUFFER_PageRank 1 "<datasetPath>" 3e-05 0.85 0

*FOR GPU*

qsub -I -l nodes=1:gpu:ppn=2 -d .

./BUFFER_PageRank 2 "<datasetPath>" 3e-05 0.85 0

*FOR FPGA*

qsub -I -l nodes=1:fpga_compile:ppn=2 -d .

./BUFFER_PageRank 3 "<datasetPath>" 3e-05 0.85 0

So, to clarify: I compile once in the CPU job, and then use the same executable for GPU and FPGA as well.

Am I doing this wrong?


Hi,

After connecting to the DevCloud, you can access a node that has an Intel CPU device.

To compile the source file, you can use the command below:

icpx -fsycl main.cpp

To access a node with an Intel GPU device and compile the source file there, you can use the commands below:

qsub -I -l nodes=1:gpu:ppn=2 -d .

icpx -fsycl main.cpp

To access a node with an Intel FPGA device and compile the source file there, you can use the commands below:

qsub -I -l nodes=1:fpga:ppn=2 -d .

icpx -fsycl -fintelfpga main.cpp

The executables generated on the GPU node and on the FPGA node will be different.

Hope this resolves your issue.

Thanks and Regards,

Pendyala Sesha Srinivas


Hi,

Has the information above helped? If so, could you please confirm whether we can close this thread from our end?

Thanks and Regards,

Pendyala Sesha Srinivas


Hi!

Actually, no. I already tried every combination of the commands you gave me on the DevCloud, and I couldn't run on either the GPU or the FPGA.

Anyway, I found a way to run some experiments locally on other GPUs, and it seems to work, even though the results differ from the ones I obtained on the CPUs (I will open a separate thread about that and close this one).

I think the problem in my case is that some of the drivers, environment, or configuration of the GPUs/oneAPI I use locally differ from the ones on the DevCloud.

Is there a way to check the environment and GPU specs?

Thanks!


Hi,

Please find below the modified source file, which I was able to compile and run without errors on CPU, GPU, and FPGA.

#include <sycl/sycl.hpp>

#include <sycl/ext/intel/fpga_extensions.hpp>

#include <cmath>

#include <chrono>

#include <iostream>

#include <vector>

#include "guideline.h"

#include "print_vector.h"

#include "print_time.h"

#include "read_graph.h"

#include "flatVector.h"

using namespace sycl;

using namespace std;

int main(int argc, char* argv[])

{

// Check Command Line

if(argc < 6)

{

// NOT ENOUGH PARAMS BY COMMAND LINE -> PROGRAM HALTS

guideline();

}

else

{

// Command Line parsing

int device_selected = atoi(argv[1]);

std::string csv_path = argv[2];

float threshold = atof(argv[3]);

float damping = atof(argv[4]);

int verbose;

try{verbose = atoi(argv[5]);}

catch (std::exception const& e) {verbose = 0;}

device d;

// Select the acceleration platform

if(device_selected == 1)

{

d = device(cpu_selector_v); //# cpu_selector returns a cpu device

}

if(device_selected == 2){

try

{

d = device(gpu_selector_v); //# gpu_selector returns a gpu device

} catch (std::exception const& e) {

std::cout << "Cannot select a GPU\n" << e.what() << "\n";

std::cout << "Using a CPU device\n";

d = device(cpu_selector_v); //# cpu_selector returns a cpu device

}

}

if(device_selected == 3){

d = device(ext::intel::fpga_emulator_selector());

}

queue q(d);

std::cout << "Device : " << q.get_device().get_info<info::device::name>() << "\n"; // print the selected device

        // Reading and setup time measurement
        auto start_setup = std::chrono::steady_clock::now();
        // Read the graph from the CSV file
        std::vector<std::vector<int>> graph = Read_graph(csv_path); /* Sparse matrix representation: one entry per edge of the graph */
        //std::vector<int> flatGraph = flatten<int>(graph,numNodes);
        // Number of nodes
        int numNodes = countNodes(graph);
        std::vector<int> flatGraph = flatten<int>(graph);
        // Degree of each node
        std::vector<int> degreesNodes = getDegrees(graph, numNodes+1);
        auto end_setup = std::chrono::steady_clock::now();
        // Print the setup execution time
        std::cout << "TIME FOR SETUP" << "\n";
        print_time(start_setup, end_setup);
        // Debug print
        //printVector<int>(degreesNodes);
        // Current and next rank vectors of PageRank [R(t); R(t+1)]
        std::vector<float> ranks_t(numNodes, 1.0f / static_cast<float>(numNodes));
        std::vector<float> ranks_t_plus_one(numNodes, 0.0f);
        std::vector<float> ranksDifferences(numNodes, 0.0f);
        // PageRank execution time measurement
        auto start = std::chrono::steady_clock::now();
        buffer<int> bufferEdges(flatGraph.data(), flatGraph.size());
        buffer<float> bufferRanks(ranks_t.data(), ranks_t.size());
        buffer<int> bufferDegrees(degreesNodes.data(), degreesNodes.size());
        buffer<float> bufferRanksNext(ranks_t_plus_one.data(), ranks_t_plus_one.size());
        buffer<float> bufferRanksDifferences(ranksDifferences.data(), ranksDifferences.size());
        float distance = threshold + 1;
        int graph_size = flatGraph.size();
        std::cout << "graph_size = " << graph_size << "\n";
        std::cout << "damping = " << damping << "\n";
        std::cout << "numNodes = " << numNodes << "\n";
        int T = 1;

        while (distance > threshold) {
            q.submit([&](handler &h){
                accessor Edges(bufferEdges, h, read_only);
                accessor Ranks(bufferRanks, h, read_only);
                accessor Degrees(bufferDegrees, h, read_only);
                accessor RanksNext(bufferRanksNext, h, write_only, no_init);
                accessor RanksDifferences(bufferRanksDifferences, h, write_only, no_init);
                h.parallel_for(range(numNodes), [=](id<1> idx){
                    const int i = static_cast<int>(idx[0]);
                    // Accumulate in a private variable instead of global memory
                    float rank = (1.0f - damping) / numNodes;
                    // Edges holds (source, destination) pairs: add the contribution of every edge ending at i
                    for (int j = 0; j < graph_size; j += 2) {
                        if (Edges[j + 1] == i) {
                            rank += damping * Ranks[Edges[j]] / Degrees[Edges[j]];
                        }
                    }
                    RanksNext[i] = rank;
                    RanksDifferences[i] = (rank - Ranks[i]) * (rank - Ranks[i]);
                });
            }).wait();
            // While the buffers are alive they own ranks_t and ranksDifferences,
            // so read and update the data through host accessors.
            distance = 0.0f;
            {
                host_accessor diffsHost(bufferRanksDifferences, read_only);
                host_accessor nextHost(bufferRanksNext, read_only);
                host_accessor ranksHost(bufferRanks, write_only);
                for (int i = 0; i < numNodes; i++) {
                    distance += diffsHost[i];
                    ranksHost[i] = nextHost[i]; // R(t) <- R(t+1)
                }
            } // host accessors are released here, before the next submit
            distance = sqrt(distance);
            std::cout << "Time:\t" << T << "\tEuclidean Distance:\t" << distance << std::endl;
            T++;
        }

        auto end = std::chrono::steady_clock::now();
        // PageRank results printing
        if (verbose == 1) {
            // ranks_t is still owned by bufferRanks here, so read it through a host accessor
            host_accessor finalRanks(bufferRanks, read_only);
            for (int i = 0; i < numNodes; i++) {
                std::cout << "Final Vector " << i << "-th component:\t" << finalRanks[i] << std::endl;
            }
        }
        std::cout << std::endl << std::endl << std::endl;
        std::cout << "Final Norm:\t" << distance << std::endl;
        // PageRank execution time printing
        std::cout << "TIME FOR PAGERANK" << "\n";
        print_time(start, end);
    }
    return 0;
}

Please find below the modified dataset and the steps we followed.

For CPU:

qsub -I

cd <Path/to/project>

icpx -fsycl main.cpp -o cpu_out.exe

./cpu_out.exe 1 "datasets/cit-Patents.csv" 3e-5 0.85 0

For GPU:

qsub -I -l nodes=1:gpu:ppn=2 -d .

cd <Path/to/project>

icpx -fsycl main.cpp -o gpu_out.exe

./gpu_out.exe 2 "datasets/cit-Patents.csv" 3e-5 0.85 0

For FPGA:

qsub -I -l nodes=1:fpga:ppn=2 -d .

cd <Path/to/project>

icpx -fsycl -fintelfpga main.cpp -o fpga_out.exe

./fpga_out.exe 3 "datasets/cit-Patents.csv" 3e-5 0.85 0
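To compare the final ranks produced by the CPU, GPU, and FPGA runs above, a plain C++ reference of the same update can help. Each step computes R_{t+1}(i) = (1 - d)/N + d * sum over edges (j -> i) of R_t(j) / deg(j), then the Euclidean distance between R_{t+1} and R_t, which is the quantity the program compares against its threshold. The helpers pagerank_step and pagerank_reference are hypothetical, not part of the posted program. They assume the flattened edge list stores (source, destination) pairs, as the kernel's Edges[j] / Edges[j + 1] indexing implies, that node ids run from 0 to N - 1, and that degrees[v] is the out-degree of node v.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical serial reference for one PageRank step on a flattened edge
// list edges = {src0, dst0, src1, dst1, ...}. `ranks` is R(t); `next`
// receives R(t+1). Returns the Euclidean distance ||R(t+1) - R(t)||.
float pagerank_step(const std::vector<int>& edges,
                    const std::vector<int>& degrees,
                    const std::vector<float>& ranks,
                    std::vector<float>& next, float damping) {
  const std::size_t n = ranks.size();
  next.assign(n, (1.0f - damping) / static_cast<float>(n));
  // One pass over the edge list, instead of one scan of all edges per node.
  for (std::size_t e = 0; e + 1 < edges.size(); e += 2) {
    const int src = edges[e];
    const int dst = edges[e + 1];
    next[dst] += damping * ranks[src] / static_cast<float>(degrees[src]);
  }
  float sum = 0.0f;
  for (std::size_t i = 0; i < n; ++i) {
    const float diff = next[i] - ranks[i];
    sum += diff * diff;
  }
  return std::sqrt(sum);
}

// Starts from the uniform vector 1/N, as the posted program does, and
// iterates until the distance is <= threshold. Returns the final ranks.
std::vector<float> pagerank_reference(const std::vector<int>& edges,
                                      const std::vector<int>& degrees,
                                      int num_nodes, float damping,
                                      float threshold) {
  std::vector<float> ranks(static_cast<std::size_t>(num_nodes),
                           1.0f / static_cast<float>(num_nodes));
  std::vector<float> next;
  float distance = threshold + 1.0f;
  while (distance > threshold) {
    distance = pagerank_step(edges, degrees, ranks, next, damping);
    ranks.swap(next);  // R(t) <- R(t+1)
  }
  return ranks;
}
```

Because the sums are accumulated in a different order than in the kernel, expect small floating-point differences and compare with a tolerance rather than exact equality. As a quick check, on the two-node cycle {0, 1, 1, 0} with degrees {1, 1} and damping 0.85, both ranks stay at 0.5.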

To view the environment variables, type "export" at the command line; with no arguments it lists all exported environment variables in the current shell.

To get information about the available devices, enter the following command:

clinfo

Please run the following commands, which display the GPU specifications of the system:

lspci | grep -i vga

lshw -C display

Please let us know if you face any issues in the Intel DevCloud.

Thanks and Regards,

Pendyala Sesha Srinivas


Hi,

Has the information provided above helped? If yes, could you please confirm whether we can close this thread from our end?

Thanks and Regards,

Pendyala Sesha Srinivas


Hi,

We assume that your issue is resolved. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks and Regards,

Pendyala Sesha Srinivas


Hello,

Was a solution to this problem ever found? I also encounter this when running any of the FPGA examples on DevCloud:

./vector-add-buffers.fpga

Running on device: pac_a10 : Intel PAC Platform (pac_eb00000)

Vector size: 10000

An exception is caught for vector add.

terminate called after throwing an instance of 'sycl::_V1::runtime_error'

what(): Invalid device program image: size is zero -30 (PI_ERROR_INVALID_VALUE)

Aborted (core dumped)

Thanks,

Mike


Update: for me, the problem turned out to be in the compilation step. I had been specifying -DFPGA_DEVICE=Arria10, but this is the correct way to do it:

cmake .. -DFPGA_DEVICE=/opt/intel/oneapi/intel_a10gx_pac:pac_a10

-Mike
