
I am trying to develop an MPI + DPC++ code for a large-scale simulation. The problem can be summarized as follows: I want to declare the data sizes and allocate the data memory inside my class constructor, and then use that data in the member functions of the class. I then realized that I have to give the buffer a constant size if I want to use an accessor, but with MPI each rank holds an array of a different size. I also found that I cannot use shared memory either, because in DPC++ I cannot use the `this` pointer, so the array or matrix allocated in the class cannot be used in a member function. I am confused and have no idea how to solve this.

The code looks like this:

class abc{

queue Q{};

std::array<int, constsize> e;

std::array<double, constsize>t;

abc(){

ua = malloc_shared<double>(local_size, this->Q);

}

void b();

}

void abc::b(){

for(int i=0;i<constsize;i++){

e[i]=i;

t[i]=2*i;

}

buffer<int> ee{e};

buffer<double> tt{t};

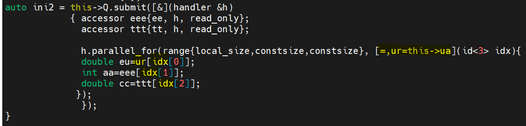

auto ini2 = this->Q.submit([&](handler &h)

{ accessor eee{ee, h, read_only};

accessor ttt{tt, h, read_only};

h.parallel_for(range{size1, size2, size3}, [=](id<3> idx)

double eu=ua[id[0]];

int aa=eee[id[1]];

double cc=ttt[id[2]];

}

}

`e` and `t` can be accessed because they have a constant size, so I can wrap them in buffers. But `ua` has the size `local_size`, which depends on MPI, so I cannot use a buffer for it, and the shared memory also cannot be used inside the member function.

Any help with this?




Hi,

Thanks for posting in Intel Communities.

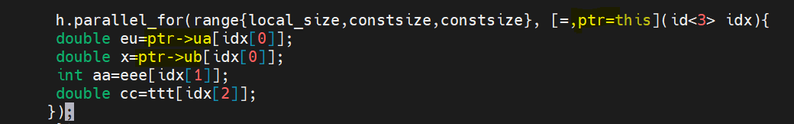

We cannot use the 'this' pointer directly inside a kernel. However, we can pass it as an argument inside the capture clause of the lambda function as shown in the below screenshot:

Please find attached the code, which works fine on our end. Could you please let us know if you are still facing any issues?

Thanks & Regards,

Varsha


Thank you for your reply; with this help, the code works well. Now I have many parameters that come from this class, and they are all needed in the kernel function. Is it possible to use `[=, this]` instead of `[=, par1=this->p1, par2=this->p2, par3=this->p3, ..., parn=this->pn]` to solve this problem?

Best.


But I got the error:

```text
PI CUDA ERROR:
    Value:           700
    Name:            CUDA_ERROR_ILLEGAL_ADDRESS
    Description:     an illegal memory access was encountered
    Function:        wait
    Source Location: /home/gordon/Projects/oneAPICore/DPCPP/llvm/sycl/plugins/cuda/pi_cuda.cpp:449
```

Chunheng.


Is it just a warning, or is the code block not executing at all?

This might also be an addressing issue.

This Stack Overflow question might help you:

https://stackoverflow.com/questions/27277365/unspecified-launch-failure-on-memcpy/27278218#27278218


Yes, but it then seems that `ua` is not imported into the kernel correctly, and it has nothing to do with my allocation.

Chunheng.


Hi,

Could you please provide us with the OS details and the GPU details on which you are running the code?

Also, could you please let us know which versions of DPC++ and Intel oneAPI you are using?

Thanks & Regards,

Varsha


I run my code on ThetaGPU.

The system information is: #101-Ubuntu SMP Fri Oct 15 20:00:55 UTC 2021

The GPU I use is: Selected device:NVIDIA A100-SXM4-40GB

I am not sure about the DPC++ or oneAPI version, but it is the build for Ubuntu 18.04.

Chunheng.


Hi,

Thanks for providing the details.

Intel oneAPI DPC++ can be used only with Intel GEN9 and higher GPUs.

Here is the link for Intel® oneAPI DPC++/C++ Compiler System Requirements:

If you want to use the DPC++ compiler with an NVIDIA GPU, you can use the open-source DPC++ compiler.

Please refer to the link below for the open-source oneAPI DPC++ compiler:

https://intel.github.io/llvm-docs/GetStartedGuide.html

These forums are intended to support queries related to Intel products, so we do not provide support for NVIDIA GPUs here. You can get support for non-Intel GPUs by raising a query at: https://github.com/intel/llvm/issues

So, can we go ahead and close this thread?

Thanks & Regards,

Varsha


Hi,

Thanks for accepting our solution. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks & Regards,

Varsha
