Hi,

We have an Intel® Core™ i9-7900X CPU @ 3.30GHz × 20 workstation running Ubuntu 20.04.

I have installed Intel oneAPI 2022.0.2.

I am trying to build an Open MPI library that works with this Intel oneAPI installation.

In the past I have built separate Open MPI libraries for the GCC compilers and for the Intel compilers.

This time it is different: it fails in the build (make) step itself.

I have this setup in my .bashrc:

source /opt/intel/oneapi/setvars.sh

export F77=ifort

export FC=ifort

export F90=ifort

export CC=icc

export CXX=icpc

export PATH=$PATH:/opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64:/opt/intel_openmpi/bin

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/intel_openmpi/lib:opt/intel/oneapi/compiler/2022.0.2/linux/compiler/lib/intel64_lin

export LD_RUN_PATH=$LD_RUN_PATH:/opt/intel_openmpi/lib
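In the setup above, the last LD_LIBRARY_PATH entry is written as `opt/intel/oneapi/...` without a leading `/`. The dynamic loader treats such an entry as relative to the current working directory, so it only points at the intended directory when the command happens to run from `/`. A small bash helper (the name `find_relative_entries` is made up for illustration) can list relative or empty entries in any colon-separated search path:

```bash
#!/usr/bin/env bash
# List entries of a colon-separated search path (e.g. LD_LIBRARY_PATH) that
# are not absolute. Empty entries are reported as "<empty>" because glibc's
# loader also treats them like the current directory.
find_relative_entries() {
  local -a parts
  local entry
  # Split on ':' only; read -a avoids glob expansion of the entries.
  IFS=':' read -r -a parts <<< "$1"
  for entry in "${parts[@]}"; do
    if [[ -z "$entry" || "$entry" != /* ]]; then
      printf '%s\n' "${entry:-<empty>}"
    fi
  done
}
```

Usage: `find_relative_entries "$LD_LIBRARY_PATH"` prints nothing when every entry is an absolute path.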

I run configure from a build directory inside the unpacked Open MPI source directory:

$ ../configure -prefix=/opt/intel_openmpi CC=icc CXX=icpc F77=ifort FC=ifort

Then I run:

$ sudo make all install

It fails with the following error message:

Making all in config

make[1]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/config'

make[1]: Nothing to be done for 'all'.

make[1]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/config'

Making all in contrib

make[1]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/contrib'

make[1]: Nothing to be done for 'all'.

make[1]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/contrib'

Making all in opal

make[1]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal'

Making all in include

make[2]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal/include'

make all-am

make[3]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal/include'

make[3]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal/include'

make[2]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal/include'

Making all in datatype

make[2]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal/datatype'

CC libdatatype_reliable_la-opal_datatype_pack.lo

../../libtool: line 1766: icc: command not found

make[2]: *** [Makefile:1916: libdatatype_reliable_la-opal_datatype_pack.lo] Error 1

make[2]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal/datatype'

make[1]: *** [Makefile:2383: all-recursive] Error 1

make[1]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/opal'

make: *** [Makefile:1901: all-recursive] Error 1

The specific cause: libtool can't find icc.

icc is definitely on my PATH, but I still keep getting this error.
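A likely cause is the `sudo` in `sudo make all install`. On a default Ubuntu install, `/etc/sudoers` sets `secure_path`, so commands run through `sudo` get a fixed PATH instead of the one prepared by setvars.sh, and `icc` is no longer found. The bash sketch below only simulates that effect with a throwaway fake `icc`; the `SUDO_SECURE_PATH` value is copied from Ubuntu's default sudoers and is an assumption about this particular machine:

```bash
#!/usr/bin/env bash
# Assumption: Ubuntu's default "Defaults secure_path=..." line in /etc/sudoers.
SUDO_SECURE_PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/snap/bin"

# Succeed if command $2 would be found with PATH set to $1.
# The subshell keeps the caller's PATH intact; assigning PATH in bash
# also clears the command hash table, so no stale lookup is reused.
resolves_under() {
  ( PATH=$1; command -v "$2" >/dev/null 2>&1 )
}

# Demo: a temporary directory with a fake "icc" stands in for the oneAPI bin dir.
demo_bin=$(mktemp -d)
printf '#!/bin/sh\necho "fake icc"\n' > "$demo_bin/icc"
chmod +x "$demo_bin/icc"

resolves_under "$demo_bin:$PATH" icc && echo "user shell (after setvars.sh): icc found"
resolves_under "$SUDO_SECURE_PATH" icc || echo "under sudo's secure_path: icc not found"
rm -rf "$demo_bin"
```

If this is the cause, a common workaround is to run `make all` as your normal user and use `sudo` only for the install step, passing the environment through explicitly, e.g. `sudo env "PATH=$PATH" "LD_LIBRARY_PATH=$LD_LIBRARY_PATH" make install`. Installing to a prefix you own avoids `sudo` altogether.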

Any help will be appreciated.

I have legacy code that is configured to be built with the Intel + Open MPI combination.

Regards,

Keyur

---

Hi,

Thanks for reaching out to us.

Could you please let us know whether you can get the icc version after sourcing the Intel oneAPI environment (source /opt/intel/oneapi/setvars.sh)?

You can check the version with:

icc --version

Could you also try giving the complete path for all the compilers at configure time?

./configure --prefix=<path> CC=/opt/intel/oneapi/compiler/<version>/linux/bin/intel64/icc CXX=/opt/intel/oneapi/compiler/<version>/linux/bin/intel64/icpc F77=/opt/intel/oneapi/compiler/<version>/linux/bin/intel64/ifort FC=/opt/intel/oneapi/compiler/<version>/linux/bin/intel64/ifort

After configuring, please run "make all":

make all

Once "make all" has finished, run "make all install":

make all install

Could you please try this and let us know if you are still facing any issues?

Thanks & Regards,

Varsha

---

Hello Varsha,

Thanks for responding. I think I got a bit further, but I am now facing a different kind of error.

icc reports version 2021.5.0:

$ icc --version

icc (ICC) 2021.5.0 20211109

Copyright (C) 1985-2021 Intel Corporation. All rights reserved.

but under /opt/intel/oneapi/compiler there is a version directory 2022.0.2 and a symlink named latest:

$ ls /opt/intel/oneapi/compiler

2022.0.2 latest

There is no 2021.5.0 directory under /opt/intel/oneapi/compiler, so configuring with the 2021.5.0 paths fails:

$ ../configure -prefix=/opt/intel_openmpi CC=/opt/intel/oneapi/compiler/2021.5.0/linux/bin/intel64/icc CXX=/opt/intel/oneapi/compiler/2015.5.0/linux/bin/intel64/icpc F77=/opt/intel/oneapi/compiler/2021.5.0/linux/bin/intel64/ifort FC=/opt/intel/oneapi/compiler/2021.5.0/linux/bin/intel64/ifort 2>&1 |tee config.out

checking for perl... perl

============================================================================

== Configuring Open MPI

============================================================================

*** Startup tests

checking build system type... x86_64-pc-linux-gnu

checking host system type... x86_64-pc-linux-gnu

checking target system type... x86_64-pc-linux-gnu

checking for gcc... /opt/intel/oneapi/compiler/2021.5.0/linux/bin/intel64/icc

checking whether the C compiler works... no

configure: error: in `/home/smec17045/setup/openmpi-4.1.2/build_intel':

configure: error: C compiler cannot create executables

See `config.log' for more details

Configuring with the following worked:

../configure -prefix=/opt/intel_openmpi CC=/opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64/icc CXX=/opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64/icpc F77=/opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64/ifort FC=/opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64/ifort 2>&1 |tee config.out
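The failed attempt above used the version printed by `icc --version` (2021.5.0) as the directory name, while the compilers on this system live under `2022.0.2`; the compiler version and the oneAPI directory name need not match. Assuming setvars.sh has already been sourced, one way to avoid guessing is to take the absolute paths of whatever `icc`, `icpc`, and `ifort` are on PATH. The helper names below are hypothetical, and the prefix is the one used in this thread:

```bash
#!/usr/bin/env bash
# Print the absolute path of a command found on PATH; fail with a message otherwise.
abs_tool() {
  local p
  p=$(command -v "$1") || { echo "abs_tool: '$1' is not on PATH" >&2; return 1; }
  printf '%s\n' "$p"
}

# Emit one configure argument per line, using the compilers currently on PATH
# (run after: source /opt/intel/oneapi/setvars.sh).
configure_args() {
  local cc cxx fc
  cc=$(abs_tool icc) && cxx=$(abs_tool icpc) && fc=$(abs_tool ifort) || return 1
  printf '%s\n' "--prefix=/opt/intel_openmpi" "CC=$cc" "CXX=$cxx" "F77=$fc" "FC=$fc"
}
```

Usage, from the build directory: `args_text=$(configure_args) && mapfile -t args <<< "$args_text" && ../configure "${args[@]}"`. Capturing the output first means a missing compiler stops the chain before configure runs.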

However, "make all" then fails with an error when trying to load "libimf.so". I am showing only the last few lines of the output.

$ make all

LN_S ptype_ub_f.c

CC ptype_ub_f.lo

GENERATE psizeof_f.f90

FC psizeof_f.lo

FCLD libmpi_mpifh_psizeof.la

ar: `u' modifier ignored since `D' is the default (see `U')

CCLD libmpi_mpifh_pmpi.la

ar: `u' modifier ignored since `D' is the default (see `U')

make[3]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi/mpi/fortran/mpif-h/profile'

make[3]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi/mpi/fortran/mpif-h'

GENERATE sizeof_f.f90

FC sizeof_f.lo

FCLD libmpi_mpifh_sizeof.la

ar: `u' modifier ignored since `D' is the default (see `U')

CCLD libmpi_mpifh.la

make[3]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi/mpi/fortran/mpif-h'

make[2]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi/mpi/fortran/mpif-h'

Making all in mpi/fortran/use-mpi-ignore-tkr

make[2]: Entering directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi/mpi/fortran/use-mpi-ignore-tkr'

GENERATE mpi-ignore-tkr-sizeof.h

GENERATE mpi-ignore-tkr-sizeof.f90

PPFC mpi-ignore-tkr.lo

FC mpi-ignore-tkr-sizeof.lo

FCLD libmpi_usempi_ignore_tkr.la

ifort: command line warning #10434: option '-nofor_main' use with underscore is deprecated; use '-nofor-main' instead

ld: /opt/intel/oneapi/compiler/2022.0.2/linux/bin/intel64/../../bin/intel64/../../lib/icx-lto.so: error loading plugin: libimf.so: cannot open shared object file: No such file or directory

make[2]: *** [Makefile:1903: libmpi_usempi_ignore_tkr.la] Error 1

make[2]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi/mpi/fortran/use-mpi-ignore-tkr'

make[1]: *** [Makefile:3555: all-recursive] Error 1

make[1]: Leaving directory '/home/smec17045/setup/openmpi-4.1.2/build_intel/ompi'

make: *** [Makefile:1901: all-recursive] Error 1

Thanks for your help! I hope you can help me with this one too.

-- Keyur

---

Hi,

Could you please try exporting the libraries, but only if you do not find them after initializing the Intel oneAPI environment?

Also, could you please run "make" after the configure step, followed by "make all install"?

make

make all install

Thanks & Regards,

Varsha

---

Thanks again!! @VarshaS_Intel

You mentioned

"Could you please try exporting the libraries only if you did not find them after initializing the Intel oneAPI Environment?"

Could you please elaborate on what you mean by "exporting the libraries"?

The library's directory is correctly listed in $LD_LIBRARY_PATH; however, it somehow still cannot be linked.

Also, on another note: I found that ifort applications fail to build with Intel oneAPI on three separate servers running different versions. Even applications that don't need MPI at all fail.

- 2022.0.2 (2 servers)
- 2021.1.1 (1 server)

All of them fail with very similar error messages:

ifort -check all -check noarg_temp_created -traceback -g -qopenmp -c solver_omp.f90 -o solver.o

ifort -check all -check noarg_temp_created -traceback -g -c NonLinearFEM.f90

ifort -check all -check noarg_temp_created -traceback -g -qopenmp NonLinearFEM.o assembly.o connectivity.o bc.o solver.o plotter.o postprocess.o preprocess.o metis_interface.o metis_h.o -o nlfem.x /home/hpcsmec17045/TecioLib/libtecio.a -lstdc++ -limf -lmetis

ifort: warning #10182: disabling optimization; runtime debug checks enabled

ld: /opt/intel/oneapi/compiler/2021.1.1/linux/bin/intel64/../../bin/intel64/../../lib/icx-lto.so: error loading plugin: libimf.so: cannot open shared object file: No such file or directory

make: *** [makefile:45: nlfem.x] Error 1

In my humble opinion, all of this points to some issue with linking "libimf.so".
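One quick check, in the same shell that runs `make`, is whether any directory on LD_LIBRARY_PATH actually contains `libimf.so`. A minimal bash sketch (the function name `which_dirs_have` is hypothetical):

```bash
#!/usr/bin/env bash
# Print each directory in the colon-separated list $1 that contains file $2.
# Returns 1 if no directory contains it.
which_dirs_have() {
  local -a dirs
  local d found=1
  IFS=':' read -r -a dirs <<< "$1"
  for d in "${dirs[@]}"; do
    # Skip empty entries and directories without the file.
    [[ -n "$d" && -e "$d/$2" ]] || continue
    printf '%s\n' "$d"
    found=0
  done
  return "$found"
}
```

For example, `which_dirs_have "$LD_LIBRARY_PATH" libimf.so || echo "libimf.so not found"`. Running `ldd` on the plugin named in the error (in the first log it resolves to `/opt/intel/oneapi/compiler/2022.0.2/linux/lib/icx-lto.so`) shows directly whether `libimf.so` resolves for it.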

Thanks and regards,

Keyur

---

Hi,

After initializing the Intel oneAPI environment, all the library directories are added to LD_LIBRARY_PATH. You only need to export a library directory yourself if it is missing after initializing oneAPI.
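Concretely, "exporting" a library here means adding the directory that holds it to LD_LIBRARY_PATH in the shell that runs the build. A bash sketch, assuming `libimf.so` lives in `/opt/intel/oneapi/compiler/2022.0.2/linux/compiler/lib/intel64_lin` (the directory the .bashrc earlier in this thread already tries to add); the hypothetical helper `path_prepend` adds a directory only if it is not already present, so re-sourcing .bashrc does not keep growing the variable:

```bash
#!/usr/bin/env bash
# Prepend directory $2 to the colon-separated variable named $1 unless it is
# already one of its entries. Uses a nameref, so it needs bash 4.3 or newer.
path_prepend() {
  local -n var_ref=$1
  local dir=$2
  case ":${var_ref}:" in
    *":${dir}:"*) ;;                                # already present: no change
    *) var_ref="${dir}${var_ref:+:${var_ref}}" ;;   # no stray ':' if it was empty
  esac
}

# Example (directory assumed from this thread; adjust to your install):
#   path_prepend LD_LIBRARY_PATH /opt/intel/oneapi/compiler/2022.0.2/linux/compiler/lib/intel64_lin
#   export LD_LIBRARY_PATH
```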

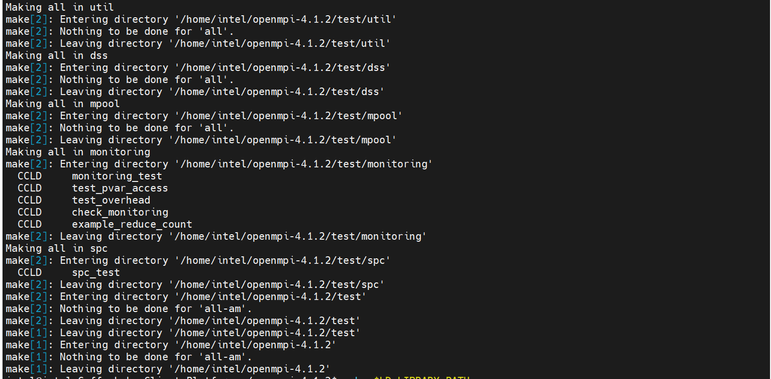

I am able to build Open MPI with the Intel compilers without any errors. Please see the screenshot taken during "make".

Also, we want to let you know that Intel oneAPI has its own MPI implementation, which you can use just by initializing the oneAPI environment: install the Intel HPC Toolkit and initialize the oneAPI environment to use the Intel MPI Library.

To download the Intel HPC Toolkit, please use the link below:

https://www.intel.com/content/www/us/en/developer/tools/oneapi/hpc-toolkit-download.html

To set up the Intel MPI environment, use the command below:

source /opt/intel/oneapi/mpi/2021.5.0/env/vars.sh

To compile & run a sample MPI program:

Also, could you please tell us why you need Open MPI rather than Intel MPI? Your feedback will help us better understand your issue.

Thanks & Regards,

Varsha

---

Thank you for the response. It seems the installation itself may have an issue: I cannot get even a basic MPI application working with the Intel compiler, let alone build the Open MPI library.

This is the listing of hello_mpi.f90:

```fortran
PROGRAM hello_world_mpi
  include 'mpif.h'
  integer process_Rank, size_Of_Cluster, ierror, tag
  call MPI_INIT(ierror)
  call MPI_COMM_SIZE(MPI_COMM_WORLD, size_Of_Cluster, ierror)
  call MPI_COMM_RANK(MPI_COMM_WORLD, process_Rank, ierror)
  print *, 'Hello World from rank: ', process_Rank, 'of ', size_Of_Cluster,' total ranks'
  call MPI_FINALIZE(ierror)
END PROGRAM hello_world_mpi
```

I am building it with mpiifort with debugging enabled:

$ mpiifort -g -O0 -o hello_mpi hello_mpi.f90

Then I tried to debug what causes the error. It seems the MPI_INIT call itself fails:

$ mpirun -n 1 gdb ./hello_mpi

GNU gdb (Ubuntu 9.2-0ubuntu1~20.04.1) 9.2

Copyright (C) 2020 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Type "show copying" and "show warranty" for details.

This GDB was configured as "x86_64-linux-gnu".

Type "show configuration" for configuration details.

For bug reporting instructions, please see:

<http://www.gnu.org/software/gdb/bugs/>.

Find the GDB manual and other documentation resources online at:

<http://www.gnu.org/software/gdb/documentation/>.

For help, type "help".

Type "apropos word" to search for commands related to "word"...

Reading symbols from ./hello_mpi...

(gdb) b hello_world_mpi

Breakpoint 1 at 0x4038dc: file hello_mpi.f90, line 4.

(gdb) list

1 PROGRAM hello_world_mpi

2 include 'mpif.h'

3 integer process_Rank, size_Of_Cluster, ierror, tag

4 call MPI_INIT(ierror)

5 call MPI_COMM_SIZE(MPI_COMM_WORLD, size_Of_Cluster, ierror)

6 call MPI_COMM_RANK(MPI_COMM_WORLD, process_Rank, ierror)

7 print *, 'Hello World from rank: ', process_Rank, 'of ', size_Of_Cluster,' total ranks'

8 call MPI_FINALIZE(ierror)

9 END PROGRAM hello_world_mpi

(gdb) r

Starting program: /home/smec17045/hello_mpi

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

Breakpoint 1, hello_world_mpi () at hello_mpi.f90:4

4 call MPI_INIT(ierror)

(gdb) n

Program received signal SIGSEGV, Segmentation fault.

0x00007ffff5710131 in ofi_cq_sreadfrom ()

from /opt/intel/oneapi/mpi/2021.5.1//libfabric/lib/prov/libsockets-fi.so

(gdb) q

A debugging session is active.

Inferior 1 [process 40610] will be killed.

Quit anyway? (y or n) [answered Y; input not from terminal]

Any further guidance on how to resolve this would be appreciated. Should I try reinstalling the Intel oneAPI Base Toolkit?

Regards,

-- Keyur

---

Hi,

Could you please try compiling and running the sample Fortran MPI Helloworld by using the below commands?

For Compiling, use the below command:

mpiifort -o hello hello.f90

For Running the MPI program, use the below command:

mpirun -n 2 ./hello

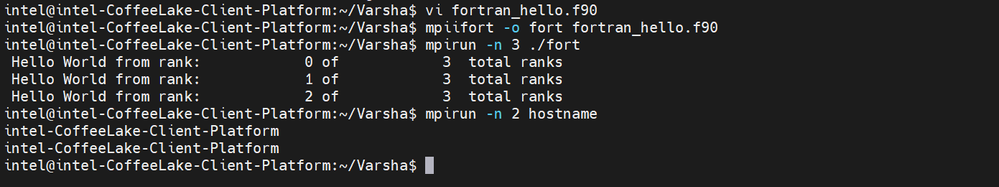

Could you please check if you are able to get the results for "hostname" by using the below command? Please find the below screenshot for more information.

mpirun -n 2 hostname

If you are facing errors while running the above cases, then could you please try reinstalling and running the above cases again?

Please do let us know if you are facing the issues even after reinstalling the toolkits.

Thanks & Regards,

Varsha

---

Thank you for the reply!

On the workstation where original problem exists:

I compiled and ran the code as you suggested. Here is what I get:

$ mpiifort -o hello hello.f90

$ mpirun -n 2 hello

[proxy:0:0@tt433gpu1] HYD_spawn (../../../../../src/pm/i_hydra/libhydra/spawn/intel/hydra_spawn.c:146): execvp error on file hello (No such file or directory)

The hostname check gives:

$ mpirun -n 2 hostname

tt433gpu1

tt433gpu1

Another check, on a compute cluster:

I also checked on another compute cluster with multiple nodes, using a different toolkit version (2021.1.1) on a different server, and it fails this case in a similar manner.

- On the login node, the following gives a similar result:

$ mpirun -n 2 hostname

hpcvisualization

hpcvisualization

$ mpiifort -o hello_mpi hello_mpi.f90

$ mpirun -n 2 hello_mpi

[proxy:0:0@hpcvisualization] HYD_spawn (../../../../../src/pm/i_hydra/libhydra/spawn/intel/hydra_spawn.c:145): execvp error on file hello_mpi (No such file or directory)

The line number in the error report differs by 1 (145 here versus 146 on the workstation).

- On the interactive (development) queue, requesting 2 nodes, it seems to work, though not without issues: it reports problems with libnuma.so but still gives the desired output.

$ qsub -I -lwalltime=2:00:00 -lnodes=2

qsub: waiting for job 2339.hpcmaster to start

qsub: job 2339.hpcmaster ready

$ cd fortran/mpi_basic/

$ module purge

$ module load compiler/2021.1.1 mpi/2021.1.1

$ mpirun -n 2 hostname

hpcnode-1

hpcnode-2

$ mpiifort -o hello_mpi hello_mpi.f90

$ mpirun -n 2 ./hello_mpi

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

Hello World from rank: 1 of 2 total ranks

Hello World from rank: 0 of 2 total ranks

- On the normal queue:

The issue resurfaces when the job is submitted through the normal queue. Please find attached a tarball with the source, output file, error file, and submit script.

I hope this helps in troubleshooting the issue with my workstation installation.

Thank you for your time and inputs!

-- Keyur

---

Hi,

Thanks for providing the detailed information.

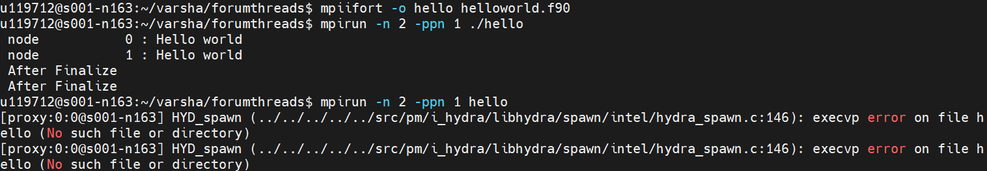

From the steps you provided (On the workstation where original problem exists, On login node), we observed that you ran the MPI code with "mpirun -n 2 hello" instead of "mpirun -n 2 ./hello".

The same error appears when the path to the binary is wrong. Please refer to the image, in which we were able to reproduce the issue.
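Why `hello` and `./hello` behave differently: a program name without a `/` is searched for only in the directories on PATH (that is how `execvp`, named in the Hydra error, works), and the current directory is normally not on PATH. A small bash sketch that mimics that rule (the function name `how_resolved` is hypothetical; note that `command -v` also reports shell functions and aliases, which `execvp` would not see):

```bash
#!/usr/bin/env bash
# Mimic execvp's lookup rule for a program name:
#  - a name containing '/' is used as a path (relative to the current dir);
#  - a bare name is searched for only in the directories listed in PATH.
how_resolved() {
  local name=$1 found
  if [[ "$name" == */* ]]; then
    if [[ -x "$name" ]]; then
      echo "path: $name"
    else
      echo "not executable: $name"
    fi
  elif found=$(command -v "$name"); then
    echo "PATH: $found"
  else
    echo "not on PATH: $name"
  fi
}
```

Run in the directory holding the binary, `how_resolved ./hello` reports a usable path, while `how_resolved hello` reports "not on PATH" unless that directory is on PATH.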

Please find the below link for more information:

Please try running the command below and let us know if it works:

mpirun -n 2 -ppn 1 ./hello

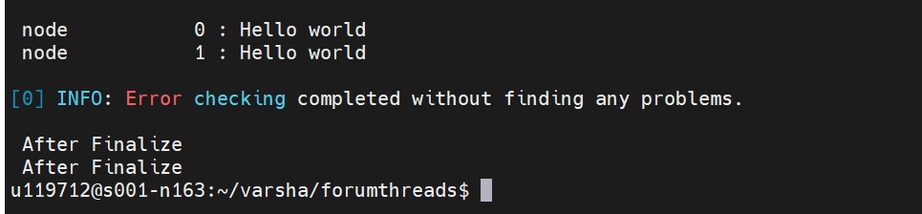

On the interactive queue, could you please run the command below to check the correctness of the MPI code?

mpirun -check_mpi -n 2 -ppn 1 ./hello

If there is no issue with the MPI code, the output will look like the screenshot:

Could you please share the results of the command below on the normal queue:

mpirun -check_mpi -n 2 -ppn 1 I_MPI_DEBUG = 30 FI_LOG_LEVEL=debug ./hello

Thanks & Regards,

Varsha

---

Thank you for your reply!

On the workstation where the original problem exists:

OK, you were right on this point: I missed giving the correct path, "./hello".

However, the problem persists even with the correct path. It segfaults at the very first line, as the gdb session shows.

$ mpiifort -g -O0 -o hello_mpi hello_mpi.f90

$ mpirun -n 2 -ppn 2 ./hello_mpi

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 5910 RUNNING AT tt433gpu1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 5911 RUNNING AT tt433gpu1

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

$ mpirun -n 1 gdb ./hello_mpi

GNU gdb (Ubuntu 9.2-0ubuntu1~20.04.1) 9.2

Copyright (C) 2020 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Type "show copying" and "show warranty" for details.

This GDB was configured as "x86_64-linux-gnu".

Type "show configuration" for configuration details.

For bug reporting instructions, please see:

<http://www.gnu.org/software/gdb/bugs/>.

Find the GDB manual and other documentation resources online at:

<http://www.gnu.org/software/gdb/documentation/>.

For help, type "help".

Type "apropos word" to search for commands related to "word"...

Reading symbols from ./hello_mpi...

(gdb) list

1 PROGRAM hello_world_mpi

2 include 'mpif.h'

3 integer process_Rank, size_Of_Cluster, ierror, tag

4 call MPI_INIT(ierror)

5 call MPI_COMM_SIZE(MPI_COMM_WORLD, size_Of_Cluster, ierror)

6 call MPI_COMM_RANK(MPI_COMM_WORLD, process_Rank, ierror)

7 print *, 'Hello World from rank: ', process_Rank, 'of ', size_Of_Cluster,' total ranks'

8 call MPI_FINALIZE(ierror)

9 END PROGRAM hello_world_mpi

(gdb) b main

Breakpoint 1 at 0x403870

(gdb) r

Starting program: /home/smec17045/hello_mpi

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/lib/x86_64-linux-gnu/libthread_db.so.1".

Breakpoint 1, 0x0000000000403870 in main ()

(gdb) n

Single stepping until exit from function main,

which has no line number information.

Program received signal SIGSEGV, Segmentation fault.

0x0000155552c67131 in ofi_cq_sreadfrom ()

from /opt/intel/oneapi/mpi/2021.5.1//libfabric/lib/prov/libsockets-fi.so

(gdb) q

A debugging session is active.

Inferior 1 [process 5950] will be killed.

Quit anyway? (y or n) [answered Y; input not from terminal]

On Compute Cluster: Interactive queue:

$ mpiifort -g -O0 -o hello_mpi hello_mpi.f90

$ mpirun -check_mpi -n 2 -ppn 1 ./hello_mpi

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[0] INFO: CHECK LOCAL:EXIT:SIGNAL ON

[0] INFO: CHECK LOCAL:EXIT:BEFORE_MPI_FINALIZE ON

[0] INFO: CHECK LOCAL:MPI:CALL_FAILED ON

[0] INFO: CHECK LOCAL:MEMORY:OVERLAP ON

[0] INFO: CHECK LOCAL:MEMORY:ILLEGAL_MODIFICATION ON

[0] INFO: CHECK LOCAL:MEMORY:INACCESSIBLE ON

[0] INFO: CHECK LOCAL:MEMORY:ILLEGAL_ACCESS OFF

[0] INFO: CHECK LOCAL:MEMORY:INITIALIZATION OFF

[0] INFO: CHECK LOCAL:REQUEST:ILLEGAL_CALL ON

[0] INFO: CHECK LOCAL:REQUEST:NOT_FREED ON

[0] INFO: CHECK LOCAL:REQUEST:PREMATURE_FREE ON

[0] INFO: CHECK LOCAL:DATATYPE:NOT_FREED ON

[0] INFO: CHECK LOCAL:BUFFER:INSUFFICIENT_BUFFER ON

[0] INFO: CHECK GLOBAL:DEADLOCK:HARD ON

[0] INFO: CHECK GLOBAL:DEADLOCK:POTENTIAL ON

[0] INFO: CHECK GLOBAL:DEADLOCK:NO_PROGRESS ON

[0] INFO: CHECK GLOBAL:MSG:DATATYPE:MISMATCH ON

[0] INFO: CHECK GLOBAL:MSG:DATA_TRANSMISSION_CORRUPTED ON

[0] INFO: CHECK GLOBAL:MSG:PENDING ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:DATATYPE:MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:DATA_TRANSMISSION_CORRUPTED ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:OPERATION_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:SIZE_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:REDUCTION_OPERATION_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:ROOT_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:INVALID_PARAMETER ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:COMM_FREE_MISMATCH ON

[0] INFO: maximum number of errors before aborting: CHECK-MAX-ERRORS 1

[0] INFO: maximum number of reports before aborting: CHECK-MAX-REPORTS 0 (= unlimited)

[0] INFO: maximum number of times each error is reported: CHECK-SUPPRESSION-LIMIT 10

[0] INFO: timeout for deadlock detection: DEADLOCK-TIMEOUT 60s

[0] INFO: timeout for deadlock warning: DEADLOCK-WARNING 300s

[0] INFO: maximum number of reported pending messages: CHECK-MAX-PENDING 20

Hello World from rank: 0 of 2 total ranks

Hello World from rank: 1 of 2 total ranks

[0] INFO: Error checking completed without finding any problems

On Compute Cluster: normal queue:

On the normal queue, running the following command

$ mpirun -check_mpi -n 2 -ppn 1 I_MPI_DEBUG = 30 FI_LOG_LEVEL=debug ./hello_mpi >out.txt

produces the following in the error file:

Loading compiler version 2021.1.1

Loading tbb version 2021.1.1

Loading debugger version 10.0.0

Loading compiler-rt version 2021.1.1

Loading dpl version 2021.1.1

Loading oclfpga version 2021.1.1

Loading init_opencl version 2021.1.1

Warning: Intel PAC device is not found.

Please install the Intel PAC card to execute your program on an FPGA device.

Warning: Intel PAC device is not found.

Please install the Intel PAC card to execute your program on an FPGA device.

Loading compiler/2021.1.1

Loading requirement: tbb/latest debugger/latest compiler-rt/latest dpl/latest

/opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/init_opencl /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/oclfpga

Loading mpi version 2021.1.1

Currently Loaded Modulefiles:

1) tbb/latest

2) debugger/latest

3) compiler-rt/latest

4) dpl/latest

5) /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/init_opencl

6) /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/oclfpga

7) compiler/2021.1.1

8) mpi/2021.1.1

9) lib/cmake/cmake-3.19.4

cp: cannot create regular file ‘/hello_mpi’: Permission denied

[proxy:0:1@hpcnode-2] HYD_spawn (../../../../../src/pm/i_hydra/libhydra/spawn/intel/hydra_spawn.c:145): execvp error on file I_MPI_DEBUG (No such file or directory)

[mpiexec@hpcnode-1] wait_proxies_to_terminate (../../../../../src/pm/i_hydra/mpiexec/intel/i_mpiexec.c:531): downstream from host hpcnode-1 was killed by signal 9 (Killed)

Thanks and regards,

Keyur

---

Hello, @VarshaS_Intel !

I'm having the exact same problem as @keyurjoshi.

Running under the gdb debugger, I get the segfault in ofi_cq_sreadfrom().

When I run all the commands from your reply above, I get the same output:

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 6583 RUNNING AT dirac

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 6584 RUNNING AT dirac

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

I have already tried reinstalling the HPC Toolkit. The same error occurs with the test files in the MPI installation directory.

Thank you for helping!

---

Hi,

Sorry, there was a typo in my previous response. Please use the command below to get the complete debug log:

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello
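Written this way, the two variables are set in mpirun's environment for that one command. In the earlier form, `mpirun ... I_MPI_DEBUG = 30 ...`, those words were passed on as the program to launch, which is why Hydra reported `execvp error on file I_MPI_DEBUG`. A bash illustration of the difference, with `sh -c` standing in for mpirun (the function names are hypothetical):

```bash
#!/usr/bin/env bash
# Prefix form: NAME=value (no spaces) before the command puts the variables
# into that command's environment only.
show_prefix_form() {
  I_MPI_DEBUG=30 FI_LOG_LEVEL=debug \
    sh -c 'echo "I_MPI_DEBUG=$I_MPI_DEBUG FI_LOG_LEVEL=$FI_LOG_LEVEL"'
}

# Argument form: the same words after the command are just arguments;
# nothing is added to the environment (env -u clears any inherited value).
show_argument_form() {
  env -u I_MPI_DEBUG sh -c \
    'printf "arg: %s\n" "$@"; echo "I_MPI_DEBUG=${I_MPI_DEBUG:-<unset>}"' \
    sh I_MPI_DEBUG = 30
}
```

An `export I_MPI_DEBUG=30` before calling mpirun has the same effect as the prefix form, but for every later command in that shell.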

Please provide us with the debug log for each case (On Compute Cluster: normal queue, On Workstation where original problem exists, etc.).

Could you also try the command below for the cases where you get the "BAD TERMINATION" error, and share the complete debug log with us?

mpirun -check_mpi -n 2 -ppn 1 ./hello_mpi

Thanks & Regards,

Varsha

---

Hello Varsha,

Thanks for the suggestion. Here's what I got.

On the HPC cluster, normal queue:

As you suggested, I ran the following through the normal queue:

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello_mpi >out.txt

The error (stderr) output is as follows:

Loading compiler version 2021.1.1

Loading tbb version 2021.1.1

Loading debugger version 10.0.0

Loading compiler-rt version 2021.1.1

Loading dpl version 2021.1.1

Loading oclfpga version 2021.1.1

Loading init_opencl version 2021.1.1

Warning: Intel PAC device is not found.

Please install the Intel PAC card to execute your program on an FPGA device.

Warning: Intel PAC device is not found.

Please install the Intel PAC card to execute your program on an FPGA device.

Loading compiler/2021.1.1

Loading requirement: tbb/latest debugger/latest compiler-rt/latest dpl/latest

/opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/init_opencl /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/oclfpga

Loading mpi version 2021.1.1

Currently Loaded Modulefiles:

1) tbb/latest

2) debugger/latest

3) compiler-rt/latest

4) dpl/latest

5) /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/init_opencl

6) /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/oclfpga

7) compiler/2021.1.1

8) mpi/2021.1.1

libfabric:28642:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:28642:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:28642:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ZE not supported

libfabric:28642:core:mr:ofi_default_cache_size():69<info> default cache size=4223952597

libfabric:14757:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:14757:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:14757:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ZE not supported

libfabric:14757:core:mr:ofi_default_cache_size():69<info> default cache size=4223952597

libfabric:28642:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:28642:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:28642:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ZE not supported

libfabric:28642:core:mr:ofi_default_cache_size():69<info> default cache size=4223952597

libfabric:14757:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:14757:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:14757:core:core:ofi_hmem_init():202<info> Hmem iface FI_HMEM_ZE not supported

libfabric:14757:core:mr:ofi_default_cache_size():69<info> default cache size=4223952597

libfabric:28642:verbs:fabric:verbs_devs_print():871<info> list of verbs devices found for FI_EP_MSG:

libfabric:28642:verbs:fabric:verbs_devs_print():875<info> #1 mlx5_0 - IPoIB addresses:

libfabric:28642:verbs:fabric:verbs_devs_print():885<info> 192.168.2.2

libfabric:28642:verbs:fabric:verbs_devs_print():885<info> fe80::63f:7203:ae:a922

libfabric:14757:verbs:fabric:verbs_devs_print():871<info> list of verbs devices found for FI_EP_MSG:

libfabric:14757:verbs:fabric:verbs_devs_print():875<info> #1 mlx5_0 - IPoIB addresses:

libfabric:14757:verbs:fabric:verbs_devs_print():885<info> 192.168.2.3

libfabric:14757:verbs:fabric:verbs_devs_print():885<info> fe80::63f:7203:ae:a93e

libfabric:28642:verbs:fabric:vrb_get_device_attrs():617<info> device mlx5_0: first found active port is 1

libfabric:14757:verbs:fabric:vrb_get_device_attrs():617<info> device mlx5_0: first found active port is 1

libfabric:28642:verbs:fabric:vrb_get_device_attrs():617<info> device mlx5_0: first found active port is 1

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: verbs (111.0)

libfabric:14757:verbs:fabric:vrb_get_device_attrs():617<info> device mlx5_0: first found active port is 1

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: verbs (111.0)

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: tcp (111.0)

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: tcp (111.0)

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: sockets (111.0)

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: sockets (111.0)

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: shm (111.0)

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: shm (111.0)

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: ofi_rxm (111.0)

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: ofi_rxm (111.0)

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: mlx (1.4)

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: mlx (1.4)

libfabric:14757:core:core:ofi_register_provider():427<info> registering provider: ofi_hook_noop (111.0)

libfabric:14757:core:core:fi_getinfo_():1117<info> Found provider with the highest priority mlx, must_use_util_prov = 0

libfabric:14757:mlx:core:mlx_getinfo():172<info> used inject size = 1024

libfabric:14757:mlx:core:mlx_getinfo():219<info> Loaded MLX version 1.10.0

libfabric:14757:mlx:core:mlx_getinfo():266<warn> MLX: spawn support 0

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, verbs has been skipped. To use verbs, please, set FI_PROVIDER=verbs

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, tcp has been skipped. To use tcp, please, set FI_PROVIDER=tcp

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, shm has been skipped. To use shm, please, set FI_PROVIDER=shm

libfabric:14757:core:core:fi_getinfo_():1117<info> Found provider with the highest priority mlx, must_use_util_prov = 0

libfabric:14757:mlx:core:mlx_getinfo():172<info> used inject size = 1024

libfabric:14757:mlx:core:mlx_getinfo():219<info> Loaded MLX version 1.10.0

libfabric:14757:mlx:core:mlx_getinfo():266<warn> MLX: spawn support 0

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, verbs has been skipped. To use verbs, please, set FI_PROVIDER=verbs

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, tcp has been skipped. To use tcp, please, set FI_PROVIDER=tcp

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:14757:core:core:fi_getinfo_():1144<info> Since mlx can be used, shm has been skipped. To use shm, please, set FI_PROVIDER=shm

libfabric:14757:mlx:core:mlx_fabric_open():172<info>

libfabric:14757:core:core:fi_fabric_():1397<info> Opened fabric: mlx

libfabric:14757:mlx:core:ofi_check_rx_attr():785<info> Tx only caps ignored in Rx caps

libfabric:14757:mlx:core:ofi_check_tx_attr():883<info> Rx only caps ignored in Tx caps

libfabric:28642:core:core:ofi_register_provider():427<info> registering provider: ofi_hook_noop (111.0)

libfabric:28642:core:core:fi_getinfo_():1117<info> Found provider with the highest priority mlx, must_use_util_prov = 0

libfabric:28642:mlx:core:mlx_getinfo():172<info> used inject size = 1024

libfabric:28642:mlx:core:mlx_getinfo():219<info> Loaded MLX version 1.10.0

libfabric:28642:mlx:core:mlx_getinfo():266<warn> MLX: spawn support 0

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, verbs has been skipped. To use verbs, please, set FI_PROVIDER=verbs

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, tcp has been skipped. To use tcp, please, set FI_PROVIDER=tcp

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, shm has been skipped. To use shm, please, set FI_PROVIDER=shm

libfabric:28642:core:core:fi_getinfo_():1117<info> Found provider with the highest priority mlx, must_use_util_prov = 0

libfabric:28642:mlx:core:mlx_getinfo():172<info> used inject size = 1024

libfabric:28642:mlx:core:mlx_getinfo():219<info> Loaded MLX version 1.10.0

libfabric:28642:mlx:core:mlx_getinfo():266<warn> MLX: spawn support 0

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, verbs has been skipped. To use verbs, please, set FI_PROVIDER=verbs

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, tcp has been skipped. To use tcp, please, set FI_PROVIDER=tcp

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, sockets has been skipped. To use sockets, please, set FI_PROVIDER=sockets

libfabric:28642:core:core:fi_getinfo_():1144<info> Since mlx can be used, shm has been skipped. To use shm, please, set FI_PROVIDER=shm

libfabric:28642:mlx:core:mlx_fabric_open():172<info>

libfabric:28642:core:core:fi_fabric_():1397<info> Opened fabric: mlx

libfabric:28642:mlx:core:ofi_check_rx_attr():785<info> Tx only caps ignored in Rx caps

libfabric:28642:mlx:core:ofi_check_tx_attr():883<info> Rx only caps ignored in Tx caps

libfabric:14757:mlx:core:ofi_check_rx_attr():785<info> Tx only caps ignored in Rx caps

libfabric:14757:mlx:core:ofi_check_tx_attr():883<info> Rx only caps ignored in Tx caps

libfabric:28642:mlx:core:ofi_check_rx_attr():785<info> Tx only caps ignored in Rx caps

libfabric:28642:mlx:core:ofi_check_tx_attr():883<info> Rx only caps ignored in Tx caps

libfabric:14757:mlx:core:mlx_cm_getname_mlx_format():73<info> Loaded UCP address: [307]...

libfabric:28642:mlx:core:mlx_cm_getname_mlx_format():73<info> Loaded UCP address: [307]...

libfabric:28642:mlx:core:mlx_av_insert():179<warn> Try to insert address #0, offset=0 (size=2) fi_addr=0x20eff80

libfabric:14757:mlx:core:mlx_av_insert():179<warn> Try to insert address #0, offset=0 (size=2) fi_addr=0x1b0cfa0

libfabric:14757:mlx:core:mlx_av_insert():189<warn> address inserted

libfabric:14757:mlx:core:mlx_av_insert():179<warn> Try to insert address #1, offset=1024 (size=2) fi_addr=0x1b0cfa0

libfabric:14757:mlx:core:mlx_av_insert():189<warn> address inserted

libfabric:28642:mlx:core:mlx_av_insert():189<warn> address inserted

libfabric:28642:mlx:core:mlx_av_insert():179<warn> Try to insert address #1, offset=1024 (size=2) fi_addr=0x20eff80

libfabric:28642:mlx:core:mlx_av_insert():189<warn> address inserted

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

And it writes the following to the console (which I redirected to a file):

[0] MPI startup(): Intel(R) MPI Library, Version 2021.1 Build 20201112 (id: b9c9d2fc5)

[0] MPI startup(): Copyright (C) 2003-2020 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): Size of shared memory segment (857 MB per rank) * (2 local ranks) = 1715 MB total

[0] MPI startup(): libfabric version: 1.11.0-impi

[0] MPI startup(): libfabric provider: mlx

[0] MPI startup(): detected mlx provider, set device name to "mlx"

[0] MPI startup(): max_ch4_vcis: 1, max_reg_eps 1, enable_sep 0, enable_shared_ctxs 0, do_av_insert 1

[0] MPI startup(): addrnamelen: 1024

[0] MPI startup(): Load tuning file: "/opt/intel/oneapi/mpi/2021.1.1/etc/tuning_skx_shm-ofi.dat"

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 153103 hpcvisualization {0,1,2,3,4,5,6,7,8,9,20,21,22,23,24,25,26,27,28,29}

[0] MPI startup(): 1 153104 hpcvisualization {10,11,12,13,14,15,16,17,18,19,30,31,32,33,34,35,36,37,38,39}

[0] MPI startup(): I_MPI_ROOT=/opt/intel/oneapi/mpi/2021.1.1

[0] MPI startup(): I_MPI_MPIRUN=mpirun

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_INTERNAL_MEM_POLICY=default

[0] MPI startup(): I_MPI_DEBUG=30

Hello World from rank: 1 of 2 total ranks

Hello World from rank: 0 of 2 total ranks

The following run:

mpirun -check_mpi -n 2 -ppn 1 ./hello_mpi

produces the following on the error channel (stderr):

Loading compiler version 2021.1.1

Loading tbb version 2021.1.1

Loading debugger version 10.0.0

Loading compiler-rt version 2021.1.1

Loading dpl version 2021.1.1

Loading oclfpga version 2021.1.1

Loading init_opencl version 2021.1.1

Warning: Intel PAC device is not found.

Please install the Intel PAC card to execute your program on an FPGA device.

Warning: Intel PAC device is not found.

Please install the Intel PAC card to execute your program on an FPGA device.

Loading compiler/2021.1.1

Loading requirement: tbb/latest debugger/latest compiler-rt/latest dpl/latest

/opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/init_opencl /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/oclfpga

Loading mpi version 2021.1.1

Currently Loaded Modulefiles:

1) tbb/latest

2) debugger/latest

3) compiler-rt/latest

4) dpl/latest

5) /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/init_opencl

6) /opt/intel/oneapi/compiler/2021.1.1/linux/lib/oclfpga/modulefiles/oclfpga

7) compiler/2021.1.1

8) mpi/2021.1.1

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[0] INFO: CHECK LOCAL:EXIT:SIGNAL ON

[0] INFO: CHECK LOCAL:EXIT:BEFORE_MPI_FINALIZE ON

[0] INFO: CHECK LOCAL:MPI:CALL_FAILED ON

[0] INFO: CHECK LOCAL:MEMORY:OVERLAP ON

[0] INFO: CHECK LOCAL:MEMORY:ILLEGAL_MODIFICATION ON

[0] INFO: CHECK LOCAL:MEMORY:INACCESSIBLE ON

[0] INFO: CHECK LOCAL:MEMORY:ILLEGAL_ACCESS OFF

[0] INFO: CHECK LOCAL:MEMORY:INITIALIZATION OFF

[0] INFO: CHECK LOCAL:REQUEST:ILLEGAL_CALL ON

[0] INFO: CHECK LOCAL:REQUEST:NOT_FREED ON

[0] INFO: CHECK LOCAL:REQUEST:PREMATURE_FREE ON

[0] INFO: CHECK LOCAL:DATATYPE:NOT_FREED ON

[0] INFO: CHECK LOCAL:BUFFER:INSUFFICIENT_BUFFER ON

[0] INFO: CHECK GLOBAL:DEADLOCK:HARD ON

[0] INFO: CHECK GLOBAL:DEADLOCK:POTENTIAL ON

[0] INFO: CHECK GLOBAL:DEADLOCK:NO_PROGRESS ON

[0] INFO: CHECK GLOBAL:MSG:DATATYPE:MISMATCH ON

[0] INFO: CHECK GLOBAL:MSG:DATA_TRANSMISSION_CORRUPTED ON

[0] INFO: CHECK GLOBAL:MSG:PENDING ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:DATATYPE:MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:DATA_TRANSMISSION_CORRUPTED ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:OPERATION_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:SIZE_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:REDUCTION_OPERATION_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:ROOT_MISMATCH ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:INVALID_PARAMETER ON

[0] INFO: CHECK GLOBAL:COLLECTIVE:COMM_FREE_MISMATCH ON

[0] INFO: maximum number of errors before aborting: CHECK-MAX-ERRORS 1

[0] INFO: maximum number of reports before aborting: CHECK-MAX-REPORTS 0 (= unlimited)

[0] INFO: maximum number of times each error is reported: CHECK-SUPPRESSION-LIMIT 10

[0] INFO: timeout for deadlock detection: DEADLOCK-TIMEOUT 60s

[0] INFO: timeout for deadlock warning: DEADLOCK-WARNING 300s

[0] INFO: maximum number of reported pending messages: CHECK-MAX-PENDING 20

[0] INFO: Error checking completed without finding any problems.

It also produces the following relevant output:

Hello World from rank: 0 of 2 total ranks

Hello World from rank: 1 of 2 total ranks

I don't know what changed between the earlier runs and today's, but even a regular run with

mpirun -n 2 -ppn 1 ./hello_mpi > out.txt

also produces the correct output:

Hello World from rank: 1 of 2 total ranks

Hello World from rank: 0 of 2 total ranks

However, it prints the following on the error channel. Please let me know if this may cause issues in other runs.

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

[LOG_CAT_SBGP] libnuma.so: cannot open shared object file: No such file or directory

[LOG_CAT_SBGP] Failed to dlopen libnuma.so. Fallback to GROUP_BY_SOCKET manual.

Later today I will report results from the workstation where the original problem exists, using these same steps.

Thanks and Regards,

Keyur
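The [LOG_CAT_SBGP] lines above say that loading the unversioned name libnuma.so failed and that the component fell back to GROUP_BY_SOCKET, so they read as a non-fatal fallback rather than an error. A minimal check, assuming a Debian/Ubuntu system where the unversioned libnuma.so symlink is typically shipped by a development package (libnuma-dev) while the runtime package only provides libnuma.so.1: the hypothetical helper diagnose_libnuma below inspects `ldconfig -p` output and reports which form is present.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (a sketch, not an Intel or distribution tool).
# Input: the output of `ldconfig -p` on stdin.

# Succeeds if the unversioned name "libnuma.so" is in the linker cache.
has_unversioned_libnuma() {
    grep -Eq '^[[:space:]]*libnuma\.so[[:space:]]'
}

# Succeeds if a versioned runtime library such as "libnuma.so.1" is cached.
has_versioned_libnuma() {
    grep -Eq '^[[:space:]]*libnuma\.so\.[0-9]+[[:space:]]'
}

# Prints a one-line diagnosis for the ldconfig -p output read from stdin.
diagnose_libnuma() {
    local cache
    cache=$(cat)
    if printf '%s\n' "$cache" | has_unversioned_libnuma; then
        echo "libnuma.so found"
    elif printf '%s\n' "$cache" | has_versioned_libnuma; then
        echo "only versioned libnuma.so.N found (unversioned symlink missing)"
    else
        echo "libnuma not found"
    fi
}
```

On the workstation this would be run as `ldconfig -p | diagnose_libnuma`. If only the versioned library is reported, installing the package that provides the unversioned symlink may silence the messages; that is an assumption and was not verified in this thread.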


Hello Varsha,

Sorry for the delay.

On the workstation where the original problem exists:

Running the following:

$ mpiifort -g -O0 -o hello_mpi hello_mpi.f90

$ mpirun -check_mpi -n 2 -ppn 1 ./hello_mpi

I got the following message.

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 49970 RUNNING AT tt433gpu1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 49971 RUNNING AT tt433gpu1

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

And the following command,

$ I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello_mpi

produces the following:

[0] MPI startup(): Intel(R) MPI Library, Version 2021.5 Build 20211102 (id: 9279b7d62)

[0] MPI startup(): Copyright (C) 2003-2021 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (1307 MB per rank) * (2 local ranks) = 2615 MB total

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

[0] MPI startup(): libfabric version: 1.13.2rc1-impi

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:50086:core:core:ofi_hmem_init():214<warn> Failed to initialize hmem iface FI_HMEM_ZE: Input/output error

libfabric:50086:core:mr:ofi_default_cache_size():78<info> default cache size=3368535552

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:50086:core:mr:ofi_default_cache_size():78<info> default cache size=3368535552

libfabric:50085:core:core:ofi_hmem_init():214<warn> Failed to initialize hmem iface FI_HMEM_ZE: Input/output error

libfabric:50085:core:mr:ofi_default_cache_size():78<info> default cache size=3368535552

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:50085:core:mr:ofi_default_cache_size():78<info> default cache size=3368535552

libfabric:50086:verbs:fabric:vrb_init_info():1336<info> no RDMA devices found

libfabric:50086:core:core:ofi_register_provider():474<info> registering provider: tcp (113.20)

libfabric:50086:core:core:ofi_register_provider():474<info> registering provider: sockets (113.20)

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:50086:core:core:ofi_register_provider():474<info> registering provider: shm (113.20)

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50086:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:50085:verbs:fabric:vrb_init_info():1336<info> no RDMA devices found

libfabric:50085:core:core:ofi_register_provider():474<info> registering provider: tcp (113.20)

libfabric:50085:core:core:ofi_register_provider():474<info> registering provider: sockets (113.20)

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:50085:core:core:ofi_register_provider():474<info> registering provider: shm (113.20)

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:50085:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 50085 RUNNING AT tt433gpu1

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 50086 RUNNING AT tt433gpu1

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

I hope this helps in figuring out the issue.

Regards,

Keyur
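Comparing the two debug logs in this thread: on the machine where the run works (Intel MPI 2021.1, libfabric 1.11.0-impi), providers including verbs, tcp, sockets, shm, ofi_rxm and mlx register and the log reports "Opened fabric: mlx"; on the failing machine (Intel MPI 2021.5, libfabric 1.13.2rc1-impi), verbs reports "no RDMA devices found", only tcp, sockets and shm register, and the output stops before any "Opened fabric" line. The sketch below, a hypothetical helper named summarize_fi_log that assumes a POSIX awk and a log captured with FI_LOG_LEVEL=debug, condenses such a log to those three facts so two machines can be compared at a glance.

```shell
#!/usr/bin/env bash
# Hypothetical helper (a sketch): summarize a libfabric FI_LOG_LEVEL=debug log.
# Reads the log on stdin; lines from all ranks are merged into one summary.
summarize_fi_log() {
    awk '
        # Collect provider names in first-seen order, ignoring repeats
        # printed by the other rank.
        /registering provider: / {
            name = $0
            sub(/.*registering provider: /, "", name)
            sub(/ .*/, "", name)            # drop the "(113.20)" version
            if (!(name in seen)) { seen[name] = 1; order[++n] = name }
        }
        # Printed by the verbs provider when it finds no RDMA device.
        /no RDMA devices found/ { no_rdma = 1 }
        # Remember which fabric, if any, was actually opened.
        /Opened fabric: / {
            opened = $0
            sub(/.*Opened fabric: /, "", opened)
            sub(/[ \t\r]+$/, "", opened)
        }
        END {
            line = "registered:"
            for (i = 1; i <= n; i++) line = line " " order[i]
            print line
            print "no RDMA devices: " (no_rdma ? "yes" : "no")
            print "opened fabric: " (opened != "" ? opened : "none")
        }
    '
}
```

A possible usage is `I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello_mpi 2>&1 | summarize_fi_log`. The summary only shows what the log reports; it does not by itself identify the cause of the crash.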


Hi @gabrielhgobi,

Could you please let us know whether you are able to get the hostname without any errors, using the command below?

mpirun -n 2 -ppn 1 hostname

Could you please let us know which code you are trying to run when you face the issue?

Could you please provide us with the complete debug log using the command below?

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello

Also, could you please provide the result of the command below?

mpirun -check_mpi -n 2 -ppn 1 ./hello

Thanks & Regards,

Varsha


Hi Keyur,

Thanks for providing the detailed information.

>> also produces correct output.

Glad to know that one of your issues is resolved.

>> but prints the following on error channel

We can see from the debug log (generated using the -check_mpi flag) that there is no issue with the MPI code, and thus the expected output was generated. The error log is related to your workstation.

On the cluster where you are facing the "BAD TERMINATION" error, could you please try the command below and let us know whether you still face any issues?

I_MPI_FABRICS=shm I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello_mpi

For more information, please find the below link:

Thanks & Regards,

Varsha
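The suggestion above restricts Intel MPI to shared memory with I_MPI_FABRICS=shm, which only applies when all ranks run on one node, as in these 2-rank runs. To compare several settings in one go, a hedged sketch: the hypothetical function try_fabrics below runs the same job under I_MPI_FABRICS=shm, I_MPI_FABRICS=shm:ofi, and the FI_PROVIDER=tcp and FI_PROVIDER=sockets overrides that libfabric itself suggests in the logs earlier in this thread, and reports which runs exit with status 0. It assumes a POSIX-style shell, Intel MPI's mpirun on PATH, and an executable built as described earlier.

```shell
#!/usr/bin/env bash
# Hypothetical helper (a sketch): run the same single-node, 2-rank job under
# several fabric/provider settings and report which ones exit with status 0.
# Assumes Intel MPI's mpirun is on PATH.
try_fabrics() {
    local exe setting name value
    exe="${1:-./hello_mpi}"
    # I_MPI_FABRICS picks shm only vs. shm plus OFI (libfabric);
    # FI_PROVIDER selects the libfabric provider used by the OFI part.
    for setting in "I_MPI_FABRICS=shm" "I_MPI_FABRICS=shm:ofi" \
                   "FI_PROVIDER=tcp" "FI_PROVIDER=sockets"; do
        name="${setting%%=*}"    # e.g. I_MPI_FABRICS
        value="${setting#*=}"    # e.g. shm:ofi
        # The subshell keeps the exported variable from leaking into the
        # caller's environment or into the next iteration.
        if ( export "$name=$value"; mpirun -n 2 -ppn 1 "$exe" ) >/dev/null 2>&1; then
            echo "$setting: ok"
        else
            echo "$setting: FAILED"
        fi
    done
}
```

Usage would be `try_fabrics ./hello_mpi`. A result where only I_MPI_FABRICS=shm passes would suggest that the problem lies on the OFI/libfabric path rather than in the application, though that reading is an interpretation, not a diagnosis.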


Hi,

We have not heard back from you. Could you please provide an update on your issue?

Thanks & Regards,

Varsha


Hi, Varsha.

Sorry for the delay.

Running the following:

mpirun -n 2 -ppn 1 hostname

I got the output:

dirac

dirac

The code that I am trying to run is exactly the same hello_mpi (or hello) program from the other replies in this topic.

Using the command:

I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello

I got:

[0] MPI startup(): Intel(R) MPI Library, Version 2021.5 Build 20211102 (id: 9279b7d62)

[0] MPI startup(): Copyright (C) 2003-2021 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (1068 MB per rank) * (2 local ranks) = 2136 MB total

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

[0] MPI startup(): libfabric version: 1.13.2rc1-impi

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:4589:core:core:ofi_hmem_init():214<warn> Failed to initialize hmem iface FI_HMEM_ZE: Input/output error

libfabric:4589:core:mr:ofi_default_cache_size():78<info> default cache size=2807081130

libfabric:4588:core:core:ofi_hmem_init():214<warn> Failed to initialize hmem iface FI_HMEM_ZE: Input/output error

libfabric:4588:core:mr:ofi_default_cache_size():78<info> default cache size=2807081130

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:4588:core:mr:ofi_default_cache_size():78<info> default cache size=2807081130

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:4589:core:mr:ofi_default_cache_size():78<info> default cache size=2807081130

libfabric:4588:verbs:fabric:vrb_init_info():1336<info> no RDMA devices found

libfabric:4588:core:core:ofi_register_provider():474<info> registering provider: tcp (113.20)

libfabric:4588:core:core:ofi_register_provider():474<info> registering provider: sockets (113.20)

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:4588:core:core:ofi_register_provider():474<info> registering provider: shm (113.20)

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4588:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:4589:verbs:fabric:vrb_init_info():1336<info> no RDMA devices found

libfabric:4589:core:core:ofi_register_provider():474<info> registering provider: tcp (113.20)

libfabric:4589:core:core:ofi_register_provider():474<info> registering provider: sockets (113.20)

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ZE not supported

libfabric:4589:core:core:ofi_register_provider():474<info> registering provider: shm (113.20)

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_CUDA not supported

libfabric:4589:core:core:ofi_hmem_init():209<info> Hmem iface FI_HMEM_ROCR not supported

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 4588 RUNNING AT dirac

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 4589 RUNNING AT dirac

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

And running the following:

mpirun -check_mpi -n 2 -ppn 1 ./hello

I only got the termination messages:

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 0 PID 4610 RUNNING AT dirac

= KILLED BY SIGNAL: 11 (Segmentation fault)

===================================================================================

===================================================================================

= BAD TERMINATION OF ONE OF YOUR APPLICATION PROCESSES

= RANK 1 PID 4611 RUNNING AT dirac

= KILLED BY SIGNAL: 9 (Killed)

===================================================================================

Trying the command:

I_MPI_FABRICS=shm I_MPI_DEBUG=30 FI_LOG_LEVEL=debug mpirun -n 2 -ppn 1 ./hello_mpi

I got the output:

[0] MPI startup(): Intel(R) MPI Library, Version 2021.5 Build 20211102 (id: 9279b7d62)

[0] MPI startup(): Copyright (C) 2003-2021 Intel Corporation. All rights reserved.

[0] MPI startup(): library kind: release

[0] MPI startup(): shm segment size (1068 MB per rank) * (2 local ranks) = 2136 MB total

[0] MPI startup(): File "" not found

[0] MPI startup(): Load tuning file: "/opt/intel/oneapi/mpi/2021.5.1/etc/tuning_skx_shm.dat"

[0] MPI startup(): Rank Pid Node name Pin cpu

[0] MPI startup(): 0 4626 dirac {0,1,2,6,7,8}

[0] MPI startup(): 1 4627 dirac {3,4,5,9,10,11}

[0] MPI startup(): I_MPI_ROOT=/opt/intel/oneapi/mpi/2021.5.1

[0] MPI startup(): I_MPI_MPIRUN=mpirun

[0] MPI startup(): I_MPI_HYDRA_TOPOLIB=hwloc

[0] MPI startup(): I_MPI_INTERNAL_MEM_POLICY=default

[0] MPI startup(): I_MPI_FABRICS=shm

[0] MPI startup(): I_MPI_DEBUG=30

[0] MPI startup(): threading: mode: direct

[0] MPI startup(): threading: vcis: 1

[0] MPI startup(): threading: app_threads: 1

[0] MPI startup(): threading: runtime: generic

[0] MPI startup(): threading: is_threaded: 0

[0] MPI startup(): threading: async_progress: 0

[0] MPI startup(): threading: num_pools: 64

[0] MPI startup(): threading: lock_level: global

[0] MPI startup(): threading: enable_sep: 0

[0] MPI startup(): threading: direct_recv: 0

[0] MPI startup(): threading: zero_op_flags: 0

[0] MPI startup(): threading: num_am_buffers: 0

[0] MPI startup(): threading: library is built with per-vci thread granularity

Hello World from rank: 1 of 2 total ranks

Hello World from rank: 0 of 2 total ranks

I hope it helps. Thank you for the support!

Regards,

Gobi
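Across the failing runs in this thread, one rank is always reported with signal 11 (Segmentation fault) and the other with signal 9 (Killed), and which rank segfaults varies (rank 1 in most runs, rank 0 in Gobi's -check_mpi run). One plausible reading, not confirmed here, is that the SIGKILL is the launcher cleaning up the surviving rank after the segfault, so the segfault is the event worth investigating. The hypothetical helper below, assuming a POSIX awk, reduces the "BAD TERMINATION" banners to one line per rank.

```shell
#!/usr/bin/env bash
# Hypothetical helper (a sketch): condense mpirun "BAD TERMINATION" banners
# into lines like "rank 0 (pid 4610 on dirac): signal 11 (Segmentation fault)".
# Reads mpirun output on stdin.
summarize_bad_termination() {
    awk '
        # Banner line naming the rank, its PID and the host.
        /^= RANK [0-9]+ PID [0-9]+ RUNNING AT / {
            rank = $3; pid = $5; host = $8
        }
        # The signal line follows the RANK line; print one summary per rank.
        /^= KILLED BY SIGNAL: / {
            sig = $0
            sub(/^= KILLED BY SIGNAL: /, "", sig)
            sub(/[ \t\r]+$/, "", sig)
            printf "rank %s (pid %s on %s): signal %s\n", rank, pid, host, sig
        }
    '
}
```

For example, `mpirun -check_mpi -n 2 -ppn 1 ./hello 2>&1 | summarize_bad_termination` would list each rank's signal on its own line.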


Hi @gabrielhgobi,

Glad to know that your issue is resolved. Thanks for the confirmation.

Hi @keyurjoshi,

We have not heard back from you. This thread will no longer be monitored by Intel. If you need further assistance, please post a new question.

Thanks & Regards,

Varsha
