
Hi,

When I run my MPI application over 40 machines, one mpi process does not finish and hangs on 'MPI_Finalize'

(The other mpi processes in the other machines show zero cpu usage)

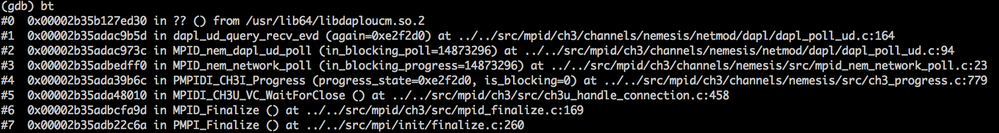

In the bellow, I attached the call stack of the process which is waiting on 'MPI_Finalize' function.

where do I have to start debugging? can anybody give me any clue on it?
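A common first step is to collect full backtraces from every rank on each node, both the one stuck in `MPI_Finalize` and the idle ones, to see which communication is still outstanding. The sketch below assumes `gdb` and `pgrep` are installed on the compute nodes; `dump_stacks` is a hypothetical helper name, and you would run it on each node (for example over `ssh`) with your executable's name.

```shell
#!/bin/sh
# Hypothetical helper: print all-thread backtraces for every process on this
# node whose name is exactly $1. pgrep -x matches the kernel process name,
# which Linux truncates to 15 characters. Attaching may require root or a
# permissive ptrace setting.
dump_stacks() {
  prog="$1"
  for pid in $(pgrep -x "$prog" 2>/dev/null); do
    echo "=== $(hostname) PID $pid ==="
    # -batch makes gdb exit after the -ex command; on exit gdb detaches,
    # so the inspected process keeps running.
    gdb -p "$pid" -batch -ex "thread apply all bt" 2>/dev/null
  done
}
```

For example, `dump_stacks my_mpi_app > "stacks.$(hostname).txt"` on every node gives one file per host that can be compared side by side.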

---

Hello,

Could you provide your launch command? Please also include the DAPL version, the Intel MPI version, and details about the cluster hardware if possible.

If you have time for more experiments, could you try (i) just a few processes with DAPL, and (ii) other fabrics, if available, such as OFA or TMI?
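The two experiments above can be scripted as a small matrix over fabrics and node counts. This is a sketch under assumptions: Intel MPI's `mpirun` with `-n`/`-ppn`, hosts already known to `mpirun` (host file or batch scheduler), and GNU `timeout` so that a hanging run does not block the loop. `run_matrix`, `DRY_RUN`, and `TIMEOUT` are hypothetical names; fabrics that are not installed will simply fail to start.

```shell
#!/bin/sh
# Hypothetical helper: try each fabric at several node counts to narrow down
# where the hang starts. By default it only prints the commands (DRY_RUN=1);
# set DRY_RUN=0 to execute them.
run_matrix() {
  app="$1"
  for fabric in dapl ofa tmi; do
    for np in 2 5 10 20 40; do
      # One rank per node; I_MPI_FALLBACK=0 makes a missing fabric fail
      # instead of silently switching to another one.
      set -- env I_MPI_FABRICS="$fabric" I_MPI_FALLBACK=0 \
        timeout "${TIMEOUT:-600}" mpirun -n "$np" -ppn 1 "$app"
      if [ "${DRY_RUN:-1}" = 1 ]; then
        echo "$*"
      else
        # timeout exits with status 124 when the run had to be killed.
        "$@" || echo "fabric=$fabric np=$np failed or timed out ($?)" >&2
      fi
    done
  done
}
```

Running `DRY_RUN=0 run_matrix ./my_mpi_app` and noting the smallest node count at which each fabric times out would show whether the hang is tied to DAPL or to scale.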

BR,

Mark

---

Hi, I used the following flags:

```shell
export I_MPI_PERHOST=1
export I_MPI_FABRICS=dapl
export I_MPI_FALLBACK=0
export I_MPI_DAPL_UD=1
export MPICH_ASYNC_PROGRESS=1
export I_MPI_RDMA_SCALABLE_PROGRESS=1
export I_MPI_PIN=1
export I_MPI_DYNAMIC_CONNECTION=0
```

I checked which DAPL provider is used with the `I_MPI_DEBUG` option:

```text
MPI startup(): Multi-threaded optimized library
MPI startup(): DAPL provider ofa-v2-mlx4_0-1u with IB UD extension
DAPL startup(): trying to open DAPL provider from I_MPI_DAPL_UD_PROVIDER: ofa-v2-mlx4_0-1u
I_MPI_dlopen_dat(): trying to load default dat library: libdat2.so.2
```

MPI Version: Intel(R) MPI Library for Linux* OS, 64-bit applications, Version 5.1.2 Build 20151015
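When comparing runs (for example across node counts or fabrics), it can help to pull just the provider name out of the `I_MPI_DEBUG` log. This sketch assumes the line format shown in the output above; other Intel MPI versions may word it differently, and `dapl_provider` is a hypothetical helper name.

```shell
#!/bin/sh
# Read an I_MPI_DEBUG log on stdin and print the DAPL provider named on
# "MPI startup(): DAPL provider ..." lines. The leading .* also tolerates
# rank prefixes such as "[0] ".
dapl_provider() {
  sed -n 's/.*MPI startup(): DAPL provider \([^ ]*\).*/\1/p'
}
```

For example, `I_MPI_DEBUG=5 mpirun -n 2 ./my_mpi_app 2>&1 | dapl_provider | sort -u` should print a single provider if every rank picked the same one (the debug level 5 is an assumption; any level that prints the startup lines works).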

Hardware spec:

- OS: CentOS 6.4 Final
- CPU: 2 × Intel® Xeon® CPU E5-2450 @ 2.10 GHz (8 physical cores each)
- RAM: 32 GB per node
- Interconnect: InfiniBand, Mellanox Technologies MT26428 [ConnectX VPI PCIe 2.0 5GT/s - IB QDR / 10GigE]

I also ran the application on fewer machines (5 instead of 40) with a similar data size per machine, and the problem seems to have gone away. But why?

---

Thank you for the detailed response.

Can you run the job without `export MPICH_ASYNC_PROGRESS=1` to see whether the issue goes away?
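One way to run this experiment without editing the job script's `export` lines is to strip the variable only for the launch command. This assumes `mpirun` forwards the launching shell's environment to the ranks, which is Intel MPI's usual behaviour; `without_async_progress` is a hypothetical helper name.

```shell
#!/bin/sh
# Run a command with MPICH_ASYNC_PROGRESS removed from its environment only;
# the calling shell keeps its own setting. `env -u` is supported by GNU
# coreutils env (and BSD env).
without_async_progress() {
  env -u MPICH_ASYNC_PROGRESS "$@"
}
```

For example: `without_async_progress mpirun -n 40 -ppn 1 ./my_mpi_app`.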

Also, could you provide a small reproducer?

Please provide the version of OFED/DAPL as well.
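The requested versions can be gathered on a node with a few standard commands. This sketch assumes Mellanox OFED (which provides `ofed_info`) and an RPM-based distribution such as CentOS with DAPL installed as a package named `dapl`; `report_versions` is a hypothetical helper name, and each query degrades to a short note if the tool is missing.

```shell
#!/bin/sh
# Hypothetical helper: print OFED, DAPL and libdat2 version information.
report_versions() {
  echo "== OFED =="
  ofed_info -s 2>/dev/null || echo "(ofed_info not available)"
  echo "== DAPL package =="
  rpm -q dapl 2>/dev/null || echo "(rpm query failed)"
  echo "== libdat2 =="
  # Shows which libdat2.so.2 the dynamic linker will pick up.
  ldconfig -p 2>/dev/null | grep libdat2 || echo "(libdat2 not in ldconfig cache)"
}
```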

Thanks,

Mark

---

Hi,

- I am using OFED (MLNX_OFED_LINUX-2.4-1.0.4), which is the latest version I can install for my NIC.

Could this issue be related to the driver version (for example, a bug that was reported long ago and fixed in a later version)?

- When I disable `MPICH_ASYNC_PROGRESS`, the program stops making progress in MPI calls: it waits forever in `MPI_Wait` on the request returned by `MPI_Rget` (this also looks like a bug...).

Thanks

---

Could you send a reproducer directly to mark.lubin@intel.com? That would really help.

Thanks,

Mark

---

I just got an internal response regarding the OFED version you use:

> This version of MOFED has a really old dapl-2.1.3 package. They need to upgrade to the latest dapl-2.1.8.
>
> See the download page for changes since 2.1.3: http://downloads.openfabrics.org/dapl/
>
> Latest: http://downloads.openfabrics.org/dapl/dapl-2.1.8.tar.gz
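To check whether a node still has an older DAPL than 2.1.8, a small version comparison is enough. This sketch assumes an RPM-based system (as on CentOS 6.4) where DAPL is installed as a package named `dapl`, and GNU `sort -V` for version ordering; `version_lt` and `installed_dapl` are hypothetical helper names.

```shell
#!/bin/sh
# Succeed if version $1 is strictly older than version $2.
# sort -V orders dotted versions numerically (2.1.8 < 2.1.10).
version_lt() {
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$1" ]
}

# Print the installed DAPL version from rpm; prints nothing if the package
# (or rpm itself) is absent, because non-numeric lines are filtered out.
installed_dapl() {
  rpm -q --qf '%{VERSION}\n' dapl 2>/dev/null | grep -E '^[0-9]' | head -n 1
}
```

For example, `v=$(installed_dapl); if [ -n "$v" ] && version_lt "$v" 2.1.8; then echo "dapl $v is older than 2.1.8"; fi` would flag the dapl-2.1.3 package mentioned in the quoted response.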

---

Do you have an Intel Premier Support account? If so, could you submit a ticket?

Thanks

Mark

---

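It might also be a bug in your code. The MPI standard does not guarantee true asynchronous progress for MPI applications (that is, real overlap of communication and computation). Without it, an operation such as `MPI_Rget` may only complete once the target process itself enters the MPI library, so code that relies on background progress can hang.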
