Hi all!

I am getting "forrtl: severe (174): SIGSEGV, segmentation fault occurred" during the factorization step of CPARDISO. It happens when I try to solve a nonsymmetric system of ~8.5 million equations. The crash usually occurs when factorization is about 80% complete. Everything is fine if the number of equations is ~1 million.

My setup phase is

NRHS = 1

MAXFCT = 1

MNUM = 1

IPARM(1) = 1 ! NO SOLVER DEFAULT

IPARM(2) = 3 ! FILL-IN REORDERING: PARALLEL VERSION OF NESTED DISSECTION (2 WOULD SELECT METIS)

IPARM(4) = 0 ! NO ITERATIVE-DIRECT ALGORITHM

IPARM(6) = 0 ! =0 SOLUTION ON THE FIRST N COMPONENTS OF X

IPARM(8) = 2 ! NUMBERS OF ITERATIVE REFINEMENT STEPS

IPARM(10) = 13 ! PERTURB THE PIVOT ELEMENTS WITH 1E-13

IPARM(11) = 1 ! USE NONSYMMETRIC PERMUTATION AND SCALING MPS

IPARM(13) = 1 ! MAXIMUM WEIGHTED MATCHING ALGORITHM IS SWITCHED-ON (DEFAULT FOR NON-SYMMETRIC)

IPARM(35) = 1 ! ZERO BASE INDEXING

ERROR = 0 ! INITIALIZE ERROR FLAG

MSGLVL = 0 ! DO NOT PRINT STATISTICAL INFORMATION (1 = PRINT)

MTYPE = 11 ! REAL UNSYMMETRIC

I use

mpiifort for the Intel(R) MPI Library 2018 Update 1 for Linux* Copyright(C) 2003-2017, Intel Corporation. All rights reserved. ifort version 18.0.1

My compilation line is

mpiifort -o mpi -O3 -I${MKLROOT}/include -qopenmp MPI_3D.f90 -Wl,--start-group ${MKLROOT}/lib/intel64/libmkl_intel_lp64.a ${MKLROOT}/lib/intel64/libmkl_intel_thread.a ${MKLROOT}/lib/intel64/libmkl_core.a ${MKLROOT}/lib/intel64/libmkl_blacs_intelmpi_lp64.a -Wl,--end-group -liomp5 -lpthread -lm -ldl

I ran this on a cluster using 8 nodes. Each node has 2x Intel(R) Xeon(R) Gold 6142 CPU @ 2.60GHz and 768 GB of RAM.

I am also leaving a link to my matrix in CSR3 format here. Attention: the unzipped files are larger than 2 GB.

https://drive.google.com/file/d/1HOeg-eF0iAlSx533AOi91pfCA6YMFHNd/view?usp=sharing

Hello Oleg!

A quick question: are you sure that the factors for your matrix fit into 32-bit integers? The matrix seems to be quite huge. If you're unsure, can you check and share with us the output of iparm(15)-iparm(18) after phase 1? You'll need to initialize iparm(18) < 0 to get the report for the number of nonzeros in the factors.

If the number of nonzeros in the factors exceeds the limit of 32-bit integers, you'll need to switch to ILP64 mode (64-bit integers), which changes your compilation and link lines. For those, follow the MKL Link Line Advisor (https://software.intel.com/en-us/articles/intel-mkl-link-line-advisor/).

Thanks,

Kirill

Hello Kirill!

Thanks for the quick response! Here is a summary of my matrix:

< Linear system Ax = b >

number of equations: 8470528

number of non-zeros in A: 68307067

number of non-zeros in A (%): 0.000095

number of right-hand sides: 1

And here is something strange when I run phase = 11 with different numbers of MPI processes: iparm(18) always returns a negative value, and iparm(15)-iparm(17) differ:

np = 1

< Factors L and U >

number of columns for each panel: 72

number of independent subgraphs: 0

number of supernodes: 4396346

size of largest supernode: 48712

number of non-zeros in L: 22704680039

number of non-zeros in U: 22536224271

number of non-zeros in L+U: 45240904310

MY RANK IS 0 IPARM(15) = 18771844

MY RANK IS 0 IPARM(16) = 13991103

MY RANK IS 0 IPARM(17) = 355963495

MY RANK IS 0 IPARM(18) = -2003735946

np = 2

< Factors L and U >

number of columns for each panel: 128

number of independent subgraphs: 0

number of supernodes: 4382494

size of largest supernode: 48712

number of non-zeros in L: 23015897339

number of non-zeros in U: 22776795225

number of non-zeros in L+U: 45792692564

MY RANK IS 0 IPARM(15) = 17464877

MY RANK IS 0 IPARM(16) = 13395041

MY RANK IS 0 IPARM(17) = 181358623

MY RANK IS 0 IPARM(18) = -1451947692

MY RANK IS 1 IPARM(15) = 0

MY RANK IS 1 IPARM(16) = 0

MY RANK IS 1 IPARM(17) = 0

MY RANK IS 1 IPARM(18) = -1

np = 4

< Factors L and U >

number of columns for each panel: 128

number of independent subgraphs: 0

number of supernodes: 4389074

size of largest supernode: 48712

number of non-zeros in L: 22824983785

number of non-zeros in U: 22584778823

number of non-zeros in L+U: 45409762608

MY RANK IS 0 IPARM(15) = 17376019

MY RANK IS 0 IPARM(16) = 13355027

MY RANK IS 0 IPARM(17) = 91915182

MY RANK IS 0 IPARM(18) = -1834877648

MY RANK IS 1 IPARM(15) = 0

MY RANK IS 1 IPARM(16) = 0

MY RANK IS 1 IPARM(17) = 0

MY RANK IS 1 IPARM(18) = -1

MY RANK IS 3 IPARM(15) = 0

MY RANK IS 3 IPARM(16) = 0

MY RANK IS 3 IPARM(17) = 0

MY RANK IS 3 IPARM(18) = -1

MY RANK IS 2 IPARM(15) = 0

MY RANK IS 2 IPARM(16) = 0

MY RANK IS 2 IPARM(17) = 0

MY RANK IS 2 IPARM(18) = -1
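The negative iparm(18) values above look like 32-bit wraparound rather than a separate failure: reducing each reported L+U count to a signed 32-bit integer reproduces the printed value exactly. A minimal Python sketch of that arithmetic follows. The helper name `wrap_int32` is illustrative and not part of MKL; the numbers are copied from the output above.

```python
INT32_MAX = 2**31 - 1  # largest value a signed 32-bit integer can hold

def wrap_int32(value):
    """Reduce an integer to the signed 32-bit value with the same low 32 bits."""
    return (value + 2**31) % 2**32 - 2**31

# (MPI processes, reported nnz in L+U, iparm(18) printed on rank 0)
runs = [
    (1, 45240904310, -2003735946),
    (2, 45792692564, -1451947692),
    (4, 45409762608, -1834877648),
]

for nprocs, nnz_lu, iparm18 in runs:
    print(f"np={nprocs}: exceeds int32: {nnz_lu > INT32_MAX}, "
          f"wrapped: {wrap_int32(nnz_lu)}, reported: {iparm18}")
```

All three wrapped values agree with the printed ones, which suggests the "number of non-zeros in L+U" line carries the true count and a 32-bit iparm(18) simply cannot hold it.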

An update: I tried the 64-bit version. MSGLVL = 1 showed that factorization reached 100%, then I got another error:

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000000449C3D Unknown Unknown Unknown
libpthread-2.17.s 00007F43A8EBC5D0 Unknown Unknown Unknown
libmkl_core.so 00007F43A9827C68 mkl_serv_free Unknown Unknown
libmkl_intel_thre 00007F43AD121B95 mkl_pds_blkl_cpar Unknown Unknown
libmkl_core.so 00007F43AACFB6EC mkl_pds_factorize Unknown Unknown
libmkl_core.so 00007F43AACF5D77 mkl_pds_do_all_cp Unknown Unknown
libmkl_core.so 00007F43AACE7A04 mkl_pds_cpardiso_ Unknown Unknown
libmkl_core.so 00007F43AACE51DD mkl_pds_cluster_s Unknown Unknown
mpi 000000000043187A Unknown Unknown Unknown
mpi 0000000000439211 Unknown Unknown Unknown
mpi 000000000040395E Unknown Unknown Unknown
libc-2.17.so 00007F43A73C63D5 __libc_start_main Unknown Unknown
mpi 0000000000403869 Unknown Unknown Unknown

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000000449C3D Unknown Unknown Unknown
libpthread-2.17.s 00007FA6B53B25D0 Unknown Unknown Unknown
libmkl_core.so 00007FA6B5D1DC68 mkl_serv_free Unknown Unknown
libmkl_intel_thre 00007FA6B9617B95 mkl_pds_blkl_cpar Unknown Unknown
libmkl_core.so 00007FA6B71E4C13 mkl_pds_factorize Unknown Unknown
libmkl_core.so 00007FA6B71DAFD2 mkl_pds_cluster_s Unknown Unknown
mpi 000000000043187A Unknown Unknown Unknown
mpi 0000000000439211 Unknown Unknown Unknown
mpi 000000000040395E Unknown Unknown Unknown
libc-2.17.so 00007FA6B38BC3D5 __libc_start_main Unknown Unknown
mpi 0000000000403869 Unknown Unknown Unknown

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000000449C3D Unknown Unknown Unknown
libpthread-2.17.s 00007FA804C485D0 Unknown Unknown Unknown
libmkl_core.so 00007FA8055B3C68 mkl_serv_free Unknown Unknown
libmkl_intel_thre 00007FA808EADB95 mkl_pds_blkl_cpar Unknown Unknown
libmkl_core.so 00007FA806A7AC13 mkl_pds_factorize Unknown Unknown
libmkl_core.so 00007FA806A70FD2 mkl_pds_cluster_s Unknown Unknown
mpi 000000000043187A Unknown Unknown Unknown
mpi 0000000000439211 Unknown Unknown Unknown
mpi 000000000040395E Unknown Unknown Unknown
libc-2.17.so 00007FA8031523D5 __libc_start_main Unknown Unknown
mpi 0000000000403869 Unknown Unknown Unknown

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000000449C3D Unknown Unknown Unknown
libpthread-2.17.s 00007FE2983905D0 Unknown Unknown Unknown
libmkl_core.so 00007FE298CFBC68 mkl_serv_free Unknown Unknown
libmkl_intel_thre 00007FE29C5F5B95 mkl_pds_blkl_cpar Unknown Unknown
libmkl_core.so 00007FE29A1C2C13 mkl_pds_factorize Unknown Unknown
libmkl_core.so 00007FE29A1B8FD2 mkl_pds_cluster_s Unknown Unknown
mpi 000000000043187A Unknown Unknown Unknown
mpi 0000000000439211 Unknown Unknown Unknown
mpi 000000000040395E Unknown Unknown Unknown
libc-2.17.so 00007FE29689A3D5 __libc_start_main Unknown Unknown
mpi 0000000000403869 Unknown Unknown Unknown

I compiled this with

mpiifort -o mpi -O0 -g -mkl=parallel MPI_3D.f90 -lmkl_blacs_intelmpi_ilp64 -liomp5 -lpthread -lm -ldl

With static linking, almost every frame in the trace shows as Unknown.

Hello again!

1) I'm afraid I still don't like your link line. The option "-mkl=parallel" links against the LP64 libraries, so in your last try you actually had a mix of LP64 libraries (from -mkl=parallel) and ILP64 libraries (the BLACS library you added).

Thus, I strongly recommend that you stop using "-mkl" option and instead try to follow our Link line Advisor:

https://software.intel.com/en-us/articles/intel-mkl-link-line-advisor/.

If you check there, it says that you need to add the -i8 flag to your compilation line and use the link line with these options:

-Wl,--start-group ${MKLROOT}/lib/intel64/libmkl_intel_ilp64.a ${MKLROOT}/lib/intel64/libmkl_intel_thread.a ${MKLROOT}/lib/intel64/libmkl_core.a ${MKLROOT}/lib/intel64/libmkl_blacs_intelmpi_ilp64.a -Wl,--end-group -liomp5 -lpthread -lm -ldl

2) It's OK that you see different amounts of required memory in iparm for different numbers of processes; some memory allocations depend on the number of processes.

3) As I see, the number of nonzeros in the factors for your matrix does exceed the 32-bit integer range. Our sparse solver should have handled that without ILP64 mode. However, I am now starting to wonder whether your input matrix fits into the limits. Can you also tell us whether your input matrix is distributed among the MPI processes (iparm(40)=?)? What is the total number of nonzeros in the matrix A, and what are the local numbers (for each process), for the configuration that crashed?

To summarize: could you try the ILP64 mode with correct compilation and link lines, and could you also give us some more information about the matrix?
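As a rough consistency check on point 2, the rank-0 iparm(17) values posted earlier are close to the L+U storage divided by the number of processes. The Python sketch below assumes that iparm(17) is reported in kilobytes and that the factors are stored as 8-byte reals. The even split across ranks is only an approximation for illustration, not a description of how the solver distributes memory, and the names are hypothetical.

```python
BYTES_PER_REAL = 8  # assumption: double precision factors (MTYPE = 11, real)

# (MPI processes, reported nnz in L+U, iparm(17) printed on rank 0)
runs = [
    (1, 45240904310, 355963495),
    (2, 45792692564, 181358623),
    (4, 45409762608, 91915182),
]

def factor_kb_per_process(nnz_lu, nprocs):
    """Estimated factor storage per process in kilobytes, assuming an even split."""
    return nnz_lu * BYTES_PER_REAL / 1024 / nprocs

# Ratio of the reported value to the estimate, one entry per run
ratios = [iparm17 / factor_kb_per_process(nnz_lu, nprocs)
          for nprocs, nnz_lu, iparm17 in runs]

for (nprocs, _, iparm17), ratio in zip(runs, ratios):
    print(f"np={nprocs}: iparm(17)={iparm17} KB, reported/estimated={ratio:.3f}")
```

Under these assumptions the reported values stay within a few percent of the estimate, so the drop in iparm(17) as processes are added is what one would expect if the factors are spread over the ranks.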

I hope this helps!

Best,

Kirill
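The LP64/ILP64 mix described in point 1 can be spotted mechanically. Below is a small Python sketch that scans a link line for MKL library names ending in `_lp64` or `_ilp64` and treats `-mkl=...` as LP64, as stated above. The function `mkl_integer_interfaces` is a hypothetical helper written for this thread, not an Intel tool.

```python
import re

def mkl_integer_interfaces(link_line):
    """Return the set of MKL integer interfaces ('lp64', 'ilp64') named on a link line."""
    found = set(re.findall(r"mkl_[a-z0-9_]*?_(i?lp64)", link_line))
    # Per the advice in this thread, the -mkl=... convenience option links LP64 libraries.
    if re.search(r"(?:^|\s)-mkl(?:=\w+)?(?=\s|$)", link_line):
        found.add("lp64")
    return found

# The two command lines discussed in this thread
mixed = ("mpiifort -o mpi -O0 -g -mkl=parallel MPI_3D.f90 "
         "-lmkl_blacs_intelmpi_ilp64 -liomp5 -lpthread -lm -ldl")
advised = ("mpiifort -o mpi -O3 -i8 -I${MKLROOT}/include -qopenmp MPI_3D.f90 "
           "-Wl,--start-group ${MKLROOT}/lib/intel64/libmkl_intel_ilp64.a "
           "${MKLROOT}/lib/intel64/libmkl_intel_thread.a "
           "${MKLROOT}/lib/intel64/libmkl_core.a "
           "${MKLROOT}/lib/intel64/libmkl_blacs_intelmpi_ilp64.a "
           "-Wl,--end-group -liomp5 -lpthread -lm -ldl")

for name, line in [("mixed", mixed), ("advised", advised)]:
    kinds = mkl_integer_interfaces(line)
    status = "MIXED" if len(kinds) > 1 else "consistent"
    print(f"{name}: {sorted(kinds)} -> {status}")
```

A check like this only looks at names on the command line. It does not verify that the program itself was compiled with -i8, which the ILP64 libraries also require.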

Hello Kirill!

Thank you for helping me.

1) I now compile and link statically:

mpiifort -o mpi -O3 -i8 -I${MKLROOT}/include -qopenmp MPI_3D.f90 -Wl,--start-group ${MKLROOT}/lib/intel64/libmkl_intel_ilp64.a ${MKLROOT}/lib/intel64/libmkl_intel_thread.a ${MKLROOT}/lib/intel64/libmkl_core.a ${MKLROOT}/lib/intel64/libmkl_blacs_intelmpi_ilp64.a -Wl,--end-group -liomp5 -lpthread -lm -ldl

The output ends with:

Percentage of computed non-zeros for LL^T factorization

1 % 2 % 3 % 4 % 5 % 6 % 11 % 12 % 13 % 14 % 15 % 19 % 21 % 22 % 23 % 24 % 29 % 30 % 31 % 32 % 34 % 38 % 39 % 40 % 41 % 44 % 46 % 47 % 48 % 49 % 50 % 51 % 53 % 58 % 59 % 60 % 61 % 62 % 63 % 64 % 65 % 66 % 67 % 68 % 69 % 70 % 73 % 75 % 77 % 78 % 80 % 83 % 85 % 88 % 89 % 90 % 91 % 92 % 93 % 94 % 95 % 96 % 97 % 98 % 99 % 100 %

[mpiexec@node79] Exit codes: [node79] 44544 [node80] 0 [node81] 0 [node82] 0

and the errors are:

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000004A9CC2D Unknown Unknown Unknown
libpthread-2.17.s 00007F93A09555D0 Unknown Unknown Unknown
mpi 000000000045F988 Unknown Unknown Unknown
mpi 00000000008D61B5 Unknown Unknown Unknown
mpi 000000000059F7AC Unknown Unknown Unknown
mpi 0000000000504417 Unknown Unknown Unknown
mpi 000000000048A1B4 Unknown Unknown Unknown
mpi 000000000042D09D Unknown Unknown Unknown
mpi 0000000000424F68 Unknown Unknown Unknown
mpi 0000000000407F0C Unknown Unknown Unknown
mpi 0000000000406C7C Unknown Unknown Unknown
mpi 000000000040681E Unknown Unknown Unknown
libc-2.17.so 00007F939EC2A3D5 __libc_start_main Unknown Unknown
mpi 0000000000406729 Unknown Unknown Unknown

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000004A9CC2D Unknown Unknown Unknown
libpthread-2.17.s 00007F97DAD685D0 Unknown Unknown Unknown
mpi 000000000045F988 Unknown Unknown Unknown
mpi 00000000008D61B5 Unknown Unknown Unknown
mpi 00000000004886F3 Unknown Unknown Unknown
mpi 000000000042CE92 Unknown Unknown Unknown
mpi 0000000000424F68 Unknown Unknown Unknown
mpi 0000000000407F0C Unknown Unknown Unknown
mpi 0000000000406C7C Unknown Unknown Unknown
mpi 000000000040681E Unknown Unknown Unknown
libc-2.17.so 00007F97D903D3D5 __libc_start_main Unknown Unknown
mpi 0000000000406729 Unknown Unknown Unknown

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000004A9CC2D Unknown Unknown Unknown
libpthread-2.17.s 00007F7BE27605D0 Unknown Unknown Unknown
mpi 000000000045F988 Unknown Unknown Unknown
mpi 00000000008D61B5 Unknown Unknown Unknown
mpi 00000000004886F3 Unknown Unknown Unknown
mpi 000000000042CE92 Unknown Unknown Unknown
mpi 0000000000424F68 Unknown Unknown Unknown
mpi 0000000000407F0C Unknown Unknown Unknown
mpi 0000000000406C7C Unknown Unknown Unknown
mpi 000000000040681E Unknown Unknown Unknown
libc-2.17.so 00007F7BE0A353D5 __libc_start_main Unknown Unknown
mpi 0000000000406729 Unknown Unknown Unknown

forrtl: severe (174): SIGSEGV, segmentation fault occurred
Image PC Routine Line Source
mpi 0000000004A9CC2D Unknown Unknown Unknown
libpthread-2.17.s 00007F0D5EDD55D0 Unknown Unknown Unknown
mpi 000000000045F988 Unknown Unknown Unknown
mpi 00000000008D61B5 Unknown Unknown Unknown
mpi 00000000004886F3 Unknown Unknown Unknown
mpi 000000000042CE92 Unknown Unknown Unknown
mpi 0000000000424F68 Unknown Unknown Unknown
mpi 0000000000407F0C Unknown Unknown Unknown
mpi 0000000000406C7C Unknown Unknown Unknown
mpi 000000000040681E Unknown Unknown Unknown
libc-2.17.so 00007F0D5D0AA3D5 __libc_start_main Unknown Unknown
mpi 0000000000406729 Unknown Unknown Unknown

2) It is strange that in post 3 the numbers of nonzeros in L, U and L+U differ between runs.

3) iparm(40) = 0; the matrix is stored on rank 0. I already showed you some info about my matrix:

< Linear system Ax = b >

number of equations: 8470528

number of non-zeros in A: 68307067

number of non-zeros in A (%): 0.000095

number of right-hand sides: 1

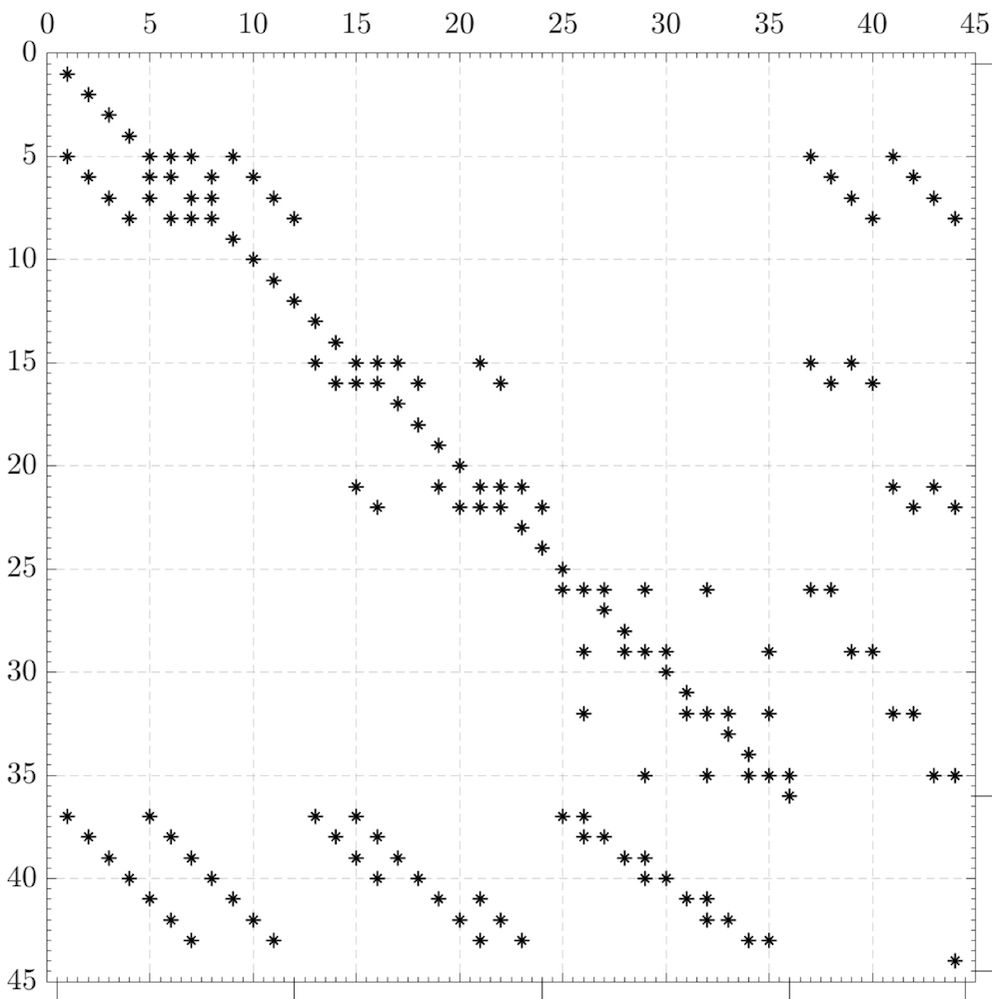

I can add that is ill conditioned, it has a lot of zeroes on main diagonal. The portrait of matrix of a lower size is:
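The matrix summary in this post also speaks to the question about input limits: the nonzero count of A is far below the signed 32-bit maximum, while the factor count reported earlier in the thread is not. A short Python check of those figures, using only numbers posted in this thread (variable names are illustrative):

```python
INT32_MAX = 2**31 - 1  # largest signed 32-bit integer

n = 8470528           # number of equations
nnz_a = 68307067      # non-zeros in A
nnz_lu = 45240904310  # non-zeros in L+U reported for np = 1

density_pct = 100.0 * nnz_a / (n * n)  # share of non-zero entries, in percent
fill_ratio = nnz_lu / nnz_a            # growth in non-zeros from A to L+U

print(f"nnz(A) fits in int32:   {nnz_a <= INT32_MAX}")
print(f"nnz(L+U) fits in int32: {nnz_lu <= INT32_MAX}")
print(f"density of A:           {density_pct:.6f} %")
print(f"average nnz per row:    {nnz_a / n:.2f}")
print(f"fill-in ratio (L+U)/A:  {fill_ratio:.0f}")
```

The density reproduces the 0.000095 figure in the summary, and the factors hold several hundred times more nonzeros than A, which is where the 32-bit range is exceeded.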

Oleg, this looks like a real issue. You may submit a ticket to the Intel Online Service Center, where you can share all your inputs (reproducer, input matrix and other details) regarding this problem.

OSC - https://supporttickets.intel.com/?lang=en-US

and here is how to create the access: https://software.intel.com/en-us/articles/how-to-create-a-support-request-at-online-service-center

Well, this is disappointing.

Our records indicate that you do not have a supported product associated with your account. You need a supported product in order to qualify for Priority Support. As such, this ticket is being closed.

It means you don't have a valid COM license. You may give us the reproducer and input matrix for your case in this thread, or communicate with me via email if you would rather not share all the details on the open forum.

Hello, Gennady

I didn't find your email. Can you share it with me?

Yes, I will.
