My KNL platform is a single node with an Intel(R) Xeon Phi(TM) CPU 7210 @ 1.30GHz (64 cores) and 64GB of memory. I am having problems with the LINPACK benchmark.

Before trying the Intel® Optimized MP LINPACK Benchmark for Clusters, I ran HPL 2.2 and the Intel Optimized MP LINPACK Benchmark, and both results were poor. The best result was 486 Gflops with HPL 2.2 and 683.6404 Gflops with the Intel Optimized MP LINPACK Benchmark. However, the theoretical peak performance is 1*64*1.3*32=2662.4 Gflops.
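As a quick check on the arithmetic above, here is a minimal Python sketch of the peak formula and the resulting efficiencies. The function names are illustrative only, and the 32 FLOPs per cycle per core is the figure used in the post (two AVX-512 units, 8 doubles each, 2 operations per FMA).

```python
# Theoretical double-precision peak, following the formula in the post:
# nodes * cores * clock (GHz) * FLOPs per cycle per core.
def peak_gflops(nodes, cores, ghz, flops_per_cycle=32):
    # 32 = 2 AVX-512 vector units * 8 doubles * 2 ops per FMA
    return nodes * cores * ghz * flops_per_cycle


def efficiency(measured_gflops, peak):
    """Fraction of the theoretical peak that a measured result reaches."""
    return measured_gflops / peak


peak = peak_gflops(nodes=1, cores=64, ghz=1.3)

# Results quoted in the post.
results = [("HPL 2.2", 486.0), ("Intel Optimized MP LINPACK", 683.6404)]
for label, measured in results:
    print(f"{label}: {measured:.1f} of {peak:.1f} Gflops "
          f"= {efficiency(measured, peak):.1%} of peak")
```

Both runs land well below 30% of the nominal peak, which is the gap the question is about.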

So I am confused. The result looks as if AVX-512 is not being used. What am I doing wrong?

In the HPL 2.2 test, I set N=82800, NB=336, P=4, Q=16 and ran "mpiexec -n 64 ./xhpl", which gave my best HPL 2.2 result (486 Gflops). I also tested N=82800, NB=336, P=8, Q=32 with "mpiexec -n 256 ./xhpl", but the result was lower because there was not enough memory.
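To see how close N=82800 comes to the memory limit, the sketch below computes the size of the N x N double-precision matrix and picks the largest multiple of NB that fits in a given fraction of memory. The 80% fraction is a common rule of thumb, not something stated in this thread, and treating "64GB" as 64 GiB is also an assumption. The helper names are hypothetical.

```python
import math


def hpl_matrix_gib(n):
    """Size in GiB of the N x N matrix of 8-byte doubles that HPL factorizes."""
    return n * n * 8 / 2**30


def suggest_n(mem_gib, nb, fraction=0.8):
    """Largest multiple of nb whose matrix fits in `fraction` of memory.

    fraction=0.8 is an assumed rule of thumb that leaves room for the OS,
    MPI buffers, and the benchmark's own workspace.
    """
    max_elements = int(mem_gib * 2**30 * fraction / 8)
    n_max = math.isqrt(max_elements)
    return (n_max // nb) * nb


print(f"N=82800 needs {hpl_matrix_gib(82800):.1f} GiB for the matrix alone")
print("Suggested N for 64 GiB and NB=336:", suggest_n(64, 336))
```

The matrix alone takes roughly 80% of a 64 GiB node, so any extra per-process overhead from running 256 MPI ranks has very little headroom.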

I am now trying the Intel® Optimized MP LINPACK Benchmark for Clusters, but I have trouble running it. If I run a small test, such as

mpiexec -np 8 ./xhpl -n 10000 -b 336 -p 2 -q 4

I can get a result.

Even if I increase N and the number of processes, such as

mpiexec -np 32 ./xhpl -n 83000 -b 336 -p 4 -q 8

I can get a result too.

But when I set p*q to 64 or more, the run fails:

[root@knl mp_linpack]$ mpiexec -np 64 ./xhpl -n 83000 -b 336 -p 4 -q 16

Number of Intel(R) Xeon Phi(TM) coprocessors : 0

Rank 0: First 5 column_factors=1 1 1 1 1

HPL[knl] pthread_create Error in HPL_pdupdate.

The test exits immediately.

So what should I do to get a higher result in the LINPACK benchmark?

Thanks!

Could you try running the benchmark without mpiexec? On a single node, you do not need multiple MPI processes to get the best performance. You could try something like this:

./xhpl -n 83000 -b 336

Then, when you go to multi-node, please use 1 MPI process per node for the KNL systems.

Thank you.

Dear all,

I have a problem with the results of MKL MP_LINPACK. My system has 24 compute nodes, each with an Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz, a Xeon Phi Q7200, and 256GB of RAM. When I run ./runme_intel64 on each node, the performance is good, ~700-900 GFlops (Xeon CPU only).

But when I run HPL on 4 nodes, 8 nodes or more, the result is very bad, and sometimes the run returns no result at all and fails with the error: MPI TERMINATED,... After that, when I run the test (runme_intel64) on each node again, the performance is very low:

~ 11,243 GFLops,

~ 10,845 GFlops,

....

I don't know the reason. My guess is that the cluster's power supply is not sufficient for the whole system, and that the HPE BIOS is configured in Balanced Mode (which automatically switches to a lower power mode when the system cannot draw enough power). But even when I run on only a few nodes with the power setting at maximum, the problem is still not solved.

Please help me with this problem. Thank you all!

Thank you for your answer.

I followed your advice and tried (with mp_linpack)

./xhpl -n 83000 -b 336

and the result I got was 716.506 Gflops.

This is the best result I have had so far. But the theoretical performance of a single Intel(R) Xeon Phi(TM) CPU 7210 node is 2662.4 Gflops, so there is still a big gap between this result and the theoretical performance.

While running the LINPACK test I used monitoring software and found that CPU utilization was only about 25%. I had learned from some course material that HPL can use all 256 threads on this platform, but the "top" command showed the "xhpl" process using 6400% CPU. I tried to change the number of threads by setting

export OMP_NUM_THREADS=256

export MKL_NUM_THREADS=256

but it had no effect. The result did not change.

I remember that when I ran "mpiexec -np 64 ./xhpl" with HPL 2.2, the program started 64 processes, each using 100% CPU. And when I ran "mpiexec -np 256 ./xhpl" with HPL 2.2, it started 256 processes, each using 100% CPU. Neither gave a good result, though.

It seems that this process only used 64 threads. How can I use all of the threads? Or what else should I do to get a higher result in the LINPACK benchmark?
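The two observations above (6400% in "top" and about 25% overall utilization) are consistent with each other, as this small Python sketch shows. It assumes that "top" reports 100% per fully busy logical CPU and that the 7210 exposes 64 cores with 4 hardware threads each. The function names are illustrative.

```python
def busy_threads(top_cpu_percent):
    """top shows 100% for each fully busy logical CPU."""
    return round(top_cpu_percent / 100)


def utilization(top_cpu_percent, cores, threads_per_core):
    """Fraction of all logical CPUs that the process keeps busy."""
    logical_cpus = cores * threads_per_core
    return busy_threads(top_cpu_percent) / logical_cpus


print(busy_threads(6400))        # 64 busy threads
print(utilization(6400, 64, 4))  # 0.25, i.e. the 25% seen in the monitor
```

So 64 busy threads on 256 logical CPUs reads as 25%, even though every physical core has one thread running.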

One more question: is the error below caused by using multiple MPI processes?

[root@knl mp_linpack]$ mpiexec -np 64 ./xhpl -n 83000 -b 336 -p 4 -q 16

Number of Intel(R) Xeon Phi(TM) coprocessors : 0

Rank 0: First 5 column_factors=1 1 1 1 1

HPL[knl] pthread_create Error in HPL_pdupdate.

Thanks.

Murat Efe Guney (Intel) wrote:

Could you try running the benchmark without the mpiexec? On a single node, we do not need to use multiple MPI processes to get the best performance. You could try something like this:

./xhpl -n 83000 -b 336

Then, when you go to multi-node, please use 1 MPI process per node for the KNL systems.

Thank you.

Thank you for your answer.

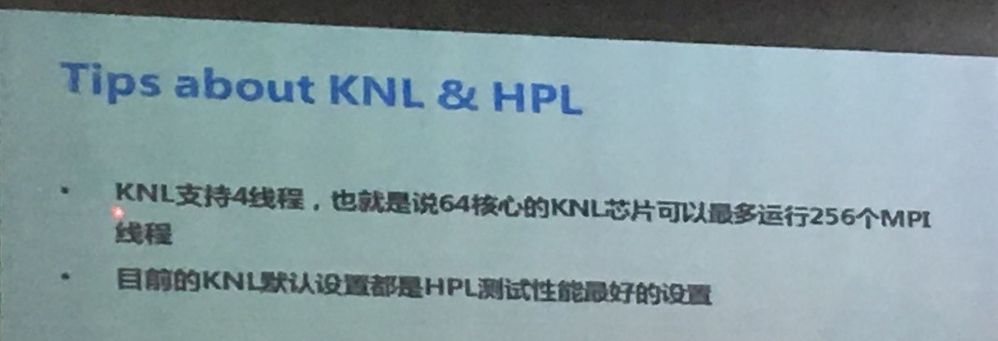

In a training course I learned that "KNL support 4 threads, in other words, this 7210 KNL can run up to 256 MPI threads." "The hardware setting is the best for HPL test currently."

That is why I said I could use all 256 threads.

I then tried setting

export OMP_NUM_THREADS=64

export MKL_NUM_THREADS=64

and running (mp_linpack) Intel® Optimized MP LINPACK Benchmark for Clusters, but the result is still bad.

I googled KMP_AFFINITY and found that it is an environment variable for OpenMP, not MPI. My software environment is Intel Composer, Intel MPI and Intel MKL.

I tried it as well, but it does not seem to help. (The results fluctuate somewhat, but there is still a big gap between them and the theoretical performance.)

I have some questions.

1. As you say,

"A top performance on your KNL system will be when only 64 cores and 64 OpenMP threads are used ( spread across all cores )"

So how should I run mp_linpack (Intel® Optimized MP LINPACK Benchmark for Clusters https://software.intel.com/en-us/node/528619)? With "./xhpl -n 83000 -b 336", with "mpiexec -np 64 ./xhpl -n 83000 -b 336 -p 4 -q 16", or some other way?

2. I also tested HPL 2.2 and the Intel® Optimized LINPACK Benchmark for Linux* (which runs on a single platform, https://software.intel.com/en-us/node/528615), but the results are still not good.

For HPL 2.2, do you know how it should be run?

"mpiexec -np 64 ./xhpl" and in HPL.dat, N=83000 Nb=336 P=4 Q=16

or

"mpiexec -np 256 ./xhpl" and in HPL.dat, N=83000 Nb=336 P=8 Q=32

or other?

Also, the Intel® Optimized LINPACK Benchmark for Linux* Developer Guide gives only a brief introduction and does not describe the input files. How should I run it?

3. The theoretical performance of a single Intel(R) Xeon Phi(TM) CPU 7210 node is 2662.4 Gflops, but the best result I have is 716.506 Gflops. This confuses me a lot. How can I get closer to the theoretical performance?

Thanks.

Sergey Kostrov wrote:

>>... I learned from a material that When I running HPL on this platform, I can use all 256 threads...

A top performance on your KNL system will be when only 64 cores and 64 OpenMP threads are used ( spread across all cores ). That is,

...

export OMP_NUM_THREADS=64

export MKL_NUM_THREADS=64

...

need to be executed instead. Also, try setting the KMP_AFFINITY environment variable to:

...

export KMP_AFFINITY=scatter

or

export KMP_AFFINITY=scatter,verbose

... With KMP_AFFINITY set to compact or balanced, performance could be worse than in scatter mode. I recommend testing all of these modes.
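One way to test the three affinity modes systematically is to build the environment for each run in a small script. The Python sketch below only prints the settings it would use. The helper `hpl_env` is a hypothetical name, and the command line is the one quoted earlier in this thread. Actually launching the benchmark (for example with `subprocess.run(COMMAND, env=env)`) is left commented out.

```python
import os


def hpl_env(threads=64, affinity="scatter", base=None):
    """Return a copy of the environment with the OpenMP/MKL settings applied."""
    env = dict(os.environ if base is None else base)
    env["OMP_NUM_THREADS"] = str(threads)
    env["MKL_NUM_THREADS"] = str(threads)
    env["KMP_AFFINITY"] = affinity
    return env


# Modes named in the post above; "verbose" makes the runtime print the binding.
AFFINITY_MODES = ["scatter", "compact", "balanced"]
COMMAND = ["./xhpl", "-n", "83000", "-b", "336"]  # command quoted in the thread

for mode in AFFINITY_MODES:
    env = hpl_env(threads=64, affinity=f"{mode},verbose")
    print(f"OMP_NUM_THREADS={env['OMP_NUM_THREADS']} "
          f"MKL_NUM_THREADS={env['MKL_NUM_THREADS']} "
          f"KMP_AFFINITY={env['KMP_AFFINITY']} {' '.join(COMMAND)}")
    # To actually run the benchmark (not done here):
    # subprocess.run(COMMAND, env=env, check=True)
```

Keeping N, NB, and the thread count fixed while changing only KMP_AFFINITY makes the comparison between modes easier to read.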

I used the official version of hpl-2.2 on a dual-node Phi 7230 HPC system 3 months ago and reached 3888.69 GFlops (2123 GFlops on a single node), so I think I have some knowledge of configuring and optimizing hpl-2.2 on the KNL platform. I'm sorry to see you got poor performance (486 GFlops); I suspect it was caused by poor compile-time optimization. Show me the specific configuration in your Make.intel64 file. Maybe I can help you... who knows?

Thank you for your answer.

My KNL platform is a single node with an Intel(R) Xeon Phi(TM) CPU 7210 @ 1.30GHz (64 cores) and 64GB of memory. (Should the extra 16GB of MCDRAM in KNL be counted as well?)

My software environment is Intel Composer, Intel MPI and Intel MKL.

My best result with HPL 2.2 is 486 Gflops, with N=82800, NB=336, P=4, Q=16 in HPL.dat.

Here is my Make.intel64 file:

#

# -- High Performance Computing Linpack Benchmark (HPL)

# HPL - 2.2 - February 24, 2016

# Antoine P. Petitet

# University of Tennessee, Knoxville

# Innovative Computing Laboratory

# (C) Copyright 2000-2008 All Rights Reserved

#

# -- Copyright notice and Licensing terms:

#

# Redistribution and use in source and binary forms, with or without

# modification, are permitted provided that the following conditions

# are met:

#

# 1. Redistributions of source code must retain the above copyright

# notice, this list of conditions and the following disclaimer.

#

# 2. Redistributions in binary form must reproduce the above copyright

# notice, this list of conditions, and the following disclaimer in the

# documentation and/or other materials provided with the distribution.

#

# 3. All advertising materials mentioning features or use of this

# software must display the following acknowledgement:

# This product includes software developed at the University of

# Tennessee, Knoxville, Innovative Computing Laboratory.

#

# 4. The name of the University, the name of the Laboratory, or the

# names of its contributors may not be used to endorse or promote

# products derived from this software without specific written

# permission.

#

# -- Disclaimer:

#

# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

# ``AS IS'' AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

# LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

# A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE UNIVERSITY

# OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

# SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

# LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

# DATA OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

# THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

# (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

# ######################################################################

#

# ----------------------------------------------------------------------

# - shell --------------------------------------------------------------

# ----------------------------------------------------------------------

#

SHELL = /bin/sh

#

CD = cd

CP = cp

LN_S = ln -fs

MKDIR = mkdir -p

RM = /bin/rm -f

TOUCH = touch

#

# ----------------------------------------------------------------------

# - Platform identifier ------------------------------------------------

# ----------------------------------------------------------------------

#

ARCH = Linux_Intel64

#

# ----------------------------------------------------------------------

# - HPL Directory Structure / HPL library ------------------------------

# ----------------------------------------------------------------------

#

TOPdir = /home/user002/benchmark/hpl-2.2

INCdir = $(TOPdir)/include

BINdir = $(TOPdir)/bin/$(ARCH)

LIBdir = $(TOPdir)/lib/$(ARCH)

#

HPLlib = $(LIBdir)/libhpl.a

#

# ----------------------------------------------------------------------

# - Message Passing library (MPI) --------------------------------------

# ----------------------------------------------------------------------

# MPinc tells the C compiler where to find the Message Passing library

# header files, MPlib is defined to be the name of the library to be

# used. The variable MPdir is only used for defining MPinc and MPlib.

#

MPdir = /opt/intel/compilers_and_libraries_2017.1.132/linux/mpi

MPinc = -I$(MPdir)/include64

MPlib = $(MPdir)/lib64/libmpi.a

#

# ----------------------------------------------------------------------

# - Linear Algebra library (BLAS or VSIPL) -----------------------------

# ----------------------------------------------------------------------

# LAinc tells the C compiler where to find the Linear Algebra library

# header files, LAlib is defined to be the name of the library to be

# used. The variable LAdir is only used for defining LAinc and LAlib.

#

LAdir = /opt/intel/compilers_and_libraries_2017.1.132/linux/mkl

ifndef LAinc
LAinc = $(LAdir)/include
endif
ifndef LAlib
LAlib = -L$(LAdir)/lib/intel64 \
        -Wl,--start-group \
        $(LAdir)/lib/intel64/libmkl_intel_lp64.a \
        $(LAdir)/lib/intel64/libmkl_intel_thread.a \
        $(LAdir)/lib/intel64/libmkl_core.a \
        -Wl,--end-group -lpthread -ldl
endif

#

# ----------------------------------------------------------------------

# - F77 / C interface --------------------------------------------------

# ----------------------------------------------------------------------

# You can skip this section if and only if you are not planning to use

# a BLAS library featuring a Fortran 77 interface. Otherwise, it is

# necessary to fill out the F2CDEFS variable with the appropriate

# options. **One and only one** option should be chosen in **each** of

# the 3 following categories:

#

# 1) name space (How C calls a Fortran 77 routine)

#

# -DAdd_ : all lower case and a suffixed underscore (Suns,

# Intel, ...), [default]

# -DNoChange : all lower case (IBM RS6000),

# -DUpCase : all upper case (Cray),

# -DAdd__ : the FORTRAN compiler in use is f2c.

#

# 2) C and Fortran 77 integer mapping

#

# -DF77_INTEGER=int : Fortran 77 INTEGER is a C int, [default]

# -DF77_INTEGER=long : Fortran 77 INTEGER is a C long,

# -DF77_INTEGER=short : Fortran 77 INTEGER is a C short.

#

# 3) Fortran 77 string handling

#

# -DStringSunStyle : The string address is passed at the string loca-

# tion on the stack, and the string length is then

# passed as an F77_INTEGER after all explicit

# stack arguments, [default]

# -DStringStructPtr : The address of a structure is passed by a

# Fortran 77 string, and the structure is of the

# form: struct {char *cp; F77_INTEGER len;},

# -DStringStructVal : A structure is passed by value for each Fortran

# 77 string, and the structure is of the form:

# struct {char *cp; F77_INTEGER len;},

# -DStringCrayStyle : Special option for Cray machines, which uses

# Cray fcd (fortran character descriptor) for

# interoperation.

#

F2CDEFS = -DAdd__ -DF77_INTEGER=int -DStringSunStyle

#

# ----------------------------------------------------------------------

# - HPL includes / libraries / specifics -------------------------------

# ----------------------------------------------------------------------

#

HPL_INCLUDES = -I$(INCdir) -I$(INCdir)/$(ARCH) -I$(LAinc) $(MPinc)

HPL_LIBS = $(HPLlib) $(LAlib) $(MPlib)

#

# - Compile time options -----------------------------------------------

#

# -DHPL_COPY_L force the copy of the panel L before bcast;

# -DHPL_CALL_CBLAS call the cblas interface;

# -DHPL_CALL_VSIPL call the vsip library;

# -DHPL_DETAILED_TIMING enable detailed timers;

#

# By default HPL will:

# *) not copy L before broadcast,

# *) call the BLAS Fortran 77 interface,

# *) not display detailed timing information.

#

#HPL_OPTS = -DHPL_DETAILED_TIMING -DHPL_PROGRESS_REPORT

HPL_OPTS = -DASYOUGO -DHYBRID

#

# ----------------------------------------------------------------------

#

HPL_DEFS = $(F2CDEFS) $(HPL_OPTS) $(HPL_INCLUDES)

#

# ----------------------------------------------------------------------

# - Compilers / linkers - Optimization flags ---------------------------

# ----------------------------------------------------------------------

#

CC = mpiicc

CCNOOPT = $(HPL_DEFS) -O0 -w -nocompchk

#OMP_DEFS = -openmp

#CCFLAGS = $(HPL_DEFS) -O3 -w -z noexecstack -z relro -z now -nocompchk -Wall

CCFLAGS = $(HPL_DEFS) -O3 -w -ansi-alias -i-static -z noexecstack -z relro -z now -openmp -nocompchk

#

# On some platforms, it is necessary to use the Fortran linker to find

# the Fortran internals used in the BLAS library.

#

LINKER = $(CC)

#LINKFLAGS = $(CCFLAGS) $(OMP_DEFS) -mt_mpi

LINKFLAGS = $(CCFLAGS) -openmp -mt_mpi $(STATICFLAG) -nocompchk

#

ARCHIVER = ar

ARFLAGS = r

RANLIB = echo

#

# ----------------------------------------------------------------------

I look forward to your answer. You are so kind! Thanks!

doctor_duo_sim wrote:

I've used the official version of hpl-2.2 on a dual nodes Phi 7230 HPC 3 months ago, and I reached 3888.69GFlops(Single node 2123GFlops). thus I think I have some knowledge of configure and optimize hpl-2.2 on KNL platform. I'm sorry to see you got bad performance (486GFlops), but I prefer to assert it was caused by bad compile optimization. show me your specific configuration of Make.intel64 file. Maybe I can help you...who knows?..

... I've checked, and there seems to be nothing wrong with your Make.intel64 file, so I'm afraid I can't figure out why your score is so poor. You can refer to this page; it may help you. As for the 16GB HBM, you should restart the server and check your BIOS to make sure it is set as cache to get the best performance.

Thanks for your answer.

You are so kind!

I will refer to this page and run the HPL test again.

I also hope you can give me some help with running HPL.

Can you show me the contents of your HPL.dat and HPL.out for your best result? And your run command, such as "mpirun -np 64 ./xhpl" or something else? And any other run settings, such as environment variables?

I think this may help me to solve this problem.

Thanks!

Duo S. wrote:

...I've checked but it seems nothing wrong about your Make.intel64 file, I'm afraid that I can't figure out why you get so poor score . you can refer to this page, it may help you, as for the 16GB HBM, you should restart the sever and check your bios to make sure you have set it as cache to get best performance.

Hi Sergey,

I am on the same platform as you, an Intel Xeon Phi Processor 7210 (16GB, 1.30 GHz, 64 cores). What I want to observe is the performance impact of the thread count, starting from 1 thread mapped to 1 core (rest turned off), then 2 threads mapped to 2 different cores (rest turned off), and so on, up to 256 threads mapped to 64 different cores. For an initial analysis I can do without mapping threads to cores, but I want a specific number of threads based on the number of active cores.

For such a test, which benchmark would you suggest, and what should I be aware of? I tried DeepBench, but I still need to figure out how to make use of threading in it.
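A sweep like the one described above can be planned with a few lines of Python. This is only a sketch under stated assumptions: it relies on OMP_NUM_THREADS and KMP_AFFINITY=scatter (both mentioned earlier in this thread) to spread threads over cores, so unused cores are left idle rather than switched off. The function name `sweep` is illustrative.

```python
import math


def sweep(cores=64, threads_per_core=4):
    """Yield (threads, active_cores, max_threads_per_core) for each step.

    With scatter placement, threads fill distinct cores first, so the number
    of active cores is min(threads, cores).
    """
    for threads in range(1, cores * threads_per_core + 1):
        active_cores = min(threads, cores)
        per_core = math.ceil(threads / cores)
        yield threads, active_cores, per_core


plan = list(sweep())

# Show a few representative steps of the 256-step plan.
for threads, active, per_core in (plan[0], plan[1], plan[63], plan[64], plan[255]):
    print(f"OMP_NUM_THREADS={threads:3d} KMP_AFFINITY=scatter -> "
          f"{active} active cores, up to {per_core} thread(s) per core")
```

Each step would then be one benchmark run with the printed settings, which keeps the thread count tied to the number of active cores as requested.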

Thanks.

Estimating "peak" performance on KNL is a bit tricky... For my Xeon Phi 7250 processors (68-core, 1.4 GHz nominal), the guaranteed frequency running AVX-512-heavy code is 1.2 GHz. The Xeon Phi core is also a 2-instruction-issue core, but peak performance requires 2 FMA's per cycle -- so any instruction that is not an FMA is a direct subtraction from the maximum available performance. It is difficult to be precise, but it is hard to imagine any encoding of the *DGEMM kernel that does not contain about 20% non-FMA instructions.

So a ballpark double-precision "adjusted peak" for the Xeon Phi 7250 is

68 cores * 1.2 GHz * 32 DP FP Ops/Hz * 80% FMA density = 2089 GFLOPS

For DGEMM problems that can fit all three arrays into MCDRAM (in flat-quadrant mode), I have seen performance of just over 2000 GFLOPS. I don't understand why, but these runs maintain an average frequency that is significantly higher than 1.2 GHz -- close to 1.4 GHz. The observed performance is ~85% of the "adjusted peak" performance at the observed frequency, which seems pretty reasonable.

HPL execution is dominated by DGEMM, but the overall algorithm is much more complex. Unlike DGEMM, when I run HPL on KNL I do see frequencies close to the expected power-limited 1.2 GHz. Also unlike DGEMM, when I run HPL I find that the KNL does not reach asymptotic performance for problem sizes that fit into the MCDRAM memory. To get asymptotic performance for larger problems, you need to either run with the MCDRAM in cached mode, or you need an implementation that explicitly stages the data (in large blocks) through MCDRAM. If I recall correctly, asymptotic HPL performance on KNL requires array sizes of at least 50-60 GiB. On clusters, even larger sizes (per KNL) are needed to minimize overhead due to inter-node MPI communication.
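The "adjusted peak" estimate and the problem-size remark above can be reproduced with a short Python sketch. All numbers come from this post; the function name is illustrative, and using exactly 2000 GFLOPS and exactly 1.4 GHz for the observed DGEMM case is a simplification of "just over 2000" and "close to 1.4 GHz".

```python
import math


def adjusted_peak_gflops(cores, ghz, flops_per_cycle=32, fma_density=0.8):
    """Peak scaled by the fraction of issued instructions that are FMAs."""
    return cores * ghz * flops_per_cycle * fma_density


at_guaranteed = adjusted_peak_gflops(68, 1.2)  # AVX-512 guaranteed frequency
at_observed = adjusted_peak_gflops(68, 1.4)    # frequency seen in DGEMM runs

print(round(at_guaranteed))                    # 2089
# About 82% with the rounded inputs, in line with the ~85% quoted above.
print(f"{2000 / at_observed:.0%}")

# Matrix order N at which the N x N double-precision array reaches 50 GiB.
n_for_50_gib = math.isqrt(50 * 2**30 // 8)
print(n_for_50_gib)                            # 81920
```

By this estimate, the N of about 83000 used earlier in the thread is just above the lower end of the 50-60 GiB range.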

Dear McCalpin, John (Blackbelt),

Your information is really helpful. My architecture is also a Xeon Phi 7250 (68 cores, 1.4 GHz), but the performance I get from HPL is just 804 Gflops on 68 cores.

Could you explain in more detail how to use MCDRAM? Do I have to set environment variables, or do I need to modify the source code to use MCDRAM memory?

Or could you guide me in tuning some parameters in HPL.dat to get good performance?

I hope to hear from you soon.

Thanks a lot.
