
Hello All!

I have a cluster of 12 compute nodes, each with two 10-core E5-2650 processors (dual socket) and 64GB of memory.

I ran the MKL single-node LINPACK benchmark and got around 650 Gflops, which seems reasonable.

However, when I ran MP_Linpack with `mpirun -np 80 -hostfile hostfile ./xhpl_intel64` (the hostfile has 40 lines of compute101 and 40 lines of compute102), I got only 161 Gflops. Shouldn't I be getting around 1300 Gflops?

My HPL.dat uses a problem size of N = 72192 with P = 8 and Q = 10.

Can anyone help me out?

Cheers!

Simon.
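For choosing N, a widely used rule of thumb (an assumption here, not something stated in this thread) is to size the N x N matrix of 8-byte doubles to about 80% of the total memory across all nodes, then round N down to a multiple of NB. The sketch below treats "64GB" as 64 GiB; `hpl_n_from_memory` is a hypothetical helper name.

```bash
# Rule-of-thumb HPL problem size: the N x N matrix of doubles should fill
# roughly FRAC (default 80%, an assumption) of total memory, with N rounded
# down to a multiple of the block size NB.
hpl_n_from_memory() {
  local nodes=$1 gib_per_node=$2 nb=$3 frac=${4:-0.80}
  awk -v nodes="$nodes" -v gib="$gib_per_node" -v nb="$nb" -v frac="$frac" 'BEGIN {
    bytes = nodes * gib * 1024 * 1024 * 1024   # total memory in bytes
    n = sqrt(frac * bytes / 8)                 # 8 bytes per double
    printf "%d\n", int(n / nb) * nb            # round down to a multiple of NB
  }'
}

# 12 nodes x 64 GiB, NB = 192 (the block size used in the HPL.dat below)
hpl_n_from_memory 12 64 192   # prints 287040
```

By this estimate, N = 72192 uses only about 5% of the cluster's memory, so the run spends proportionally more time in communication than in computation.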


Hi Simon,

Could you tell us your MPI and MKL versions? Are you running the latest benchmark from https://software.intel.com/en-us/articles/intel-mkl-benchmarks-suite?

The MKL User's Guide has a section, "Running the Intel Optimized MP LINPACK Benchmark", that you may want to refer to.

As I understand it, you have 12 dual-socket E5-2650 10-core compute nodes with 64GB of memory each, so there are 12 x 2 x 10 = 240 CPU cores in total, but the number of MPI processes should be about 12 or 24. How about trying P x Q equal to your node count (12), or 24? Please also post your HPL.dat here.

Best Regards,

Ying
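HPL requires P x Q to equal the number of MPI ranks. The sketch below (a hypothetical `hpl_grid` helper) picks the most nearly square factorization with P <= Q; "most square" is a common starting heuristic, not an Intel requirement, but it reproduces the grids used in this thread.

```bash
# Choose an HPL process grid for a given number of MPI ranks:
# the largest P <= sqrt(ranks) that divides ranks, and Q = ranks / P.
hpl_grid() {
  local ranks=$1 p best=1
  for (( p = 1; p * p <= ranks; p++ )); do
    if (( ranks % p == 0 )); then best=$p; fi
  done
  echo "P=$best Q=$(( ranks / best ))"
}

for r in 12 20 24 80; do
  echo "$r ranks -> $(hpl_grid "$r")"
done
# 12 ranks -> P=3 Q=4
# 20 ranks -> P=4 Q=5
# 24 ranks -> P=4 Q=6
# 80 ranks -> P=8 Q=10
```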


Hello!

It looks like I am using benchmarks_11.3.1.

I have the Intel MPI runtime l_mpi-rt_p_5.1.2.150.

I run the precompiled xhpl_intel64 binary with the mpirun command.

/share/apps/l_mklb_p_11.3.1.

This is the HPL.dat that I am using. I am trying to run this on a single node first... With this HPL.dat, I am only getting about 82 Gflops... which is even worse than before.

```
mpirun -np 20 -hosts compute101 ./xhpl_intel64
```

```
HPLinpack benchmark input file
Innovative Computing Laboratory, University of Tennessee
HPL.out output file name (if any)
6 device out (6=stdout,7=stderr,file)
1 # of problems sizes (N)
72912 Ns
1 # of NBs
192 NBs
0 PMAP process mapping (0=Row-,1=Column-major)
1 # of process grids (P x Q)
4 Ps
5 Qs
16.0 threshold
1 # of panel fact
2 PFACTs (0=left, 1=Crout, 2=Right)
1 # of recursive stopping criterium
4 NBMINs (>= 1)
1 # of panels in recursion
2 NDIVs
1 # of recursive panel fact.
1 RFACTs (0=left, 1=Crout, 2=Right)
1 # of broadcast
1 BCASTs (0=1rg,1=1rM,2=2rg,3=2rM,4=
1 # of lookahead depth
1 DEPTHs (>=0)
2 SWAP (0=bin-exch,1=long,2=mix)
64 swapping threshold
0 L1 in (0=transposed,1=no-transposed) form
0 U in (0=transposed,1=no-transposed) form
1 Equilibration (0=no,1=yes)
8 memory alignment in double (> 0)
0
##### This line (no. 32) is ignored (it serves as a separator). ######
0 Number of additional problem sizes for PTRANS
1200 10000 30000 values of N
0 number of additional blocking sizes for PTRANS
40 9 8 13 13 20 16 32 64 values of NB
```

Thanks for helping me out!

Simon!


The problem size you are running is a big one -- it will be easier to debug performance issues if you back the size down to something that runs more quickly. Using a Xeon E5-2680 v3 (12 core, 2.5 GHz), you can get pretty good parallel scaling within a single node with problem sizes as small as 10,000. This should run slightly over 1 second on your system (it takes 0.96 seconds on a single node on my system).

For a single node you can get some additional diagnostic information by running the command under "perf stat". Part of the output will be "CPUs utilized", which will tell you whether you are actually using all the cores that you think you have requested....
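The `perf stat` check can be scripted. The hypothetical `cpus_utilized` helper below extracts the "CPUs utilized" value from perf's summary. perf prints that summary to stderr and its column layout differs between versions, so the parser relies only on the number sitting just before the word "CPUs". On a node where all 20 physical cores are busy you would expect a value near 20.

```bash
# Print the number that precedes "CPUs utilized" in perf stat output (read from stdin).
cpus_utilized() {
  awk '/CPUs utilized/ { for (i = 2; i <= NF; i++) if ($i == "CPUs") print $(i - 1) }'
}

# Hypothetical invocation, run on the compute node itself (perf only sees local
# processes); stderr goes to the pipe, the benchmark's stdout is discarded:
#   perf stat mpirun -np 1 ./xhpl_intel64 -n 10000 -p 1 -q 1 2>&1 >/dev/null | cpus_utilized

# Self-contained demo using a sample line in perf's usual format:
echo '   19123.45 msec task-clock   #   19.873 CPUs utilized' | cpus_utilized   # 19.873
```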

It is a good idea to use Intel's scripts instead of using mpirun directly -- there are a number of environment variables and other controls (such as numactl) that need to be set up correctly for best performance.

You will get best performance (once things are configured correctly) with one thread per physical core. Using both "logical processors" is not a disaster, but it does run a bit slower (maybe 5%-10%) in that configuration. Part of the slowdown is due to the cache blocking -- the code is configured so that each thread expects to use the whole L2 cache -- and part of the slowdown is due to increased interference with OS processes that no longer have free "logical processors" to run on.
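To compare what htop shows against the physical core count, `lscpu -p` lists one line per logical CPU, and the number of distinct (CORE, SOCKET) pairs is the number of physical cores. On the dual-socket 10-core E5-2650 v3 nodes with HT enabled this should report 40 logical CPUs and 20 physical cores; the one-thread-per-physical-core configuration corresponds to the second number.

```bash
# Logical CPUs: one non-comment line per CPU in lscpu's parsable output.
logical=$(lscpu -p=CPU | grep -vc '^#')
# Physical cores: unique (CORE,SOCKET) pairs; hyperthread siblings share a pair.
physical=$(lscpu -p=CORE,SOCKET | grep -v '^#' | sort -u | wc -l)
echo "logical CPUs: $logical, physical cores: $physical"
```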


Thanks Ying and John!

I managed to make some progress! I ran mp_linpack with the following command:

```
mpirun --perhost 1 -n 12 -f hostfile ./xhpl_intel64 -p 3 -q 4 -n 270000
```

```
- The matrix A is randomly generated for each test.
- The following scaled residual check will be computed:
      ||Ax-b||_oo / ( eps * ( || x ||_oo * || A ||_oo + || b ||_oo ) * N )
- The relative machine precision (eps) is taken to be 1.110223e-16
- Computational tests pass if scaled residuals are less than 16.0

ko100 : Column=002560 Fraction=0.005 Kernel= 8.78 Mflops=12521461.91
ko104 : Column=003840 Fraction=0.010 Kernel=8242400.96 Mflops=10779152.20
ko108 : Column=005120 Fraction=0.015 Kernel=7894148.23 Mflops=9405724.51
ko109 : Column=006400 Fraction=0.020 Kernel=7684479.04 Mflops=8985647.38
ko101 : Column=007680 Fraction=0.025 Kernel=7682637.55 Mflops=8873078.37
ko105 : Column=008960 Fraction=0.030 Kernel=8198639.52 Mflops=8911387.84
ko106 : Column=010240 Fraction=0.035 Kernel=7908887.61 Mflops=8442547.48
ko110 : Column=011520 Fraction=0.040 Kernel=7610015.71 Mflops=8349288.51
ko102 : Column=012800 Fraction=0.045 Kernel=7633593.95 Mflops=8349808.96
ko103 : Column=014080 Fraction=0.050 Kernel=8006959.72 Mflops=8509667.10
ko107 : Column=015360 Fraction=0.055 Kernel=7831806.80 Mflops=8169145.41
ko111 : Column=016640 Fraction=0.060 Kernel=7778981.00 Mflops=8141986.92
ko100 : Column=017920 Fraction=0.065 Kernel=8007913.26 Mflops=8139188.59
ko104 : Column=019200 Fraction=0.070 Kernel=7710017.53 Mflops=8330258.72
ko108 : Column=020480 Fraction=0.075 Kernel=7919776.87 Mflops=8031170.84
ko109 : Column=021760 Fraction=0.080 Kernel=7924902.14 Mflops=8026303.41
ko101 : Column=023040 Fraction=0.085 Kernel=7625053.36 Mflops=8027532.23
ko105 : Column=024320 Fraction=0.090 Kernel=8012396.62 Mflops=8231320.41
ko110 : Column=026880 Fraction=0.095 Kernel=7601629.67 Mflops=7964282.47
```

It seems like the results are close to the theoretical peak. Do these results look right? So Intel's mp_linpack treats each node (two 10-core E5 sockets) as one process (-n 1), and since I have 12 nodes, I only need to use -n 12.

On each compute node (dual-socket E5-2650 v3, 10 cores and 20 threads per socket), htop shows 40 CPUs. When I run mp_linpack, only CPUs 1 to 20 go to 100% usage; CPUs 21 to 40 stay at 0%. Is LINPACK using only half of the compute power? Does it only go by the physical core count? How do I run LINPACK so that it uses all 40 threads?

Thanks!

Simon!


Hi Simon,

Right. For better performance, MKL usually runs as many threads as there are physical cores, so with HT on, htop shows only half of the logical CPUs in use. This is expected. Which CPUs are busy (1-20 vs. 21-40) depends on how the OS binds threads to physical cores. You can try `KMP_AFFINITY=nowarnings,compact,granularity=fine` or `KMP_AFFINITY=nowarnings,compact,1,0,granularity=fine` to see if there is a difference.

Regarding HT, the MKL User's Guide has some explanation, for example:

Known Limitations of the Intel® Optimized LINPACK Benchmark

The following limitations are known for the Intel Optimized LINPACK Benchmark for Windows* OS:

- Intel Optimized LINPACK Benchmark supports only OpenMP threading

- Intel Optimized LINPACK Benchmark is threaded to effectively use multiple processors. So, in multi-processor systems, best performance will be obtained with the Intel® Hyper-Threading Technology turned off, which ensures that the operating system assigns threads to physical processors only.

Best Regards,

Ying


One more key point:

The recommended setting for multi-socket systems is one MPI process per socket, so it is worth trying 24 MPI processes here with the runmulti_intel64 script. Could you please try setting MPI_PROC_NUM=24 and MPI_PER_NODE=2 and see what performance you get?

Best Regards,

Ying
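The one-MPI-process-per-socket recommendation reduces to two multiplications. The sketch below assumes 12 nodes with 2 sockets each (`NODES` and `SOCKETS_PER_NODE` are hypothetical input variables you can override) and exports the two variables named in this thread. The resulting 24 ranks then need a P x Q grid with P x Q = 24, such as 4 x 6.

```bash
# Inputs (hypothetical names): node count and sockets per node.
NODES=${NODES:-12}
SOCKETS_PER_NODE=${SOCKETS_PER_NODE:-2}

# One MPI process per socket.
export MPI_PER_NODE=$SOCKETS_PER_NODE
export MPI_PROC_NUM=$(( NODES * SOCKETS_PER_NODE ))
echo "MPI_PROC_NUM=$MPI_PROC_NUM MPI_PER_NODE=$MPI_PER_NODE"   # MPI_PROC_NUM=24 MPI_PER_NODE=2
```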


According to Table 3 of "Intel Xeon Processor E5 v3 Product Family: Processor Specification Update" (document 330785, revision 009, August 2015), the Xeon E5-2650 v3 has a minimum "Intel AVX Core Frequency" of 2.0 GHz.

Intel appears to "tune" their products so that when running long-running (>10 seconds) LINPACK or DGEMM calculations, the chip will hit its maximum power budget when all of the cores are running at the "Intel AVX Core Frequency" or one step (0.1 GHz) higher. So this is the frequency that should be used to estimate the "peak" performance of the chip, and it works out to 10 cores/package * 16 FP Ops/core/cycle * 2.0 GHz = 320 GFLOPS per package. For 2-socket systems this is 640 GFLOPS per node, and for a 12-node system the aggregate peak 64-bit floating-point performance is 7680 GFLOPS. For a well-tuned LINPACK run, I typically see about 91% of this value, which would be about 6990 GFLOPS on this cluster.
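The peak estimate above is straightforward arithmetic. The sketch below reproduces it with awk; the 16 FLOPs/cycle and the 2.0 GHz and 2.6 GHz AVX frequencies are the figures quoted in this post, and `peak_gflops` is a hypothetical helper name.

```bash
# Peak GFLOPS = cores/socket * FLOPs/cycle * GHz * sockets/node * nodes
peak_gflops() {
  local cores=$1 flops_per_cycle=$2 ghz=$3 sockets=$4 nodes=$5
  awk -v c="$cores" -v f="$flops_per_cycle" -v g="$ghz" -v s="$sockets" -v n="$nodes" \
    'BEGIN { printf "%.0f\n", c * f * g * s * n }'
}

peak=$(peak_gflops 10 16 2.0 2 12)   # 7680 at the 2.0 GHz AVX frequency
echo "peak: $peak GFLOPS"
echo "91% of peak: $(awk -v p="$peak" 'BEGIN { printf "%.0f", 0.91 * p }') GFLOPS"   # 6989
echo "short-term peak at 2.6 GHz: $(peak_gflops 10 16 2.6 2 12) GFLOPS"             # 9984
```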

The numbers above don't include the final performance result and I have never looked in detail at what the intermediate values mean. Only the final value printed out by the benchmark is a valid measurement -- the rest of the numbers are based on incomplete computations and (most likely) on timing measurements that are not fully synchronized. The full benchmark should run for about 30 minutes at this problem size.
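The "about 30 minutes" figure follows from the operation count HPL uses when it reports Gflops, (2/3)N^3 + (3/2)N^2, divided by a sustained rate. In the sketch below, `hpl_seconds` is a hypothetical helper, the 6990 GFLOPS input is the 91%-of-peak estimate above, and the 700 GFLOPS single-node rate is only an illustrative guess.

```bash
# Rough HPL wall time in seconds: (2/3*N^3 + 3/2*N^2) / (GFLOPS * 1e9).
hpl_seconds() {
  local n=$1 gflops=$2
  awk -v n="$n" -v r="$gflops" 'BEGIN {
    ops = (2.0 / 3.0) * n * n * n + 1.5 * n * n   # floating-point operations
    printf "%.2f\n", ops / (r * 1e9)
  }'
}

hpl_seconds 270000 6990   # 1877.27 s, i.e. roughly 31 minutes on 12 nodes
hpl_seconds 10000 700     # 0.95 s on a single node
```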

The final number provided (7964.28247 GFLOPS) is still higher than the expected peak performance of this cluster. This could be due to startup effects -- the processors are typically configured to allow exceeding the power limit for up to 10 seconds. At the peak all-core AVX frequency of 2.6 GHz, this gives a peak performance of 9984 GFLOPS for a 12-node cluster. Any values higher than this are almost certainly due to measurement error -- the intermediate performance values printed out are probably based on node-local timings without proper synchronization. They are there to help you see that the job is making forward progress and to give an estimate of the performance, but they are not intended to be accurate performance measurements for the whole problem.


Thanks for all your advice! I wonder if it is just me having so much trouble running MP_Linpack.

For ease of troubleshooting, I assembled four single-socket Pentium G3240 systems (2 cores each, no HT).

I started from scratch: I installed the Intel MPI runtime, then copied mp_linpack to an NFS share that is automounted on all the nodes.

This time, these systems do not have hyperthreading.

When I run mp_linpack on a single system with `mpirun --perhost 1 -n 1 -host compute1 ./xhpl_intel64 -n 5000 -p 1 -q 1`, htop shows both cores at 100%.

When I run mp_linpack on 2 systems with `mpirun -print-rank-map --perhost 1 -n 4 -hosts compute1,compute2 ./xhpl_intel64 -n 5000 -p 2 -q 2`, htop shows CPU 0 on both systems at 100%, BUT CPU 1 on both systems at 0%.

The rank map shows (compute1:0,2) (compute2:1,3), which means it is running 2 ranks on each 2-core system... but somehow those 2 ranks run on the same core instead of on the two cores.
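The rank map is consistent with Intel MPI's `-perhost` option placing that many consecutive ranks on each host in round-robin order. The model below (a hypothetical `rank_map` helper, not Intel MPI code) shows why `--perhost 1 -n 4` over two hosts puts ranks 0 and 2 on compute1 and ranks 1 and 3 on compute2, i.e. two MPI processes on each 2-core node rather than one process per node.

```bash
# Model of round-robin placement: ranks are handed out in groups of PERHOST,
# cycling through the host list.
rank_map() {
  local nranks=$1 perhost=$2; shift 2
  local hosts=("$@") n=$# r idx
  for (( r = 0; r < nranks; r++ )); do
    idx=$(( (r / perhost) % n ))
    echo "rank $r -> ${hosts[idx]}"
  done
}

rank_map 4 1 compute1 compute2
# rank 0 -> compute1
# rank 1 -> compute2
# rank 2 -> compute1
# rank 3 -> compute2
```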

I tried `export OMP_NUM_THREADS=2`... and tried KMP_AFFINITY... but it doesn't change anything...

I tried `mpirun --perhost 2 -n 2 -hosts compute1,compute2 hostname`:

```
compute1
compute1
compute2
compute2
```

and the results are OK...

Do you all have this much trouble running hplinpack?

I am still so stumped by this...

Please help if you can!

Thanks!

Simon.


Hi Simon,

For multi-node runs where each node has multiple sockets (your original system), we recommend using the runme_intel64 script inside the /mp_linpack/bin_intel/intel64 folder. This script sets the environment variables required for running multiple MPI processes per node. Please change the following variables to match your configuration. For example, if you have two nodes, each a two-socket system, and you want to run 2 MPI processes per node, set the following variables inside runme_intel64:

```
export MPI_PROC_NUM=4
export MPI_PER_NODE=2
```

Then, just execute the script from the command line: ./runme_intel64. This script will call mpirun inside (instead of you calling it directly). You may want to modify the mpirun line inside the script to pass the compute node arguments.

And you are right: MP LINPACK does not react to OMP_NUM_THREADS/KMP_AFFINITY settings. It should choose the optimal number of threads and set thread affinity for you. However, as I mentioned above, we need to use the runme_intel64 script for this if we have more than 1 MPI process per node.

Please let us know if this helps!

Thank you,

Efe


Hello Efe!

First, I did this so that mpirun would work:

```
export PATH=$PATH:/opt/intel/compilers_and_libraries_2016.1.150/linux/mpi/intel64/bin
```

Second, I edited runme_intel64:

```
export MPI_PROC_NUM=8
export MPI_PER_NODE=2
export NUMMIC=0
mpirun -f /root/hostfile -perhost ${MPI_PER_NODE} -np ${MPI_PROC_NUM} ./runme_intel64_prv "$@" | tee -a $OUT
```

Third, I ran ./runme_intel64

```
This is a SAMPLE run script. Change it to reflect the correct number
of CPUs/threads, number of nodes, MPI processes per node, etc..
This run was done on: Fri Jan 15 09:14:18 CST 2016
MPI_RANK_FOR_NODE=0 NODE=0, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=1 NODE=1, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=0 NODE=0, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=1 NODE=1, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=0 NODE=0, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=1 NODE=1, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=0 NODE=0, CORE=, MIC=, SHARE=
MPI_RANK_FOR_NODE=1 NODE=1, CORE=, MIC=, SHARE=
```

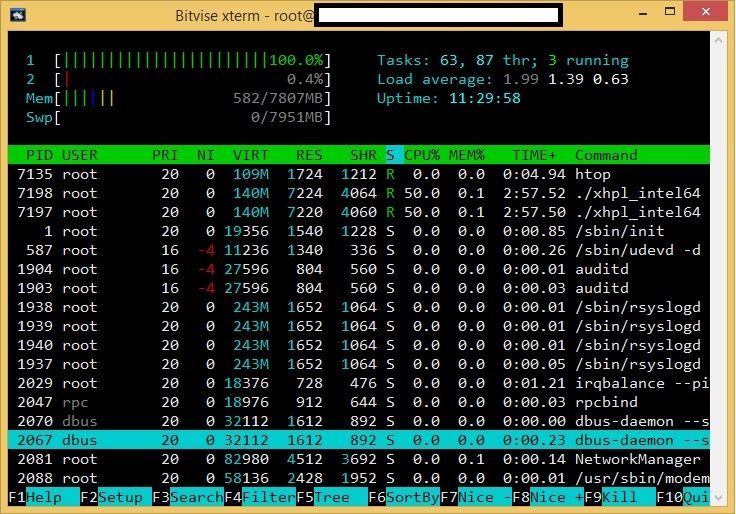

On each of the 4 compute nodes, the htop looks exactly like this:

Note that core 2 is not doing anything while the 2 mpi threads are running on core 1.

It is the same phenomenon on both my original cluster and this new test cluster...

Is this because I am running Scientific Linux release 6.7 (Carbon), `Linux 2.6.32-573.12.1.el6.x86_64 #1 SMP Tue Dec 15 08:24:23 CST 2015 x86_64 x86_64 x86_64 GNU/Linux`? When I installed l_mpi-rt_p_5.1.2.150, the installer said it was an unsupported version.

Thanks!

Simon.


Hi Simon,

Are your compute nodes dual-socket nodes? You can check this with `numactl --hardware`: if numactl shows only a single "node" (node means socket here), HPL treats the machine as a single-socket system. Regardless, the behavior above, where one core sits idle, is not expected. I do not see it on our lab systems.
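To script this check, the NUMA node count can be read from the first line of `numactl --hardware`, which has the form `available: 2 nodes (0-1)`. `numa_nodes` is a hypothetical helper; it reads the text on stdin so it can be tried without numactl installed.

```bash
# Print the NUMA node count from `numactl --hardware` output on stdin.
numa_nodes() {
  awk '/^available:/ { print $2; exit }'
}

# Real usage on a compute node:
#   numactl --hardware | numa_nodes
# Demo with sample text:
printf 'available: 2 nodes (0-1)\nnode 0 cpus: 0 1 2 3\n' | numa_nodes   # 2
```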

If numactl is showing you a single socket, could you try with the following settings for your Pentium cluster?

```
export MPI_PROC_NUM=4
export MPI_PER_NODE=1
```

Here we have 1 MPI process per node and 4 MPI processes in total, so P=Q=2 can be used for this run. I wonder whether you will still see only 1 core being used with these settings. Unfortunately, we do not have this system in our lab, so we cannot try HPL on it. It may be worthwhile to do the same experiments on your original cluster.

I think you may want to post your MPI/OS interaction question on the Intel MPI forum.

Best,

Efe


Hello all! Thanks for everyone's great help!

I finally got mp_linpack running correctly. It looks like I was confused about the parameters in the runme_intel64 file.

- MPI_PROC_NUM: the number of actual physical servers, which equals P x Q.

- MPI_PER_NODE: this should be 1, whether you have a single-socket or dual-socket system. If you set it to 2 on a dual-socket system, htop shows memory usage of 40% when 80% is actually in use, and there are 2 controlling threads instead of one.

For the 4 node Pentium, I used MPI_PROC_NUM=4 (P=2 Q=2, 4 physical servers) and MPI_PER_NODE=1 and it worked!

Before, I was setting MPI_PER_NODE=2, thinking of the 2 cores in each Pentium... But apparently, when you run the runme_intel64 script, it knows to spawn 2 threads even with MPI_PER_NODE=1.

I tried this on my other cluster, which consists of 2 dual-socket E5-26xx v3 systems with 16 cores, using MPI_PROC_NUM=2 (2 physical servers) and MPI_PER_NODE=1, and runme_intel64 spawned 32 threads on each physical server, all running at 100% load.

Thanks for all your help!

Cheers!

Simon.


Hi Simon,

Glad to hear it, and thanks for the summary.

Thanks

Ying


Dear all,

I have a problem with the results of MKL MP_Linpack. My system has 24 compute nodes, each with Intel(R) Xeon(R) CPU E5-2680 v3 @ 2.50GHz processors, a Xeon Phi Q7200, and 256GB of RAM. When I run ./runme_intel64 on a single node, the performance is good, ~700-900 GFlops (Xeon CPU only).

But when I run HPL on 4, 8 or more nodes, the result is very bad, and sometimes it does not return a result at all, failing with the error: MPI TERMINATED,... After that, when I run the test (runme_intel64) on each node again, the performance is very low:

- ~11,243 GFlops
- ~10,845 GFlops
- ....

I don't know why. My guess is that the cause is the cluster's power supply (not enough for the whole system) together with the HPE BIOS being configured in Balanced Mode for the cluster (which automatically switches to a lower-power mode when the system cannot get enough power). But even when I run on only a few nodes with the power setting at maximum, the problem is still not solved.

Please help me with this problem. Thank you all!
