- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

So I recently realized that the setup phase of PARDISO is not thread safe when using the METIS reordering... This is incredibly annoying and not documented anywhere as far as I can tell. I'm going to guess that it has something to do with the random number generator in METIS, which doesn't seem to be thread safe (http://glaros.dtc.umn.edu/gkhome/node/904)...

Do you have any plans to fix this?

Regards

Aron

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

Thanks for reaching out to us.

We are working on this issue. we will get back to you soon.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

Thanks for your patience.

Here are few things need to be considered while calling pardiso from parallel region

1)Calling PARDISO from parallel region with multiple handles "pt". Each thread has its own handle "pt" and its own single right hand side "b".

This is the correct way.

2)Calling PARDISO from parallel region, supplying each thread with one right hand side and the same handle.

This is incorrect usage. each thread each has its own handle "pt" and its own single right

3)Calling PARDISO from sequential code with multiple right hand sides. This uses internal PARDISO parallelization.

This is a correct usage.

4)Calling PARDISO from parallel region with the same multiple right hand sides.

This is incorrect usage. The same output can not shared for different threads, which is a data race in the use of output.

Here is the thread link with similar discussion which might hlep you

https://community.intel.com/t5/Intel-oneAPI-Math-Kernel-Library/Pardiso-not-thread-safe/td-p/793007

Please do let us know if the above information helps in answering the query.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

As we haven't heard back from you, could you please provide us with an update regarding this issue? Please do let us know if you have any queries here in this case.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Vidya,

Your answer doesn't address the issue. I am calling PARDISO with each thread having its own handle - it is still not thread safe (results are different from run-to-run). This is only an issue when using METIS.

There also doesn't seem to be any way to copy a handle after the symbolic factorization is done - this is also annoying since each thread needs to do the exact same symbolic factorization when solving multiple systems with the same sparsity structure.

Regards

Aron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

Could you please provide us with a sample reproducer (& steps to reproduce the issue if any) and the results that you are getting along with the expected results?

Also please do let us know the MKL version being used and OS details so that we can test it from our end

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

As we haven't heard back from you, could you please provide us with an update regarding the issue?

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Vidyalatha,

The code below demonstrates the issue (I could find any sane way of pasting code on here, and the forum doesn't allow me to attach files):

---

#include <mkl.h>

#include <tbb/tbb.h>

#include <vector>

#include <assert.h>

int main() {

static const int n = 64;

static const int N = n * n;

static const int nrep = 1000;

std::vector<int> ia;

std::vector<int> ja;

std::vector<double> val;

//simple example nontrivial matrix

ia.push_back(0);

for (int i = 0; i < n; i++) {

for (int j = 0; j < n; j++) {

int id = j + i * n;

#if 0

if (i > 0) {

ja.push_back(id - n);

val.push_back(-1);

}

if (j > 0) {

ja.push_back(id - 1);

val.push_back(-1);

}

#endif

ja.push_back(id);

val.push_back(4);

#if 1

if (j < n - 1) {

ja.push_back(id + 1);

val.push_back(-1);

}

if (i < n - 1) {

ja.push_back(id + n);

val.push_back(-1);

}

#endif

ia.push_back(int(ja.size()));

}

}

#if 0

for (int i = 0; i < N; i++) {

for (int j = ia[i]; j < ia[i + 1]; j++) {

int nb = ja[j];

double v = val[j];

bool t = false;

for (int k = ia[nb]; k < ia[nb + 1]; k++) {

if (ja[k] == i && v == val[k]) {

t = true;

break;

}

}

if (!t) {

fprintf(stderr, "matrix not symmetric\n");

abort();

}

}

}

#endif

MKL_INT mtype = 6; /* Real unsymmetric matrix */

/* RHS and solution vectors. */

double b[5], x[5];

MKL_INT nrhs = 1; /* Number of right hand sides. */

/* Internal solver memory pointer pt, */

/* 32-bit: int pt[64]; 64-bit: long int pt[64] */

/* or void *pt[64] should be OK on both architectures */

/* Pardiso control parameters. */

MKL_INT iparm[64];

MKL_INT maxfct, mnum, phase, error, msglvl;

/* Auxiliary variables. */

MKL_INT i;

double ddum; /* Double dummy */

MKL_INT idum; /* Integer dummy. */

/* -------------------------------------------------------------------- */

/* .. Setup Pardiso control parameters. */

/* -------------------------------------------------------------------- */

for (i = 0; i < 64; i++) {

iparm[i] = 0;

}

iparm[0] = 1; /* No solver default */

iparm[1] = 2; /* Fill-in reordering from METIS */

/* Numbers of processors, value of OMP_NUM_THREADS */

iparm[2] = 1;

iparm[3] = 0; /* No iterative-direct algorithm */

iparm[4] = 2; //return computed permutation

iparm[5] = 0; /* Write solution into x */

iparm[6] = 0; /* Not in use */

iparm[7] = 2; /* Max numbers of iterative refinement steps */

iparm[8] = 0; /* Not in use */

iparm[9] = 13; /* Perturb the pivot elements with 1E-13 */

iparm[10] = 1; /* Use nonsymmetric permutation and scaling MPS */

iparm[11] = 0; /* Not in use */

iparm[12] = 0; /* Not in use */

iparm[13] = 0; /* Output: Number of perturbed pivots */

iparm[14] = 0; /* Not in use */

iparm[15] = 0; /* Not in use */

iparm[16] = 0; /* Not in use */

iparm[17] = -1; /* Output: Number of nonzeros in the factor LU */

iparm[18] = -1; /* Output: Mflops for LU factorization */

iparm[19] = 0; /* Output: Numbers of CG Iterations */

iparm[26] = 1;

iparm[30] = 0;

iparm[34] = 1; //zero based indexing

iparm[35] = 0;

maxfct = 1; /* Maximum number of numerical factorizations. */

mnum = 1; /* Which factorization to use. */

msglvl = 0; /* Print statistical information in file */

error = 0; /* Initialize error flag */

/* -------------------------------------------------------------------- */

/* .. Initialize the internal solver memory pointer. This is only */

/* necessary for the FIRST call of the PARDISO solver. */

/* -------------------------------------------------------------------- */

phase = 11;

//compute reference permutation

std::vector<int> ref_perm(N);

{

void* pt[64];

for (i = 0; i < 64; i++) {

pt[i] = 0;

}

PARDISO(pt, &maxfct, &mnum, &mtype, &phase,

&N, val.data(), ia.data(), ja.data(), ref_perm.data(), &nrhs,

iparm, &msglvl, &ddum, &ddum, &error);

if (error != 0) {

printf("\nERROR during symbolic factorization: %d", error);

exit(1);

}

phase = -1; /* Release internal memory. */

PARDISO(pt, &maxfct, &mnum, &mtype, &phase,

&n, &ddum, ia.data(), ja.data(), ref_perm.data(), &nrhs,

iparm, &msglvl, &ddum, &ddum, &error);

}

//perform exact same symbolic factorization in multiple threads; check that results are the same

tbb::task_group tg;

//static std::mutex mtx;

for (int irep = 0; irep < nrep; irep++) {

tg.run([maxfct, mnum, mtype, phase, nrhs, &iparm, msglvl, error, &ia, &ja, &val, &ref_perm]() {

//std::lock_guard<std::mutex> lock(mtx);

void* pt[64];

for (int i = 0; i < 64; i++) {

pt[i] = 0;

}

MKL_INT iparm_[64]; memcpy(iparm_, iparm, 64 * sizeof(MKL_INT));

std::vector<int> perm(N);

double ddum;

int idum;

int error;

std::vector<int> ia_ = ia;

std::vector<int> ja_ = ja;

srand(333);

/* -------------------------------------------------------------------- */

/* .. Reordering and Symbolic Factorization. This step also allocates */

/* all memory that is necessary for the factorization. */

/* -------------------------------------------------------------------- */

int phase = 11;

PARDISO(pt, &maxfct, &mnum, &mtype, &phase,

&N, val.data(), ia_.data(), ja_.data(), perm.data(), &nrhs,

iparm_, &msglvl, &ddum, &ddum, &error);

if (error != 0) {

printf("\nERROR during symbolic factorization: %d", error);

exit(1);

}

phase = -1; /* Release internal memory. */

PARDISO(pt, &maxfct, &mnum, &mtype, &phase,

&n, &ddum, ia.data(), ja.data(), perm.data(), &nrhs,

iparm, &msglvl, &ddum, &ddum, &error);

//printf("perm[0]: %d\n", perm[0]);

for (int i = 0; i < N; i++) {

if (perm[i] != ref_perm[i]) {

fprintf(stderr, "PARDISO is not thread safe!!!\n");

abort();

}

}

//printf("\nReordering completed ... ");

//printf("\nNumber of nonzeros in factors = %d", iparm[17]);

//printf("\nNumber of factorization MFLOPS = %d", iparm[18]);

});

}

tg.wait();

return 0;

}

----

Build line: icpc -O3 pardisotest.cpp -ltbb -lmkl_intel_lp64 -lmkl_sequential -lmkl_core

os: Ubuntu 20.04.4 LTS

MKL version: 2022.2.0.

lscpu output (this is an azure VM):

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

Address sizes: 46 bits physical, 57 bits virtual

CPU(s): 64

On-line CPU(s) list: 0-63

Thread(s) per core: 2

Core(s) per socket: 16

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 106

Model name: Intel(R) Xeon(R) Platinum 8370C CPU @ 2.80GHz

Stepping: 6

CPU MHz: 2800.000

CPU max MHz: 2800.0000

CPU min MHz: 800.0000

BogoMIPS: 5586.87

Virtualization: VT-x

Hypervisor vendor: Microsoft

Virtualization type: full

L1d cache: 1.5 MiB

L1i cache: 1 MiB

L2 cache: 40 MiB

L3 cache: 96 MiB

NUMA node0 CPU(s): 0-31

NUMA node1 CPU(s): 32-63

Vulnerability Itlb multihit: Not affected

Vulnerability L1tf: Not affected

Vulnerability Mds: Not affected

Vulnerability Meltdown: Not affected

Vulnerability Mmio stale data: Vulnerable: Clear CPU buffers attempted, no microcode; SMT Host state unknown

Vulnerability Retbleed: Vulnerable

Vulnerability Spec store bypass: Vulnerable

Vulnerability Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Vulnerability Spectre v2: Mitigation; Retpolines, STIBP disabled, RSB filling, PBRSB-eIBRS Not affected

Vulnerability Srbds: Not affected

Vulnerability Tsx async abort: Not affected

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush mmx fxsr sse sse2 ss ht sysc

all nx pdpe1gb rdtscp lm constant_tsc rep_good nopl xtopology tsc_reliable nonstop_tsc cpuid aperfmperf pni

pclmulqdq vmx ssse3 fma cx16 pcid sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rd

rand hypervisor lahf_lm abm 3dnowprefetch invpcid_single tpr_shadow vnmi ept vpid ept_ad fsgsbase tsc_adjust

bmi1 hle avx2 smep bmi2 erms invpcid rtm avx512f avx512dq rdseed adx smap avx512ifma clflushopt clwb avx512

cd sha_ni avx512bw avx512vl xsaveopt xsavec xgetbv1 xsaves avx512vbmi umip avx512_vbmi2 gfni vaes vpclmulqdq

avx512_vnni avx512_bitalg avx512_vpopcntdq la57 rdpid fsrm arch_capabilities

Regards

Aron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

Could you please provide us with the expected results and the results that you are getting?

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi

The program makes identical calls to PARDISO and checks that the results are identical. When the results are not identical it aborts with the message "PARDISO is not thread safe!!!"

Regards

Aron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

Could you please confirm if the data which you are working with is sparse type?

Based on our observation, the test data is not sparse. You can also try the Pardiso examples that come with MKL installation.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

As we haven't heard back from you, could you please provide us with an update regarding the issue?

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Vidya,

What do you mean? It's clearly sparse matrix. And even if I did use PARDISO for a dense matrix I think it's still reasonable to expect that it doesn't give random results when called from multiple threads simultaneously.

Could you please ask someone who actually develops PARDISO to have a look at this?

Regards

Aron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Vidya, As we haven't heard back from you, could you please provide us with an update regarding the issue?

Have you been able to reproduce it using the OP's reproducer?

Regards

Andy

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Apologies for the delay.

Yes, I could reproduce the issue and already forwarded the issue to the concerned development team. We are working on your issue internally and will get back to you as soon as I get an update.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Great to hear! Looking forward to you fixing this issue.

Regards

Aron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Aron,

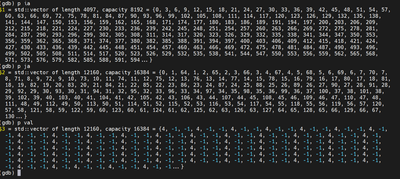

Could you please let us know what the capacity value from the screenshot below indicates?

(This is the output that we got when debugging the code using gdb).

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Uhhh... The amount of memory allocated by the std::vector I guess. What has that got to do with anything? And why are you asking me about STL internals? Again, the bug is in PARDISO, not in my code (or in STL (!)). You really need to pass this on to the MKL development team.

Regards

Aron

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Dear arona,

Thank you for your patient and thank you again for posting your concern in the Community. We are delighted to let you know that the fix will be available in the next release version.

Best Regards,

Ruqiu

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page