- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

I'm a rookie for intel MKL lib. I just down the lib and tried to use a generalized eigendecomposition function in MKL. But I wonder why such a function is so slow in my cases. I test a 7000*7000 sparse matrix. But it consumes about 120 s. Here is the c++ code. I am only using Eigen lib 3.37 and intel MKL for computing:

#include <omp.h>

#include <set>

#include <map>

#include <queue>

#include <fstream>

#include <iostream>

#include <iomanip>

#include <sstream>

#include <thread>

#include <Eigen/Core>

#include <Eigen/Dense>

#include <Eigen/Sparse>

#include <Eigen/SparseCore>

#include <Eigen/Eigenvalues>

#include <unsupported/Eigen/KroneckerProduct>

#include <unsupported/Eigen/ArpackSupport>

#include <unsupported/Eigen/CXX11/Tensor>

#include "unsupported/Eigen/ArpackSupport"

#include <unsupported/Eigen/SparseExtra>

#include "mkl.h"

using vec2i = Eigen::Vector2i;

using vec2 = Eigen::Vector2d;

using vec3 = Eigen::Vector3d;

using vec4 = Eigen::Vector4d;

using vec3f = Eigen::Vector3f;

using mat3 = Eigen::Matrix3d;

using DMatrix = Eigen::MatrixXd;

using IMatrix = Eigen::MatrixXi;

using DSparse = Eigen::SparseMatrix<double>;

using DVector = Eigen::VectorXd;

using IVector = Eigen::VectorXi;

static void EigenSparseToCuSparseTranspose(

//const Eigen::SparseMatrix<double, Eigen::RowMajor> &mat, int *row, int *col, double *val)

const DSparse &mat, int *row, int *col, double *val)

{

const int num_non0 = mat.nonZeros();

const int num_outer = mat.cols() + 1;

memcpy(row, mat.outerIndexPtr(), sizeof(int) * num_outer);

memcpy(col, mat.innerIndexPtr(), sizeof(int) * num_non0);

memcpy(val, mat.valuePtr(), sizeof(double) * num_non0);

for (int el = 0; el < num_outer; el++) {

row[el] = row[el] + 1;

}

for (int el = 0; el < num_non0; el++) {

col[el] = col[el] + 1;

}

}

int main()

{

// Define the sparse matrix object

Eigen::SparseMatrix<double> Lap;

Eigen::SparseMatrix<double> Vol;

// Read the matrix from the text file

Eigen::loadMarket(Lap,"Lap.txt");

Eigen::loadMarket(Vol, "Vol.txt");

// Print the matrix to the console

MKL_INT N = Lap.rows();

int num_non0 = Lap.nonZeros();

int num_outer = Lap.cols() + 1;

MKL_INT* ia = new MKL_INT[num_outer];

MKL_INT* ja = new MKL_INT[num_non0];

double* Vala = new double[num_non0];

EigenSparseToCuSparseTranspose(Lap, ia, ja, Vala);

int num_non0b = Vol.nonZeros();

int num_outerb = Vol.cols() + 1;

MKL_INT* ib = new MKL_INT[num_outerb];

MKL_INT* jb = new MKL_INT[num_non0b];

double* Valb = new double[num_non0b];

EigenSparseToCuSparseTranspose(Vol, ib, jb, Valb);

int nr = 3;

////exact for a 3*3 matrix, not my matrix

double Eig[3] = { 1.0, 9.0, 27.0 }; //

/* mkl_sparse_d_gv input parameters */

char which = 'S'; /* Which eigenvalues to calculate. ('L' - largest (algebraic) eigenvalues, 'S' - smallest (algebraic) eigenvalues) */

MKL_INT pm[128]; /* This array is used to pass various parameters to Extended Eigensolver Extensions routines. */

MKL_INT k0 = nr; /* Desired number of max/min eigenvalues */

/* mkl_sparse_d_gv output parameters */

MKL_INT k; /* Number of eigenvalues found (might be less than k0). */

double* E = new double[k0];

//double E[3]; /* Eigenvalues */

double* X = new double[k0*N];

//double X[3 * 3]; /* Eigenvectors */

double* res = new double[k0];

//double res[k0]; /* Residual */

/* Local variables */

MKL_INT info; /* Errors */

MKL_INT compute_vectors = 0;/* Flag to compute eigenvectors */

MKL_INT tol = 7; /* Tolerance */

MKL_INT i;

//Eigen::PardisoLDLT <Eigen::SparseMatrix<double> slo;

/* Sparse BLAS IE variables */

sparse_matrix_t A = NULL, B = NULL; /* Handle containing sparse matrix in internal data structure */

struct matrix_descr descrA, descrB; /* Structure specifying sparse matrix properties */

/////

//SPARSE_MATRIX_TYPE_GENERAL = 20, /* General case */

// SPARSE_MATRIX_TYPE_SYMMETRIC = 21, /* Triangular part of */

// SPARSE_MATRIX_TYPE_HERMITIAN = 22, /* the matrix is to be processed */

// SPARSE_MATRIX_TYPE_TRIANGULAR = 23,

// SPARSE_MATRIX_TYPE_DIAGONAL = 24, /* diagonal matrix; only diagonal elements will be processed */

// SPARSE_MATRIX_TYPE_BLOCK_TRIANGULAR = 25,

// SPARSE_MATRIX_TYPE_BLOCK_DIAGONAL = 26 /* block-diagonal matrix; only diagonal blocks will be processed */

////////////

/* Create handle for matrix A stored in CSR format */

//descrA.type = SPARSE_MATRIX_TYPE_GENERAL; /* Full matrix is stored */

descrA.type = SPARSE_MATRIX_TYPE_SYMMETRIC;

descrA.mode = SPARSE_FILL_MODE_UPPER;

descrA.diag = SPARSE_DIAG_NON_UNIT;

//descrA.type = SPARSE_MATRIX_TYPE_DIAGONAL;

// struct matrix_descr {

// sparse_matrix_type_t type; /* matrix type: general, diagonal or triangular / symmetric / hermitian */

// sparse_fill_mode_t mode; /* upper or lower triangular part of the matrix ( for triangular / symmetric / hermitian case) */

// sparse_diag_type_t diag; /* unit or non-unit diagonal ( for triangular / symmetric / hermitian case) */

//};

//mkl_sparse_d_create_csr(&A, SPARSE_INDEX_BASE_ONE, N, N, ia, ia + 1, ja, EigenSPTest.valuePtr());

sparse_status_t Astate = mkl_sparse_d_create_csr(&A, SPARSE_INDEX_BASE_ONE, N, N, ia, ia + 1, ja, Vala);

//mkl_ccsrcsc();

std::cout << "Astate:" << Astate << std::endl;

//mkl_sparse_d_create_csr(&A, SPARSE_INDEX_BASE_ONE, N, N, ia, ia + 1, ja, a);

//descrB.type = SPARSE_MATRIX_TYPE_GENERAL; /* Full matrix is stored */

descrB.type = SPARSE_MATRIX_TYPE_SYMMETRIC;

descrB.mode = SPARSE_FILL_MODE_UPPER;

descrB.diag = SPARSE_DIAG_NON_UNIT;

//descrB.type = SPARSE_MATRIX_TYPE_DIAGONAL;

/*mkl_sparse_d_create_csr(&B, SPARSE_INDEX_BASE_ONE, N, N, ib, ib + 1, jb, EigenSPTestB.valuePtr());*/

sparse_status_t Bstate = mkl_sparse_d_create_csr(&B, SPARSE_INDEX_BASE_ONE, N, N, ib, ib + 1, jb, Valb);

std::cout << "Bstate:" << Bstate << std::endl;

/////////////////

//SPARSE_STATUS_SUCCESS = 0, /* the operation was successful */

// SPARSE_STATUS_NOT_INITIALIZED = 1, /* empty handle or matrix arrays */

// SPARSE_STATUS_ALLOC_FAILED = 2, /* internal error: memory allocation failed */

// SPARSE_STATUS_INVALID_VALUE = 3, /* invalid input value */

// SPARSE_STATUS_EXECUTION_FAILED = 4, /* e.g. 0-diagonal element for triangular solver, etc. */

// SPARSE_STATUS_INTERNAL_ERROR = 5, /* internal error */

// SPARSE_STATUS_NOT_SUPPORTED = 6 /* e.g. operation for double precision doesn't support other types */

////////////////////////////////////

/* Step 2. Call mkl_sparse_ee_init to define default input values */

mkl_sparse_ee_init(pm);

//dfeast_scsrgv();

pm[1] = 6; /* Set tolerance */

pm[2] = 0;

pm[6] = 1;

/* Step 3. Solve the standard Ax = ex eigenvalue problem. */

for (i = 0; i < 10; i++)

{

std::cout << "pm_" << i << ":" << pm[i] << std::endl;

}

info = mkl_sparse_d_gv(&which, pm, A, descrA, B, descrB, k0, &k, E, X, res);

printf("mkl_sparse_d_gv output info after \n");

for (i = 0; i < 10; i++)

{

std::cout << "pm_" << i << ":" << pm[i] << std::endl;

}

//info = mkl_sparse_d_ev(&which, pm, A, descrA, k0, &k, E, X, res);

printf("mkl_sparse_d_gv output info %d \n", info);

if (info != 0)

{

printf("Routine mkl_sparse_d_gv returns code of ERROR: %i", (int)info);

}

printf("*************************************************\n");

printf("************** REPORT ***************************\n");

printf("*************************************************\n");

printf("#mode found/subspace %d %d \n", k, k0);

printf("Index/Exact Eigenvalues/Estimated Eigenvalues/Residuals\n");

for (i = 0; i < k; i++)

{

printf(" %d %.15e %.15e %.15e \n", i, Eig[i], E[i], res[i]);

}

mkl_sparse_destroy(A);

mkl_sparse_destroy(B);

std::cout << "End!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!:" << std::endl;

getchar();

}

Here, I also attach the txt file for the matrix. Currently, I use C++ 17 in visual studio 2017 and MKLversion 2022.2.1. I'll appreciate it if anyone could help me. Thanks.

I don't know why I cannot upload my txt file. It keeps saying:The attachment's vol.txt content type (text/plain) does not match its file extension and has been removed.

I hereby attach the link for the matrix:https://entuedu-my.sharepoint.com/:t:/g/personal/haoxiang002_e_ntu_edu_sg/ES7ixewE5CBMuTzzA4IBHKwBctW6tMHhpm3L3sHZ1r4BFw?e=M1GPnB

Link Copied

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

And here is the link for Vol Matrix:https://entuedu-my.sharepoint.com/:t:/g/personal/haoxiang002_e_ntu_edu_sg/EdrTMYb_iCBNoQDJLOnZaO4Br7PiWvGLSTqsAYHu3WPXBA?e=8mCb3C

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Haoxiang,

Thanks for reaching out to us and providing us with the details.

>>But I wonder why such a function is so slow in my cases

Could you please let us know if you have set /Qmkl option under configuration properties > Intel oneAPI libraries > Use Intel oneMKL to parallel?

>>But it consumes about 120 s

Please do let us know how you are measuring the time taken by oneMKL routine and also the time taken by the Eigen library.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Vidya:

Yes, I set it as parallel. Here is the figure for the properties page. I'm not sure if I set something wrong with MKL lib.

As for the time consumption. I use a C++ Lib from others. Just set the counter to start before mkl_sparse_d_gv and stop after it. E.g.:

PerformanceCounter substep2;

substep2.StartCounter();

info = mkl_sparse_d_gv(&which, pm, A, descrA, B, descrB, k0, &k, E, X, res);

substep2.StopCounter();

The Eigen lib here is just to read the sparse matrix from the txt file. And I convert it to CSR format. I believe it won't take much time to do these. I separate the code into four parts and counted the time for each part. The part2 consumes the most time, which is mkl_sparse_d_gv. Here I also attach PerformanceCounter header file. The time consumption for each part gives me:

substep0:0.0003518

substep1:0.0225588

substep2:111.683

substep3:0.0038434

overallT:111.71

I also test the MKL lib in Sequential mode. It takes 103.175s for Eigen decomposition. That's weird. Does it mean the multi-threading does not work for my cases?

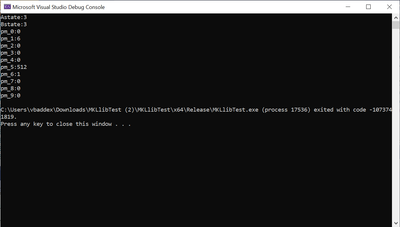

For convenience, I also attach the source code with the Counter. And this is the output in my console in Sequential mode:

Astate:0

Bstate:0

pm_0:0

pm_1:6

pm_2:0

pm_3:0

pm_4:0

pm_5:512

pm_6:1

pm_7:0

pm_8:0

pm_9:0

mkl_sparse_d_gv output info after

pm_0:0

pm_1:6

pm_2:0

pm_3:0

pm_4:10000

pm_5:512

pm_6:1

pm_7:0

pm_8:0

pm_9:-1

mkl_sparse_d_gv output info 0

*************************************************

************** REPORT ***************************

*************************************************

#mode found/subspace 3 3

Index/Exact Eigenvalues/Estimated Eigenvalues/Residuals

0 1.000000000000000e+00 5.410415611074628e-01 3.450940775367071e-01

1 9.000000000000000e+00 3.719215347399311e+07 2.728515969035386e-07

2 2.700000000000000e+01 4.812127510489339e+07 2.299420475540038e-05

substep0:0.0003271

substep1:0.021179

substep2:103.146

substep3:0.0074385

overallT:103.175

End!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Vidya:

Any further suggestions?

Regards.

haoxiang

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Yes, I set it as parallel. Here is the figure for the properties page. I'm not sure if I set something wrong with MKL lib.

As for the time consumption. I use a C++ Lib from others. Just set the counter to start before mkl_sparse_d_gv and stop after it. E.g.:

PerformanceCounter substep2;

substep2.StartCounter();

info = mkl_sparse_d_gv(&which, pm, A, descrA, B, descrB, k0, &k, E, X, res);

substep2.StopCounter();

The Eigen lib here is just to read the sparse matrix from the txt file. And I convert it to CSR format. I believe it won't take much time to do these. I separate the code into four parts and counted the time for each part. The part2 consumes the most time, which is mkl_sparse_d_gv. Here I also attach PerformanceCounter header file. The time consumption for each part give me:

substep0:0.0003518

substep1:0.0225588

substep2:111.683

substep3:0.0038434

overallT:111.71

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

I test the MKL lib in Sequential mode. It takes 103.175s for Eigen decomposition. That's weird. Does it mean the multi-threading does not work for my cases?

For convenience, I also attach the source code with the Counter. And this is the output in my console in Sequential mode:

Astate:0

Bstate:0

pm_0:0

pm_1:6

pm_2:0

pm_3:0

pm_4:0

pm_5:512

pm_6:1

pm_7:0

pm_8:0

pm_9:0

mkl_sparse_d_gv output info after

pm_0:0

pm_1:6

pm_2:0

pm_3:0

pm_4:10000

pm_5:512

pm_6:1

pm_7:0

pm_8:0

pm_9:-1

mkl_sparse_d_gv output info 0

*************************************************

************** REPORT ***************************

*************************************************

#mode found/subspace 3 3

Index/Exact Eigenvalues/Estimated Eigenvalues/Residuals

0 1.000000000000000e+00 5.410415611074628e-01 3.450940775367071e-01

1 9.000000000000000e+00 3.719215347399311e+07 2.728515969035386e-07

2 2.700000000000000e+01 4.812127510489339e+07 2.299420475540038e-05

substep0:0.0003271

substep1:0.021179

substep2:103.146

substep3:0.0074385

overallT:103.175

End!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!!:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

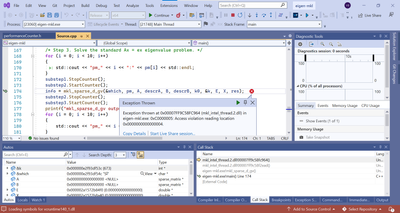

HI Haoxiang,

I tried running the code that you have provided but I'm getting an exception while running it in VS 2022.

Could you please provide us with your project file or else let me know if I miss something here?

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Oh, sorry Vidya. Since you didn't reply for about one week, I thought you were on vacation these days. I didn't get this exception. Maybe you can run in debug mode and tell me where the error happens (because its seems like the code problem).

Previously I tried many different options and still could not solve the problem. So I switch to Matlab c++ engine. It can solve the 5e5*5e5 sparse matrix within 100s. For my current project file, I'm not sure whether some options are different from the beginning. But since I still cannot solve it with mkl lib, for convenience, I directly zip my sln file. Attached is the file.

Regards

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Haoxiang,

As we haven't heard back from you, could you please provide us with an update regarding the issue?

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Haoxiang,

I tried running the code and here is the output I'm getting. Please see the below screenshot.

I didn't get an exception this time but I think it doesn't execute either from line 174.

Please let me know if I miss anything here.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Vidya.

Are u sure you successfully read these two matrices (Mat Lap and Vol) at the beginning?

Astate and Bstate should be 0 if you read them successfully.

I mean u might need to download these two matrices first and change the path to these txt files in your computer.

I previously provided these files in the link. In case you miss it.

the link is here:

I cannot directly upload these file because the system keeps saying that The attachment's vol.txt content type (text/plain) does not match its file extension and has been removed.

Regards,

haoxiang

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Haoxiang,

Thanks for the details.

The issue is reproducible from our end as well. We are working on this issue, we will get back to you soon.

Regards,

Vidya.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

Thanks for your patience.

>>I test the MKL lib in Sequential mode. It takes 103.175s for Eigen decomposition. That's weird. Does it mean the multi-threading does not work for my cases?

Below are the observations and recommendations of our developers:

In the sequential result, the solver is not converged (pm_9=-1), while it is converged in the parallel result. The poor performance is because the smallest eigenvalue is close to zero and at least 8 orders of magnitude smaller than the others.

The smallest eigenvalue is ~10e-1 and the largest is ~10e+14.

The matrices provided by you are very sparse. More precisely the first matrix is the diagonal one, and the sparsity of the second matrix is a bit higher than 1%. mkl_sparse_d_ev is a sparse eigensolver and as a consequence, sparse matrix-vector multiply is the most time-consuming operation. Since the sparsity of the second matrix is very and very low it's impossible to provide efficient parallelization of sparse matrix-vector multiply for a matrix with roughly 70K of non-zeros simply because it's not enough computational work for many threads. This explains why only 1 thread is faster than 2 or 4 threads in this case.

There are several options to find the smallest eigenvalues faster when they are close to zero.

1. First of all, it is recommended to shift and invert spectral transformation. More precisely, instead of looking smallest eigenvalues for

Ax= lambda Bx

we'll be looking for the largest eigenvalues mu for the eigenvalue problem

( A - shift B)^(-1) B x= mu x

where mu = 1 / (lambda - shift) or your original eigenvalues are lambda_j = shift + 1/ mu_j. Also, it might be any shift smaller than the smallest eigenvalue provides faster convergence. It's well known that the convergence rate for the largest eigenvalues is essentially larger than for the smallest eigenvalues.

2. Transform the initial problem for finding the smallest eigenvalues to the problem of finding eigenvalues within a given interval. That means you must set an interval containing the smallest eigenvalues and use setting pm[2] = 2 for mkl_sparse_d_gv. In this case, the subspace iteration technique based on the FEAST algorithm will be used instead of the Krylov_Shur algorithm. However, it's better to call dfeast_scsrgv directly (mkl_sparse_d_gv calls dfeast_scsrgv for the case pm[2] = 2) since direct call to dfeast_scsrgv provides more options and it's more flexible.

At last, we suggest you try the recommendations and let us know if you need any other information.

Thanks & Regards,

Varsha

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi,

We assume that your query is resolved. If you have any other queries, please start a new thread.

Have a good day!

Thanks & Regards,

Varsha

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Printer Friendly Page