
I originally posted this on the Fortran forum.

The gist of the question/observation is:

I am experimenting with threading combinations: OpenMP in the main app, with each OpenMP thread calling the threaded MKL library.

To facilitate this, OMP_PLACES is used to specify one or more places, each containing one or more hardware threads, and each place is assigned to a respective OpenMP thread of the main app. Each OpenMP thread (pinned to multiple HW threads) then calls MKL, and MKL is expected to use only the HW threads in the calling thread's affinity set.

For example, on a system with 4 NUMA nodes, each with 64 HW threads:

OMP_PLACES={0:64},{64:64},{128:64},{192:64}

The main app's OpenMP region uses 4 threads, each pinned to a different NUMA node (*** which are located in different Windows Processor Groups ***). Each main OpenMP thread then makes its MKL call while pinned to the HW threads of its own NUMA node (and thus its own Processor Group).
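As a sanity check on the interval notation, the following Python sketch (an illustration only, not part of the original experiment) expands an OMP_PLACES value into per-place lists of system proc numbers. It handles only explicit places written with `{lower:length[:stride]}` intervals or comma lists; abstract names such as `cores`, place-interval syntax and `!` exclusions are deliberately not parsed. The helper name `expand_places` is hypothetical. Under the scheme described above, each place's size is also the number of HW threads the corresponding MKL call is meant to use.

```python
import re


def expand_places(spec):
    """Expand an OMP_PLACES value made of explicit places, e.g.
    "{0:64},{64:64}" or "{0,4,8}", into lists of system proc numbers.

    Only {lower:length[:stride]} intervals and comma lists inside braces
    are handled; abstract names (threads, cores, sockets), place intervals
    such as "{0:4}:4:4" and '!' exclusions are not.
    """
    places = []
    for body in re.findall(r"\{([^}]*)\}", spec):
        procs = []
        for item in body.split(","):
            fields = [int(f) for f in item.strip().split(":")]
            if len(fields) == 1:                  # single proc number
                procs.append(fields[0])
            else:                                 # lower:length[:stride]
                lower, length = fields[0], fields[1]
                stride = fields[2] if len(fields) == 3 else 1
                procs.extend(lower + i * stride for i in range(length))
        places.append(procs)
    return places


places = expand_places("{0:64},{64:64},{128:64},{192:64}")
for i, place in enumerate(places):
    # one place per NUMA node (and, on this system, per Processor Group)
    print(f"place {i}: procs {place[0]}..{place[-1]} ({len(place)} HW threads)")
```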

The system has System proc numbers 0:255

Each Processor Group has Group relative proc numbers 0:63

The observed behavior leads me to conclude that the MKL (OpenMP) thread pool is not carrying over the calling thread's Processor Group number (it does carry the group-relative proc numbers, i.e. the 64-bit affinity pinning within that Processor Group).

IOW, while the main app's OpenMP threads are bound to individual NUMA nodes, the MKL OpenMP thread pools all end up in Processor Group 0 (NUMA node 0).
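The hypothesis can be made concrete with a small Python model of Windows group affinity: a system proc number splits into a group number plus a group-relative bit in a 64-bit mask. The sketch assumes every Processor Group holds exactly 64 procs, as on the system above (Windows does not guarantee equal-size groups in general), and the helper names `group_affinity` and `system_procs` are hypothetical. The last line models the suspected failure, where the mask survives but the group falls back to 0.

```python
PROCS_PER_GROUP = 64  # assumption: every Processor Group is full, as on this system


def group_affinity(sys_procs):
    """Return (group, 64-bit group-relative mask) for procs that share one group."""
    groups = {p // PROCS_PER_GROUP for p in sys_procs}
    if len(groups) != 1:
        raise ValueError("a group affinity covers exactly one Processor Group")
    mask = 0
    for p in sys_procs:
        mask |= 1 << (p % PROCS_PER_GROUP)
    return groups.pop(), mask


def system_procs(group, mask):
    """Expand a (group, mask) affinity back into system proc numbers."""
    return [group * PROCS_PER_GROUP + r
            for r in range(PROCS_PER_GROUP) if (mask >> r) & 1]


node2 = range(128, 192)               # NUMA node 2 == Processor Group 2 here
group, mask = group_affinity(node2)
print(group, hex(mask))               # 2 0xffffffffffffffff
print(system_procs(group, mask)[:3])  # [128, 129, 130]
# Suspected failure: the mask is kept but the group number is lost (0 assumed),
# so the MKL pool runs on NUMA node 0 instead of node 2.
print(system_procs(0, mask)[:3])      # [0, 1, 2]
```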

Note that this is not strictly a NUMA issue; rather, it is a Processor Group issue. For example, on a 4-socket system with each socket having 1 NUMA node and 32 HW threads, you could conceivably have 4 NUMA nodes in 2 Processor Groups. The problem would then be that MKL called from node 2 would execute in node 0, and MKL called from node 3 would execute in node 1 (I have not determined whether the calling threads remain in their respective group or get re-pinned back into Processor Group 0).

While my example was using NUMA nodes, the issue is with multiple Processor Groups.
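The two-group example in the note above can be checked with a small model. This Python sketch describes the hypothetical 4-socket machine (4 NUMA nodes of 32 HW threads, so nodes 0-1 form Processor Group 0 and nodes 2-3 form Processor Group 1) and assumes, as hypothesized, that only the group-relative proc number survives and the group defaults to 0. Constants and function names are illustrative.

```python
THREADS_PER_NODE = 32  # hypothetical box: 1 NUMA node of 32 HW threads per socket
PROCS_PER_GROUP = 64   # so two NUMA nodes share each Processor Group
NUM_NODES = 4


def node_of(sys_proc):
    return sys_proc // THREADS_PER_NODE


def drop_group(sys_proc):
    """System proc a thread lands on if its group number is lost (group 0 assumed)."""
    return sys_proc % PROCS_PER_GROUP


def landing_nodes(calling_node):
    """NUMA node(s) an MKL pool would run on when called from calling_node."""
    first = calling_node * THREADS_PER_NODE
    procs = range(first, first + THREADS_PER_NODE)
    return sorted({node_of(drop_group(p)) for p in procs})


for node in range(NUM_NODES):
    print(f"MKL called from node {node} executes on node(s) {landing_nodes(node)}")
# nodes 0 and 1 are unaffected; node 2 -> [0], node 3 -> [1]
```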

Jim Dempsey


Hi,

Thanks for reaching out to us.

We are forwarding this issue to the concerned team.

Warm Regards,

Abhishek


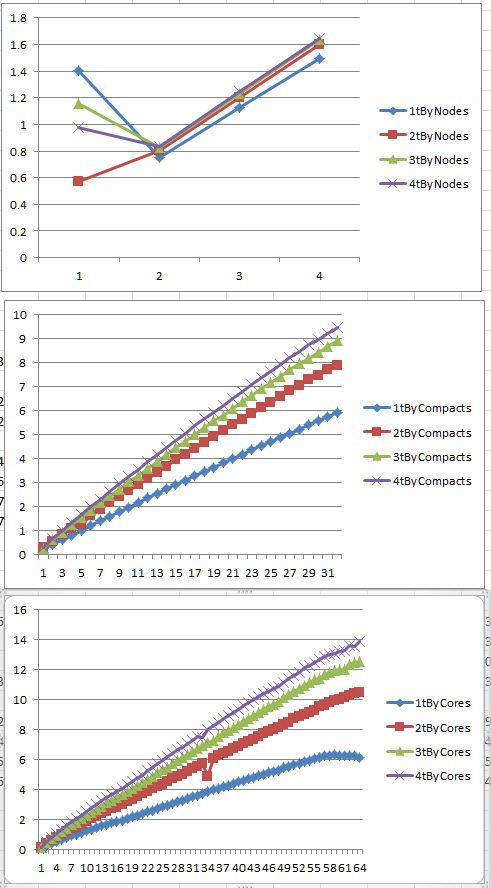

Looking into this further, the charts by NUMA node look goofy, but the charts by L2 and by core look OK.

Let me check the setup parameters for by NUMA node. This may be an error on my part.

Jim Dempsey


On further investigation, it appears that OMP_PLACES is not working as it should (as I understand it).

On a KNL 7210 (64 cores, 4 threads/core):

...

OMP: Info #171: KMP_AFFINITY: OS proc 61 maps to socket 0 core 73 thread 1

OMP: Info #171: KMP_AFFINITY: OS proc 62 maps to socket 0 core 73 thread 2

OMP: Info #171: KMP_AFFINITY: OS proc 63 maps to socket 0 core 73 thread 3

OMP: Info #252: KMP_AFFINITY: pid 21664 tid 19188 thread 0 bound to OS proc set 0

OMP: Info #252: KMP_AFFINITY: pid 21664 tid 22164 thread 1 bound to OS proc set 1

OMP: Info #252: KMP_AFFINITY: pid 21664 tid 23092 thread 2 bound to OS proc set 2

OMP: Info #252: KMP_AFFINITY: pid 21664 tid 18228 thread 3 bound to OS proc set 3

OMP: Info #252: KMP_AFFINITY: pid 21664 tid 5068 thread 4 bound to OS proc set 4

OMP: Info #252: KMP_AFFINITY: pid 21664 tid 15512 thread 5 bound to OS proc set 5

maxThreads 6

MaximumProcessorGroupCount 4

GroupNumber 0 MaximumProcessorCount 64 ActiveProcessorCount 64

GroupNumber 1 MaximumProcessorCount 64 ActiveProcessorCount 64

GroupNumber 2 MaximumProcessorCount 64 ActiveProcessorCount 64

GroupNumber 3 MaximumProcessorCount 64 ActiveProcessorCount 64

nThreads 6

OMP_PLACES=

{0,4,8,12,16,20,24,28,32,36,40,44,48,52,56,60},

{64,68,72,76,80,84,88,92,96,100,104,108,112,116,120,124},

{128,132,136,140,144,148,152,156,160,164,168,172,176,180,184,188},

{192,196,200,204,208,212,216,220,224,228,232,236,240,244,248,252}

OMPthreadToGroupNumber: OMPthread 0 Group 0 procInGroup 0 affinityInGroup 1 NumaNode 0

OMPthreadToGroupNumber: OMPthread 1 Group 0 procInGroup 1 affinityInGroup 2 NumaNode 0

OMPthreadToGroupNumber: OMPthread 2 Group 0 procInGroup 2 affinityInGroup 4 NumaNode 0

OMPthreadToGroupNumber: OMPthread 3 Group 0 procInGroup 3 affinityInGroup 8 NumaNode 0

OMPthreadToGroupNumber: OMPthread 4 Group 0 procInGroup 4 affinityInGroup 16 NumaNode 0

OMPthreadToGroupNumber: OMPthread 5 Group 0 procInGroup 5 affinityInGroup 32 NumaNode 0

I took the liberty of editing the above for readability.
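For reference, the explicit place list above (one place per Processor Group, holding HT0 of each of that group's 16 cores) can be generated programmatically, and the same places can be written more compactly with the OMP_PLACES `{lower:length:stride}` interval syntax. This Python sketch assumes the four HW threads of a core have consecutive system proc numbers, which is consistent with the KMP_AFFINITY map lines shown above; the constants and function names are illustrative.

```python
GROUPS = 4             # Processor Groups reported above
PROCS_PER_GROUP = 64
THREADS_PER_CORE = 4   # KNL 7210: 4 HW threads per core


def ht0_places():
    """One place per Processor Group, containing HT0 of every core in it.
    Assumes HT0..HT3 of a core are consecutive system proc numbers."""
    return [list(range(g * PROCS_PER_GROUP, (g + 1) * PROCS_PER_GROUP,
                       THREADS_PER_CORE))
            for g in range(GROUPS)]


def explicit(places):
    """Render places as explicit comma lists: {0,4,...},{64,68,...}."""
    return ",".join("{" + ",".join(map(str, p)) + "}" for p in places)


def interval(places):
    """Render each place as {lower:length:stride}."""
    return ",".join(f"{{{p[0]}:{len(p)}:{THREADS_PER_CORE}}}" for p in places)


places = ht0_places()
print(explicit(places))
print(interval(places))  # {0:16:4},{64:16:4},{128:16:4},{192:16:4}
```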

Each place is (supposed to be) the set of system proc numbers listed.

Each place ought to represent the affinities permitted to the OpenMP thread(s) assigned to that place.

In the above there are 4 places, and I am assigning 6 threads. Each place lists the system proc # of HT0 of each core within one Processor Group.
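To make the first issue concrete: a thread bound to one of these places should receive a group affinity whose mask has one bit per listed proc. The Python sketch below (hypothetical helper names, assuming 64 procs per Processor Group) computes that mask for each place and compares it with what the OMPthreadToGroupNumber output reports, namely group 0 with the single bit `1 << t` for thread t. The place each thread is expected to use follows the round-robin reading in this post (thread t to place t % 4).

```python
PROCS_PER_GROUP = 64   # assumption: full 64-proc Processor Groups
NUM_PLACES = 4


def place_affinity(place):
    """(group, 64-bit mask) that a thread bound to `place` should receive."""
    groups = {p // PROCS_PER_GROUP for p in place}
    if len(groups) != 1:
        raise ValueError("place spans more than one Processor Group")
    mask = 0
    for p in place:
        mask |= 1 << (p % PROCS_PER_GROUP)
    return groups.pop(), mask


# HT0 of each core, one place per Processor Group (as in the OMP_PLACES above)
places = [list(range(g * PROCS_PER_GROUP, (g + 1) * PROCS_PER_GROUP, 4))
          for g in range(NUM_PLACES)]

for t in range(6):
    exp_group, exp_mask = place_affinity(places[t % NUM_PLACES])
    obs_group, obs_mask = 0, 1 << t   # transcribed from the diagnostic output
    print(f"thread {t}: expected group {exp_group} mask {exp_mask:#018x}, "
          f"observed group {obs_group} mask {obs_mask:#018x}")
```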

Two issues:

OpenMP thread 0 was assigned to place 0 (OK), but it should have affinity to all the threads in place 0, not just 1 thread.

OpenMP thread 1 was assigned to place 0 and to HT1 of core 0, as opposed to place 1 with all threads of place 1.

...

OpenMP thread 4 should have been mapped back to place 0 (as there are only 4 places and the place assignment should wrap around), with all threads of place 0.

OpenMP thread 5 should have been mapped back to place 1, with all threads of place 1.

At least this is my understanding of OMP_PLACES.
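On the wrap-around question, it may help to compare the mapping expected above with how the OpenMP specification describes the `close` binding policy. As I read the spec, when there are more threads (T) than places (P), `close` puts floor(T/P) or ceil(T/P) threads with consecutive thread numbers on each place, and which places get the extra threads is implementation defined; the policy actually in effect depends on OMP_PROC_BIND and the runtime's defaults. The Python sketch below models both mappings, not any runtime's code; it assumes the initial thread starts on place 0 and that the extra threads go to the first places. Under either mapping each thread is bound to all procs of its place, so the single-proc bindings in the log match neither.

```python
def round_robin(num_threads, num_places):
    """Mapping expected in the post: thread t goes to place t % P (wrap around)."""
    return [t % num_places for t in range(num_threads)]


def close_blocks(num_threads, num_places):
    """One permitted reading of OMP_PROC_BIND=close: consecutive thread
    numbers share a place, floor(T/P) or ceil(T/P) threads per place.
    Assumption: extra threads go to the first T % P places, starting at 0."""
    base, extra = divmod(num_threads, num_places)
    result = []
    for place in range(num_places):
        result += [place] * (base + (1 if place < extra else 0))
    return result


print(round_robin(6, 4))   # [0, 1, 2, 3, 0, 1]
print(close_blocks(6, 4))  # [0, 0, 1, 1, 2, 3]
```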

Comments please?

Jim Dempsey


Yes, there are probably some problems with the behavior of mkl_set_num_threads().

If you see the same problem with the latest version of MKL, you may submit the issue to the Online Service Center (https://supporttickets.intel.com/servicecenter?lang=en-US).
