
Hi all,

When I used the DNN operations of the Math Kernel Library (MKL) on Windows 7 with Visual Studio 2015, they worked well: the convolution and max-pooling functions gave the same results, only faster.

However, on Windows 10 the convolution functions return different values.

Do you have any ideas?

Thanks.


Hi Jessica,

Could you please provide a sample case that reproduces your problem? Thank you.

Best regards,

Fiona


Hi Fiona,

Sure, thanks for the reply.

Here is the convolution code snippet:

```c
float *weights = layer.weights;
float *input = state.input;

///////////////////// mkl convolution ///////////////////////
mkl_set_num_threads(4);

size_t inp_size[] = { layer.h, layer.w, layer.c, 1 };
size_t out_size[] = { out_h, out_w, m, 1 };
size_t filt_size[] = { layer.size, layer.size, layer.c, m };
size_t stride[] = { layer.stride, layer.stride };
int pad[] = { -layer.pad, -layer.pad };

dnnLayout_t conv_output = NULL;
dnnPrimitive_t conv = NULL;
dnnPrimitiveAttributes_t attributes = NULL;
float *res_conv[dnnResourceNumber] = { 0 };
dnnError_t err;

CHECK_ERR(dnnPrimitiveAttributesCreate_F32(&attributes), err);
CHECK_ERR(dnnConvolutionCreateForward_F32(&conv, attributes, dnnAlgorithmConvolutionDirect, 4,
    inp_size, out_size, filt_size, stride, pad, dnnBorderZeros), err);

res_conv[dnnResourceSrc] = input;
res_conv[dnnResourceFilter] = weights;

CHECK_ERR(dnnLayoutCreateFromPrimitive_F32(&conv_output, conv, dnnResourceDst), err);
CHECK_ERR(dnnAllocateBuffer_F32((void**)&res_conv[dnnResourceDst], conv_output), err);

// execution
CHECK_ERR(dnnExecute_F32(conv, (void*)res_conv), err);
```

The inputs res_conv[dnnResourceSrc] and res_conv[dnnResourceFilter] are the same, but res_conv[dnnResourceDst] gets different values on different machines.

Do you have any idea why?

Best regards,

Jessica

Fiona Z. (Intel) wrote:

Hi Jessica,

Could you please provide a sample case that reproduces your problem? Thank you.

Best regards,

Fiona


Hi Jessica,

I am confused by your code sample. It seems you want to read data from a file and calculate with a custom layout; however, you only create a layout for the output, not for the weights and input data. You also do not create conversion primitives (dnnPrimitive_t) from your dnnLayout_t layouts, and you do not call dnnConversionExecute_F32 before executing. With your code as written, you might always get a result of 0. I fixed your sample as below, and I always get the same result:

```cpp
#include <stdio.h>
#include <iostream>
#include <mkl.h>
#include <stdlib.h>

struct ConvWeight {
    int M;
    int N;
    int K;
    float * weight; // M*N*K elements
    float * bias;   // K elements
};

#define CHECK_ERR(f) { auto err = f; if(err != E_SUCCESS) { std::cout << "DNN Error " << err << " in line " << __LINE__ << std::endl; exit(-1); } }

void mkl_conv(ConvWeight layer, float * x, int xm, int xn, float * y, int ym, int yn)
{
    float *weights = layer.weight;
    //float *input = x;
    const int M = layer.M;
    const int N = layer.N;
    const int K = layer.K;

    ///////////////////// mkl convolution ///////////////////////
    mkl_set_num_threads(4);

    /*size_t inp_size[] = { layer.h, layer.w, layer.c, 1 };
    size_t out_size[] = { out_h, out_w, m, 1 };
    size_t filt_size[] = { layer.size, layer.size, layer.c, m };
    size_t stride[] = { layer.stride, layer.stride };
    int pad[] = { -layer.pad, -layer.pad };*/

    // Explicit casts avoid int -> size_t narrowing errors in brace initialization.
    size_t inp_size[4] = { (size_t)xm, 1, (size_t)xn, 1 };
    size_t out_size[4] = { (size_t)ym, 1, (size_t)yn, 1 };
    size_t filt_size[4] = { (size_t)M, 1, (size_t)N, (size_t)K };
    size_t stride[2] = { 1, 1 };
    int pad[2] = { 0, 0 };

    dnnLayout_t conv_output = NULL;
    dnnPrimitive_t conv = NULL;
    dnnPrimitiveAttributes_t attributes = NULL;
    float *res_conv[dnnResourceNumber] = { 0 };

    CHECK_ERR(dnnPrimitiveAttributesCreate_F32(&attributes));
    CHECK_ERR(dnnConvolutionCreateForward_F32(&conv, attributes, dnnAlgorithmConvolutionDirect, 4,
        inp_size, out_size, filt_size, stride, pad, dnnBorderZeros));

    // User buffers are passed directly; this assumes the primitive's
    // src/filter/dst layouts match the plain layout of these buffers.
    res_conv[dnnResourceSrc] = x;
    res_conv[dnnResourceFilter] = weights;
    res_conv[dnnResourceDst] = y;

    // CHECK_ERR(dnnLayoutCreateFromPrimitive_F32(&conv_output, conv, dnnResourceDst));
    //CHECK_ERR(dnnAllocateBuffer_F32((void**)&res_conv[dnnResourceDst], conv_output));

    // execution
    CHECK_ERR(dnnExecute_F32(conv, (void **)res_conv));

    CHECK_ERR(dnnDelete_F32(conv));
    CHECK_ERR(dnnPrimitiveAttributesDestroy_F32(attributes));
}

int main(int argc, char* argv[])
{
    const int X = 33;
    const int M = 13;
    const int N = 15;
    const int K = 17;
    const int Y = X - M + 1;

    ConvWeight cw;
    cw.M = M;
    cw.N = N;
    cw.K = K;
    cw.bias = (float*)mkl_malloc(K*sizeof(float), 128);
    cw.weight = (float*)mkl_malloc(M*N*K*sizeof(float), 128);

    // Input is [X,1,N]
    float * input = (float*)mkl_malloc(X*N*sizeof(float), 128);
    // Output is [Y,1,K]
    float * output1 = (float*)mkl_malloc(Y*K*sizeof(float), 128);
    float * output2 = (float*)mkl_malloc(Y*K*sizeof(float), 128);

    for (int i = 0; i < K; i++)
        cw.bias[i] = float(i + 1) / float(K);
    for (int i = 0; i < M*N*K; i++)
        cw.weight[i] = float(i + 1) / float(M*N*K);
    for (int i = 0; i < X*N; i++)
        input[i] = float(i + 1) / float(X*N);

    mkl_conv(cw, input, X, N, output2, Y, K);

    FILE* f = fopen("mkl_dnn.txt", "w");
    if (f != NULL)
    {
        fprintf(f, "Results:\n");
        for (int i = 0; i < Y; i++)
        {
            for (int j = 0; j < K; j++)
            {
                fprintf(f, "%f\t", output2[i*K + j]);
            }
            fprintf(f, "\n");
        }
        fclose(f);
    }

    // Release all MKL-allocated buffers.
    mkl_free(output2);
    mkl_free(output1);
    mkl_free(input);
    mkl_free(cw.weight);
    mkl_free(cw.bias);
    return 0;
}
```

Alternatively, you could follow our example code for DNN convolution. Thanks.
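The sizes in these samples follow the usual output-size rule for a direct convolution without dilation (the sizes here satisfy it): with input extent `in`, kernel extent `k`, stride `s` and zero padding `p` on each side, out = (in + 2p - k) / s + 1, so Y = X - M + 1 for stride 1 and no padding. A minimal C helper for sanity-checking `out_size` before creating the primitive, assuming padding is expressed as p = -inputOffset, as in the `pad` arrays above; the name `conv_out_dim` is hypothetical:

```c
#include <stddef.h>

/* Output extent of a direct (no-dilation) convolution along one axis.
   in: input extent, k: kernel extent, stride: step,
   pad: zeros added on each side (pad = -inputOffset in the samples).
   Assumes in + 2*pad >= k; otherwise there is no valid output position. */
static size_t conv_out_dim(size_t in, size_t k, size_t stride, size_t pad)
{
    return (in + 2 * pad - k) / stride + 1;
}
```

For example, `conv_out_dim(33, 13, 1, 0)` gives 21, matching `Y` in the fixed sample, and `conv_out_dim(5, 3, 2, 0)` gives 2 for a 5x5 input, 3x3 filter and stride 2.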


Hi Fiona,

Thanks again.

I know what you mean; the code I gave is just a snippet. The convolution input and weights are read from a file into float pointers.

It's strange to me: when I compute on Windows 7 with VS2015, the results are correct, but the same code gives different results on Windows 10. Not 0, but other floating-point numbers.

I have been confused for a couple of days.

Best regards,

Jessica


Hi Jessica,

I am testing on Windows 10, but it is very hard to reproduce the problem from your snippet. It would be great if you could provide a complete case together with the correct and wrong results you get on the two systems. You can submit the case through a private message or submit a ticket at the Intel Online Service Center. Please also attach the results you get; I would like to know how much they differ. Are they slightly different or totally different? Thanks.

Best regards,

Fiona
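Whether two outputs are "slightly" or "totally" different can be answered numerically by comparing the dumped results element by element. A minimal C sketch, assuming both buffers hold the same number of floats in the same order; the names `diff_stats` and `compare_results` are hypothetical:

```c
#include <stddef.h>

typedef struct {
    float max_abs_diff;  /* largest |a[i] - b[i]| */
    float max_rel_diff;  /* largest |a[i] - b[i]| / max(|a[i]|, |b[i]|) */
    size_t worst_index;  /* index of the largest absolute difference */
} diff_stats;

static float abs_f(float v) { return v < 0.0f ? -v : v; }

static diff_stats compare_results(const float *a, const float *b, size_t n)
{
    diff_stats st = { 0.0f, 0.0f, 0 };
    for (size_t i = 0; i < n; ++i) {
        float d = abs_f(a[i] - b[i]);
        float scale = abs_f(a[i]) > abs_f(b[i]) ? abs_f(a[i]) : abs_f(b[i]);
        if (d > st.max_abs_diff) {
            st.max_abs_diff = d;
            st.worst_index = i;
        }
        /* Skip the relative measure where both values are exactly zero. */
        if (scale > 0.0f && d / scale > st.max_rel_diff)
            st.max_rel_diff = d / scale;
    }
    return st;
}
```

As a rough rule of thumb for values not close to zero, relative differences around 1e-6 to 1e-5 are what one would expect when a library takes a different code path and sums in a different order, while relative differences near 1 usually indicate a usage or layout problem rather than rounding.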


Hi Fiona,

Thanks a lot.

It's hard to say. I also ran a simple test on both systems, and the results were clearly correct on both. Here is the simple sample:

```c
#include <stdio.h>
#include <mkl.h>

int main()
{
    float data[] = {
        1,1,1,1,1,
        3,3,3,3,3,
        2,2,2,2,2,
        4,4,4,4,4,
        3,3,3,3,3,
        1,1,1,1,1,
        2,2,2,2,2,
        3,3,3,3,3,
        4,4,4,4,4,
        5,5,5,5,5 };
    float k[] = {
        1, 1, 1,
        1, 0, 0,
        1, 1, 1,
        1, 1, 1,
        1, 1, 1,
        1, 0, 0,
        1, 1, 1,
        1, 0, 0,
        1, 1, 1,
        1, 0, 0,
        1, 1, 1,
        1, 1, 1 };
    float *out = mkl_calloc(8, sizeof(float), 0);
    dnnError_t err;

    // output size: out_w, out_h, channel, batch_size
    size_t out_size[] = { 2, 2, 2, 1 };
    // input size: in_w, in_h, channel, batch_size
    size_t inp_size[] = { 5, 5, 2, 1 };
    // filter size: k_w, k_h, in_channels, out_channels
    size_t filt_size[] = { 3, 3, 2, 2 };
    size_t conv_stride[2] = { 2, 2 };
    // input offset (pad); negative values pad the input
    int inp_offset[2] = { 0, 0 };

    dnnPrimitive_t conv = NULL;
    float* res_conv[dnnResourceNumber] = { 0 };
    dnnPrimitiveAttributes_t attributes = NULL;

    dnnPrimitiveAttributesCreate_F32(&attributes);
    dnnConvolutionCreateForward_F32(&conv, attributes, dnnAlgorithmConvolutionDirect, 4,
        inp_size, out_size, filt_size, conv_stride, inp_offset, dnnBorderZeros);

    res_conv[dnnResourceSrc] = data;
    res_conv[dnnResourceFilter] = k;
    res_conv[dnnResourceDst] = out;

    dnnExecute_F32(conv, (void*)res_conv);

    dnnDelete_F32(conv);
    dnnPrimitiveAttributesDestroy_F32(attributes);

    for (int i = 0; i < 8; ++i) {
        printf("%f ", out[i]);
    }

    mkl_free(out);
    getchar();
    return 0;
}
```

But on my own project, it tooks wrong,

I will print parts of reults of input of convolution before execute and output after execute,

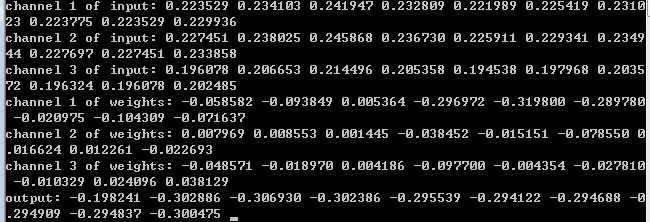

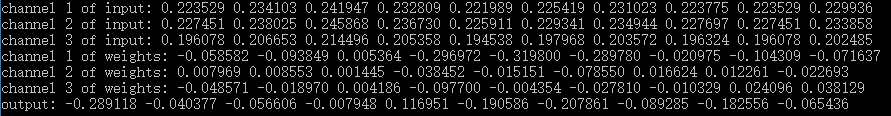

win7:

win10:

you can see, the inputs are the same, but output are totally different.

I really don't konw how can I do..Thanks.

Best regards,

Jessica
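A naive reference convolution is a useful cross-check for results like these, because it fixes the memory layout explicitly. The sketch below is plain C without MKL. It assumes the layouts implied by the size arrays in the simple sample (innermost dimension listed first), that the library computes a cross-correlation (no filter flip, as is usual for DNN convolutions), batch size 1 and no padding. The function name `ref_conv_forward` is hypothetical:

```c
#include <stddef.h>

/* Plain reference forward convolution (cross-correlation), batch 1, no padding.
   Assumed layouts (innermost dimension last here, i.e. first in MKL size arrays):
     src:    [ic][ih][iw]       size {iw, ih, ic, 1}
     filter: [oc][ic][kh][kw]   size {kw, kh, ic, oc}
     dst:    [oc][oh][ow]       size {ow, oh, oc, 1}                      */
static void ref_conv_forward(const float *src, size_t iw, size_t ih, size_t ic,
                             const float *filt, size_t kw, size_t kh, size_t oc,
                             size_t stride, float *dst)
{
    size_t ow = (iw - kw) / stride + 1;
    size_t oh = (ih - kh) / stride + 1;
    for (size_t o = 0; o < oc; ++o)
        for (size_t y = 0; y < oh; ++y)
            for (size_t x = 0; x < ow; ++x) {
                float acc = 0.0f;
                for (size_t c = 0; c < ic; ++c)
                    for (size_t r = 0; r < kh; ++r)
                        for (size_t s = 0; s < kw; ++s)
                            acc += src[(c * ih + y * stride + r) * iw + x * stride + s]
                                 * filt[((o * ic + c) * kh + r) * kw + s];
                dst[(o * oh + y) * ow + x] = acc;
            }
}
```

For the simple sample's `data` and `k` arrays with stride 2, this produces 24 24 45 45 28 28 49 49 in [oc][oh][ow] order. If a library's output contains the same values in a different order, that points to a layout difference rather than an arithmetic error.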


Hi Jessica,

Would it be possible to share your complete project (use "Send Author A Message")? You can send it via a private message that only Intel staff can see. I could not reproduce the problem with a simple single-step calculation, so I personally believe it may not be a problem with the convolution interface in MKL 2017 Update 3. Could you please tell me which version of MKL you are using? For MKL 2017 Update 3, the second argument of the execution function dnnExecute_F32 should probably be passed as type void **: dnnExecute_F32(conv, (void**)res_conv)

Best regards,

Fiona


Hi Fiona,

Thanks, and sorry about this. It seems to be an AVX2 problem: the older machine didn't support AVX2, so it worked there.

I also tested on some other machines, and that seems to hold.

How can I solve this problem? Thanks.

Best regards,

Jessica
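When narrowing down a problem like this, it helps to record which machines report AVX2. A minimal sketch, assuming GCC or Clang on x86, where `__builtin_cpu_supports` is a compiler built-in; with Visual Studio the same information would come from `__cpuidex`, which is not shown. The helper name `cpu_has_avx2` is hypothetical, and this only reports what the CPU supports: MKL's own dispatcher (and settings such as `MKL_CBWR`) decides which code path MKL actually runs.

```c
/* Returns 1 if the CPU reports AVX2 support, 0 otherwise.
   Assumes GCC or Clang; on non-x86 targets it simply returns 0. */
static int cpu_has_avx2(void)
{
#if defined(__GNUC__) && (defined(__x86_64__) || defined(__i386__))
    __builtin_cpu_init();  /* only strictly required before constructors run */
    return __builtin_cpu_supports("avx2") != 0;
#else
    return 0;
#endif
}
```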


Hi Fiona,

Now I'm sure it is the AVX2 problem: when I set the environment variable MKL_CBWR=AVX, the results are correct.

Thanks for your time, it means a lot to me.

Best Regards,

Jessica


Dear Fiona,

How can I submit the case through a private message?

I'm very confused: why are the results different when I set the environment variable MKL_CBWR=AVX2 instead of MKL_CBWR=AVX?

I'll send you the resource data. Could you help me verify it?


Hi Jessica,

You can send a private message with "Send Author A Message". Private messages can only be seen by you and Intel staff. Thanks.

Best regards,

Fiona


Hi Fiona,

Thanks for the reply; I just can't find that option.

I have already uploaded the project to this topic: https://software.intel.com/en-us/node/738438

Could you please help verify it?

I also found that it works on the x86 platform, but not on x64.

Best regards,

Jessica


Hi Jessica,

Thanks. We are investigating it, and I will reply soon.

Best regards,

Fiona


Hi Jessica,

We found the root cause: it looks like the convolution primitive is not being used correctly. This particular example assumes that the convolution takes input in NCHW format and produces NCHW output, while in reality it can use different formats depending on the problem shape and the architecture. So, in general, you always need to query the primitive for the layout it requires, convert your input to that layout, and then convert the output back to your original layout. I modified your program (please check the attached file), and the results of the AVX and AVX2 implementations are now the same. Thanks.

Best regards,

Fiona
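The effect described above can be reproduced without MKL. The sketch below converts a plain NCHW buffer (batch 1) into a blocked layout in the style of nChw8c, where channels are grouped in blocks of 8 and the channels of one block are stored next to each other for every (h, w) position, and back again. It is only an illustration: the thread does not say which internal layout the MKL primitive actually chose, and the names `BLK`, `blocked_size`, `nchw_to_nchw8c` and `nchw8c_to_nchw` are hypothetical.

```c
#include <stddef.h>

#define BLK 8  /* channel block size of the illustrative blocked layout */

/* Number of floats needed for the blocked copy (channels padded to BLK). */
static size_t blocked_size(size_t c, size_t h, size_t w)
{
    return ((c + BLK - 1) / BLK) * BLK * h * w;
}

/* NCHW -> blocked: dst[((cb*h + y)*w + x)*BLK + ci] holds channel cb*BLK + ci.
   Padding channels beyond c are filled with zeros. */
static void nchw_to_nchw8c(const float *src, size_t c, size_t h, size_t w, float *dst)
{
    size_t nblk = (c + BLK - 1) / BLK;
    for (size_t cb = 0; cb < nblk; ++cb)
        for (size_t y = 0; y < h; ++y)
            for (size_t x = 0; x < w; ++x)
                for (size_t ci = 0; ci < BLK; ++ci) {
                    size_t ch = cb * BLK + ci;
                    size_t d = ((cb * h + y) * w + x) * BLK + ci;
                    dst[d] = ch < c ? src[(ch * h + y) * w + x] : 0.0f;
                }
}

/* Blocked -> NCHW: the inverse mapping, dropping the padding channels. */
static void nchw8c_to_nchw(const float *src, size_t c, size_t h, size_t w, float *dst)
{
    for (size_t ch = 0; ch < c; ++ch)
        for (size_t y = 0; y < h; ++y)
            for (size_t x = 0; x < w; ++x) {
                size_t cb = ch / BLK, ci = ch % BLK;
                dst[(ch * h + y) * w + x] = src[((cb * h + y) * w + x) * BLK + ci];
            }
}
```

With 2 channels and a 2x2 image holding 0..7, the blocked buffer starts 0, 4, 0, 0, 0, 0, 0, 0, 1, 5, so code that reads it as NCHW sees scrambled values even though every number is present. In the MKL DNN API the corresponding round trip describes the user buffer with dnnLayoutCreate_F32, queries the primitive's layout with dnnLayoutCreateFromPrimitive_F32, compares the two with dnnLayoutCompare_F32, and, when they differ, converts with a primitive from dnnConversionCreate_F32 run via dnnConversionExecute_F32.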


Hi Fiona,

Thanks for your reply!!!

It worked for me!

Best regards,

Jessica
