Hi everyone! I have a question about migrating this CUDA kernel:

kernel<<< blocks, threads >>>(...);

DPCT migrates this kernel as:

Hi,

Thanks for reaching out to us.

>> So, how can I ask for the maximum blocks and threads?

To check the supported maximum work-group size, or any other information about your device, you can run the 'clinfo' command in your terminal.

For more info on the DPCT alert, kindly refer to this link: https://www.intel.com/content/www/us/en/develop/documentation/intel-dpcpp-compatibility-tool-user-guide/top/diagnostics-reference/dpct1049.html#dpct1049_id-dpct1049

Regards,

Shwetha

Hey! Thank you. Using that, I now know the maximum group size. How can I get the maximum number of threads per group? I couldn't find that.

Hi,

The "clinfo" command prints all the necessary information about your device on the command line.

Max work group size = max no. of threads allowed in CUDA's block

Max work item size = max no. of threads allowed in CUDA's grid

(Max work item size / Max work group size) = max no. of blocks allowed in CUDA's grid at any given instance

The same info can be obtained from the DPC++ API by querying the device:

- "device.get_info<sycl::info::device::max_work_group_size>()" - The maximum number of work-items that are permitted in a work-group executing a kernel on a single compute unit.

- "device.get_info<sycl::info::device::max_work_item_sizes>()" - The maximum number of work-items that are permitted in each dimension of the work-group of the nd_range.

Thanks & Regards,

Shwetha

Hi,

A gentle reminder to respond.

Regards,

Shwetha

Hey! Thank you so much for your help.

With max_work_group_size I'm getting 1024.

With max_work_item_sizes I'm getting (64, 1024, 1024).

So, my kernel looks like this

Hi, thank you so much for your help.

max_work_group_size is 1024

max_work_item_sizes is (64, 1024, 1024)

With my kernel:

Hi,

Small correction to our previous understanding.

Max work group size = Maximum number of threads allowed per block.

Max work item size = Maximum number of threads allowed in each dimension of a work group.

This implies that at any given moment, the maximum number of threads within the work group shouldn't exceed max work group size.
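As an illustration of how the two limits interact, here is a minimal sketch in plain C++ (no SYCL headers needed). `WorkGroupLimits` and `fits_limits` are hypothetical names, not part of any SYCL API; the values are assumed to come from the `max_work_group_size` and `max_work_item_sizes` device queries discussed in this thread.

```cpp
#include <array>
#include <cassert>
#include <cstddef>

// Hypothetical container for the two device limits discussed in this thread.
struct WorkGroupLimits {
    std::size_t max_work_group_size;                 // e.g. 1024
    std::array<std::size_t, 3> max_work_item_sizes;  // e.g. {64, 1024, 1024}
};

// Returns true if a 3D work-group shape respects both limits:
// every extent fits its per-dimension cap, and the total number of
// work-items (the product of the extents) fits max_work_group_size.
bool fits_limits(const std::array<std::size_t, 3>& local,
                 const WorkGroupLimits& limits) {
    assert(limits.max_work_group_size > 0);  // devices report at least 1
    std::size_t total = 1;
    for (std::size_t d = 0; d < 3; ++d) {
        if (local[d] == 0 || local[d] > limits.max_work_item_sizes[d]) {
            return false;  // zero extent or per-dimension cap exceeded
        }
        // Equivalent to total * local[d] > max, written to avoid overflow.
        if (local[d] > limits.max_work_group_size / total) {
            return false;  // total work-items would exceed the group cap
        }
        total *= local[d];
    }
    return true;
}
```

With the values reported earlier in this thread (1024 and (64, 1024, 1024)), a shape of (64, 16, 1) passes because 64 * 16 * 1 = 1024, while (64, 1024, 1) fails: each extent is within its per-dimension cap, but the product exceeds 1024.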

To calculate maximum number of blocks per grid,

Max no. of blocks = Max Threads / threads requested by user.

And Maximum threads can be calculated by,

Max Threads = Max compute unit * max work group size

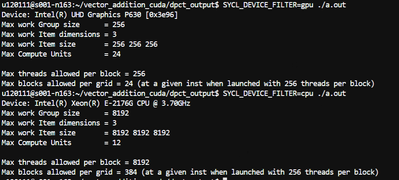

For further details, please refer to the code below and the attached output snapshot for both GPU and CPU devices.

    #include <CL/sycl.hpp>
    #include <cstddef>
    #include <iostream>
    #include <string>

    int main()
    {
        // Default-selected device; the output depends on which device is picked.
        sycl::queue q_ct1 = sycl::queue();
        auto device = q_ct1.get_device();

        // Query the limits that constrain a kernel launch.
        auto max_work_group_size = device.get_info<cl::sycl::info::device::max_work_group_size>();
        auto max_work_item_dimensions = device.get_info<cl::sycl::info::device::max_work_item_dimensions>();
        auto max_work_item_sizes = device.get_info<cl::sycl::info::device::max_work_item_sizes>();
        auto max_compute_units = device.get_info<cl::sycl::info::device::max_compute_units>();
        std::string d_name = device.get_info<cl::sycl::info::device::name>();

        std::cout << "Device: " << d_name << std::endl;
        std::cout << "Max work Group size = " << max_work_group_size << std::endl;
        std::cout << "Max work Item dimensions = " << max_work_item_dimensions << std::endl;
        std::cout << "Max work Item size = " << max_work_item_sizes[0] << " " << max_work_item_sizes[1] << " "
                  << max_work_item_sizes[2] << std::endl;
        std::cout << "Max Compute Units = " << max_compute_units << std::endl;

        // Threads per block requested by the user (example value).
        std::size_t requested_threads = 256;
        // size_t instead of int, so the product is not narrowed.
        std::size_t max_threads = max_compute_units * max_work_group_size;
        std::size_t max_blocks = max_threads / requested_threads;

        std::cout << std::endl;
        std::cout << "Max threads allowed per block = " << max_work_group_size << std::endl;
        std::cout << "Max blocks allowed per grid = " << max_blocks << " (at a given instant, when "
                  << requested_threads << " threads are requested per block)" << std::endl;

        return 0;
    }

Thanks & Regards,

Shwetha.

Hi @ShwethaS_Intel, thank you so much for your help.

The only doubt I have is about "max_compute_units". On GPUs, is this number related to what we are working with? From what I have read, it seems to be the number of SMs in a GPU, so it's not related to threads or blocks. Is that right? If so, how could we modify the code so that it works for both GPU and CPU?

Thank you again

Hi @ManuelCostanzo2,

>> The only doubt I have is about "max_compute_units".

Yes, max_compute_units is equivalent to the number of SMs in a GPU, and it is required to calculate the maximum number of threads.

>> how could we modify the code to match for both GPU and CPU?

It's up to the discretion of the user. Before launching a kernel, query the device info to determine an appropriate number of threads/blocks, and then set the limits accordingly. This way the same code can work on both CPU and GPU.
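One possible way to apply this advice is sketched below in plain C++. It is an assumption-laden illustration, not the method DPCT generates: `LaunchConfig` and `make_launch_config` are hypothetical names, and `max_work_group_size` is assumed to be the value returned by the device query shown earlier in this thread. The sketch covers the 1D case only; the per-dimension limit from `max_work_item_sizes` would need a similar check.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Hypothetical result type: a 1D launch configuration.
struct LaunchConfig {
    std::size_t local_size;   // work-items per work-group (CUDA: threads per block)
    std::size_t num_groups;   // number of work-groups (CUDA: blocks)
    std::size_t global_size;  // local_size * num_groups
};

// Clamp the requested work-group size to the device limit, then use
// enough work-groups to cover total_items, rounding up.
LaunchConfig make_launch_config(std::size_t total_items,
                                std::size_t requested_local,
                                std::size_t max_work_group_size) {
    assert(total_items > 0 && requested_local > 0 && max_work_group_size > 0);
    const std::size_t local = std::min(requested_local, max_work_group_size);
    const std::size_t groups = (total_items + local - 1) / local;  // ceiling division
    return {local, groups, local * groups};
}
```

Because the global size is rounded up to a multiple of the work-group size, the kernel body still needs a bounds check (index < total_items) so the extra work-items do nothing. The same function can be called with the limit reported by a CPU or a GPU device, which is what lets the launch adapt to either.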

Hope these details will help you to resolve your queries.

Thanks & Regards,

Shwetha.

Hi,

Has the information provided helped?

If this resolves your issue, make sure to accept this as a solution. Thank you!

Regards,

Shwetha.

Hi,

I have not heard back from you. This thread will no longer be monitored by Intel.

If you need further assistance, please post a new question.

Thanks & Regards,

Shwetha.