Hello community, here is the short story:

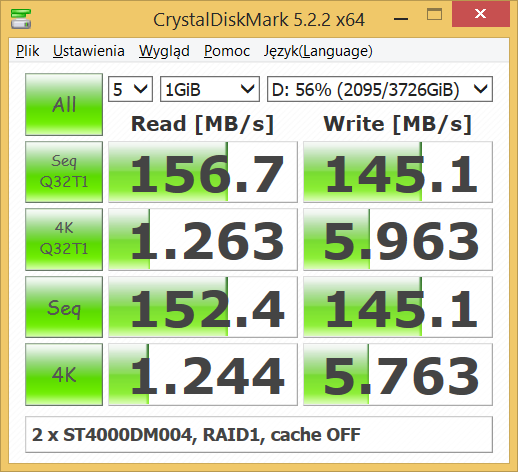

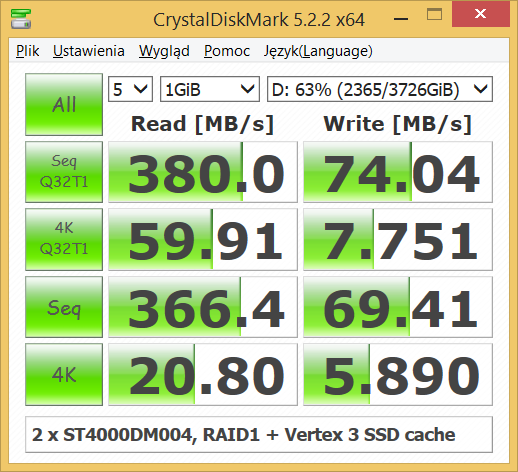

I had a fully functional RAID1 array, built from 2 x ST4000DM004 with a Vertex 3 60GB SSD as SSD cache, on a Z170 chipset, with 3,815,448 MB capacity.

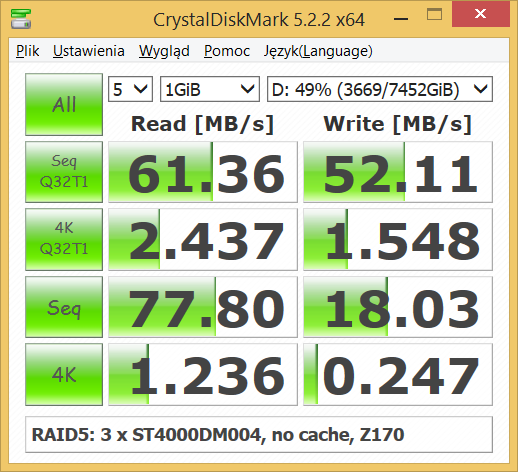

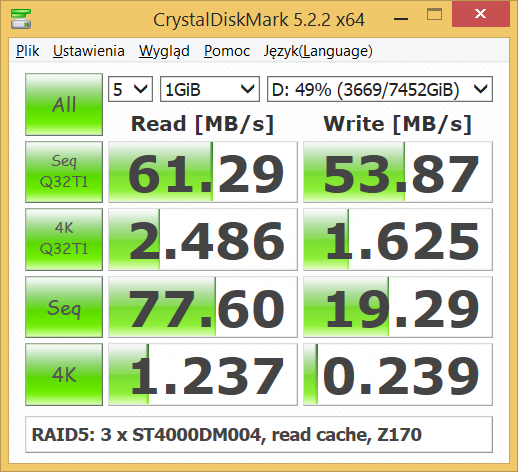

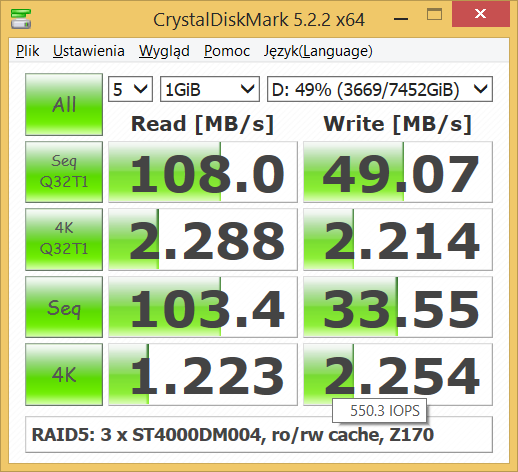

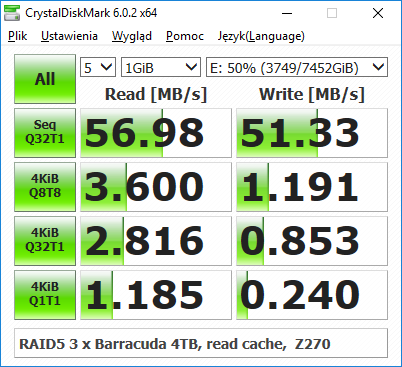

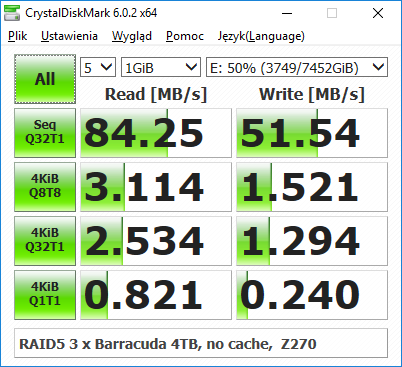

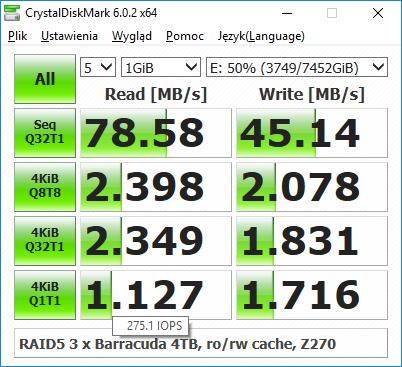

I've migrated to RAID5 to expand the array. It is now 3 x ST4000DM004 without SSD cache, with 7,630,891 MB capacity. And now a problem has occurred.
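
As a rough sanity check on the capacities quoted here, the usable size of an array can be estimated from the RAID level and the member size. This is a minimal sketch, assuming equal-sized members and ignoring controller metadata; the function name `usable_capacity_mb` is hypothetical and not part of any Intel tool.

```python
def usable_capacity_mb(level: str, disks: int, disk_mb: int) -> int:
    """Estimate usable capacity in MB for equal-sized members (metadata ignored)."""
    if level == "raid0":
        return disks * disk_mb            # striping only, no redundancy
    if level == "raid1":
        return disk_mb                    # every member holds a full copy
    if level == "raid5":
        if disks < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        return (disks - 1) * disk_mb      # one member's worth goes to parity
    if level == "raid10":
        if disks < 4 or disks % 2:
            raise ValueError("RAID10 needs an even number of disks, at least 4")
        return (disks // 2) * disk_mb     # striped mirrors
    raise ValueError(f"unsupported level: {level}")


raid1_mb = usable_capacity_mb("raid1", 2, 3_815_448)
raid5_mb = usable_capacity_mb("raid5", 3, 3_815_448)
print(raid1_mb, raid5_mb)
```

The RAID5 estimate lands within a few MB of the reported 7,630,891 MB; the small difference is presumably metadata or rounding.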

Write performance is awful: 5-10MB/s during sequential transfers. Read performance is sometimes good, sometimes bad. I generally keep large files on the volume.

With the SSD cache ON, I had several data loss incidents, so I've disabled it.

With newer versions of the BIOS and Intel RST, I had several data loss incidents, including one that required a FULL RESTORE FROM BACKUP of my system NVMe drive (which is not part of the RAID array, by the way). So I've rolled back the BIOS and fully restored the OS.

Currently, only the Intel RST read-only cache is enabled. Buffer flush is not enabled. I'm running the following versions:

Intel RST software 15.8.1.1007 (while latest available is: 16.8.0.1000, or 15.9.x for Win8)

MSI Z170 Gaming M5, BIOS ver. 7977v1G (while latest available is: 7977v1I)

3 x ST4000DM004, all in perfectly fine condition (tested separately under Linux, no errors, no performance drops), FW ver: 0001

Windows 8.1 64-bit

The problem arises when a newer version of RST and/or the BIOS is installed. Data loss occurs even on the single system disk (this is really worrying), although it's a perfectly fine NVMe device. The system hangs randomly.

I believe this has something to do with timeouts on the devices, because they start to appear in the system log, e.g. device has been reset, NTFS flush error or something similar. High response times occur when accessing the volume.

To figure out whether this is an OS or a motherboard problem, I tested the same configuration by installing a new, clean Win10 64-bit on a Z270 motherboard and moving the RAID 5 members over. The degraded write performance is still there. No errors are logged (NTFS flush, device reset), but the high response times remain.

There are no such problems when the disks work as standalone or RAID1 devices, on either Z170 or Z270.

I remember that earlier generations of Intel chipsets had no problems with RAID5, but now they do. Why?

After a long struggle with the issue, it turned out to be SMR drives.

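The ST4000DM004 drives are SMR, and the manufacturer (Seagate) never mentioned that they are. SMR (shingled magnetic recording) drives can slow down drastically under sustained writes, which fits the symptoms described above.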

Hello Wanner,

an initialization task is ongoing, since I started from scratch and rebuilt the RAID5 array on the same disks.

I think my report will not be accurate at this moment.

This is to check whether performance improves afterwards. The RST driver was updated BEFORE the volume was deleted and recreated. I'm on 15.9.0.1015.

The stripe size is the same: 128kB. The full capacity is assigned to a single RAID5 volume. It took around 24hrs to reach 13% progress.
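
The progress figure above can be turned into a rough completion estimate. This is only a sketch that assumes initialization advances at a constant rate, which may not hold in practice; `estimate_total_hours` is a hypothetical helper name.

```python
def estimate_total_hours(elapsed_hours: float, progress_fraction: float) -> float:
    """Extrapolate total duration from elapsed time, assuming linear progress."""
    if not 0 < progress_fraction <= 1:
        raise ValueError("progress_fraction must be in (0, 1]")
    return elapsed_hours / progress_fraction


total_hours = estimate_total_hours(24, 0.13)   # 13% after roughly 24 hours
total_days = total_hours / 24
print(f"estimated total: {total_hours:.0f} h (~{total_days:.1f} days)")
```

Under that assumption, 13% in 24 hours extrapolates to well over a week for the full volume.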

There are 2 additional disks, which I'm using as temporary media until the whole process is completed.

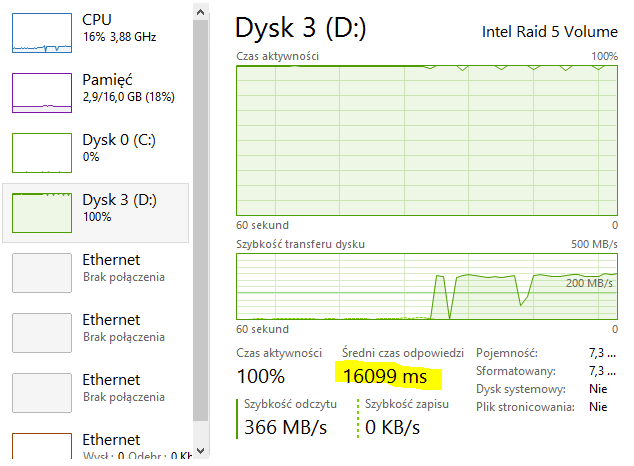

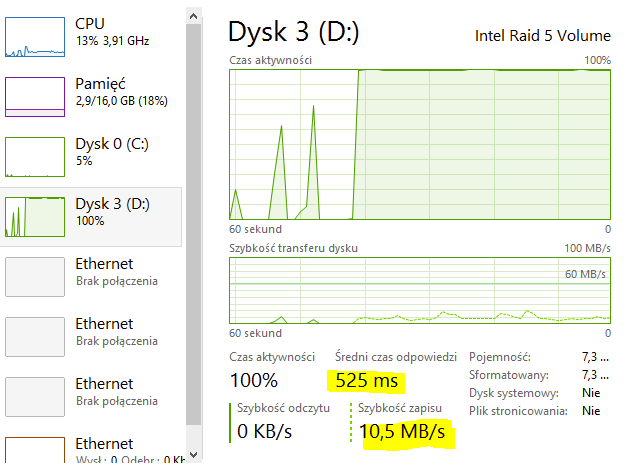

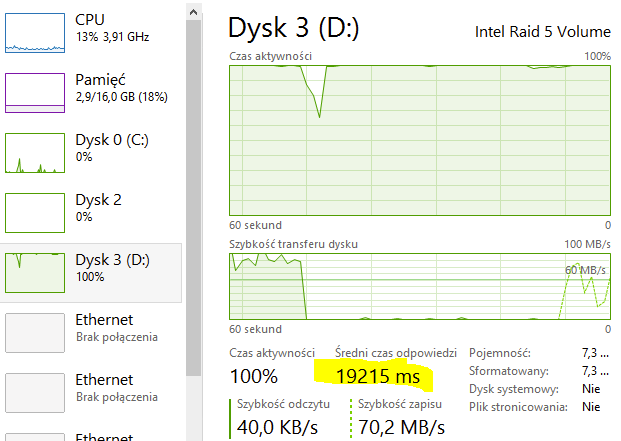

I noticed strange behaviour on both controllers, Z170 and Z270: when the volume is accessed, its latency shown in Task Manager -> Performance increases. I measured 500-3000ms while reading and up to (!!!) 15000-19000ms while writing.

I think this is the root cause of the data loss and the NTFS errors. It always happens with RAID5 (even with SSD cache!) and never with RAID0 or RAID1.

ROM versions I've tested:

Z170 has 15.2.0.2754

Z270 has 15.5.0.2875

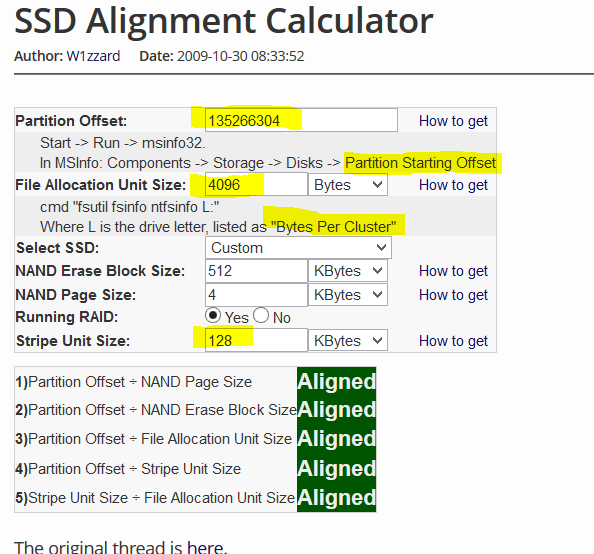

In the meantime, I've checked the alignment of the RAID volume with the fsutil and msinfo32 tools; a quick calculation shows there should be no problem there.
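
The kind of quick calculation meant here can be sketched as a divisibility check: the partition starting offset (as reported by msinfo32) should be a multiple of the stripe size. The offset below is a hypothetical example value (1 MiB, a common default), not a figure from this system, and `is_aligned` is an illustrative helper name.

```python
STRIPE_SIZE = 128 * 1024          # 128 kB stripe, as configured on this volume
DATA_DISKS = 2                    # 3-disk RAID5: two data strips per stripe


def is_aligned(partition_offset_bytes: int, unit_bytes: int) -> bool:
    """True when the partition starts on a boundary of the given unit."""
    return partition_offset_bytes % unit_bytes == 0


# Hypothetical partition starting offset; replace with the value from msinfo32.
partition_offset = 1_048_576

strip_ok = is_aligned(partition_offset, STRIPE_SIZE)
full_stripe_ok = is_aligned(partition_offset, STRIPE_SIZE * DATA_DISKS)
print(strip_ok, full_stripe_ok)
```

Checking against the full stripe width (stripe size times the number of data disks) is the stricter of the two tests.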

My initialization task is at 33%, which seems to be around 4 TIMES slower than normal (typically 48hrs = 2 days to synchronize 4TB disks; mine is going to take 7.5-8 DAYS in total). And this is for an IDLE volume (there is no data on it, only an empty NTFS partition; I'm waiting until it finishes).

The only strange thing about this issue is that only the RAID5 level seems to be affected. I haven't tested RAID10, but since it does no parity calculations, I assume it will also work normally.

My CPU is more than enough to handle such tasks...

CALC source:

https://www.techpowerup.com/articles/other/157

Is there any way to disable NCQ only for RAID members via registry keys, such as those under HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\iaStorA?

Hi again,

I'm sorry to say this, but I've stopped the volume initialization.

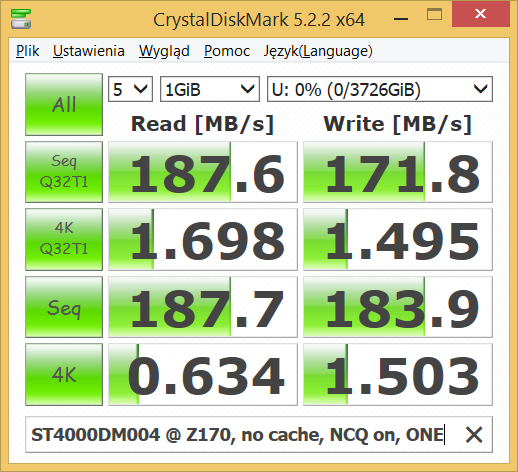

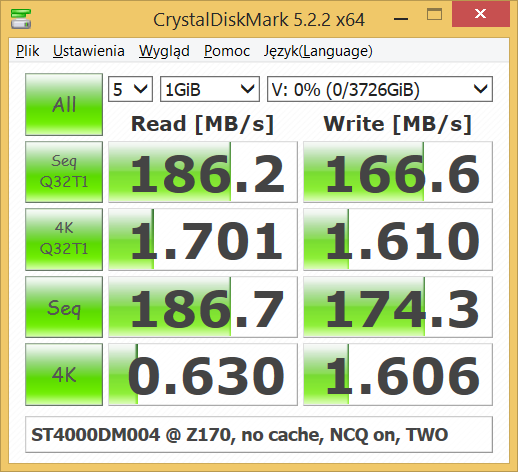

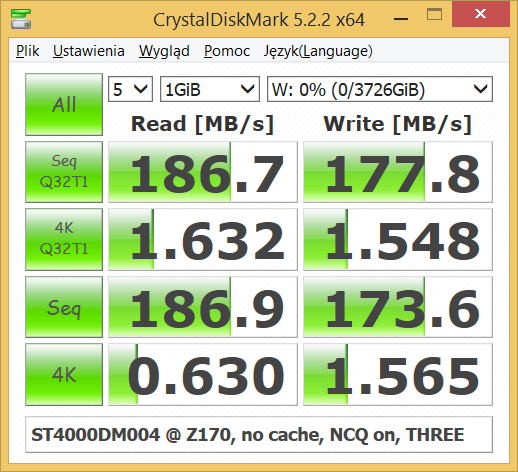

With 7.5 to 8 days for a 7,630,891 MB volume, the parity calculation speed works out to something like 11 MB/s to 11.78 MB/s, which is way too slow when EACH of these disks alone can perform the following:

And imagine that a RAID1 sync takes only a few hours, not DAYS.

I don't know why RAID5 on Z170 and Z270 is useless, but IT IS.
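
The throughput range quoted in this post follows directly from the volume size and the projected duration. A small sketch of that arithmetic, using only the numbers from the post (`average_rate_mb_s` is a hypothetical helper name):

```python
VOLUME_MB = 7_630_891             # RAID5 volume size reported by RST


def average_rate_mb_s(volume_mb: float, days: float) -> float:
    """Average initialization rate in MB/s over the given number of days."""
    if days <= 0:
        raise ValueError("days must be positive")
    return volume_mb / (days * 24 * 3600)


fast = average_rate_mb_s(VOLUME_MB, 7.5)
slow = average_rate_mb_s(VOLUME_MB, 8.0)
print(f"{slow:.2f} to {fast:.2f} MB/s")
```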

I've even checked all the BIOS settings to see whether any could affect this. I found only the Hot-plug feature, which was Enabled on one of the ports, so I Disabled it for all ports; no change at all.

I've ended up configuring RAID10 with one additional drive; a full sync takes around 8h (hours, not days!).

Of course, performance is outstanding compared to RAID5.

What I'm trying to tell you is that THIS IS NOT NORMAL. I know many RAID5/RAID6 solutions, both SW and HW, and I use them widely; it should not be this slow. Any modern CPU can easily do parity calculations like this.
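
For context on why the CPU is rarely the bottleneck: RAID5 parity is a byte-wise XOR across the data blocks of a stripe, and any single lost block can be rebuilt by XOR-ing the survivors. The following is an illustrative sketch of that principle only, not how Intel RST or mdadm implement it; the block contents are made up.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    if not blocks:
        raise ValueError("at least one block is required")
    if any(len(b) != len(blocks[0]) for b in blocks):
        raise ValueError("all blocks must have the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Two data blocks of a 3-disk RAID5 stripe (made-up contents).
d0 = b"\x01\x02\x03\x04"
d1 = b"\x10\x20\x30\x40"

parity = xor_blocks(d0, d1)             # what the third disk would store
recovered_d1 = xor_blocks(d0, parity)   # rebuild d1 as if its disk had failed
print(parity.hex(), recovered_d1 == d1)
```

The cost of small writes on RAID5 comes less from this XOR and more from the extra reads and writes needed to keep parity consistent.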

For a 3-drive RAID5 array, typical sync time should be between 24h and 48h. And write performance should be better.

Clearly there is a problem with PARITY CALCULATIONS there.

EDIT: in addition, I've done another RAID5 test on Z270, but under Linux mdadm and with the only spare drives available, which were 2TB Hitachi 7200 RPM disks. The rebuild was normal (around 8-10hrs), and sequential read/write speeds were also normal (250-300MB/s read, up to 100MB/s sequential write, and NO slowdowns), so it clearly points to an Intel RST issue.

Hi,

I have similar problems with my newly built PC and RAID 5.

Write speeds are around 35-40MB/s with Windows Cache on, and with Write-Back Cache enabled in Intel RST they actually drop to around 25-35MB/s. The array has been initialized. I got 12-15MB/s before initialization, so something happened there. The array holds around 160-180MB/s for the first ~10-12 sec before the cache or something else slows it down.

Changing between different cache modes has no effect on write speed.

I followed these steps when setting up the array: http://www.tomshardware.co.uk/forum/id-2033069/intel-raid-poor-write-performance-fixed-mine.html

Before using the on-board Intel controller for RAID, I tried setting the array up via Win 10. At that time I got better write performance, around 50-60MB/s, which felt very slow back then. I really hoped to get better performance after the array had been initialized.

A detailed system report is attached.

EDIT: What's also weird is that read speed reaches around 650-700MB/s, so I guess the disks themselves are not at fault.

Hi,

I have reset the disks and am performing initialization at the moment. It is 6% done, so it may be ready for benchmarking on Sunday.
