Hi,

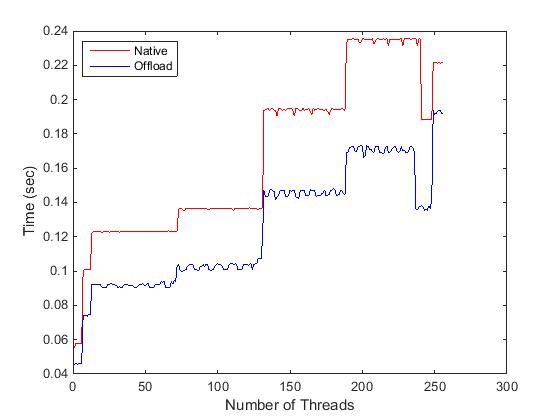

The attached plot shows execution time on an Intel Xeon Phi as the number of threads varies. The same program was run in native and offload modes.

The Phi device has 60 cores.

1) Why don't the steps in the timing occur at multiples of the number of cores (i.e., multiples of 60)?

2) Why does the time drop substantially around 248 threads (i.e., beyond 4x60) and then increase again?

Thank you.

It is difficult to tell what is going on without seeing your code and knowing what affinity pinning (if any) you are using.

The plateaus across most of your threads-per-core intervals are indicative of a bottleneck. Without seeing the code, it is difficult to provide meaningful advice. The graph suggests that a serialization limit is being reached. Does your code have multiple critical sections? (Note that some critical sections exist within library functions such as random number generators, memory allocation, etc.)

Jim Dempsey

Hi,

Thank you for your reply. In this implementation each thread performs an additional new task, so the workload scales with the number of threads. Therefore, plateaus are good to have: they indicate that even as the number of threads (i.e., the workload) increases, the time does not increase. Sorry, as you said, not much can be said without the code.

Note: The plateaus start when the number of threads is 7, 13, 73, 132, and 189.

1) However, since the Phi has 60 cores and each core can execute 4 threads, why is this not reflected by the plateaus? The plateau increments do not start at multiples of the number of cores (i.e., multiples of 60). I understand that in offload mode only 59 cores participate in the computation, but the plot does not reflect that either.

2) As the number of threads increases, how are the threads scheduled on the cores? Do the threads first fill one core and then move to the next, or does a core get its next thread only after all cores have been allocated an equal number of threads? (In the plot, the first plateau starts at 7 threads.)

3) Why does the time decrease substantially when the number of threads just exceeds the number of hardware threads? (Note the sudden drop around 240 threads.)

4) Do the 4 threads in a Phi core run simultaneously, or does the core switch between them?

5) The Phi has only 4*60 (i.e., 240) hardware threads. When I run 256 threads, how are the additional threads handled?

6) Why can't the number of threads exceed 256? If it does, the additional threads do nothing!

Thank you

++++++++++++++++++++++++++++++ Code Structure : Offload +++++++++++++++++++++++++++

Note: each thread solves a unique problem. The workload scales with the number of threads.

    for(i=1; i<=nT; i++){
        gettimeofday(&tval_before, NULL);
        #pragma offload target(mic) \
            in(reHoaIn, imHoaIn : length(dimDM*nObs))
        {
            omp_set_num_threads(i);
            Kernel_solver( reXn, imXn, reHoaIn, imHoaIn);
        }
        memcpy( pwdReMatrix, reXn, i*dimDN*sizeof(float) );
        memcpy( pwdImMatrix, imXn, i*dimDN*sizeof(float) );
        gettimeofday(&tval_after, NULL);
    }

1) The Knights Corner core is an in-order design. The hardware threads within a core each have their own register context but share some of the core's internal resources with the other threads of the same core. Due to the in-order design and internal latencies, simple instructions such as a register-to-register move take two clock cycles. When you run two threads per core, execution is interleaved and the second thread essentially runs without encumbering the first (at least for GPR-type instructions). Vector instructions have different characteristics; however, to a great extent, two threads running within a core tend not to delay each other the way you may have experienced on host processors with HT. (Knights Landing will be different.) Running a third thread within the core is generally advisable because most targeted applications have memory access requirements, and the memory latency time can be (hardware) scheduled to a thread that is not waiting on memory. The usefulness of the fourth thread of the core (on KNC) appears to depend greatly on the nature of the algorithm and on the programmer's ability to finesse the code so that the threads within the core have cache access patterns that are "friendly" to the other threads of the core.

2) Thread placement per core is up to you, through the use of various environment variables. Check the following sections of the C++ User and Reference Guide:

Setting Environment Variables on the CPU to Modify the Coprocessor's Execution Environment

Supported Environment Variables

In particular: KMP_AFFINITY, KMP_PLACE_THREADS, OMP_PLACES, OMP_PROC_BIND

Typically you use only one of the above to get what you want.

3) The sudden drop occurs where you exceed the number of hardware threads (240 for 60-core models, 244 for 61-core models, and correspondingly for models with other core counts). When you oversubscribe, software threads in excess of the available hardware threads will suspend for a while, and the operating system will periodically suspend a running thread and resume a waiting thread.

4) Thread execution within a core is round robin amongst the threads that are not waiting (on memory, an inserted delay, or a resource). This is for KNC; KNL will be different.

5) See 3)

6) When you exceed the number of hardware threads, the operating system (subject to your affinity settings) will suspend some threads and resume others. This is somewhat like sharing a bicycle with your brother: your mother may have had the role of the operating system in determining who gets to ride and when. Typically, in a compute-bound application you would not oversubscribe the number of threads. However, most applications perform I/O, and I/O generally induces time spent waiting for I/O completion. In these situations you may find it beneficial to oversubscribe by the number of expected pending I/O waits.
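To make the placement question in 2) and the oversubscription arithmetic in 3) and 6) concrete, here is a minimal sketch in C. It is a simplified model only: the function names are hypothetical, and the actual mapping performed by the OpenMP runtime under settings such as KMP_AFFINITY depends on the detected topology and the options chosen. "Compact-style" here means filling all hardware threads of one core before moving to the next; "scatter-style" means handing threads out round robin across cores.

```c
#include <assert.h>

/* Hypothetical helpers: a simplified model, NOT the OpenMP runtime's algorithm. */

/* Number of hardware threads for a given core count (4 per core on KNC). */
int hw_threads(int cores, int threads_per_core) {
    assert(cores > 0 && threads_per_core > 0);
    return cores * threads_per_core;
}

/* Compact-style placement: fill one core's hardware threads, then the next core.
 * Thread ids beyond the hardware thread count wrap around (oversubscription). */
int core_compact(int tid, int cores, int threads_per_core) {
    assert(tid >= 0 && cores > 0 && threads_per_core > 0);
    return (tid / threads_per_core) % cores;
}

/* Scatter-style placement: one thread per core first, then a second round, etc. */
int core_scatter(int tid, int cores) {
    assert(tid >= 0 && cores > 0);
    return tid % cores;
}

/* Software threads in excess of hardware threads; the OS must time-slice these. */
int oversubscribed(int sw_threads, int cores, int threads_per_core) {
    int hw = hw_threads(cores, threads_per_core);
    return sw_threads > hw ? sw_threads - hw : 0;
}
```

For a 60-core card, hw_threads(60, 4) gives 240, and requesting 256 threads leaves oversubscribed(256, 60, 4) = 16 software threads without a hardware thread of their own. Under this model, thread 6 lands on core 1 with compact-style placement but on core 6 with scatter-style placement, which is why the position of timing steps depends on the affinity setting.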

Jim Dempsey.

Thank you Jim. I appreciate your detailed explanation. I think I got the required explanation.

1) Where can I read more about MIC in-order execution, core operations (data and instruction flow, explained in a simple way), and the resources shared within a core?

2) If vector operations are not used, is there a possibility to perform 4 floating-point operations per cycle per core? How many ALU/FPUs are there in a core which can be accessed simultaneously by the 4 threads?

3) Further, what is the easy/recommended way of profiling a MIC OpenMP C program?

Thank you.

Re: more about in-order execution, core operations...

Have you looked at the Software Developer's Guide?

Regards

--

Taylor

Mahendra S. wrote:

2) If vector operations are not used, is there a possibility to perform 4 floating-point operations per cycle per core? How many ALU/FPUs are there in a core which can be accessed simultaneously by the 4 threads?

3) Further, what is the easy/recommended way of profiling a MIC OpenMP C program?

Thank you.

I haven't seen evidence that you could get improved threaded scaling by using only x87 floating point instructions, even if the compiler didn't impede that idea by using the VPU even when not vectorizing. VPU can take inputs from different threads on consecutive cycles, so the scaling from 1 to 2 threads per core should normally be more than it is beyond 2 threads per core.

I haven't seen much discussion of tools other than VTune for performance profiling, although you might look for published references on TAU et al.
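As a lightweight complement to a profiler, the gettimeofday() samples already taken in the offload loop earlier in this thread can be turned into elapsed times per thread count. The following is only a minimal sketch: elapsed_seconds is a hypothetical helper name, not something from the original program, and it measures wall-clock time for the whole region (including offload data transfer), not per-thread activity.

```c
#include <stddef.h>
#include <sys/time.h>

/* Hypothetical helper: wall-clock seconds between two gettimeofday() samples. */
double elapsed_seconds(const struct timeval *before, const struct timeval *after) {
    /* Combine the whole-second and microsecond parts; the microsecond
     * difference may be negative, which the sum handles correctly. */
    return (double)(after->tv_sec - before->tv_sec)
         + (double)(after->tv_usec - before->tv_usec) * 1e-6;
}
```

Called as elapsed_seconds(&tval_before, &tval_after) at the end of each loop iteration, this would give one timing point per thread count, which is the kind of data the plot at the top of the thread shows.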
