Hi everyone,

I thought I'd pass on a RealSense Unity tip about the 'Continuous' setting of the 'TrackingAction' hand-tracking script. I had been using it incorrectly for a long time, and I guessed that others might have run into the same problems.

My original assumption had been that enabling 'Continuous' meant that the camera would maintain joint tracking continuously instead of waiting for the hand to be detected before moving an object. However, an online Intel documentation page I came across informed me that this was not the case at all.

Basically, placing a tick in the 'Continuous' box tells the camera to ignore the hand index number, lock onto one particular hand and take its movement values from that hand. If the other hand appears in the camera view, the camera ignores it and keeps tracking the first hand.

We had 'Continuous' ticked in every one of the 'TrackingAction' scripts on the left and right arm, hand and finger joints of our RealSense-controlled avatar. As a result, the arms moved independently about 75% of the time, but for the other 25% independent control of the left arm was lost and that arm precisely mirrored whatever the right arm was doing. This made the avatar look bad.
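The mirroring described above is consistent with a simple toy model of hand selection. The sketch below is only my reading of the behavior, not the SDK's actual code: `select_hand`, its parameters and the hand labels are all hypothetical names made up for illustration.

```python
def select_hand(visible_hands, hand_index, continuous, locked=None):
    """Return the hand a tracked object follows this frame, or None.

    Toy model only (an assumption, not RealSense SDK logic):
    - visible_hands: hand labels in the order the camera reports them
    - hand_index: the index number set on the tracked object
    - continuous: whether the 'Continuous' box is ticked
    - locked: the hand this object was following on the previous frame
    """
    if not visible_hands:
        return None
    if continuous:
        # Assumed behavior: ignore the index, keep following the locked
        # hand while it is visible, otherwise lock onto the first hand.
        return locked if locked in visible_hands else visible_hands[0]
    # Index-based selection: each object follows the hand at its own index.
    if hand_index < len(visible_hands):
        return visible_hands[hand_index]
    return None


visible = ["right", "left"]

# 'Continuous' ticked on both arms: both can end up following the same hand.
right_arm = select_hand(visible, hand_index=0, continuous=True)
left_arm = select_hand(visible, hand_index=1, continuous=True)
print(right_arm, left_arm)

# 'Continuous' unticked: each arm follows the hand at its own index.
right_arm_indexed = select_hand(visible, hand_index=0, continuous=False)
left_arm_indexed = select_hand(visible, hand_index=1, continuous=False)
print(right_arm_indexed, left_arm_indexed)
```

In this model, two objects that both ignore the index can lock onto the same hand, which would look exactly like one arm mirroring the other.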

We had previously had a hunch that the 'Continuous' setting might be responsible for the mirroring but we had only done a couple of test-untickings of the setting and so could not see a real difference in the behavior of the arms. This time though, we unticked 'Continuous' in every single 'TrackingAction' script in the arms and hands and did a series of test runs.

The arms now moved completely independently without any occurrence of the mirroring error at all. What's more, they also moved more fluidly than before, presumably because the camera was no longer getting confused about which hand should be controlling an object.

Edit: a consequence of switching off all the 'Continuous' settings was that it was initially a bit less intuitive to use both arms simultaneously. RealSense now expected the real-life hand that was going to be used to be moved in front of the other real-life hand (which is how it was designed to work). A positive side-effect of the glitching when 'Continuous' was enabled had been that the camera recognized both hands at the same time.

We found a simple technique that restored smooth and simultaneous two-arm control though. You just have to train the player early in your app to subconsciously sway their hands back and forth a little as they move them up and down, like they are playing the drums in slow motion in empty air. The swaying action ensures that the hands are constantly gliding to the foreground of the camera's view and back again.

The likely reason it works is that the camera is still switching control between the left and right hands, but the switching happens so frequently that the virtual objects being controlled do not have time to stall. It therefore looks as though the left-hand and right-hand controlled objects are moving in perfect sync, without any lag between camera input and the action taking place on screen.

I made a YouTube video that visually demonstrates the principle, as it is far easier to understand when explained that way!

https://www.youtube.com/watch?v=rnTYGqlLlcM&feature=youtu.be

I followed up my work on the 'Continuous' setting with some lengthy research into the mechanics of the Real World Box to understand better how defining limitations for the camera view could enhance the effectiveness of object control.

In the case of our own project at least, we found that there was no downside to removing the Real World Box (RWB) values from the project completely, despite having spent a long time meticulously configuring each object's values in the belief that they were vital. Glitches in object rotation, such as occasional breakages in the bending of avatar fingers with a RWB value of '100', disappeared after all the RWB values were set to zero, and our avatar's limbs moved even more fluidly and naturally.

Our initial logic for removing the RWB values was this: if the camera uses its maximum view range when the values are set to '0', then changing them to define a limited area in front of the camera where the hands or face will be recognized only makes it more difficult to move objects, which seemed kind of crazy.

That's not to say that RWB values would not be useful for more specific applications in a project. For example, you could have a background, mid-ground and foreground, and set up the RWB values of the objects in each sector so that the 'TrackingAction' script only responds when the hand's distance from the camera is in the same zone as that object (with the hand being in the background when closest to the camera and in the foreground when furthest from it). Such a project would resemble the 'Ninecubes' example packaged with the RealSense SDK, where moving the hand causes a different cube to become active.
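The zone idea above can be sketched as a small lookup. This is a rough illustration in Python rather than Unity code, and the zone boundaries, the `ZONES` table and both function names are made-up assumptions, not values or calls from the RealSense SDK. The zone names follow the convention in the paragraph above (background is nearest the camera).

```python
# Hypothetical depth zones in centimetres from the camera: (name, near, far).
ZONES = [
    ("background", 0.0, 30.0),
    ("mid-ground", 30.0, 60.0),
    ("foreground", 60.0, 90.0),
]


def active_zone(hand_depth_cm):
    """Return the name of the zone containing the hand, or None if outside all zones."""
    for name, near, far in ZONES:
        if near <= hand_depth_cm < far:
            return name
    return None


def responding_objects(object_zones, hand_depth_cm):
    """Return the objects whose assigned zone matches the hand's current zone."""
    zone = active_zone(hand_depth_cm)
    return [obj for obj, obj_zone in object_zones.items() if obj_zone == zone]


# Illustrative objects, each assigned to one zone.
cubes = {"cube_a": "background", "cube_b": "mid-ground", "cube_c": "foreground"}
print(responding_objects(cubes, 45.0))
```

Only the object whose zone contains the hand responds, so moving the hand toward or away from the camera hands control from one object to the next.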

Virtual World Box

However, the Virtual World Box values (which define limits for the movements of on-screen objects) are absolutely vital for limiting the travel distance of objects. For example, in our avatar, we use these values to define how wide the mouth can open so that it does not open so far that it detaches from the head. So we left those exactly as they were.
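As a rough illustration of what limiting travel distance means, here is a minimal clamping sketch in Python. It assumes the box is centred on the object's rest position and that each value is the total travel allowed on that axis. Both points are my assumptions, and `VirtualWorldBox` here is a hypothetical stand-in class, not the SDK's own type.

```python
from dataclasses import dataclass


@dataclass
class VirtualWorldBox:
    """Hypothetical per-axis travel limits for a tracked object."""
    x: float
    y: float
    z: float

    def clamp(self, offset):
        """Clamp an (x, y, z) offset from the rest position to +/- half the box size."""
        limits = (self.x / 2, self.y / 2, self.z / 2)
        return tuple(max(-lim, min(lim, value)) for value, lim in zip(offset, limits))


# Illustrative numbers only: a jaw allowed to travel 4 units vertically.
jaw_box = VirtualWorldBox(x=2.0, y=4.0, z=2.0)
clamped = jaw_box.clamp((1.5, -7.5, 0.3))
print(clamped)  # (1.0, -2.0, 0.3)
```

However far the tracked point moves, the jaw offset never leaves the box, which is what stops the mouth from detaching from the head.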

I found a cool new trick that seems to stop 'TrackingAction'-powered Unity hand-tracked objects with the '1' hand index number from stalling, without having to do the 'air drumming' technique described earlier in this thread.

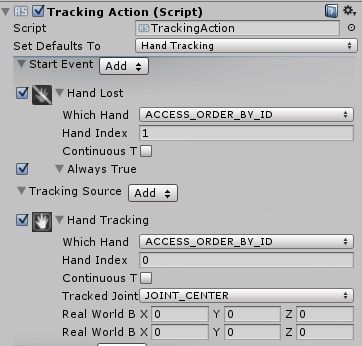

If you add a 'Hand Lost' rule to the "Start" rules of your object that uses the '1' index, alongside your main object activation rule (whether that be 'Hand Detected' or, as I use in mine, 'Always'), then you can create a loop where if the camera loses tracking of that object and falls asleep, it immediately wakes up again and resumes tracking so long as the hand controlling that object is within the camera view.
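The loop described above can be pictured with a tiny event-driven toy model. This is not the SDK's rule engine: the event names, the `simulate` function and the assumption that a 'Hand Lost' event is what stops tracking are all mine, for illustration only.

```python
def simulate(events, start_rules, stop_rules=("hand_lost",)):
    """Return, per frame, whether the object is being tracked after that frame's event.

    Toy model (assumption): a stop rule puts the object to sleep, and any
    matching start rule in the same frame wakes it up again immediately.
    """
    active = False
    history = []
    for event in events:
        if active and event in stop_rules:
            active = False  # tracking falls asleep
        if not active and event in start_rules:
            active = True   # a start rule wakes it up again
        history.append(active)
    return history


frames = ["hand_detected", "none", "hand_lost", "none"]

# Only 'Hand Detected' as a start rule: tracking stalls once the hand is lost.
without_trick = simulate(frames, start_rules={"hand_detected"})
print(without_trick)  # [True, True, False, False]

# Adding 'Hand Lost' as a start rule: the loss event itself restarts tracking.
with_trick = simulate(frames, start_rules={"hand_detected", "hand_lost"})
print(with_trick)  # [True, True, True, True]
```

In this model the same event that puts the object to sleep also restarts it, so tracking never stays stalled.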

Using this method, I was able to control the left arm of my avatar without swapping the hands over, with the left hand behind the right hand maintaining the same constant distance from the camera and the right hand always in front of it.

The trade-off for this useful trick is that the tracked objects seem to move a bit slower.
