Edit: additional illustrations now added.

Hi everyone,

Ever since I started using RealSense, I have wanted to use two or more of the same type of Action script (TrackingAction in my case) in one object. But the SDK ignores the second instance of the script and only runs the first one that you put into an object. This necessitated child-linking multiple objects together and putting one TrackingAction in each of the linked objects.

I had always figured that the problem could probably be overcome if I made a uniquely named copy of the TrackingAction script by pasting its code into a freshly created C# Unity script file. When I first attempted this last year, by manually changing the 'TrackingAction' name references within the code, the copied script ran without errors but displayed no configuration settings in the Inspector panel, which made the copy useless.

Today I decided to have another try, this time using a word-processor Find and Replace function to change all the instances of the word 'TrackingAction' in the script to 'TrackingAction2'. And this time, it worked!

Here is a step-by-step guide to how I did it, using the TrackingAction script as my example.

STEP 1

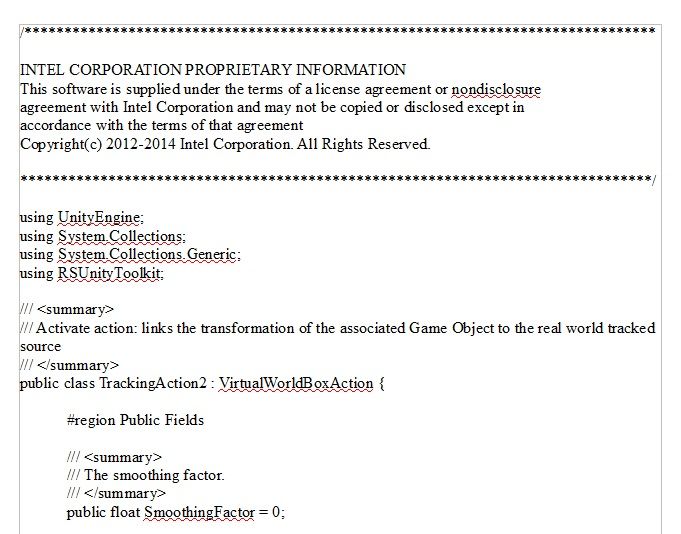

Open the TrackingAction script file in Unity's MonoDevelop script editor. Go to the Edit menu and choose Select All to highlight the entire script, then choose Copy to copy the code to the clipboard.

STEP 2

Open a text editor or word processor such as Notepad, WordPad, Microsoft Word or OpenOffice Writer and paste the copied script into a new document.

STEP 3

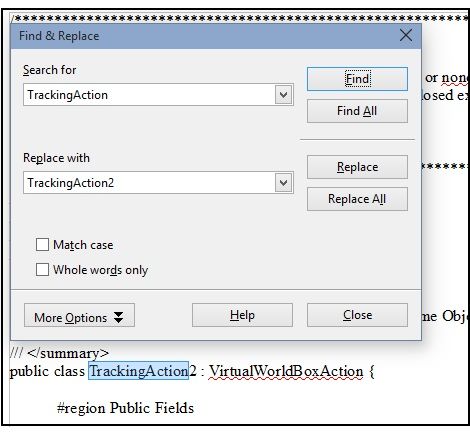

Do a Find and Replace operation on the document, replacing all instances of the word TrackingAction with TrackingAction2. Then do a Select All and copy the amended script to the clipboard.
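If you would rather script this step than use a word processor, the same Replace All can be done in a few lines of Python. This is a minimal sketch, not part of the RealSense SDK: the function name `make_numbered_copy` and the sample class text are hypothetical. Like a word processor's Replace All, it replaces every occurrence of the name, including any that appear inside longer identifiers.

```python
def make_numbered_copy(source: str, old_name: str = "TrackingAction", number: int = 2) -> str:
    """Return the script text with every occurrence of old_name replaced by
    old_name followed by number, mirroring a word processor's Replace All."""
    if number < 2:
        raise ValueError("number should be 2 or more; the original script is number 1")
    return source.replace(old_name, f"{old_name}{number}")


if __name__ == "__main__":
    # Hypothetical one-line stand-in for the real contents of TrackingAction.cs.
    original = "public class TrackingAction { }"
    print(make_numbered_copy(original))
```

To use it on the real script, read the contents of the TrackingAction file into a string, pass it through the function, and paste the result into the new script in Step 5.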

STEP 4

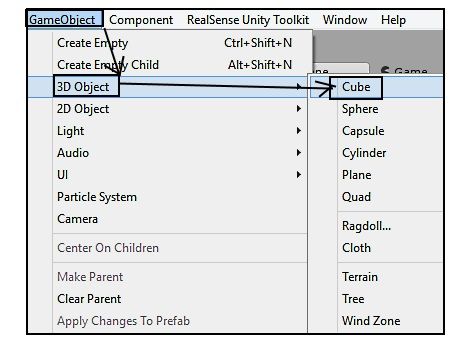

Return to Unity and create a basic Cube type object.

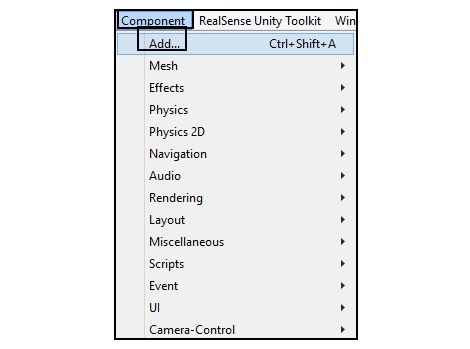

Then go to the 'Component' menu at the top of the Unity window and select the 'Add' option to bring up a pop-up window with a list of components that can be added to the object. Select the 'New Script' option.

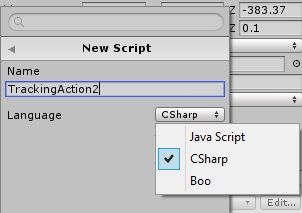

On the script creation interface, type in the name TrackingAction2 as your script name. Be sure to click on the drop-down menu beside the word 'Language' and pick the option labeled 'CSharp' to tell Unity that you are creating a C# type script (the type of code that the Action SDK scripts use). This is vital, because if you make a JavaScript type script file then the code will not work when it is placed in the file.

Press the Enter / Return key to create the script. If you click elsewhere on the screen instead, the pop-up will disappear and you will have to go back to the New Script option and enter the settings again.

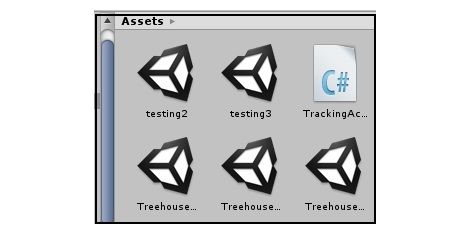

The 'TrackingAction2' C# script file will now be inside your cube and also listed as a file in the Assets folder's root directory in Unity's bottom 'Assets' panel.

STEP 5

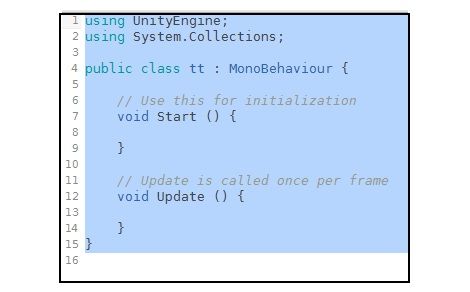

Open the newly made TrackingAction2 script file in the MonoDevelop script editor and delete the default block of C# code.

Then paste in the amended TrackingAction script code that you copied from the word-processor document, and save the file.

STEP 6

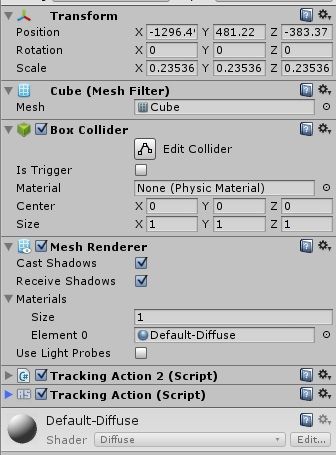

Drag and drop an ordinary 'TrackingAction' script onto the cube from the RSUnityToolkit > Action folder where the Action scripts are stored. You should now have two scripts in your cube: the usual TrackingAction and our specially created TrackingAction2.

STEP 7

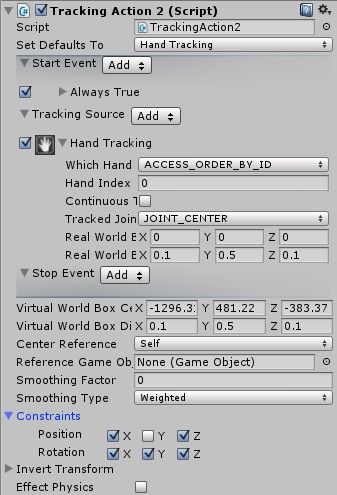

Give the 'TrackingAction2' script the following hand-tracking settings.

These settings use the palm of the hand to control the cube. The Real World Box settings are all changed to '0', and the X-Y-Z settings of the Virtual World box are changed to 0.1, 0.5 and 0.1 respectively. In the Constraints section meanwhile, all settings except the 'Y' Position axis are locked by ticking them.

This, in combination with the Virtual World Box setting of Y = 0.5, restricts the cube to only being able to travel a short distance up and down when the hand palm is moved up and down in front of the camera.
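The effect of these settings can be pictured with a toy Python model. It is an illustration only, not the toolkit's code: it assumes that a ticked (locked) axis simply ignores the tracked movement, and, purely as an assumption, that each Virtual World Box value is the total travel range on that axis, centred on the cube's starting position. The class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Constraints:
    # True means the axis is locked (ticked in the Inspector).
    lock_x: bool = True
    lock_y: bool = False
    lock_z: bool = True


def constrained_position(target, box, constraints):
    """Toy model: return the cube's offset from its starting point.

    target      -- (x, y, z) offset the tracked point would map to
    box         -- (x, y, z) assumed total travel range per axis
    constraints -- which axes are locked; locked axes stay at 0
    """
    locks = (constraints.lock_x, constraints.lock_y, constraints.lock_z)
    result = []
    for value, size, locked in zip(target, box, locks):
        if locked:
            result.append(0.0)
        else:
            half = size / 2.0
            result.append(max(-half, min(half, value)))  # clamp to the box
    return tuple(result)


if __name__ == "__main__":
    # Step 7 settings: Virtual World Box (0.1, 0.5, 0.1), only Y unlocked.
    print(constrained_position((0.3, 0.4, -0.2), (0.1, 0.5, 0.1), Constraints()))
```

In this model the palm can only ever produce a small vertical offset, which is the "short distance up and down" behaviour described above.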

STEP 8

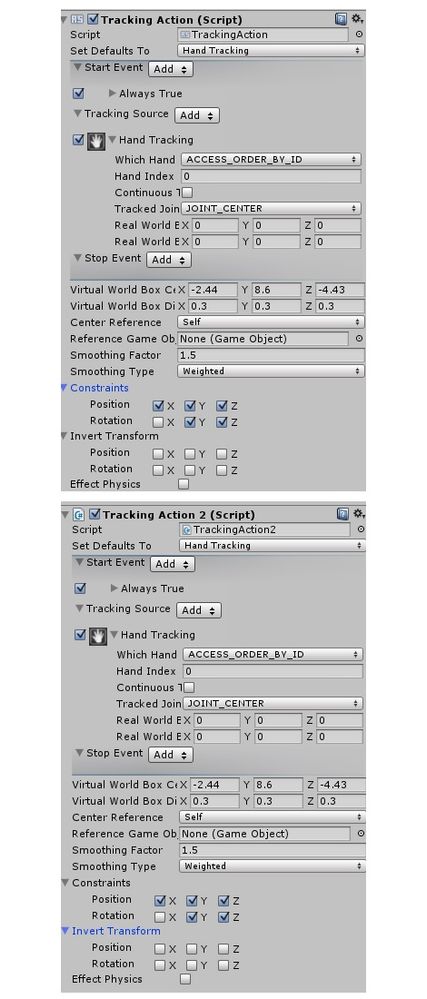

Give the TrackingAction script the following face-control settings, using the same Real World Box settings as the TrackingAction2 script above, but this time with Virtual World Box X-Y-Z settings of 0.1, 0.1 and 0.9 respectively.

Set the point to be tracked to the nose tip, as this is one of the two easiest face-tracking points (the other being the chin) to move an object with.

Set the non-constrained Position axis to 'Z' this time instead of the 'Y' we used in 'TrackingAction2'. We will explain further below why this is necessary. Suffice it to say that it is important proof that the 'TrackingAction2' script is being actively used by the program instead of being ignored, as usually happens when there are two Action scripts with the same name.

STEP 9

Run the program! You should now be able to move the cube in the following ways:

(a) Move your palm up and down in front of the camera to move the cube a short distance up and down, following the unconstrained Y direction in the 'Constraints' section of 'TrackingAction2'.

(b) Put your hands behind your back, so you can confirm to yourself that the copied TrackingAction2 script really works and you are not accidentally triggering movement with your hands.

Then shake your head side to side in front of the camera to make the cube move left and right as it follows the unconstrained Z direction in the 'TrackingAction' script.

As a final test, temporarily remove the 'TrackingAction2' script from the cube and run the program again. Try to move the cube up and down with your hand. This should now be impossible, because the 'TrackingAction2' script that provided the vertical 'Y' movement is absent.

You can, however, still move the cube left and right with your hand instead of shaking your head.

THE PROOF

This is why we gave the two scripts different directional constraints. It is still possible to move a face-tracking object with the hand. So if we had used the Y direction on both scripts, so that the hand and face both moved the cube up and down, the cube would still be able to move vertically with the hand after the hand-tracking script (TrackingAction2) was removed. People would then be justified in being skeptical and wondering whether the added 'TrackingAction2' script had made any difference at all.

By having 'TrackingAction2' move the cube up and down, and then showing that this movement becomes impossible when the script providing it is removed, we prove that Unity IS listening to the second script, giving us the ability to have multiple Action scripts in a single object providing different controls!
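The reasoning behind this test can be written down as a small Python check. It is a simplified model, assuming each script can move the cube only along its unlocked axes and that either script can be driven by the hand; the dictionaries and function names are hypothetical. Removing a script is only conclusive if some axis becomes immovable without it.

```python
def movable_axes(scripts):
    """Return the set of axes that at least one attached script can move."""
    axes = set()
    for script in scripts:
        axes |= script["unlocked"]
    return axes


def removal_is_conclusive(scripts, name, axis):
    """True if the axis can move with every script attached but becomes
    immovable once the named script is removed."""
    remaining = [s for s in scripts if s["name"] != name]
    return axis in movable_axes(scripts) and axis not in movable_axes(remaining)


# The setup used in this guide: face script on Z, hand script on Y.
PROOF_SETUP = [
    {"name": "TrackingAction", "unlocked": {"Z"}},
    {"name": "TrackingAction2", "unlocked": {"Y"}},
]
# The rejected alternative: both scripts on Y.
FLAWED_SETUP = [
    {"name": "TrackingAction", "unlocked": {"Y"}},
    {"name": "TrackingAction2", "unlocked": {"Y"}},
]

if __name__ == "__main__":
    print(removal_is_conclusive(PROOF_SETUP, "TrackingAction2", "Y"))
    print(removal_is_conclusive(FLAWED_SETUP, "TrackingAction2", "Y"))
```

With the Y-only / Z-only split the check passes; with both scripts on Y it cannot, which is exactly the skeptic's objection.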

Best of luck!

I began using two-TrackingAction arrangements in the arm and hand joints of my RealSense-controlled avatar to gather some data about how they behaved in comparison to the old approach of having a series of child-linked joints, each with one TrackingAction per joint in it. Here are my findings.

(a) If you have been using multiple linked joints to give an object more than one direction of movement (e.g. moving the avatar's hand left / right with one joint and up / down with the other), then the hand behaves exactly the same when a single hand joint with a left-right 'TrackingAction' and an up-down 'TrackingAction2' is used instead. So this is an area where using a two-TrackingAction object can simplify the design of a system.

(b) If you have been using multiple linked joints to amplify the motion generated by a facial part that normally does not produce much movement in an object (e.g. the left side of the lips), then putting two TrackingActions into a single joint, instead of having two linked pieces with one TrackingAction each, does not produce an amplification in motion. So the multi-TrackingAction system is not so useful for amplifying object movement when using only one joint.

However, we did find during testing that if you are using two linked TrackingAction-powered joints to produce an accelerated movement, then having two TrackingActions in each of these joints gives you the motion amplification, *and* amplifies the movement distance by x4 instead of x2.

Edit: just in case anyone at Intel reads this, a very simple way to implement multi-Action script support in a future SDK would be to package several pre-made copies of each Action script, named for example 'TrackingAction', 'TrackingAction2', 'TrackingAction3', etc. (i.e. have a folder for each Action script instead of having all the scripts in one folder as now). Developers could then quickly build very versatile applications without having to resort to the long process above involving a word processor and Find and Replace.
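Until something like that exists, the copying can at least be automated. The Python sketch below is a hypothetical helper, not an SDK tool: it writes numbered copies of a script into a folder, applying the same Replace All as the manual steps. It names each file after its renamed class because Unity expects a MonoBehaviour script's file name to match its class name, which is also why Step 4 names the new script TrackingAction2.

```python
import tempfile
from pathlib import Path


def write_numbered_copies(source_file: Path, out_dir: Path, count: int) -> list[Path]:
    """Write copies 2..count of an Action script, each with its name replaced.

    Every copy is saved as <NewName>.cs so that the file name matches the
    renamed class, as Unity requires for MonoBehaviour scripts.
    """
    base_name = source_file.stem  # e.g. "TrackingAction"
    text = source_file.read_text(encoding="utf-8")
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for number in range(2, count + 1):
        new_name = f"{base_name}{number}"
        target = out_dir / f"{new_name}.cs"
        # Same Replace All as the manual Find and Replace step.
        target.write_text(text.replace(base_name, new_name), encoding="utf-8")
        written.append(target)
    return written


if __name__ == "__main__":
    # Demonstration in a throwaway folder with a stand-in script.
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "TrackingAction.cs"
        source.write_text("public class TrackingAction { }", encoding="utf-8")
        for path in write_numbered_copies(source, Path(tmp) / "copies", 4):
            print(path.name, "->", path.read_text(encoding="utf-8"))
```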

Here's a video of our latest use of the 'multiple TrackingActions inside one object' technique, where we showcase a version of our avatar arm's shoulder side-swing that is much easier to use than the previous version (in the old version the swing-across only worked when the arm was lifted to a certain height).

https://www.youtube.com/watch?v=JRFpQGL-cYM&feature=em-upload_owner

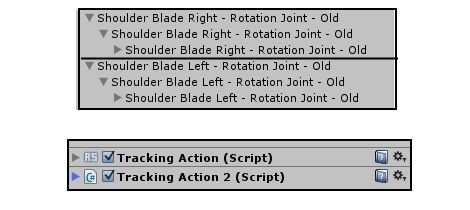

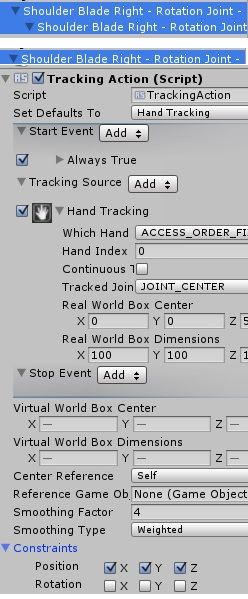

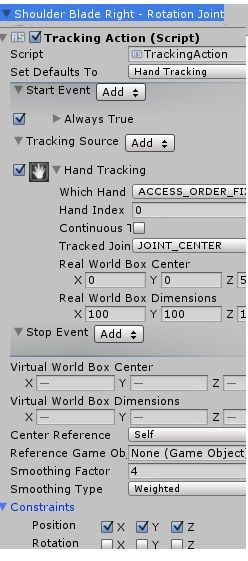

In this setup, we have three child-linked spherical joints in our left and right shoulders that move the shoulder-blade flesh object and everything beneath it down to the fingers at the base of the arm.

Each of these three joints has two individually numbered TrackingAction scripts in them - see the very top of this forum post for a guide to how to make multiple TrackingActions. We created alternate copies of the TrackingAction because if you put more than one copy of the original TrackingAction inside the same object, the camera only listens to the first one in the list and ignores the settings of the rest.

The pair of TrackingAction scripts in each of the first two of the three joints have identical settings. They are constrained so that only the 'X' rotation axis is unlocked, enabling the joint to rotate up and down on the spot to lift and drop the arm attached to it.

The pair of 'TrackingActions' in the third and final joint, meanwhile, are constrained so that only the 'Y' rotation axis is unlocked. This enables the shoulder to rotate forwards and backwards at one end, so that the arm can more easily arc around to the front of the body.

Whilst you could achieve something similar just by having both the 'X' and 'Y' rotation axes unlocked in a TrackingAction in a single joint, the up / down and left / right rotation that you get from that method is less controllable than if you divide the up / down and left / right functions between separate rotational joints. By doing it this way, you can also apply different settings to each movement direction.

I did a follow-up to the above article (in which I explained how to use multiple TrackingActions in the same object) by creating several different numbered SendMessageActions (SendMessageAction 1-4). The reason for doing this was to replace some of the complex multi-stage trigger actions I had built with a simpler, more efficient approach via the SendMessageAction SDK script.

(SendMessageAction activates a function in a non-SDK script inside the same object as the SendMessageAction script when a certain action, such as a facial expression or gesture, is recognized by the camera.)

As an example of the simplification: the trigger that made my full-body avatar crouch would send a message to a rotation script in the upper right leg to lift it when a closed hand was recognized. That script would then send an activation to a script in the lower leg telling it to also rise up, and that script in turn would send a third activation to the avatar's foot telling it to bend upwards at the joint.

The same effect could be achieved, I found, by having a SendMessageAction and a SendMessageAction2 in the upper leg and another SendMessageAction and SendMessageAction2 in the lower leg. The two SendMessageAction scripts were set to send an activation message to the rotation scripts that controlled the 'crouch down' animation when a 'hand closed' gesture was detected (i.e. the upper and lower legs would both bend into a 'crouch' pose when a closed hand was detected).

The two SendMessageAction2 scripts, meanwhile, were set to trigger the rotation scripts that reversed the animation and stood the avatar up straight again when the 'hand closed' gesture was Lost. So closing the hand would make the avatar crouch, and opening the hand would Lose detection of the gesture and trigger the standing-up action.
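The Detected / Lost pairing behaves like edge detection on a per-frame 'hand closed' flag. This toy Python model assumes the camera reports one true/false value per frame; the function name and event labels are hypothetical, and this is not how the toolkit itself is implemented.

```python
def gesture_events(frames):
    """Convert per-frame 'hand closed?' flags into Detected / Lost events.

    Detected (hand just closed) stands in for SendMessageAction triggering the
    crouch scripts; Lost (hand just opened) stands in for SendMessageAction2
    triggering the stand-up scripts.
    """
    events = []
    previous = False
    for index, closed in enumerate(frames):
        if closed and not previous:
            events.append((index, "Detected", "crouch"))
        elif previous and not closed:
            events.append((index, "Lost", "stand"))
        previous = closed
    return events


if __name__ == "__main__":
    print(gesture_events([False, True, True, False, True]))
```

Holding the hand closed over several frames produces only one crouch event, and the stand-up event fires once, at the frame where the gesture stops being seen.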

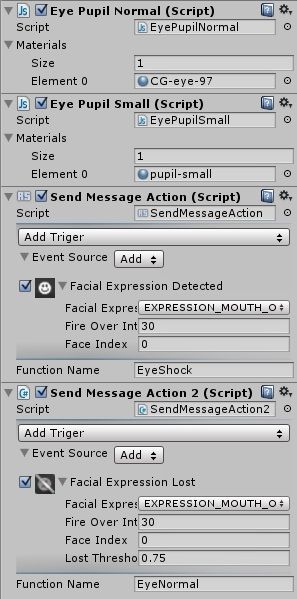

Having succeeded in using multiple SendMessageActions to replace our previous trigger system for the crouch action, we then applied the method to shrinking the avatar's eye pupil to a small 'shocked' size when an open-mouth expression was detected, and restoring the pupil to its normal size when the mouth was closed, which Loses the expression and triggers the default pupil texture script.

The image above illustrates this setup.

So in summary, our method for creating multiple copies of an Action script works just as well with SendMessageAction as it did with TrackingAction!

Edit: SendMessageAction tends to be quite sensitive in its facial recognition in the current SDK and Unity 5.1. This means that it can switch between one state and another (e.g. small 'shocked' pupils and normal pupils) when you do not want it to. We found that a solution was to set the SendMessageActions to track a facial point that has less movement in it, so that it is harder for the camera to detect it by accident and mis-trigger an action.

In the case of the eye pupil example above, assigning the pupil change on-off trigger to 'Expression_Brow_Raiser-Right' (i.e. the raising of the right eyebrow) ensured that the pupil only changed when we raised that eyebrow high to simulate an eye wide open in shock (since an eyebrow on a real face moves upward when the eye opens wide), and that it remained in its normal default state the rest of the time, no matter how hard we tried to mis-trigger it during testing.

Edit 2: another point worth making clear is that because SendMessageAction sends its activation to function names rather than script names, if you are using more than one non-SDK script in a particular object then you will need to give each script's target function a unique name (i.e. you can't have two scripts that both use Start or Awake as the function being triggered). In the eye example above, we gave our two pupil texture scripts the function names EyeShock and EyeNormal to replace the Start function that both were previously using.
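The reason for unique names can be shown with a toy Python model of name-based dispatch, assuming SendMessageAction behaves like Unity's `GameObject.SendMessage`, which calls the method with the given name on every script attached to the object. The classes below are hypothetical stand-ins, not Unity types; method names keep Unity's PascalCase style to match this post.

```python
class GameObject:
    """Toy stand-in for a Unity GameObject holding several script components."""

    def __init__(self, *components):
        self.components = list(components)

    def send_message(self, method_name):
        """Call method_name on every component that defines it, in order,
        and return the names of the components that were called."""
        called = []
        for component in self.components:
            method = getattr(component, method_name, None)
            if callable(method):
                method()
                called.append(type(component).__name__)
        return called


# Uniquely named target functions: each message reaches only one script.
class EyeShockScript:
    def EyeShock(self):
        pass  # would swap in the small 'shocked' pupil texture


class EyeNormalScript:
    def EyeNormal(self):
        pass  # would restore the default pupil texture


# Both scripts using Start: one message triggers both at once.
class ShockUsingStart:
    def Start(self):
        pass


class NormalUsingStart:
    def Start(self):
        pass


if __name__ == "__main__":
    eye = GameObject(EyeShockScript(), EyeNormalScript())
    print(eye.send_message("EyeShock"))
    clash = GameObject(ShockUsingStart(), NormalUsingStart())
    print(clash.send_message("Start"))
```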

I found a new use in Unity for the multiple Action script system described at the start of this article. It can also be used to improve hand and face tracking by reducing object stalling when the camera loses sight of a tracked point.

If you put multiple instances of a tracking-based Action script in your Unity object (e.g. TrackingAction 1 to TrackingAction 4), and set some of them to track one point and the rest to track an alternate point that is nearest to the first one, then you can reduce stalling and move an object further.

As an example, my project's full-body avatar used the Nose Bottom nose-tracking point to bow the avatar down at the waist when the head was lowered and raise it back up to standing position when the head was raised. The avatar could not bend forwards far however, because the bending stopped once the head was in a position where the camera could no longer see the bottom of the nose.

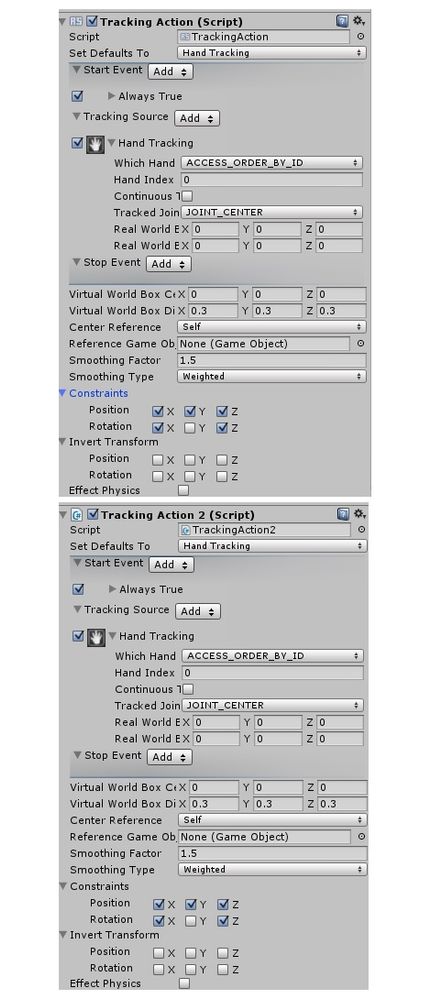

My solution was to set half of the four TrackingActions in the avatar's waist-bending joint to track Nose Bottom, and the other half to track Nose Top. When I ran the project, the avatar could lean further back and further forwards.

The basic principle behind this enhancement is very simple. The camera is simultaneously tracking both of the points (the main one and the alternate one). When the head is raised or lowered so far that the camera can no longer see one of the two tracked points, the remaining point that is still within the camera's view seamlessly takes over the burden of keeping the object in motion until the head returns to a position where the camera can re-acquire tracking on the lost point.
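A toy Python model of this fallback: each frame, the object moves by the average vertical change of whichever tracked points are visible, and it only stalls when every point is lost. The averaging rule, names and numbers are assumptions for illustration, not how the TrackingActions actually combine.

```python
def step_object(position, landmarks, gain=1.0):
    """Toy model of dual-point tracking for one frame.

    landmarks maps a point name to (visible, delta_y) for this frame. The
    object moves by the average change of the visible points; if none are
    visible, it stalls at its current position.
    """
    deltas = [delta for visible, delta in landmarks.values() if visible]
    if not deltas:
        return position  # every tracked point lost: the object stalls
    return position + gain * sum(deltas) / len(deltas)


# Hypothetical frames of a head being lowered: Nose Bottom drops out of view first.
EXAMPLE_FRAMES = [
    {"nose_bottom": (True, -0.1), "nose_top": (True, -0.1)},
    {"nose_bottom": (False, 0.0), "nose_top": (True, -0.1)},  # Nose Top takes over
    {"nose_bottom": (False, 0.0), "nose_top": (False, 0.0)},  # both lost: stall
]

if __name__ == "__main__":
    position = 0.0
    for frame in EXAMPLE_FRAMES:
        position = step_object(position, frame)
        print(round(position, 3))
```

With only Nose Bottom tracked, the model would stall at the second frame; the alternate point buys one more frame of movement.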

The images below show the extremes of lean-back and lean-forward achieved whilst in a sitting position with the camera on the computer desk level with the chest, and also the configuration of the four TrackingActions.

You can amplify movement further by making a copy of your joint and childing the copy to the original, so that you have double the number of TrackingActions active simultaneously (this has an impact on performance if over-used though). Unity has a phenomenon where it will multiply the movement effort of the TrackingActions in the original joint by the movement effort of the ones in the copied joint to produce a total movement effort that is much greater than the work output of two individual joints that are not childed together.

My ongoing research with RealSense and Unity revealed an interesting and useful new insight about the technique in this article of using multiple numbered TrackingAction scripts inside the same object.

It seems that you are most likely to get a tangible motion amplification from using multiple TrackingActions with different file names inside the same object if they each have different constraints / inverts or landmark tracking settings.

If you need to have multiple TrackingActions with the exact same configuration, then it seems to be better to place a single TrackingAction in the object that will be moved or rotated and then create a chain of childed-together copies of that object, each with one TrackingAction in it.

For example, in my full-body avatar, the knee has three joints, all overlaid perfectly on each other: Knee Joint (the original), and Knee Joint B and Knee Joint C (two copies containing the same TrackingAction script as the original 'Knee Joint'). This enables the leg flesh attached to the knee joint to travel 3x further than it would if there were just one joint.

By childing copies of objects containing TrackingActions together, you multiply the movement effort of whatever is attached to the end of that chain of joints by the total number of joints in the chain (i.e. 1 sub-joint = 2x as fast, 2 sub-joints = 3x as fast, etc.).
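The arithmetic follows from how Unity's transform hierarchy works: a child inherits its parent's rotation and adds its own, so when overlaid joints each rotate by the same amount about the same axis, the angles add up along the chain. The Python sketch below composes 2D rotation matrices to show this. It models only the hierarchy, assumes each joint applies the same local angle, and does not model how individual TrackingActions combine; the function names are hypothetical.

```python
import math

Matrix = tuple[tuple[float, float], tuple[float, float]]


def rotation(degrees: float) -> Matrix:
    """2x2 matrix for a rotation about a single axis."""
    r = math.radians(degrees)
    return ((math.cos(r), -math.sin(r)), (math.sin(r), math.cos(r)))


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply two 2x2 matrices."""
    return tuple(
        tuple(sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )


def world_rotation(local_degrees: list[float]) -> Matrix:
    """Compose local rotations along a parent -> child -> grandchild chain."""
    result: Matrix = ((1.0, 0.0), (0.0, 1.0))
    for degrees in local_degrees:
        result = matmul(result, rotation(degrees))
    return result


def angle_of(matrix: Matrix) -> float:
    """Recover the rotation angle in degrees from a 2x2 rotation matrix."""
    return math.degrees(math.atan2(matrix[1][0], matrix[0][0]))


if __name__ == "__main__":
    # Knee Joint, Knee Joint B and Knee Joint C each turning 10 degrees locally.
    for joints in (1, 2, 3):
        print(joints, round(angle_of(world_rotation([10.0] * joints)), 6))
```

One joint gives 10 degrees, two give 20 and three give 30, matching the 1 sub-joint = 2x, 2 sub-joints = 3x pattern.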

*However*, if you have more than one TrackingAction of the same configuration inside two or three sub-joints, then you only seem to get a movement magnification effect from one TrackingAction in each joint (TrackingAction 1 in Joint A and TrackingAction 1 in Joint B). If you have identically configured TrackingAction2s and TrackingAction3s in those joints, then they seem to be ignored when Unity is calculating the amplification effect.

If you have more than one TrackingAction in an object with *different* configurations (e.g one that is set to Position-move in the X position direction and another that is set to Rotate in the Z direction) then you need to have a copy of the same set of scripts in each of the childed sub-joint copies. So the TrackingAction1 in the original joint will multiply its Position movement effort with the TrackingAction 1 in the sub-joint, whilst the TrackingAction2 in the original joint will multiply with the TrackingAction2 in the sub-joint to create a magnified Rotation effort.

The script set could therefore be thought of as being like animals of different breeds: each script only wants to combine with a script that has the same "genetics" and shows no interest in scripts that have a different configuration from its own.

Once I had made this discovery, I was able to achieve a significant performance speed increase in my project by removing a number of TrackingAction copies that were producing no useful movement effort because they were being ignored by TrackingAction scripts with a different configuration.

Each time you use a TrackingAction, there is a very small hit to performance that is barely noticeable at first but becomes more obvious as the number of TrackingActions grows (there are about twenty in the various parts of my avatar now, and there were thirty-five before I stripped out the redundant duplicates today). In my avatar's lips, for example, I could animate them just as easily using eight TrackingActions in total as when they were using sixteen.

You always want to aim to use the minimum number of TrackingActions so that you free up Unity's processing resources for use by the other non-RealSense content in your Unity project (colliders, non-RealSense scripts, physics, lighting, etc).
