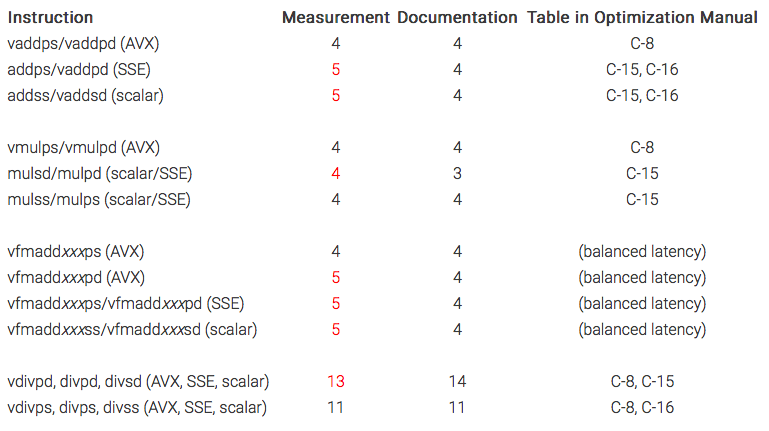

With the Skylake-EP release still in the distant future, I did some benchmarking on a Core i5-6500 to explore some of the new Skylake features. During my instruction-latency benchmarking I came across something odd: the AVX instructions (vaddps/vaddpd) seem to have a latency of four clock cycles, as specified in Table C-8 of the Optimization Manual. The scalar and SSE instructions (addss/addsd/addps/addpd), however, seem to have a latency of five clock cycles, contradicting the values in Tables C-15 and C-16 of the Optimization Manual. I can (almost certainly) rule out problems with my benchmarking code, because it includes verification tests and their results come out fine. But maybe I made a mistake:

```asm
#define INSTR vaddpd
#define NINST 6
#define N edi
#define i r8d

.intel_syntax noprefix

.globl ninst
.data
ninst:
    .long NINST

.text
.globl latency
.type latency, @function
.align 32
latency:
    push rbp
    mov rbp, rsp

    xor i, i
    test N, N
    jle done

    # create DP 1.0 in each 64-bit lane
    vpcmpeqw xmm0, xmm0, xmm0   # all ones
    vpsllq xmm0, xmm0, 54       # top 10 bits set: 0xFFC0000000000000 (54 = 64 - (11 - 1))
    vpsrlq xmm0, xmm0, 2        # 0x3FF0000000000000 = DP 1.0: sign 0, exponent 0x3FF, mantissa 0

    # copy DP 1.0 (read as SP lanes by vaddps: 1.875 and 0.0)
    vmovaps xmm1, xmm0

loop:
    inc i
    INSTR xmm0, xmm0, xmm1      # six adds per iteration, one serial chain through xmm0
    INSTR xmm0, xmm0, xmm1
    INSTR xmm0, xmm0, xmm1
    cmp i, N
    INSTR xmm0, xmm0, xmm1
    INSTR xmm0, xmm0, xmm1
    INSTR xmm0, xmm0, xmm1
    jl loop

done:
    mov rsp, rbp
    pop rbp
    ret

.size latency, .-latency
```
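The constant-building sequence works on 64-bit lanes (vpsllq/vpsrlq), so it yields double-precision 1.0 in each lane. The C sketch below replays the same shifts on plain integers to confirm the bit patterns; the function names are hypothetical and it is only an illustration, not part of the benchmark.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Replay vpcmpeqw / vpsllq 54 / vpsrlq 2 on a single 64-bit lane. */
static uint64_t dp_one_bits(void)
{
    uint64_t lane = ~UINT64_C(0);   /* vpcmpeqw: all ones                     */
    lane <<= 54;                    /* top 10 bits set: 0xFFC0000000000000    */
    lane >>= 2;                     /* 0x3FF0000000000000                     */
    return lane;
}

/* The 32-bit-lane analogue: shift left by 25 = 32 - (8 - 1), then right by 2. */
static uint32_t sp_one_bits(void)
{
    uint32_t lane = ~UINT32_C(0);
    lane <<= 25;                    /* top 7 bits set: 0xFE000000             */
    lane >>= 2;                     /* 0x3F800000                             */
    return lane;
}

/* Reinterpret raw bits as IEEE-754 values without aliasing problems. */
static double bits_to_double(uint64_t bits)
{
    double d;
    memcpy(&d, &bits, sizeof d);
    return d;
}

static float bits_to_float(uint32_t bits)
{
    float f;
    memcpy(&f, &bits, sizeof f);
    return f;
}

/* Self-check: the shifted patterns are exactly 1.0 in DP and in SP. */
static void check_one_constants(void)
{
    assert(dp_one_bits() == UINT64_C(0x3FF0000000000000));
    assert(bits_to_double(dp_one_bits()) == 1.0);
    assert(sp_one_bits() == UINT32_C(0x3F800000));
    assert(bits_to_float(sp_one_bits()) == 1.0f);
}
```

Read as single precision, which is how vaddps/addps see the register, each 64-bit lane holds 1.875 in its upper half and 0.0 in its lower half; both are ordinary normal numbers. A 32-bit-lane version (vpslld by 25, then vpsrld by 2) would produce single-precision 1.0 instead.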

What really strikes me as odd is that this implies dedicated hardware units for AVX and for scalar/SSE instructions. Could it have to do with the AVX turbo frequency being lower than the regular turbo frequency? Giving the scalar/SSE units a longer pipeline (5 instead of 4 stages) would allow them to be clocked higher. The same seems to be true for other instructions (FMA; see the table below). By the way, FMA latency and throughput are not documented in Appendix C of the Optimization Manual. Is there another resource that documents them?
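Turning the loop into a latency figure is a division: the six INSTR operations per iteration form one serial chain through xmm0 (inc/cmp/jl do not depend on it and can overlap), so latency is roughly core cycles / (N × NINST). Since frequencies come up here: if the elapsed time is read from the TSC, note that on Intel processors with an invariant TSC it ticks at a constant nominal rate regardless of turbo, so ticks must be scaled by core frequency / TSC frequency before dividing. A minimal C sketch under those assumptions; the helper names and frequency inputs are hypothetical.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helper: convert elapsed TSC ticks into core clock cycles,
   assuming an invariant TSC at tsc_hz while the core runs at core_hz. */
static double tsc_to_core_cycles(uint64_t tsc_ticks, double tsc_hz, double core_hz)
{
    assert(tsc_hz > 0.0 && core_hz > 0.0);
    return (double)tsc_ticks * (core_hz / tsc_hz);
}

/* Per-instruction latency for `iterations` loop trips, each containing
   `ninst` instructions that form a single serial dependency chain. */
static double latency_per_instruction(double core_cycles, uint64_t iterations, int ninst)
{
    assert(iterations > 0 && ninst > 0);
    return core_cycles / ((double)iterations * (double)ninst);
}
```

With made-up numbers: 24,000,000 ticks over 1,000,000 iterations of 6 instructions is 4.0 per instruction if the core runs at the TSC frequency, but 4.5 core cycles if the core actually ran 12.5% faster than the TSC.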

---

I have not looked at Agner Fog's test harness in detail, but I generally find his results more comprehensive than Intel's, especially when I am looking at changes in performance over time. (I sometimes have trouble parsing the compressed format he uses to document instruction types for the newer processors, especially for instructions with very similar mnemonics, but that is not a problem here.)

The Skylake results in the 2016-01-09 revision of his instruction_tables document (http://www.agner.org/optimize/instruction_tables.pdf) do not show any deviation from the reported 4-cycle latency for the ADD, MUL, and FMA operations at the various SIMD widths, or from the 11-cycle latency of the single-precision divides; he does, however, report "13-14" cycles for the double-precision divides.

For the FP divides there are quite a few possible timings, depending on the specific values involved, because of the iterative nature of the algorithm. In a SIMD implementation, the hardware can probably only take an "early out" if all of the lanes satisfy the early-out criteria (e.g., dividing a non-denormal number by 2 and obtaining a non-denormal result), but it is easy to imagine the timing varying by a cycle or so for different combinations of special cases in the different SIMD lanes.
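The "all lanes must qualify" argument amounts to taking the maximum over per-lane costs: the instruction finishes when its slowest lane does. A minimal sketch of that hypothetical timing model; the per-lane cycle counts are made-up inputs, not a description of the real divider.

```c
#include <assert.h>

/* Hypothetical model: each SIMD lane needs some number of cycles (fewer
   when it can take an early out), and the whole instruction completes
   only when the slowest lane is done. */
static int simd_latency(const int *lane_cycles, int lanes)
{
    assert(lanes > 0);
    int worst = lane_cycles[0];
    for (int k = 1; k < lanes; ++k)
        if (lane_cycles[k] > worst)
            worst = lane_cycles[k];
    return worst;
}
```

Under this model a vector in which every lane takes the early out completes at the fast latency, while a single slow lane delays the entire instruction, which is one way a cycle or so of variation could appear between otherwise similar inputs.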

You are probably already compensating for these, but two issues that cause me trouble when I am trying to get very detailed timings are the "start-up" overhead of the 256-bit pipelines and the branch predictor's transition from correctly predicting the loop exit to mispredicting it somewhere in the range of 32-38 iterations (on Haswell; I am not sure whether Skylake behaves the same).
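One common way to limit both effects is to run the kernel a few times untimed before measuring, and to keep the fastest of several timed runs with a trip count far above the 32-38 range, so that at most one loop-exit mispredict per run is amortized over many iterations. A sketch of such a driver, assuming POSIX clock_gettime; dependent_adds is a hypothetical stand-in kernel with the same void(int) shape the assembly routine appears to have (N arrives in edi).

```c
#define _POSIX_C_SOURCE 199309L
#include <assert.h>
#include <stdint.h>
#include <time.h>

typedef void (*kernel_fn)(int n);

/* Monotonic wall-clock time in nanoseconds. */
static uint64_t now_ns(void)
{
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (uint64_t)ts.tv_sec * 1000000000u + (uint64_t)ts.tv_nsec;
}

/* Untimed warm-up runs first (pipeline start-up, predictor training),
   then return the fastest of `reps` timed runs. */
static uint64_t best_of_ns(kernel_fn kernel, int n, int warmup, int reps)
{
    assert(reps > 0);
    for (int r = 0; r < warmup; ++r)
        kernel(n);
    uint64_t best = UINT64_MAX;
    for (int r = 0; r < reps; ++r) {
        uint64_t t0 = now_ns();
        kernel(n);
        uint64_t t1 = now_ns();
        if (t1 - t0 < best)
            best = t1 - t0;
    }
    return best;
}

/* Hypothetical stand-in kernel: a serial chain of double additions.
   Writing to a volatile keeps the compiler from discarding the loop. */
static volatile double sink;
static void dependent_adds(int n)
{
    double x = 0.0;
    for (int k = 0; k < n; ++k)
        x += 1.0;
    sink = x;
}
```

The minimum is used rather than the mean because interrupts and frequency transitions can only make a run slower, never faster.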
