"AI Inference" Posts in "Artificial Intelligence (AI)"

Optimizing LLM Inference on Intel® Gaudi® Accelerators with llm-d Decoupling

07-28-2025

Discover how Intel® Gaudi® accelerators and the llm-d stack improve large language model inference b...

“AI Everywhere” is Connected by Ethernet Everywhere

12-14-2023

As we realize AI is Everywhere, how does the data move “everywhere?” The answer is Ethernet.

Microsoft Azure Cognitive Service Containers on-premises with Intel Xeon Platform

12-19-2022

Microsoft Azure Cognitive Services-based AI inferencing on-premises with Intel Xeon platforms.

End-to-End Azure Machine Learning on-premises with Intel Xeon Platforms

12-13-2022

Azure Machine Learning on-premises with Intel Xeon Platforms and Kubernetes

Deploy AI Inference with OpenVINO™ and Kubernetes

07-19-2022

In this blog, you will learn how to use key features of the OpenVINO™ Operator for Kubernetes.

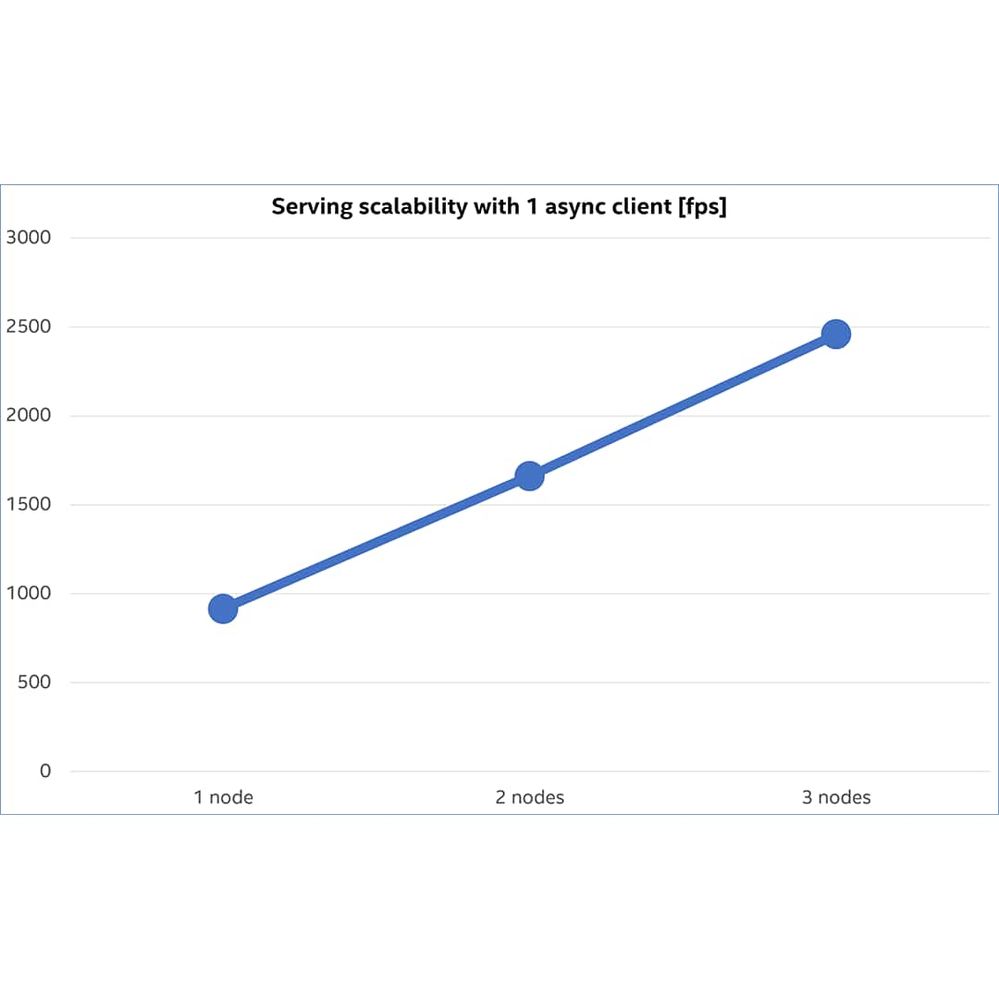

Load Balancing OpenVINO™ Model Server Deployments with Red Hat OpenShift

10-13-2021

Learn how to deploy AI inference-as-a-service and scale to hundreds or thousands of nodes using Open...
