Hi

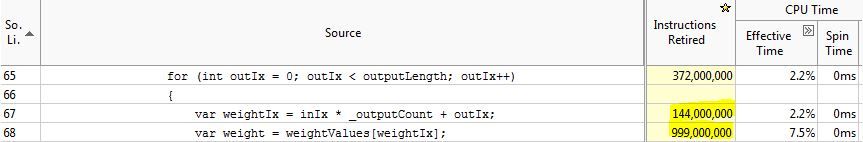

I'm trying to understand VTune's "Retired instructions" metric. I've read some previous questions and answers in this forum but couldn't find the answer to my question, so I'll explain it with an example. Here is a small piece of code from a performance tuning session I did:

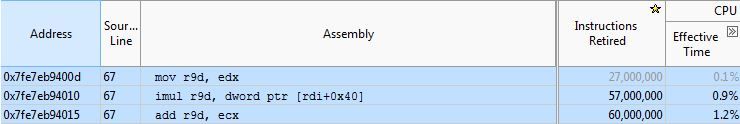

(This is C# code.) As can be seen, there are 144 vs. 999 million instructions retired on rows 67 and 68. The loop should make each of the lines execute the same number of times. So far so good, since each line contains several instructions. However, when I show the assembly code for row 67, for instance, this is where I get lost. It shows the following instructions with the following instructions-retired counts. 27, 57, and 60 add up to 144, but why is there a different count for each instruction?

I guess the first greyed-out mov instruction has some uncertainty, since it is greyed out (?). But what about the other two? Why the difference? Is it due to speculation? But if so, shouldn't the imul instruction have more counts than the add instruction? Unless execution is not actually in this order. Some advice on how to interpret these numbers, please.

VTune uses a sampling-based performance counting methodology. The most common way to do this is to set up an interrupt at fixed time intervals (e.g., every millisecond), and when the interrupt happens the interrupt handler looks at the value of the Program Counter that was saved and assigns this "sample" to that value of the Program Counter. So in a loop where you know that every instruction executes exactly the same number of times, the interrupt is more likely to sample instructions that take longer to execute, and those will have higher counts.
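
The effect described above can be sketched with a toy model. This is only an illustration, not how VTune is implemented: the function name `time_based_samples`, the in-order one-instruction-at-a-time execution, and the latencies are all assumptions made for the example.

```python
from collections import Counter

def time_based_samples(latencies, iterations, interval):
    """Give one sample to whichever instruction is in flight at each timer tick.

    latencies: dict of instruction name -> cycles it occupies (toy in-order model).
    interval:  cycles between timer interrupts.
    """
    names = list(latencies)
    loop_cycles = sum(latencies.values())
    total_cycles = loop_cycles * iterations
    samples = Counter({name: 0 for name in names})
    for tick in range(interval, total_cycles + 1, interval):
        offset = (tick - 1) % loop_cycles  # position of the tick inside one iteration
        for name in names:
            if offset < latencies[name]:
                samples[name] += 1
                break
            offset -= latencies[name]
    return samples

# Hypothetical latencies, chosen only for illustration.
latencies = {"mov": 1, "imul": 3, "add": 1}
samples = time_based_samples(latencies, iterations=100_000, interval=997)
for name, count in samples.items():
    print(f"{name:5s} {count}")
```

Every instruction executes exactly 100,000 times in this model, yet the 3-cycle "imul" collects about three times as many samples as either 1-cycle instruction.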

An alternative to sampling on fixed time intervals is to sample based on the overflow of a performance counter. If you are interested in the distribution of retired instructions, then setting the performance counter to overflow every million retired instructions will give an alternative way of sampling the instruction execution. It appears that this is what is being done in the example you show above. I think that the way that VTune works is to pick a sampling interval (e.g., interrupt execution after every 2,000,003 instructions retired), then assign the entire 2,000,003 instruction count to the one instruction that retired when the interrupt occurred. In the absence of systematic errors, increasing the number of samples should result in convergence to the correct distribution. (But there are systematic errors, as I discuss below.) Sampling based on the overflow of performance counters is especially useful if you are interested in finding code that causes rare (but potentially expensive) events, such as TLB misses.
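
A minimal sketch of that attribution scheme, under idealized assumptions (no skew, exactly one instruction retiring at a time). The function name `event_based_counts` is hypothetical; only the 2,000,003 interval comes from the text above.

```python
from collections import Counter

def event_based_counts(loop, iterations, overflow):
    """Charge `overflow` retired instructions to the instruction that retires
    at each counter overflow (idealized: no skew, one retirement at a time)."""
    total_retired = len(loop) * iterations
    counts = Counter({name: 0 for name in loop})
    for n in range(overflow, total_retired + 1, overflow):
        counts[loop[(n - 1) % len(loop)]] += overflow
    return counts

loop = ["mov", "imul", "add"]
counts = event_based_counts(loop, iterations=100_000_000, overflow=2_000_003)
for name in loop:
    print(f"{name:5s} {counts[name]:>12,d}")
```

Each instruction really retires 100,000,000 times here, and the estimates land within one sampling interval of that. In this toy model an interval that shares a factor with the loop length (for example `overflow=3_000_000`) would charge every sample to the same instruction; that may be one reason for odd-looking defaults such as 2,000,003, though that is a guess.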

Your example provides an illustration of some of the many possible systematic errors that can occur with sampling based on the overflow of performance counters.

- In your example, the "mov" instruction shows many fewer counts than the next two instructions, even though you can easily see that they must be executed exactly the same number of times.

- If sampling is based on wall-clock time, this would not be surprising since the "mov" instruction only takes a single cycle, and can often execute in the same cycle as other instructions.

- If sampling is based on retired instructions, it is possible that the "mov" instruction is "hidden" because it is executed in the same cycle as one or more earlier instructions. It is easy to imagine that it is hard to know which instruction to point to if an interrupt occurs in a cycle in which multiple instructions are being issued, executed, and retired. A bias toward the first instruction (in program order) seems likely?

- In newer Intel processors, some "mov" instructions are not even executed by the core's execution units -- their effect is implemented by renaming registers in the "Allocate/Rename/Retire/MoveElimination/ZeroIdiom" stage of the processor pipeline (e.g., Sections 2.1 and 2.2 of the Intel Optimization Reference Manual, document 248966).

- The second and third instructions in your example ("imul" and "add") show approximately the same number of counts.

- With sampling based on wall-clock time, the "imul" is more likely to be sampled both because "imul" is a multi-cycle operation (e.g., it has a latency of 3 cycles on most recent Intel processors) and because it has a memory operand, which can take anywhere between ~4 cycles and >1000 cycles.

- With sampling based on retired instructions, the "imul" instruction cannot be "hidden" by the preceding "mov" instruction because one of the inputs of the "imul" is the output of the "mov". So the "imul" instruction will execute the cycle after the "mov", which may make it a more likely target.

- Similarly, one of the inputs to the "add" instruction is the output of the "imul" instruction, so they cannot execute in the same cycle. This makes it likely that the "add" will be the first instruction (in program order) to execute after the "imul" instruction, which may make it a likely target.
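
The "hidden mov" idea in the bullets above can be sketched as follows. Everything here is an assumption made for illustration: the instruction `prev` stands in for some earlier instruction, `pair_probability` is an invented knob for how often "mov" retires in the same cycle as `prev`, and the rule that a sample goes to the first instruction of a group is the speculation from the text, not documented hardware behavior.

```python
import random
from collections import Counter

def biased_counts(num_samples, overflow, pair_probability, seed=0):
    """Event-based sampling where a sample landing on "mov" is sometimes
    charged to the earlier instruction it retired together with."""
    rng = random.Random(seed)
    body = ["prev", "mov", "imul", "add"]  # "prev" is a hypothetical earlier instruction
    counts = Counter({name: 0 for name in body})
    for k in range(1, num_samples + 1):
        hit = body[(k * overflow - 1) % len(body)]
        # Assumed rule: if "mov" retires in the same cycle as "prev",
        # the sample is charged to the first instruction of that group.
        if hit == "mov" and rng.random() < pair_probability:
            hit = "prev"
        counts[hit] += overflow
    return counts

counts = biased_counts(num_samples=10_000, overflow=2_000_003, pair_probability=0.5)
for name, value in counts.items():
    print(f"{name:5s} {value:>16,d}")
```

In this model "imul" and "add" receive identical counts while "mov" receives noticeably fewer, which has the same shape as the 27 / 57 / 60 split in the question. It does not show that this is the actual cause.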

For any sampling-based methodology, there is typically a problem with "skew" -- when the interrupt is actually processed, the Program Counter often points to an instruction *after* the instruction that actually caused the interrupt. Sometimes it is possible to correct for this, but as processors become more complex (especially with out-of-order processing executing multiple instructions per cycle) there are more and more special cases and it becomes more and more difficult to precisely locate the instruction that caused the interrupt. For instruction-based performance analysis this does not really matter -- since you know that all of the instructions in the loop must execute the same number of times, the only metric that matters is the sum of the counts for all the instructions in the loop.
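
As a small worked example of why only the sum matters, the sketch below shifts the counts from the question by one instruction. It treats the three instructions as if they were the whole loop body, which is a simplification, and `apply_skew` is a hypothetical helper.

```python
def apply_skew(counts, skew):
    """Move each count `skew` slots later in program order,
    wrapping around at the loop back-edge."""
    n = len(counts)
    return [counts[(i - skew) % n] for i in range(n)]

observed = [27, 57, 60]            # millions: mov, imul, add (from the question)
shifted = apply_skew(observed, 1)  # same samples, attributed one instruction later

print(observed, sum(observed))
print(shifted, sum(shifted))
```

The per-instruction numbers change completely, but both lists sum to 144 million, the figure reported for row 67.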

Thanks for the extensive response. Very interesting. The conclusion I draw is not to trust the retired instruction count too much, but rather to use it as a hint. A few follow-up questions to your response, if you don't mind:

1) Can you choose which sampling mechanism is used in a VTune profile (I can't find how to do this), or does VTune choose it automatically based on the hardware and type of profile?

2) You wrote that it was likely that sampling in this profile was based on retired instructions (overflow) rather than wall clock (interrupt). Why did you reach this conclusion?

Thanks again!

The sampling mechanisms used by VTune are discussed at https://software.intel.com/en-us/node/544067. I have not looked at all the linked pages to see how complete the information is, but it should definitely get you started.

In the VTune distribution, there is a directory with a bunch of configuration files for the various Intel processor families. These are not entirely self-explanatory, but it is not hard to understand some of the entries. The column labelled "OVERFLOW" gives the default number of counts per sample for each of the performance counter events. In the file "haswell_db.txt", for example, the event named "INST_RETIRED.ANY" has an OVERFLOW value of 2,000,003. These are default values, and they may be overridden by the specific "Analysis Type" used by VTune. If I recall correctly, the actual values are documented in one of the tabs of the VTune GUI output when you open a project or results file.

In the case above, I am guessing that the sampling was based on retired instructions for a couple of reasons:

- The column of counts is labeled "Instructions Retired".

- The counts for the "imul" and "add" instructions are almost the same, and I would have expected the "imul" to have significantly higher counts with time-based sampling. (Both because of the 3 cycle latency and because the memory argument will extend the execution time further, depending on the location of the requested data in the cache hierarchy.)

I could certainly be wrong about the sampling type used, but the documentation above should help clarify what VTune is doing.

You may also be able to figure out how to change the OVERFLOW counts. If you try this, be careful: setting the OVERFLOW count too low can make a system crash or become unresponsive. Even with values that are "safe" (e.g., 100,000 instead of 2,000,000), the overhead of measurement will become more intrusive and the job will use a lot more memory, disk space, and CPU time to finalize the data collection and analysis.
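
To get a rough feel for that overhead, the arithmetic can be sketched as below. The retirement rate of 3 billion instructions per second is a made-up round number, not a measurement, and `samples_per_second` is a hypothetical helper.

```python
def samples_per_second(instructions_per_second, overflow):
    """Interrupts per second generated by a counter that overflows
    every `overflow` retired instructions."""
    return instructions_per_second / overflow

ASSUMED_RATE = 3_000_000_000  # hypothetical: 3e9 instructions retired per second

for overflow in (2_000_003, 100_000):
    rate = samples_per_second(ASSUMED_RATE, overflow)
    print(f"OVERFLOW={overflow:>9,d}: about {rate:,.0f} interrupts per second")
```

Under that assumption, the default interval gives roughly 1,500 interrupts per second and an interval of 100,000 gives 30,000, about 20 times as many samples to collect, store, and post-process.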
