Hello,

I'm using Intel FPGA AI Suite 2023.2 on an Ubuntu 20.04 host computer and trying to run inference of a custom CNN on an Intel Arria 10 SoC FPGA.

I have followed the Intel FPGA AI Suite SoC Design Example Guide, and I'm able to compile the Intel FPGA AI Suite IP and run the M2M and S2M examples.

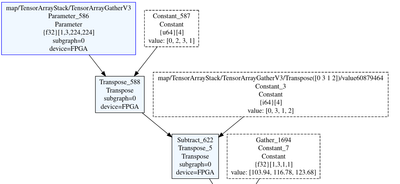

I have a question about the input layer data type. In the example network, resnet-50-tf, the input layer appears to be FP32 [1,3,224,224] in the .dot file produced by graph compilation (see screenshot below):

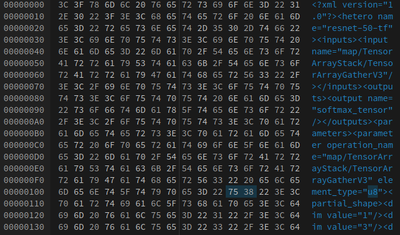

However, when running the dla_benchmark example, I noticed that a U8 input data type was detected. The dla_benchmark application extracts this information from the compiled graph .bin file, and reading that file confirms that the input data type is U8 (see screenshot below).

The graph was compiled according to the Intel FPGA AI Suite SoC Design Example Guide using:

    omz_converter --name resnet-50-tf \
        --download_dir $COREDLA_WORK/demo/models/ \
        --output_dir $COREDLA_WORK/demo/models/

In addition, I compiled my custom NN with dla_compiler and got the same result: the input layer is FP32 in the .dot file and U8 in the .bin file. The command was:

    dla_compiler \
        --march "{path_archPath}" \
        --network-file "{path_xmlPath}" \
        --o "{path_binPath}" \
        --foutput-format=open_vino_hetero \
        --fplugin "HETERO:FPGA,CPU" \
        --fanalyze-performance \
        --fdump-performance-report \
        --fanalyze-area \
        --fdump-area-report

In both cases, compilation was performed for the A10_Performance.arch example architecture.

Also, the input precision should be reported in the input_transform_dump report, but it is not.

Two questions:

- Why is the input data type changed?

- Is it possible to compile the graph with an FP32 input data type?

Thank you in advance

Hi,

Can you provide the full steps you are using to go from the custom NN to generating the FPGA bitstream?

Hello @JohnT_Intel

There is no custom bitstream. The bitstream was compiled following the instructions in the Intel FPGA AI Suite SoC Design Example Guide, specifically in section "3.3.2 Building the FPGA Bitstreams." The compilation was performed using the dla_build_example_design.py script with the following parameters:

    dla_build_example_design.py \
        -ed 4_A10_S2M \
        -n 1 \
        -a $COREDLA_ROOT/example_architectures/A10_Performance.arch \
        --build \
        --build-dir $COREDLA_WORK/a10_perf_bitstream \
        --output-dir $COREDLA_WORK/a10_perf_bitstream

I am a bit confused by your question, because all the information I provided in my previous message relates to the graph compilation process, which, as far as I understand, does not depend on the bitstream. From my understanding, the only thing the compiled graph and the bitstream have in common is the architecture file, A10_Performance.arch. And the input is detected as U8 in the compiled graph .bin file.

Hi,

Can you generate the FPGA bitstream using the custom NN architecture? This will ensure that it uses the input format you plan to run with.

Hello @JohnT_Intel,

Could you help me identify the parameter that specifies the input precision in the custom architecture? The only precision-related parameter I found in the Intel FPGA AI Suite IP Reference Manual is "arch_precision", which is set to FP11, and the input is detected as U8. In the A10_FP16_Architecture, this parameter is set to FP16, and the input is also detected as U8.

Hi,

After you change arch_precision, is the prototxt also updated for the custom NN?

Are you able to share the files you are using, to make debugging easier?

Hello @JohnT_Intel,

The question comes from the Intel example. The initial TF model is attached in the resnet-50-tf.zip file. "resnet-50-tf.xml" is the IR model obtained with the omz_converter tool as described in the Intel example, and "RN50_Performance_b1.zip" contains the graph compiled for the A10_Performance.arch architecture, in which the architecture precision parameter is set to FP11. As you can see, the input is described as FP32 in the IR file, whereas after compiling the graph the input precision changes to U8. The .bin file is totally different depending on whether it is compiled for an architecture with architecture precision FP11 or FP16, but in both cases the .bin file header identifies the input layer as U8.

The question is: why is the input set to U8, and how can it be changed to another precision such as FP16/FP32? Is there an architecture parameter to change it?
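To confirm what the IR itself declares, independently of the compiled .bin, the network inputs can be read directly from the IR .xml file. The following is a minimal sketch, assuming the OpenVINO IR v10/v11 layout in which each network input is a `<layer type="Parameter">` whose `<data>` child carries `element_type` and `shape` attributes. The function name `ir_input_types` and the script usage are hypothetical; the sketch does not read the .bin produced by dla_compiler, whose format is not described in this thread.

```python
import sys
import xml.etree.ElementTree as ET
from typing import Dict, Tuple


def ir_input_types(xml_data) -> Dict[str, Tuple[str, str]]:
    """Return {input_name: (element_type, shape)} for every Parameter layer.

    Assumes the OpenVINO IR v10/v11 layout: each network input is a
    <layer type="Parameter"> with a <data element_type=... shape=...> child.
    Accepts the XML document as str or bytes.
    """
    root = ET.fromstring(xml_data)
    inputs = {}
    for layer in root.iter("layer"):
        # Only Parameter layers are network inputs.
        if layer.get("type") != "Parameter":
            continue
        data = layer.find("data")
        if data is None:
            continue
        inputs[layer.get("name")] = (data.get("element_type"), data.get("shape"))
    return inputs


if __name__ == "__main__" and len(sys.argv) > 1:
    # Hypothetical usage: python ir_inputs.py resnet-50-tf.xml
    with open(sys.argv[1], "rb") as f:
        for name, (etype, shape) in ir_input_types(f.read()).items():
            print(f"{name}: element_type={etype} shape=[{shape}]")
```

An input reported as `element_type=f32` here, combined with U8 reported by dla_benchmark, would indicate that the change happens after IR generation rather than in the IR itself.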

Hello @JohnT_Intel,

I think I found the solution: the input is detected as FP32 when the --use_new_frontend Model Optimizer option is used. But some questions are still unanswered. The model is a TensorFlow model, so:

- Why is the IR totally different?

- Why is input precision information not provided in the input_transform_dump report?

Hi,

The IR depends on the prototxt file provided to the OpenVINO application.

Hello @JohnT_Intel,

Sure, but according to the documentation, the --use_new_frontend option doesn't make a difference for TF models:

"--use_new_frontend Force the usage of new Frontend for model conversion into IR. The new Frontend is C++ based and is available for ONNX* and PaddlePaddle* models. Model Conversion API uses new Frontend for ONNX* and PaddlePaddle* by default that means use_new_frontend and use_legacy_frontend options are not specified."

What is the difference?

And why is input precision information not provided in the input_transform_dump report?

Hi,

How did you perform the model optimization? Please refer to https://docs.openvino.ai/2023.2/openvino_docs_MO_DG_prepare_model_convert_model_Convert_Model_From_TensorFlow.html

Hello @JohnT_Intel,

    mo \
        --saved_model_dir "{path_savedModelPath}" \
        --input_shape "{lst_inputShape}" \
        --model_name "{str_modelName}" \
        --output_dir "{path_irTargetPath}" \
        --use_new_frontend
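To isolate the effect of --use_new_frontend, the same SavedModel can be converted twice, once with and once without the flag, into separate directories. The following is a minimal Python sketch under these assumptions: `mo` is on PATH, the options are exactly those in the command above, and the helper name `mo_command`, the `legacy_fe`/`new_fe` directory names, and the script's argument order are hypothetical.

```python
import os
import subprocess
import sys
from typing import List


def mo_command(saved_model_dir: str, input_shape: str, model_name: str,
               output_dir: str, use_new_frontend: bool) -> List[str]:
    """Build the mo argument list used above, optionally adding --use_new_frontend."""
    cmd = [
        "mo",
        "--saved_model_dir", saved_model_dir,
        "--input_shape", input_shape,
        "--model_name", model_name,
        "--output_dir", output_dir,
    ]
    if use_new_frontend:
        cmd.append("--use_new_frontend")
    return cmd


if __name__ == "__main__" and len(sys.argv) == 5:
    # Hypothetical usage:
    #   python convert_both.py <saved_model_dir> <input_shape> <model_name> <output_root>
    saved_model, shape, name, out_root = sys.argv[1:]
    for new_fe in (False, True):
        suffix = "new_fe" if new_fe else "legacy_fe"
        # Each conversion writes to its own directory so the two IRs can be compared.
        subprocess.run(
            mo_command(saved_model, shape, name, os.path.join(out_root, suffix), new_fe),
            check=True,
        )
```

Keeping every other option identical means any difference between the two resulting .xml files (for example, the element_type of their Parameter layers) can be attributed to the frontend choice alone.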

Hi,

May I know which mo user guide you are referring to?

Can you follow the guide at https://docs.openvino.ai/2023.2/openvino_docs_MO_DG_prepare_model_convert_model_Convert_Model_From_TensorFlow.html, specifically the TensorFlow 2 Models section?

Hello @JohnT_Intel,

You can review the Model Optimizer help with the command mo --help, or consult the OpenVINO documentation at https://docs.openvino.ai/2023.2/notebooks/121-convert-to-openvino-with-output.html

Do you believe there is a significant difference between the options I'm using and the guide you are referring to? In the guide, I see that only the --input_model or --saved_model_dir options are specified, along with the --input_shape and --input/--output options for pruning unsupported layers. Notably, the --use_new_frontend option is not mentioned, and, as I explained previously, without it the input is identified as U8 instead of FP32.

Please find the complete TF model, IR model, and compiled graph at the following link: https://consigna.ugr.es/download.php?files_ids=63731

Hi,

I'm not able to download it; it reports that a token is missing.

Hi,

The IR XML file that you are using is for FP32. May I know how you generated the IR, since that is not reflected in it?

Hello @JohnT_Intel,

Yes, the input must be FP32. That's exactly the question: why is the input detected as U8 instead of FP32? The IR model was generated using the Model Optimizer (mo) command given earlier. Please refer to community.intel.com/t5/Application-Acceleration-With/Intel-FPGA-AI-suite-input-data-type/m-p/1552953#M2414.

Hi,

Can we consolidate all the AI Suite discussions into a single forum thread?
