Hi,

I have a kernel that is supposed to contain a piece of local memory. The data is first copied to the local memory and then used further.

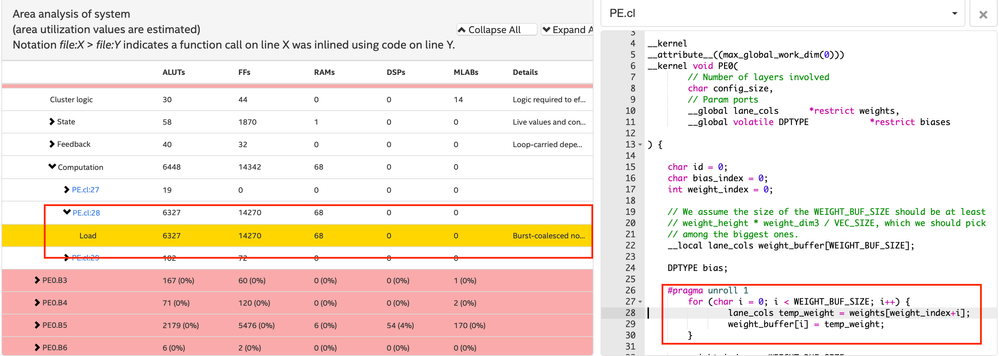

Interestingly, the component that loads the data from global memory into local memory consumes much more RAM than the local memory itself. It also consumes a considerable number of FFs and LUTs. The HTML report says that the load uses a burst-coalesced non-aligned LSU.

I have more than one kernel instance that does the same thing. As a result, the over-utilization caused by the LSU logic prevents me from properly scaling out my design.

Here is the code:

Hint: I'm actually trying to re-implement the Intel DLA design. As you can see, I'm loading the weights into the local memory of each processing element. I have 8 of these PEs. I cannot scale to 48 PEs (claimed in the paper), due to the problem mentioned above.

Now the question is, how can I optimize this design? How can I change the loading part so that a more minimal LSU is inferred? One option I have thought about is having another kernel that loads the weights and sends them to the PEs through channels. But it seems like the number and the width of the channels would become another problem.

Would appreciate any help.

The Block RAM usage is likely due to the cache that the compiler creates automatically; this cache was clearly mentioned in the old report, but any mention of it was removed from the new HTML report. Try marking your "weights" buffer as volatile, or adding "--opt-arg -nocaching" (or -opt-arg=-nocaching for newer versions of the compiler) to the command line to disable the cache, and see if it makes a difference.

However, at the end of the day, having so many kernels with an interface to the host and memory will waste a significant amount of logic and Block RAMs on supporting those interfaces. On top of that, you will have to create a queue for every one of these kernels in the host and pay the coding and kernel launch overhead. I would instead recommend using autorun kernels with the automatic replication provided by the compiler to implement your PEs, with only one non-autorun kernel to read data from memory and feed it to the PEs, and another non-autorun kernel to read from the PEs and write back to memory. If your PEs are regular and there is little to no rate imbalance between them, you can get away with a very shallow channel depth of <20, which will not even use Block RAMs.
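To make the feeder/PE split concrete, here is a minimal software model in plain C. It is not synthesizable OpenCL and it is not the DLA implementation. It only mimics the dataflow: one feeder is the sole reader of "global memory" and pushes weights into one shallow FIFO per PE, and each PE pops from its own FIFO. The names `fifo_t`, `run_model`, `NUM_PES` and `FIFO_DEPTH`, the depth of 4, and the use of a running sum as the "PE computation" are all assumptions made for illustration. In a real design the FIFOs would be channels and the PEs would be autorun kernels.

```c
#include <assert.h>
#include <stddef.h>

#define NUM_PES    8   /* matches the 8 PEs in the question */
#define FIFO_DEPTH 4   /* hypothetical shallow channel depth */

/* Fixed-depth ring buffer standing in for one channel. */
typedef struct {
    float  data[FIFO_DEPTH];
    size_t head, count, max_count;
} fifo_t;

static int fifo_push(fifo_t *f, float v) {
    if (f->count == FIFO_DEPTH) return 0;          /* full: producer stalls */
    f->data[(f->head + f->count) % FIFO_DEPTH] = v;
    f->count++;
    if (f->count > f->max_count) f->max_count = f->count;
    return 1;
}

static int fifo_pop(fifo_t *f, float *v) {
    if (f->count == 0) return 0;                   /* empty: consumer stalls */
    *v = f->data[f->head];
    f->head = (f->head + 1) % FIFO_DEPTH;
    f->count--;
    return 1;
}

/*
 * weights: NUM_PES * n values; PE p owns weights[p*n .. p*n + n - 1].
 * sums:    per-PE running sum (a stand-in for the real PE computation).
 * Returns the deepest occupancy observed on any FIFO.
 */
size_t run_model(const float *weights, size_t n, float sums[NUM_PES]) {
    fifo_t ch[NUM_PES] = {0};
    size_t sent[NUM_PES] = {0}, recv[NUM_PES] = {0};
    size_t deepest = 0;
    int done = 0;

    assert(n > 0);
    for (int p = 0; p < NUM_PES; p++) sums[p] = 0.0f;

    while (done < NUM_PES) {
        /* Feeder: the only agent that touches "global memory". */
        for (int p = 0; p < NUM_PES; p++)
            if (sent[p] < n && fifo_push(&ch[p], weights[p * n + sent[p]]))
                sent[p]++;
        /* PEs: each consumes at most one value per step from its own FIFO. */
        for (int p = 0; p < NUM_PES; p++) {
            float w;
            if (recv[p] < n && fifo_pop(&ch[p], &w)) {
                sums[p] += w;
                if (++recv[p] == n) done++;
            }
        }
    }
    for (int p = 0; p < NUM_PES; p++)
        if (ch[p].max_count > deepest) deepest = ch[p].max_count;
    return deepest;
}
```

Because the feeder and the PEs run at the same rate in this model, the occupancy returned by `run_model` stays far below `FIFO_DEPTH`, which is the intuition behind the shallow channel depth mentioned above. Slowing down one PE in the model would show the corresponding FIFO filling up and the feeder stalling on it.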

P.S. There is no guarantee the implementation mentioned in the paper uses a separate kernel for every PE; some people tend to also consider unrolled loop iterations as PEs... In other words, the Cvec, Wvec and Kvec mentioned in the paper might simply be unroll factors of the different loops in their code. Moreover, the paper says everything is implemented in OpenCL, but we don't really know...

Thanks for the great answer.

I have actually taken the second option (autorun kernels for the PEs and another kernel that feeds the weights). The area was reduced significantly.

With regard to DLA, I'm still confused by your remark that even unrolling can be considered a systolic array. I fully understand systolic arrays, but I cannot really see the connection between unrolling and a systolic array being implied. In other words, I don't know what options the compiler has for inferring different designs when facing an unrolled loop. Are there any resources that explain how the compiler infers different designs for unrolled loops in different situations?

I also have a second question here, if you don't mind: When we compile a kernel, we first see a report on the console (before synthesis) with five metrics: (1) Logic Utilization, (2) ALUTs, (3) Dedicated logic registers, (4) Memory Blocks, and (5) DSP Blocks. On the other hand, the HTML report has (1) ALUTs, (2) FFs, (3) RAMs, (4) DSPs, and (5) MLABs. Unfortunately, these metrics do not match each other (except DSPs and RAMs). It seems to me that Logic Utilization = ALUTs + FFs + MLABs, Dedicated logic registers = FFs, and so on. So, in the HTML report I can see that I can still scale the design, but in the console report the logic utilization is close to 98%. Can I still scale out the design? Which metric should I trust? I am using SDK 18.1-pro, but Quartus 18.0.

>With regard to DLA, I'm still confused by your remark that even unrolling can be considered a systolic array. I fully understand systolic arrays, but I cannot really see the connection between unrolling and a systolic array being implied. In other words, I don't know what options the compiler has for inferring different designs when facing an unrolled loop. Are there any resources that explain how the compiler infers different designs for unrolled loops in different situations?

I personally do not consider loop unrolling as "generating an array of PEs", but some people are very keen on calling it that; this is just a matter of terminology and is unrelated to what the compiler actually does. In fact, I would assume the compiler uses the same algorithm to unroll loops all the time. I can point you to multiple papers where the authors draw an array of PEs to describe their design while all they have actually done in the code is add #pragma unroll to a loop... I suspect this might also be the case with the DLA paper. In fact, the best example of this is probably "Section 9.1.2. Use a Single Kernel to Describe Systolic Arrays" of the Best Practices Guide, which now recommends avoiding autorun kernels on Stratix 10 and using a single-kernel design to describe systolic arrays instead, while the code example just unrolls two loops... This will simply result in a single long and wide pipeline, not what I would call a systolic array, which is a set of disjoint PEs connected with FIFOs and implicitly synchronized using the stall signals of the FIFOs... Please note that much of what I say here is my own personal opinion based on my personal experience, and I could always be wrong.
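For reference, the pattern being described looks roughly like the sketch below. It is written as plain C so it can be compiled anywhere (a regular C compiler may simply ignore or warn about the pragma); the function name `dot_unrolled` and the factor `VEC` are made up for illustration and are not taken from the DLA paper or the Best Practices Guide.

```c
#include <assert.h>

#define VEC 8  /* hypothetical unroll factor */

/* Dot product over n elements; n must be a multiple of VEC. */
float dot_unrolled(const float *a, const float *b, int n) {
    assert(n % VEC == 0);
    float acc = 0.0f;
    for (int i = 0; i < n; i += VEC) {
        float partial = 0.0f;
        /* Fully unrolled: VEC multiply-adds are requested per outer iteration. */
        #pragma unroll
        for (int j = 0; j < VEC; j++)
            partial += a[i + j] * b[i + j];
        acc += partial;
    }
    return acc;
}
```

In an HLS flow, the unrolled inner loop would typically become `VEC` parallel multipliers feeding an adder tree inside one pipeline. A paper might draw that as `VEC` PEs, but there are no separate kernels, FIFOs, or stall signals between them.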

Regarding resource utilization, MLAB estimation has only been added to the HTML report but not to the area report summary printed to stdout. The mapping of the rest of the resources is like this:

ALUT from HTML report = ALUT from summary

FF from HTML report = Dedicated logic registers from summary

RAMs from HTML report = Memory blocks from summary

DSP from HTML report = DSP from summary

Logic utilization from summary (supposed to estimate ALM usage) = ALUT + Dedicated logic registers/FF

You should remember that the report just uses a resource model Intel has come up with and can be highly inaccurate at times (both over- and under-estimating). Specifically, the logic utilization estimate is very inaccurate and usually too high, since it does not account for the fact that many of the required ALUTs and FFs will be mapped to the same ALM; then again, estimating this mapping with any reasonable degree of accuracy would be very difficult. What I do is ignore the logic utilization entirely and just place and route the design to see what happens; if the logic does turn out to be over-utilized, I go back and modify my kernel.
