Hi,

I have a reference design in which I implemented a systolic array (the kernels are generated by a script). All the `PE` kernels are identical except for their names, and they are connected through a chain of channels.
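For readers who want to picture the setup, here is a minimal sketch of such a generator script. The real script and kernel body are not shown in this thread, so everything here is an assumption: the function name `generate_systolic_source`, the channel array `chain`, the kernel arguments, and the multiply-accumulate body are all hypothetical. It only illustrates "identical PEs, different names, chained through channels", using the `cl_intel_channels` extension; the feeder for `chain[0]` and the drain for the last link are left out.

```python
# Hypothetical generator: emits N identical PE kernels that differ only in
# name and in which links of the channel chain they read from and write to.
PE_TEMPLATE = """\
__kernel void PE{idx}(__global int *restrict out, const char weight, const int n) {{
    int acc = 0;
    for (int i = 0; i < n; i++) {{
        char x = read_channel_intel(chain[{idx}]);   // from the previous PE
        acc += x * weight;                           // char MUL, ideally a DSP
        write_channel_intel(chain[{nxt}], x);        // to the next PE
    }}
    out[{idx}] = acc;
}}
"""


def generate_systolic_source(num_pes: int) -> str:
    """Return OpenCL source for a chain of `num_pes` placeholder PE kernels."""
    if num_pes < 1:
        raise ValueError("num_pes must be at least 1")
    header = (
        "#pragma OPENCL EXTENSION cl_intel_channels : enable\n"
        f"channel char chain[{num_pes + 1}];\n\n"
    )
    kernels = [PE_TEMPLATE.format(idx=i, nxt=i + 1) for i in range(num_pes)]
    return header + "\n".join(kernels)


if __name__ == "__main__":
    print(generate_systolic_source(2))
```

Scaling the array then just means calling the generator with a larger `num_pes`, which is the step that runs into the DSP mapping problem described below.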

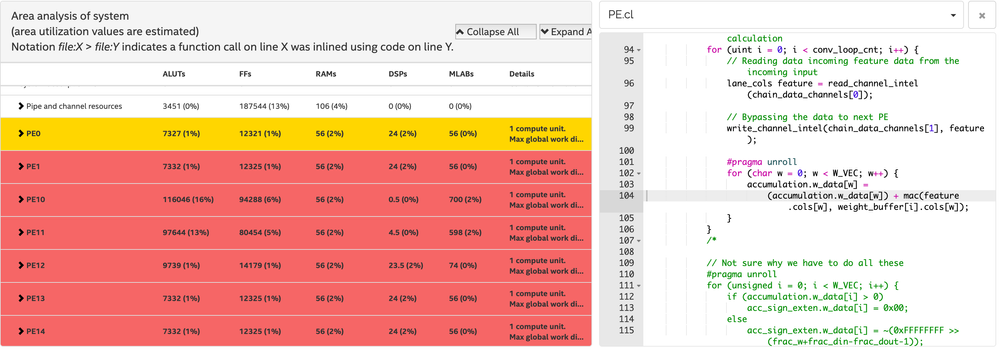

Looking at the HTML report, I noticed some odd behavior.

As you can see in the image, `PE10` and `PE11` use ALUTs and FFs instead of DSPs to implement the computation, while the other kernels use DSPs. This has led to over-utilization of resources and prevents me from further increasing the number of kernels in my systolic array.

Why is this happening, and how can I avoid it?

Thanks

- Tags:

- OpenCL™


What is your data type? For integer and likely also fixed-point types, I have seen cases where, when the compiler detects possible DSP over-utilization, it maps the remaining operations to logic.

We are using `char` for now. Actually, in our case we are only utilizing around 23% of all the DSPs.

Then you could be running into this [age-old] bug:

https://forums.intel.com/s/question/0D50P00003yyTf2SAE/how-to-share-dsp-correctly-?language=en_US

Essentially, the compiler claims it is packing multiple low-precision operations into one DSP, but in fact it is not. As a result, DSP over-utilization is detected internally and the extra operations are mapped to logic, while the report does not reflect the over-utilization. You might have better luck using the arbitrary precision integer extension rather than the `char` data type.

Thanks for the help.

I will go ahead and use the arbitrary precision variables and see how it behaves.

I have tried that option. It fixed the problem when I set the number of PEs to 16, but when I scale it to 32, the problem arises again. The PEs that use ALUTs and FFs instead of DSPs waste a lot of resources. What are the other options here? Should I use `int` instead of `char` to stop the compiler from packing multiple MACs into one DSP?

Well, here is how it works: with `char`, every MUL should take half a DSP, but the compiler calculates DSP usage internally as if the operands were `int`, which requires 2 DSPs per MUL. Hence, it thinks the DSPs are over-utilized as soon as you hit the 25% mark (which would equal 100% DSP utilization if you were actually using `int`) and maps everything else to logic. With your new method, each MUL again uses half a DSP, but the internal calculation assumes one DSP per MUL, so over-utilization is detected internally at 50% DSP usage. Now, if you place and route the design and the mapper does its job correctly, you will get 25% or 50% DSP utilization in the end, but the extra operations that the OpenCL compiler mapped to logic will not be remapped to DSPs. Essentially, because of this bug, I don't think it is possible to fully use the DSPs with low-precision data types in OpenCL.
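To make the 25% and 50% figures concrete, here is a small sketch of the accounting described above. It is only a model of the explanation in this post, not of the compiler's actual internals; the function names and the device size of 1000 DSPs are hypothetical.

```python
from fractions import Fraction


def overuse_threshold(actual_dsp_per_mul, assumed_dsp_per_mul):
    """Real DSP utilization at which the internal estimate reaches 100%."""
    return Fraction(actual_dsp_per_mul) / Fraction(assumed_dsp_per_mul)


def split_muls(total_dsps, requested_muls, assumed_dsp_per_mul):
    """Return (MULs kept on DSPs, MULs pushed to logic) under the internal estimate."""
    budget = int(Fraction(total_dsps) / Fraction(assumed_dsp_per_mul))
    on_dsp = min(requested_muls, budget)
    return on_dsp, requested_muls - on_dsp


HALF = Fraction(1, 2)  # real cost of one char MUL, per the explanation above

# char: the compiler budgets 2 DSPs per MUL, so it gives up at 25% real usage.
char_limit = overuse_threshold(HALF, 2)    # Fraction(1, 4)

# Arbitrary precision: it budgets 1 DSP per MUL, so it gives up at 50%.
apint_limit = overuse_threshold(HALF, 1)   # Fraction(1, 2)

# Hypothetical device with 1000 DSPs and a design that needs 1200 char MULs:
on_dsp, on_logic = split_muls(1000, 1200, 2)   # (500, 700)
```

In the hypothetical case, the 500 MULs that stay on DSPs really occupy only 250 DSPs (25%), while the other 700 are built from ALUTs and FFs.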

If you want, you can use as many PEs as fit within the 50% DSP utilization limit with your current data type and just place and route it; that would give you the same OP/s as using a larger data type that uses one DSP per MUL. But of course the whole point of using lower precision is to achieve higher OP/s, and that does not seem to be possible with the current versions of the compiler. I don't think this bug has ever been fixed, nor do I understand why the whole world is not reporting it to Intel, considering the number of people who are doing low-precision AI/DL on FPGAs these days.
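The "same OP/s" claim is simple arithmetic. Here is a rough sketch, assuming the same clock frequency in all cases and a hypothetical device with 1000 DSPs (`max_muls` is an illustrative helper, not an SDK function):

```python
from fractions import Fraction


def max_muls(total_dsps, dsp_per_mul, usable_fraction):
    """Number of multipliers that fit in the usable share of the DSPs."""
    return int(Fraction(total_dsps) * Fraction(usable_fraction) / Fraction(dsp_per_mul))


TOTAL_DSPS = 1000  # hypothetical device size

# Low precision, half a DSP per MUL, but capped at 50% by the compiler bug.
low_precision_capped = max_muls(TOTAL_DSPS, Fraction(1, 2), Fraction(1, 2))

# Wider type, one DSP per MUL, able to use all DSPs.
wide_type = max_muls(TOTAL_DSPS, 1, 1)

# What low precision should give if the DSPs could be fully used.
low_precision_ideal = max_muls(TOTAL_DSPS, Fraction(1, 2), 1)
```

Under these assumptions the capped low-precision design and the wider-type design both fit 1000 multipliers, while the ideal low-precision case would fit 2000, which is the 2x that the bug takes away.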

P.S. You can also experiment with bit masking, but there is no guarantee it would change anything. I remember having a long discussion with someone else on the forum on this topic, for which I also did some coding and experimentation and reported the results, but I cannot find the thread anymore. Using HDL libraries could bypass the issue altogether, though, if you are willing to go through the trouble of implementing it.

Alright, I have some good news.

It seems the issue is resolved in SDK 19.1. Now I get 32 DSP usage for all PEs :D

That is good to hear. Most existing Arria 10 boards (more or less everything except a10_ref) will never get a BSP compatible with 19.1, though. :(
