Hello!

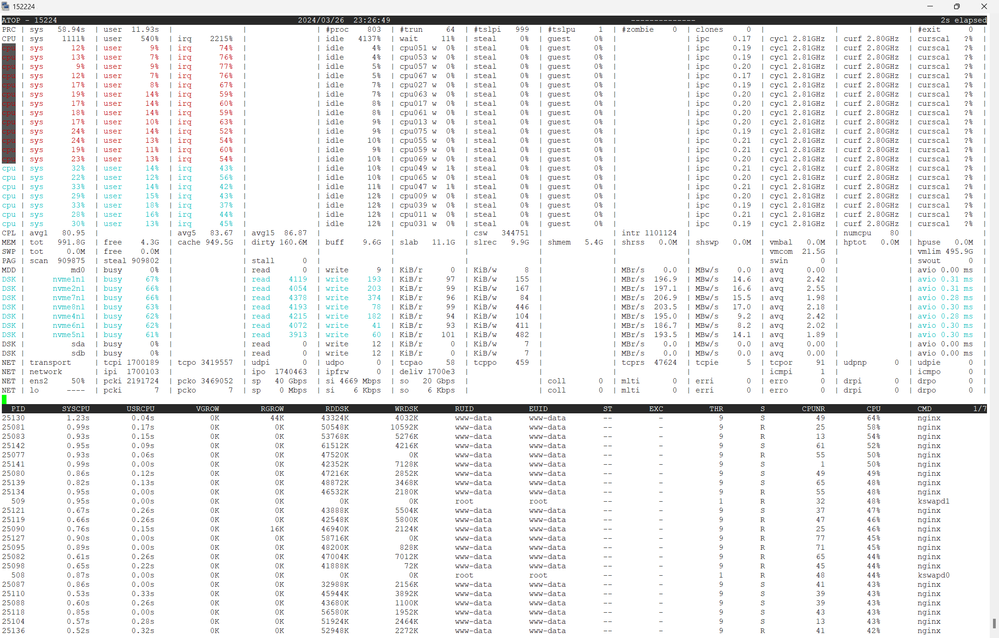

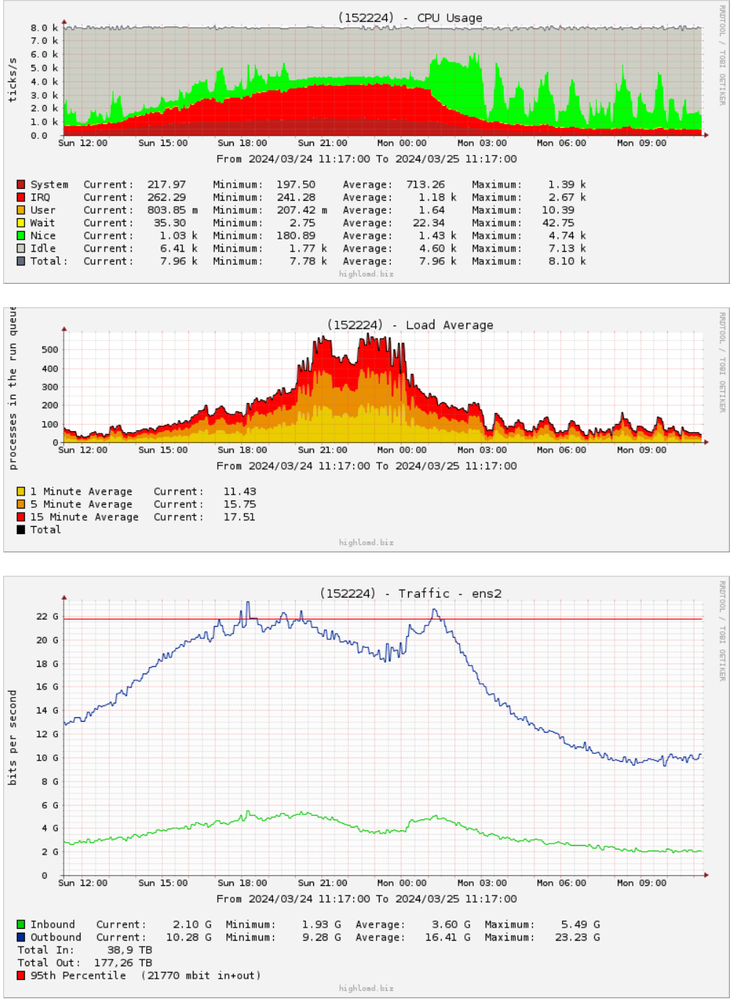

I have a problem with an Intel XL710: once traffic reaches 22 Gbps, some CPU cores hit 100% IRQ load and throughput drops.

The firmware and driver are the latest:

# ethtool -i ens2

driver: i40e

version: 2.24.6

firmware-version: 9.40 0x8000ecc0 1.3429.0

expansion-rom-version:

bus-info: 0000:d8:00.0

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: yes

I read this guide and tried every configuration it suggests, but nothing changed:

382 set_irq_affinity local ens2

384 set_irq_affinity all ens2

387 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 0 tx-usecs 0

389 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 5000 tx-usecs 20000

391 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 10000 tx-usecs 20000

393 ethtool -g ens2

394 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 84 tx-usecs 84

396 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 62 tx-usecs 62

398 ethtool -S ens2 | grep drop

399 ethtool -S ens2 | grep drop

400 ethtool -S ens2 | grep drop

401 ethtool -S ens2 | grep drop

402 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 336 tx-usecs 84

403 ethtool -S ens2 | grep drop

404 ethtool -S ens2 | grep drop

406 ethtool -S ens2 | grep drop

407 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 672 tx-usecs 84

408 ethtool -S ens2 | grep drop

409 ethtool -S ens2 | grep drop

411 ethtool -S ens2 | grep drop

412 ethtool -S ens2 | grep drop

425 ethtool -S ens2 | grep drop

426 ethtool -S ens2 | grep drop

427 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 8400 tx-usecs 840

428 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 4200 tx-usecs 840

430 ethtool -S ens2 | grep drop

431 ethtool -S ens2 | grep drop

432 ethtool -S ens2 | grep drop

433 ethtool -S ens2 | grep drop

434 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 4200 tx-usecs 1680

435 ethtool -S ens2 | grep drop

436 ethtool -S ens2 | grep drop

439 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 3200 tx-usecs 3200

469 ethtool -a ens2

472 ethtool ens2

473 ethtool -i ens2

475 ethtool -i ens2

476 ethtool ens2

482 ethtool -C ens2 adaptive-rx on

484 ethtool -c ens2

486 ethtool -C ens2 adaptive-tx on

487 ethtool -c ens2

492 history | grep ens2

494 ethtool -m ens2

499 ethtool -C ens2 adaptive-rx off adaptive-tx off rx-usecs 4200 tx-usecs 1600

501 history | grep ens2

Server configuration:

80 cores Intel(R) Xeon(R) Gold 6230 CPU @ 2.10GHz

RAM 960 GB

8 x SAMSUNG MZQLB7T6HMLA-000AZ NVME disks

Ethernet Controller XL710 for 40GbE QSFP+ (rev 02)

What can be done with the settings of this network card to solve the problem?

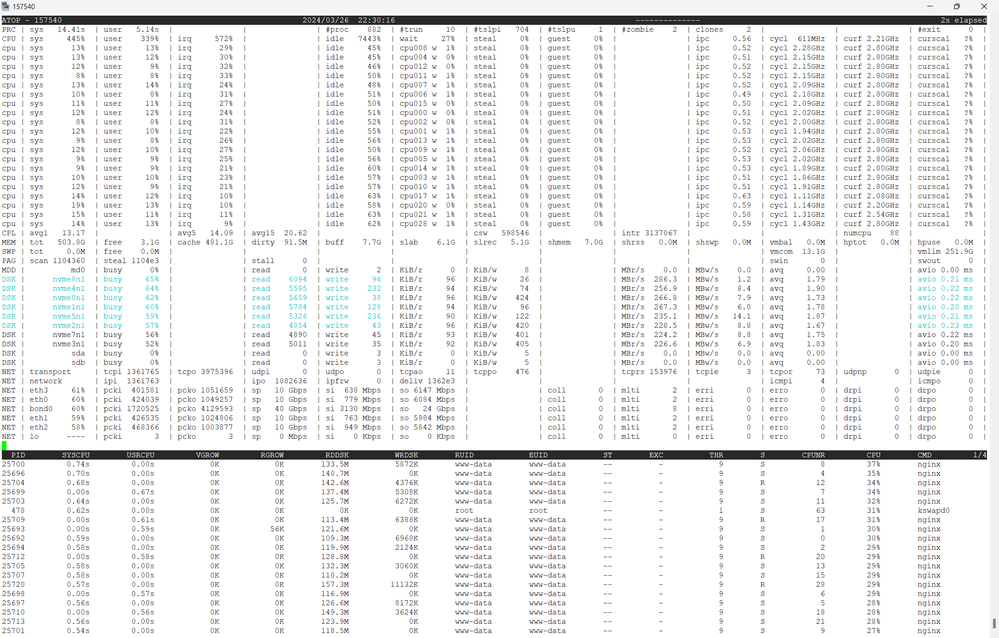

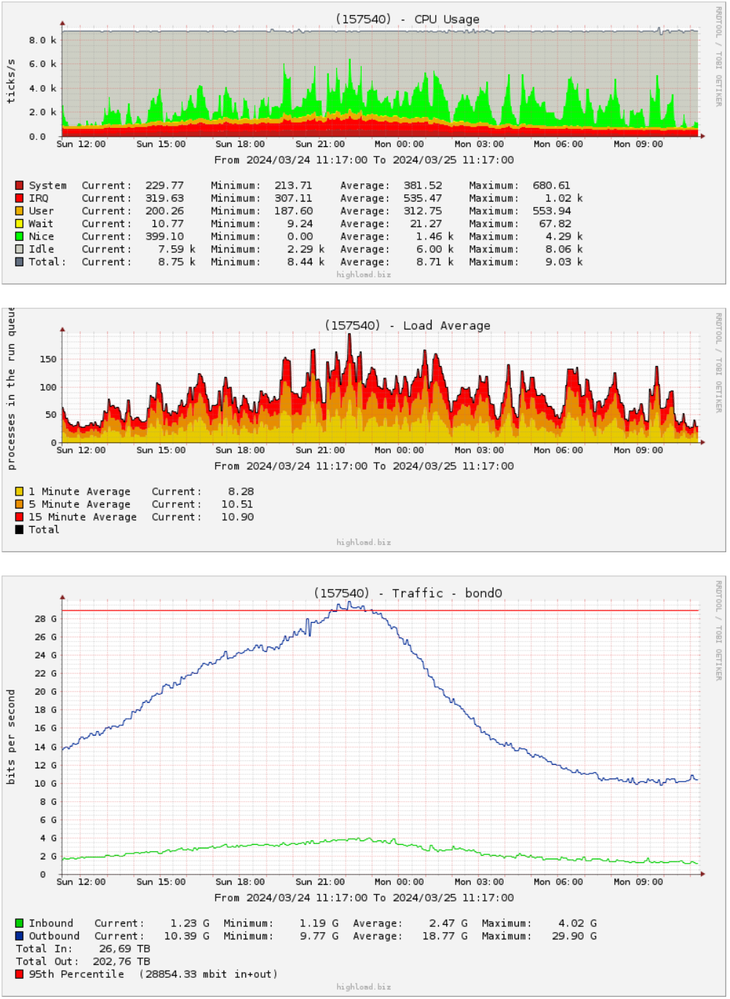

On another server with a similar configuration but different network cards, everything is fine.

Server configuration:

88 cores Intel(R) Xeon(R) CPU E5-2699 v4 @ 2.20GHz

RAM 512 GB

8 x SAMSUNG MZQLB7T6HMLA-00007 NVME disks

4 x 82599ES 10-Gigabit SFI/SFP+ Network Connection

Hello AndriiV,

Greetings for the day!

Would it be helpful to set up a live debug call with you? If so, please let us know a suitable day and time this week. We would suggest Thursday or Friday, but we're flexible and can accommodate your availability.

Regards,

Azeem Ulla

Intel Customer Support Technician

intel.com/vroc

Hello Azeem!

You can call me on Thursday by phone, FaceTime, Telegram, Viber, or Signal, or write to me on any of these messengers. I will be waiting for your call between 10:00 and 16:00 GMT+2.

Hello AndriiV,

As discussed over the call, we will arrange the callback accordingly.

Best regards,

Simon

Intel Support Engineer

Hi AndriiV,

Thank you for patiently waiting for us.

Unfortunately, the performance engineering team is not available until next week. We will check with them about their availability and try to set up a call. We will then share a date and time with you to confirm that it is convenient.

Regards,

Sazzy_Intel

Hello AndriiV,

Greetings!

This is a follow up. Kindly let us know if you were able to find the details provided in the last email.

Kindly reply back so that we can continue checking this further.

Regards,

Sachin KS

Intel Customer Support Technician

intel.com/vroc

Hello Sachin!

Sorry for the late answer.

About the cables: I have already given their names in this topic. Before the XXV710 we used an XL710 card, and it had the same problems.

As I also wrote earlier, we have another server with an XXV710 card connected directly to the same switch on the same port (in 4x25 mode: 2x25 to one server and 2x25 to the other). That other server rarely receives more than 1 Gbit/s of incoming traffic, so everything is fine there even with 35 Gbit/s of outgoing traffic.

Hi AndriiV,

Good Day!

Thank you for your response.

We will check this internally and get back to you as soon as we have an update.

Regards,

Simon

Intel Customer Support Technician

intel.com/vroc

Hello!

A few days ago I updated the firmware to the latest version, 9.53. I also disabled LLDP, as recommended when Linux bonding is used.

New comparison of the two servers, 2x25 Gbps and 4x10 Gbps:

Hi AndriiV

Greetings!

Apologies for the delayed response.

We have reached out to our Engineering team for an update on this case and are awaiting their reply. Once we receive an update, we will share it with you as soon as possible.

Regards

Pujeeth

Intel customer support technician

Hi AndriiV

Good day!

This is a follow-up. We are still waiting for an update on this case from the Engineering team. Once we receive it, we will share it with you as soon as possible.

Regards

Sachin KS

Intel customer support technician

Hello AndriiV,

Good day!

Please share the "ethtool -S" statistics and "dmesg" logs of the new setup (as mentioned in point 3 below, from your last feedback to us). This will help us compare the old setup with the new one.

The high "port.rx_dropped" counts suggest potential hardware issues, and the multiple link Down/Up events in your "dmesg" logs could indicate a problem with the cables.

------------------------------------------------------------

Previous response:

1. Regarding the cables, I have already provided the names in this discussion.

2. We have previously used the XXV710 - XL710 card, and the same issues occurred.

3. Additionally, we have another server with the XXV710 card, which is connected directly to the same switch and port (in 4x25 mode), where one server uses 2x25 ports and the other uses 2x25.

4. However, in the second server, the incoming traffic rarely exceeds 1 Gbit/s, and everything functions fine there, even with 35 Gbit/s of outgoing traffic.

Thank You & Best Regards,

Ragulan_Intel

Hello Ragulan!

First of all, yesterday I made some changes:

root@152224-02-fdc:/#ethtool -L ens1f0 combined 40

root@152224-02-fdc:/#ethtool -L ens1f0 combined 40

root@152224-02-fdc:/#ethtool -K ens1f0 gro on

root@152224-02-fdc:/#ethtool -K ens1f1 gro on

root@152224-02-fdc:/#ethtool -K ens1f0 hw-tc-offload on

root@152224-02-fdc:/#ethtool -K ens1f1 hw-tc-offload on

root@152224-02-fdc:/#ethtool -K ens1f0 ufo on

root@152224-02-fdc:/#ethtool -K ens1f1 ufo on

Second, I made some changes on Jan 5:

root@152224-02-fdc:/#ethtool -C ens1f0 adaptive-rx off adaptive-tx off rx-usecs 1000 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 100 tx-usecs-high 200; ethtool -C ens1f1 adaptive-rx off adaptive-tx off rx-usecs 1000 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 100 tx-usecs-high 200

root@152224-02-fdc:/#ethtool -C ens1f0 adaptive-rx off adaptive-tx off rx-usecs 1000 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 100; ethtool -C ens1f1 adaptive-rx off adaptive-tx off rx-usecs 1000 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 100

root@152224-02-fdc:/#ethtool -C ens1f0 adaptive-rx off adaptive-tx off rx-usecs 1000 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 200; ethtool -C ens1f1 adaptive-rx off adaptive-tx off rx-usecs 1000 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 200

root@152224-02-fdc:/#ethtool -C ens1f0 adaptive-rx off adaptive-tx off rx-usecs 1500 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 400; ethtool -C ens1f1 adaptive-rx off adaptive-tx off rx-usecs 1500 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 400

root@152224-02-fdc:/#ethtool -C ens1f0 adaptive-rx off adaptive-tx off rx-usecs 1500 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 236; ethtool -C ens1f1 adaptive-rx off adaptive-tx off rx-usecs 1500 tx-usecs 2000 rx-frames-irq 1024 tx-frames-irq 2048 rx-usecs-high 236

root@152224-02-fdc:/#ethtool -C ens1f0 rx-usecs-high 5; ethtool -C ens1f1 rx-usecs-high 5

root@152224-02-fdc:/#ethtool -C ens1f0 rx-frames-irq 2048; ethtool -C ens1f1 rx-frames-irq 2048

root@152224-02-fdc:/#ethtool -C ens1f0 rx-frames-irq 4096; ethtool -C ens1f1 rx-frames-irq 4096

root@152224-02-fdc:/#ethtool -C ens1f0 adaptive-rx on adaptive-tx on

root@152224-02-fdc:/#ethtool -C ens1f1 adaptive-rx on adaptive-tx on

Now logs for Feb 2 - Feb 4:

root@152224-02-fdc:/# grep ens1f /var/log/messages

Feb 3 21:23:08 localhost kernel: [13168880.907253] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.919189] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.927132] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.935105] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.947220] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.959104] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.967104] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.979097] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168880.991100] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.003119] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.011203] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.023093] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.035094] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.047133] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.059119] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.067103] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.075140] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.087094] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.095134] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.103188] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.111235] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.119254] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.127213] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.139098] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.151093] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.159109] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.171100] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.179162] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.191094] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.199101] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.211141] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.223133] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.231229] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.285548] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.291115] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.299504] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.307109] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.319535] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.331341] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.343223] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.351186] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.359102] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.371149] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.383570] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.391129] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.403137] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.415313] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.423185] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.435098] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.447275] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.459162] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.467180] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.475301] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.483195] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.491172] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.503230] bond0: link status down for interface ens1f0, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.619095] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.631094] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.643093] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.655095] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.667095] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:08 localhost kernel: [13168881.679094] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.687093] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.695089] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.707090] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.715089] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.727088] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.735098] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.747090] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.755090] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.767090] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.779100] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.787098] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.799116] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.811110] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.819097] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.827122] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.835096] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.843095] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.851093] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.863121] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.871099] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.883096] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.895116] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.907104] bond0: link status down for interface ens1f1, disabling it in 200 ms

Feb 3 21:23:09 localhost kernel: [13168881.915096] bond0: link status down for interface ens1f1, disabling it in 200 ms

Logs for Jan 26 - Feb 1 (nothing for XXV710 NICs):

root@152224-02-fdc:/# grep ens1f /var/log/messages.1

root@152224-02-fdc:/#

Logs for Jan 5 - Jan 25:

root@152224-02-fdc:/# zgrep ens1f /var/log/messages.*.gz

/var/log/messages.4.gz:Jan 5 21:06:33 localhost kernel: [10662292.076904] i40e 0000:86:00.0 ens1f0: tx-usecs-high is not used, please program rx-usecs-high

/var/log/messages.4.gz:Jan 5 21:06:33 localhost kernel: [10662292.078441] i40e 0000:86:00.1 ens1f1: tx-usecs-high is not used, please program rx-usecs-high

/var/log/messages.4.gz:Jan 5 21:10:11 localhost kernel: [10662510.556244] i40e 0000:86:00.0 ens1f0: Invalid value, rx-usecs-high range is 0-236

/var/log/messages.4.gz:Jan 5 21:10:11 localhost kernel: [10662510.557986] i40e 0000:86:00.1 ens1f1: Invalid value, rx-usecs-high range is 0-236

/var/log/messages.4.gz:Jan 5 21:16:43 localhost kernel: [10662902.287278] i40e 0000:86:00.0 ens1f0: Interrupt rate limit rounded down to 4

/var/log/messages.4.gz:Jan 5 21:16:43 localhost kernel: [10662902.291367] i40e 0000:86:00.1 ens1f1: Interrupt rate limit rounded down to 4

As you can see, there were no "link up" or "link down" notifications during the last month. They appeared only yesterday, when I changed the channel parameters.

Now the "port.rx_dropped" statistics for both servers, which share one cable:

Server 152224, with the problematic XXV710 card:
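The i40e messages from Jan 5 in the log above also show how the driver treated the rx-usecs-high attempts: a request of 400 was rejected ("range is 0-236") and a request of 5 was "rounded down to 4". A minimal sketch of that behaviour follows. It assumes, based only on those two messages, that accepted values are rounded down to a multiple of 4 microseconds; `normalize_rx_usecs_high` is a hypothetical helper for illustration, not part of ethtool or the driver.

```shell
#!/bin/sh
# Hypothetical helper: predict what the i40e driver will do with a
# requested rx-usecs-high value.
# Assumptions (inferred from the kernel messages in this thread):
#   - valid range is 0-236
#   - accepted values are rounded down to a multiple of 4
normalize_rx_usecs_high() {
    req=$1
    if [ "$req" -lt 0 ] || [ "$req" -gt 236 ]; then
        echo "invalid: rx-usecs-high range is 0-236" >&2
        return 1
    fi
    # Integer division drops the remainder, so this rounds down to a multiple of 4.
    echo $(( req / 4 * 4 ))
}

normalize_rx_usecs_high 5      # prints 4, matching "rounded down to 4"
normalize_rx_usecs_high 236    # prints 236
normalize_rx_usecs_high 400 2>/dev/null || echo "400 rejected"
```

Checking a value this way before calling `ethtool -C` avoids silently ending up with a different rate limit than the one requested.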

root@152224-02-fdc:/# date ; ethtool -S ens1f0 | grep port.rx_dropped

Tue 04 Feb 2025 06:13:05 PM EET

port.rx_dropped: 96468727

root@152224-02-fdc:/# date ; ethtool -S ens1f0 | grep port.rx_dropped

Tue 04 Feb 2025 06:13:52 PM EET

port.rx_dropped: 96489688

root@152224-02-fdc:/# date ; ethtool -S ens1f0 | grep port.rx_dropped

Tue 04 Feb 2025 08:32:44 PM EET

port.rx_dropped: 119869994

root@152224-02-fdc:/# date ; ethtool -S ens1f0 | grep port.rx_dropped

Tue 04 Feb 2025 08:47:07 PM EET

port.rx_dropped: 146696543

root@152224-02-fdc:/# date ; ethtool -S ens1f0 | grep port.rx_dropped

Tue 04 Feb 2025 08:54:44 PM EET

port.rx_dropped: 172873508

root@152224-02-fdc:/# date ; ethtool -S ens1f1 | grep port.rx_dropped

Tue 04 Feb 2025 06:14:41 PM EET

port.rx_dropped: 105123354

root@152224-02-fdc:/# date ; ethtool -S ens1f1 | grep port.rx_dropped

Tue 04 Feb 2025 08:32:54 PM EET

port.rx_dropped: 118214611

root@152224-02-fdc:/# date ; ethtool -S ens1f1 | grep port.rx_dropped

Tue 04 Feb 2025 08:47:15 PM EET

port.rx_dropped: 131517867

root@152224-02-fdc:/# date ; ethtool -S ens1f1 | grep port.rx_dropped

Tue 04 Feb 2025 08:54:49 PM EET

port.rx_dropped: 144578528

As you can see, the counter is growing on both interfaces.

Second server, 158178:
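The samples above can be turned into an average drop rate by dividing the counter difference by the elapsed time. The sketch below does this for two pairs of ens1f0 samples from this post; `drop_rate` is a hypothetical helper name, and the intervals (47 s and 457 s) are computed by hand from the `date` output.

```shell
#!/bin/sh
# drop_rate PREV CUR SECONDS
# Prints the average number of drops per second (integer division)
# between two readings of port.rx_dropped.
drop_rate() {
    echo $(( ($2 - $1) / $3 ))
}

# ens1f0, 06:13:05 PM -> 06:13:52 PM (47 s)
drop_rate 96468727 96489688 47       # prints 445

# ens1f0, 08:47:07 PM -> 08:54:44 PM (457 s)
drop_rate 146696543 172873508 457    # prints 57280
```

The rate in the evening window is roughly two orders of magnitude higher than in the earlier one, which is easier to see this way than from the raw counters.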

root@158178-12-fdc:/# date ; ethtool -S ens2f0 | grep port.rx_dropped

Tue 04 Feb 2025 08:55:31 PM EET

port.rx_dropped: 0

root@158178-12-fdc:/# date ; ethtool -S ens2f0 | grep port.rx_dropped

Tue 04 Feb 2025 08:58:11 PM EET

port.rx_dropped: 0

root@158178-12-fdc:/# date ; ethtool -S ens2f0 | grep port.rx_dropped

Tue 04 Feb 2025 10:12:34 PM EET

port.rx_dropped: 0

root@158178-12-fdc:/# date ; ethtool -S ens2f1 | grep port.rx_dropped

Tue 04 Feb 2025 08:55:42 PM EET

port.rx_dropped: 0

root@158178-12-fdc:/# date ; ethtool -S ens2f1 | grep port.rx_dropped

Tue 04 Feb 2025 08:58:03 PM EET

port.rx_dropped: 0

root@158178-12-fdc:/# date ; ethtool -S ens2f1 | grep port.rx_dropped

Tue 04 Feb 2025 10:12:29 PM EET

port.rx_dropped: 0

As you can see, the counter is not growing on either interface (the cable is the same).

I also want to remind you that at first we used the XL710 card on server 152224, with a completely different cable. The "port.rx_dropped" statistics for it are in the first messages of this topic.

Hello AndriiV,

Good day!

This is a gentle follow-up on this thread. Please share the "ethtool -S" statistics and "dmesg" logs of the new setup (as mentioned in point 3 of your last feedback to us). This will help us compare the old setup with the new one.

Thank You & Best Regards,

Ragulan_Intel

Thanks for the reminder!

I also want to draw attention to the ceiling of 43 Gbps when using Linux bonding with 2x25 Gbps.

Hi AndriiV,

Thank you for your response.

Please allow us some time to check this internally. We will get back to you as soon as we have an update.

Regards,

Ragulan_Intel

Hello AndriiV,

Hope this message finds you well.

We would appreciate it if you could share the "ethtool -S" statistics and "dmesg" logs of the new setup, so that our engineering team can compare the old setup with the new one and, in parallel, look into the ceiling of 43 Gbps when using Linux bonding with 2x25 Gbps.

Thank You & Best Regards,

Ragulan_Intel

Hello!

Maybe I didn't understand what you want. But I provided statistics and dmesg logs (/var/log/messages) in this message:

I also gave full access (root) to both servers with XXV710 cards. You can independently find any information on them.

I also thought it would be possible to run synthetic tests between these servers, loading first one network card and then the other with more than 4 Gbit/s of incoming traffic. These tests can be performed between 5:00 and 14:00 GMT.

Hi Team,

Thank you for your response.

Please allow me some time to check with my team. I will get back to you as soon as I have an update.

Best regards,

Simon

Intel Customer Support Technician

Hi AndriiV,

We would like to inform you that we are still working internally on your request. Once we have an update, we will get back to you.

Thank you for your patience.

Best regards,

Azeem

Intel Customer Support Technician

Hi Andrii_Vasylyshyn,

Thank you for patiently waiting for us. We have some updates and insights on the current status of this issue that we would like to share with you. Please see below for our analysis of the logs you shared:

1. You enabled GRO, HW-TC-OFFLOAD and UFO: GRO is recommended for TCP traffic, UFO is recommended for UDP traffic/fragmentation (if the kernel supports it), and TC offload is for traffic prioritization.

Question: Are you using QoS offload/traffic prioritization and UDP fragmentation?

2. Changes made on Jan 5: you used the ethtool -C command to configure interrupt moderation settings on the ens1f0 and ens1f1 interfaces.

Suggestion: it is enough to configure adaptive-rx/adaptive-tx off, rx-usecs/tx-usecs and rx-usecs-high. The rx-usecs and tx-usecs values you used are very high (1 ms and 2 ms); there is no need for such large numbers. You also tried turning adaptive-rx/adaptive-tx on; do not enable them. Here is the command to use:

ethtool -C $NIC_INTERFACE adaptive-rx off adaptive-tx off rx-usecs 100 tx-usecs 100 rx-usecs-high 100

3. Logs for Feb2-Feb 4:

One of the bonded interfaces is down. Kindly resolve the issue. If the problem persists, it will impact performance.

4. Logs for Jan5-Jan25:

The log also confirms the same observation mentioned in point 2 above.

5. "ethtool -S ens1f0 | grep port.rx_dropped" stats collected in February:

The output shows many drops, which means that packets are being received by the NIC but not passed to the OS. Possible causes and remedies:

a. The RX buffer is too small. Increase it using the command: ethtool -G $NIC_INTERFACE rx 4096 tx 4096

b. Double-check the IRQ affinity. Intel provides the "set_irq_affinity" script in the driver tarball. Please use that script to set the affinity.

c. Set the number of queues using the command: ethtool -L $NIC_INTERFACE combined $Num_Core

d. Set the interrupt coalescing using the same command mentioned in point 2 above.

e. Raise the kernel socket buffer maximums using the command: sysctl -w net.core.rmem_max=16777216 ; sysctl -w net.core.wmem_max=16777216
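Suggestions a through e above can be collected into one dry-run sketch that only prints the commands, so they can be reviewed before anything is changed on a live server. `print_tuning_plan` is a hypothetical helper name. The `local` argument to `set_irq_affinity` is taken from the first post in this thread, and the queue count of 40 from the earlier `ethtool -L` command. Setting the queue count before the affinity is an assumption made here, since changing the number of queues may reset IRQ affinity.

```shell
#!/bin/sh
# print_tuning_plan IFACE QUEUES
# Prints (does not execute) the tuning commands suggested in this reply.
print_tuning_plan() {
    nic=$1
    queues=$2
    # a. larger RX/TX rings
    echo "ethtool -G $nic rx 4096 tx 4096"
    # c. number of combined queues (placed before affinity; see assumption above)
    echo "ethtool -L $nic combined $queues"
    # b. IRQ affinity via the script shipped in the driver tarball
    echo "set_irq_affinity local $nic"
    # d. interrupt coalescing, same values as in point 2
    echo "ethtool -C $nic adaptive-rx off adaptive-tx off rx-usecs 100 tx-usecs 100 rx-usecs-high 100"
    # e. kernel socket buffer maximums
    echo "sysctl -w net.core.rmem_max=16777216"
    echo "sysctl -w net.core.wmem_max=16777216"
}

print_tuning_plan ens1f0 40
```

Once the printed plan looks right, it could be applied with something like `print_tuning_plan ens1f0 40 | sh`, run as root and repeated for the second bonded interface.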

Earlier, our team was unable to reproduce the issue because we ran the test with a Platinum processor (we normally use Platinum processors for better performance), whereas you are using an Intel(R) Xeon(R) Gold 6230 CPU @ 2.10GHz and an Intel(R) Xeon(R) CPU E5-2699 v4 @ 2.20GHz.

Please be informed that we now have a 6230 processor and have configured the setup. Traffic testing is yet to be done.

Once completed, we will update the results.

Regards,

Sazzy_Intel

Intel Customer Support Technician
