I am running an MPI sample program on the Intel DevCloud cluster. It runs fine on a single node, but I am having trouble running it in a multi-node cluster environment. Please advise.

I specify 4 processes when launching the program with mpirun. On a single node I get the expected rank information (ranks 0, 1, 2, and 3), but on multiple nodes only 2 processes run and the rank information is incorrect.

- Execution on a single compute node:

u171596@s001-n004:~/OneAPI_Project/intel_ci/mpiTest$ mpiicpc -cxx=dpcpp -fsycl mpiTest.cpp -o hello_mpi_dpcpp

u171596@s001-n004:~/OneAPI_Project/intel_ci/mpiTest$ cat mpiTest.cpp

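#include <mpi.h>
#include <iostream>
#include <sched.h>   // sched_getcpu()

using namespace std;

int main(int argc, char** argv) {
    MPI_Init(NULL, NULL);

    int rank;   // rank of this process in MPI_COMM_WORLD
    int world;  // total number of processes in MPI_COMM_WORLD
    MPI_Comm_rank(MPI_COMM_WORLD, &rank);
    MPI_Comm_size(MPI_COMM_WORLD, &world);

    // Print the core this process runs on, then its rank and the world size.
    cout << "Core " << sched_getcpu() << endl;
    cout << "Hello rank: " << rank << " world: " << world << "\n";

    MPI_Finalize();
    return 0;
}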

u171596@s001-n004:~/OneAPI_Project/intel_ci/mpiTest$ mpirun -n 4 ./hello_mpi_dpcpp

Hello world from host s001-n004 core 21 (rank 3)!

Hello world from host s001-n004 core 6 (rank 0)!

Hello world from host s001-n004 core 11 (rank 1)!

Hello world from host s001-n004 core 19 (rank 2)!

- Execution on a multi-node cluster using the qsub utility:

u171596@login-2:~/OneAPI_Project/intel_ci/mpiTest$ cat distrjob2.sh

#PBS -l nodes=2:ppn=2

cd $PBS_O_WORKDIR

echo Launching the parallel job from mother superior `hostname`...

echo $PBS_NODEFILE

cat $PBS_NODEFILE

mpirun -machinefile $PBS_NODEFILE ./hello_mpi_dpcpp

u171596@login-2:~/OneAPI_Project/intel_ci/mpiTest$ qsub -l nodes=2:gpu:ppn=2 -d . distrjob2.sh

2107279.v-qsvr-1.aidevcloud

u171596@login-2:~/OneAPI_Project/intel_ci/mpiTest$ cat distrjob2.sh.o2107279

########################################################################

# Date: Tue 27 Dec 2022 08:19:26 PM PST

# Job ID: 2107279.v-qsvr-1.aidevcloud

# User: u171596

# Resources: cput=75:00:00,neednodes=2:gpu:ppn=2,nodes=2:gpu:ppn=2,walltime=06:00:00

########################################################################

Launching the parallel job from mother superior s019-n015...

/var/spool/torque/aux//2107279.v-qsvr-1.aidevcloud

s019-n015

s019-n015

s019-n016

s019-n016

..........................

:: oneAPI environment initialized ::

Hello world from host s019-n016 core 7 (rank 0)!

Hello world from host s019-n016 core 0 (rank 0)!

Error file:

$ cat distrjob2.sh.e2107279

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

--------------------------------------------------------------------------

Primary job terminated normally, but 1 process returned

a non-zero exit code. Per user-direction, the job has been aborted.

--------------------------------------------------------------------------

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

--------------------------------------------------------------------------

mpirun detected that one or more processes exited with non-zero status, thus causing

the job to be terminated. The first process to do so was:

Process name: [[2486,1],1]

Exit code: 127
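
For readers who hit the same loader error: the message means the dynamic linker could not find `libsycl.so.6` in the environment where the process was started. The following is a minimal diagnostic sketch, not something posted in this thread. It lists the libraries that `ldd` reports as unresolved for the binary and shows which `mpirun` is first on `PATH`, since the launcher and library paths seen by a batch job may differ from those of an interactive shell. The helper name `missing_libs` is hypothetical; `ldd` and `command -v` are standard tools.

```shell
#!/bin/bash
# Hypothetical helper: read `ldd` output on stdin and print the names of
# the libraries that the dynamic linker could not resolve.
missing_libs() {
    awk '/not found/ { print $1 }'
}

# Run the checks only when the binary from this thread is present.
if [ -x ./hello_mpi_dpcpp ]; then
    echo "mpirun on PATH: $(command -v mpirun || echo none)"
    echo "unresolved libraries:"
    ldd ./hello_mpi_dpcpp | missing_libs
fi
```

Placing these lines in the job script just before the `mpirun` line would show the environment the batch job actually sees.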

Hi,

Thanks for posting in Intel Communities and thanks for providing the details.

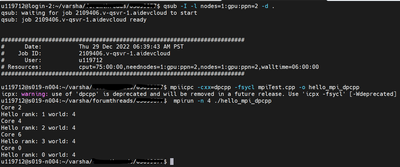

We have followed all the steps mentioned by you. But, we are able to get the expected results without any errors. For more details, please find the below screenshots(single and multi-node):

And also, could you please try re-running once again on multi-node by following these steps:

1) In distrjob2.sh, change the line below to check whether the problem occurs only on particular nodes. Replace

#PBS -l nodes=2:ppn=2

with

#PBS -l nodes=s019-n004+s019-n007:ppn=2

2) Next, run this command:

qsub distrjob2.sh

3) The output and error files will then be generated. Please share the contents of both. We also tried nodes s019-n015 and s019-n016, and we got the correct results.

Please do let us know if you are still facing the same error.

Thanks & Regards,

Varsha

Thank you for your reply.

I am still facing the same issue. The updated distrjob2.sh and its output and error files are pasted below.

----------------------------------------------------------------------------------------------------------

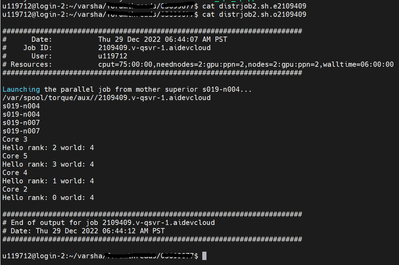

u171596@s001-n007:~/OneAPI_Project/intel_ci/mpiTest$ cat distrjob2.sh

#PBS -l nodes=s019-n004+s019-n007:ppn=2

cd $PBS_O_WORKDIR

echo Launching the parallel job from mother superior `hostname`...

echo $PBS_NODEFILE

cat $PBS_NODEFILE

mpirun -machinefile $PBS_NODEFILE ./hello_mpi_dpcpp

-------------------------------------------------------------------------------------------------------------

u171596@login-2:~$ cat OneAPI_Project/intel_ci/mpiTest/distrjob2.sh.o2112895

########################################################################

# Date: Mon 02 Jan 2023 11:02:45 PM PST

# Job ID: 2112895.v-qsvr-1.aidevcloud

# User: u171596

# Resources: cput=75:00:00,neednodes=s019-n004+s019-n007:ppn=2,nodes=s019-n004+s019-n007:ppn=2,walltime=06:00:00

########################################################################

Launching the parallel job from mother superior s019-n004...

/var/spool/torque/aux//2112895.v-qsvr-1.aidevcloud

s019-n004

s019-n007

s019-n007

:: initializing oneAPI environment ...

bash: BASH_VERSION = 5.0.17(1)-release

args: Using "$@" for setvars.sh arguments:

:: advisor -- latest

:: ccl -- latest

:: clck -- latest

:: compiler -- latest

:: dal -- latest

:: debugger -- latest

:: dev-utilities -- latest

:: dnnl -- latest

:: dpcpp-ct -- latest

:: dpl -- latest

:: embree -- latest

:: inspector -- latest

:: intelpython -- latest

:: ipp -- latest

:: ippcp -- latest

:: ipp -- latest

:: ispc -- latest

:: itac -- latest

:: mkl -- latest

:: modelzoo -- latest

:: modin -- latest

:: mpi -- latest

:: neural-compressor -- latest

:: oidn -- latest

:: openvkl -- latest

:: ospray -- latest

:: ospray_studio -- latest

:: pytorch -- latest

:: rkcommon -- latest

:: rkutil -- latest

:: tbb -- latest

:: tensorflow -- latest

:: vpl -- latest

:: vtune -- latest

:: oneAPI environment initialized ::

Core 0

Hello rank: 0 world: 1

Core 3

Hello rank: 0 world: 1

########################################################################

# End of output for job 2112895.v-qsvr-1.aidevcloud

# Date: Mon 02 Jan 2023 11:02:50 PM PST

########################################################################

---------------------------------------------------------------------------------------------------------------

u171596@login-2:~$ cat OneAPI_Project/intel_ci/mpiTest/distrjob2.sh.e2112895

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

--------------------------------------------------------------------------

Primary job terminated normally, but 1 process returned

a non-zero exit code. Per user-direction, the job has been aborted.

--------------------------------------------------------------------------

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

./hello_mpi_dpcpp: error while loading shared libraries: libsycl.so.6: cannot open shared object file: No such file or directory

--------------------------------------------------------------------------

mpirun detected that one or more processes exited with non-zero status, thus causing

the job to be terminated. The first process to do so was:

Process name: [[15378,1],3]

Exit code: 127

--------------------------------------------------------------------------
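
The node file printed in this job's output lists s019-n004 once and s019-n007 twice, so the slots are not split evenly across the two hosts. As a small sketch for readers, the script below summarizes a node file as one `host:slots` line per host. The helper name `count_slots` is hypothetical, and the script only reads the file that `$PBS_NODEFILE` points to.

```shell
#!/bin/bash
# Hypothetical helper: print "host:slots" for each distinct host in a
# PBS-style node file (one host name per line, repeated once per slot).
count_slots() {
    sort "$1" | uniq -c | awk '{ print $2 ":" $1 }'
}

# Inside a PBS job, summarize the allocation before launching mpirun.
if [ -n "${PBS_NODEFILE:-}" ] && [ -r "$PBS_NODEFILE" ]; then
    count_slots "$PBS_NODEFILE"
    echo "total slots: $(grep -c . "$PBS_NODEFILE")"
fi
```

For the node file shown in this post, `count_slots` would print `s019-n004:1` and `s019-n007:2`.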

Hi,

Sorry for the delay. We are unable to reproduce your issue on our end. We have informed the concerned team and will get back to you with an update.

Thanks,

Jaideep

Hi,

This is an account-specific issue, and the concerned team advised creating a new account and trying the same thing. If the issue still persists, please let us know.

Thanks,

Jaideep

Thank you Jaideep.

It is working with a different account.

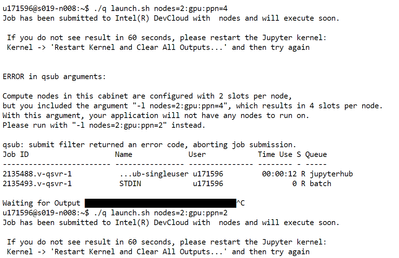

I need one more piece of information, please. I am only able to use 2 nodes to execute the MPI program. For anything more, I get a "Resource Limitation" error. Is there any way I can use more than 2 nodes to execute the program?

Hi,

In DevCloud, each user can access a maximum of two nodes, i.e., four processes per node.

There is no way to access more than two nodes.

If this resolves your issue, make sure to accept this as a solution. This would help others with a similar issue. Thank you!

Thanks,

Jaideep

Thank You Jaideep

I tried with 4 processes per node. It seems only 2 are permissible. Please confirm.

Hi,

Could you please try the workaround suggested above by @VarshaS_Intel?

We were able to run with that workaround.

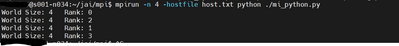

Note (Workaround 2):

In DevCloud, we can run on a maximum of 2 nodes, with 2 processes on each node (4 processes in total).

Please follow the steps below:

Connect to DevCloud via Cygwin, then request an interactive job with the command below:

qsub -I -l nodes=<number_of_nodes>:<property>:ppn=2 -d .

example: qsub -I -l nodes=2:gpu:ppn=2 -d .

After logging in to the compute node, get the names of the allocated nodes by running the command below:

echo $PBS_NODEFILE (example output looks like this: /var/spool/torque/aux//1955007.v-qsvr-1.aidevcloud)

Then cat the file that $PBS_NODEFILE points to:

example:

cat /var/spool/torque/aux//1955007.v-qsvr-1.aidevcloud

s001-n141

s001-n141

s001-n157

s001-n157

Copy the node names from above and paste them into the host file (I pasted them into host.txt).

After pasting the node names into the host file, run the mpirun command below. (I was running an mpi4py script, so I used the python command in place of the executable shown here.)

mpirun -n 4 -hostfile host.txt ./test
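
The manual copy-and-paste steps above can be sketched as a short script, assuming it runs inside the interactive job where `$PBS_NODEFILE` is set. The function name `make_hostfile` is hypothetical. `host.txt` and `./test` are the names used in the steps above, and the process count is taken from the number of lines in the host file (4 for `nodes=2:ppn=2`).

```shell
#!/bin/bash
# Hypothetical helper: copy the node names from a PBS node file into a
# host file, keeping one line per allocated slot and dropping blank lines.
make_hostfile() {
    # $1 = node file to read, $2 = host file to write
    grep -v '^[[:space:]]*$' "$1" > "$2"
}

# Inside the interactive job, build host.txt and launch one rank per slot.
if [ -n "${PBS_NODEFILE:-}" ] && [ -r "$PBS_NODEFILE" ]; then
    make_hostfile "$PBS_NODEFILE" host.txt
    nprocs=$(grep -c . host.txt)   # number of non-empty lines
    mpirun -n "$nprocs" -hostfile host.txt ./test
fi
```

Deriving the count from the host file keeps `-n` consistent with the allocation, so the command does not need editing if the `ppn` value changes.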

If this resolves your issue, make sure to accept @VarshaS_Intel workaround as a solution. This would help others with a similar issue. Thank you!

Thanks,

Jaideep

Hi,

If this resolves your issue, make sure to accept this as a solution. This would help others with a similar issue. Thank you!

Regards,

Jaideep

Hi,

We assume that your issue is resolved. If you need any additional information, please post a new question as this thread will no longer be monitored by Intel.

Thanks,

Jaideep
