
Hi,

We are working with openvino_2021.4.689 and Python. We are not able to get the same results after switching from synchronous to asynchronous inference.

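Synchronous version:

```python
import cv2
from openvino.inference_engine import IECore

# face_model_xml, face_model_bin, device, frame and MODEL_FRAME_SIZE are defined elsewhere
ie = IECore()
face_neural_net = ie.read_network(model=face_model_xml, weights=face_model_bin)

if face_neural_net is not None:
    face_input_blob = next(iter(face_neural_net.input_info))
    face_neural_net.batch_size = 1
    face_execution_net = ie.load_network(
        network=face_neural_net, device_name=device.upper()
    )
    face_blob = cv2.dnn.blobFromImage(
        frame, size=(MODEL_FRAME_SIZE, MODEL_FRAME_SIZE), ddepth=cv2.CV_8U
    )
    # infer() returns a dict {output_name: numpy array} covering every output
    face_results = face_execution_net.infer(inputs={face_input_blob: face_blob})
```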

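Asynchronous version:

```python
import cv2
from time import sleep
from openvino.inference_engine import IECore, StatusCode

# face_model_xml, face_model_bin, device, frame and MODEL_FRAME_SIZE are defined elsewhere
ie = IECore()
face_neural_net = ie.read_network(model=face_model_xml, weights=face_model_bin)

if face_neural_net is not None:
    face_input_blob = next(iter(face_neural_net.input_info))
    # Name of the first output only
    face_output_blob = next(iter(face_neural_net.outputs))
    face_neural_net.batch_size = 1
    # num_requests=0 lets the plugin create its optimal number of infer requests
    face_execution_net = ie.load_network(
        network=face_neural_net, device_name=device.upper(), num_requests=0
    )
    face_blob = cv2.dnn.blobFromImage(
        frame, size=(MODEL_FRAME_SIZE, MODEL_FRAME_SIZE), ddepth=cv2.CV_8U
    )
    face_execution_net.requests[0].async_infer({face_input_blob: face_blob})
    # wait(0) returns the current status without blocking; poll once per second
    while face_execution_net.requests[0].wait(0) != StatusCode.OK:
        sleep(1)
    # Reads only the buffer of face_output_blob, not the other outputs
    face_results = face_execution_net.requests[0].output_blobs[face_output_blob].buffer
```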

With synchronous inference we get a dictionary with the expected results, but with asynchronous inference we only get an array with the confidence level of each detection.

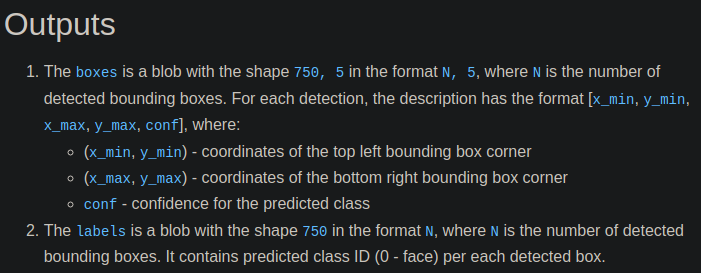

The model output:

Maybe it is related to the output blob configuration, but we were not able to find an example.

Thanks and Regards,

Luciano




Hi Lucho,

Thanks for reaching out.

The face_detection.py script is not an official sample from our developers and has not been validated on our side, so we cannot verify its expected inference output. In general, when an application runs in synchronous mode, it creates one infer request and calls the infer method. When it runs in asynchronous mode, it creates multiple infer requests and runs several inferences in parallel in a pipeline, which may lead to higher throughput than the synchronous approach.
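The pipelining idea described above can be sketched as a round-robin loop over several infer requests: while one request is still busy, the next frame is submitted to another one. This is a minimal sketch, not an OpenVINO sample. It assumes request objects with `async_infer(inputs)` and `wait(timeout)` methods, as in the 2021.4 `ExecutableNetwork.requests` list, and the function name `run_pipelined` and its `make_inputs`/`collect` callbacks are hypothetical. Because a request's output buffers are overwritten when the request is reused, `collect` should copy the data it needs.

```python
def run_pipelined(requests, frames, make_inputs, collect):
    """Round-robin frames over several asynchronous infer requests.

    Hypothetical helper. `requests` holds objects with async_infer(inputs)
    and wait(timeout); make_inputs(frame) builds the input dict and
    collect(request) reads (and should copy) the finished outputs.
    Returns one result per frame, in frame order.
    """
    results = [None] * len(frames)
    in_flight = {}  # request index -> index of the frame it is processing
    for frame_idx, frame in enumerate(frames):
        req_idx = frame_idx % len(requests)
        req = requests[req_idx]
        if req_idx in in_flight:
            # This request still owns an earlier frame: block until it is done
            # (the returned status code is ignored in this sketch).
            req.wait(-1)
            results[in_flight.pop(req_idx)] = collect(req)
        req.async_infer(make_inputs(frame))
        in_flight[req_idx] = frame_idx
    # Drain the requests that are still running after the last frame.
    for req_idx, frame_idx in in_flight.items():
        requests[req_idx].wait(-1)
        results[frame_idx] = collect(requests[req_idx])
    return results
```

With the 2021.4 API, `requests` would be `face_execution_net.requests`, and `make_inputs` could return `{face_input_blob: blob}` for each preprocessed frame.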

Check out the following OpenVINO documentation for more information.

Meanwhile, what kind of example related to the output blob are you looking for?

Regards,

Azni


Hi Azni,

Thanks for the reply. face_detection.py is a script I wrote; I shared it to show how I am trying to use the model. I will look into the documentation.

Regards,

Luciano


From the documentation, step 2:

```python
for name, info in face_neural_net.outputs.items():
    print("\tname: {}".format(name))
    print("\tshape: {}".format(info.shape))
    print("\tlayout: {}".format(info.layout))
    print("\tprecision: {}\n".format(info.precision))
```

I'm getting:

```
name: TopK_2434.0
shape: [750]
layout: C
precision: FP32

name: boxes
shape: [750, 5]
layout: NC
precision: FP32

name: labels
shape: [750]
layout: C
precision: I32
```

But there is no information on how to get each output from the async inference output buffer.
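Once the outputs are available by name, the `boxes` and `labels` arrays from the listing above can be post-processed directly. The sketch below assumes, based on similar Open Model Zoo face detection models rather than anything confirmed in this thread, that each row of `boxes` is `[x_min, y_min, x_max, y_max, confidence]`; check the model's documentation for the exact layout and coordinate units. The function name `filter_detections` and the 0.5 threshold are illustrative choices, and the `TopK_2434.0` output is not used here.

```python
import numpy as np


def filter_detections(boxes, labels, conf_threshold=0.5):
    """Keep detections whose confidence is at least conf_threshold.

    Hypothetical helper. Assumes boxes has shape [N, 5] with columns
    [x_min, y_min, x_max, y_max, confidence] and labels has shape [N].
    Returns (coordinates [M, 4], confidences [M], labels [M]).
    """
    boxes = np.asarray(boxes)
    labels = np.asarray(labels)
    keep = boxes[:, 4] >= conf_threshold  # boolean mask over the N rows
    return boxes[keep, :4], boxes[keep, 4], labels[keep]
```

For example, `coords, scores, classes = filter_detections(face_results["boxes"], face_results["labels"])`, where `face_results` is assumed to be a dictionary of all outputs keyed by name, like the one returned by the synchronous `infer()`.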


I will need technical support for this. Is there any other documentation related to asynchronous inference that you could point me to?


Hi Lucho,

You will need to use the async_infer API to start asynchronous inference in your code. InferRequest.async_infer, InferRequest.wait, and Blob.buffer are the APIs for asynchronous inference.

You can refer to the Image Classification Async Python* Sample, which demonstrates how to run inference of image classification networks using the Asynchronous Inference Request API.
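A minimal sketch of reading every output after an asynchronous request finishes, assuming the OpenVINO 2021.4 Python API, where `InferRequest.output_blobs` maps each output name to a Blob and `Blob.buffer` is a NumPy array. The question's code reads only `output_blobs[face_output_blob]`, which is the first output (in the listing posted in this thread, `TopK_2434.0`); iterating over the whole mapping instead gives a dictionary shaped like the one returned by the synchronous `infer()`. The helper name `collect_outputs` is hypothetical, and since it only relies on those two attributes it can be tried without OpenVINO installed.

```python
import numpy as np


def collect_outputs(request):
    """Return {output_name: numpy array} for every output of a finished request.

    Hypothetical helper. `request` is expected to behave like an OpenVINO
    2021.4 InferRequest: request.output_blobs maps each output name to a
    Blob whose .buffer attribute is a NumPy array.
    """
    # Copy each buffer so the results stay valid after the request is reused.
    return {name: np.array(blob.buffer, copy=True)
            for name, blob in request.output_blobs.items()}


# Possible usage with the question's variables:
# request = face_execution_net.requests[0]
# request.async_infer({face_input_blob: face_blob})
# request.wait(-1)                      # block until this request has finished
# face_results = collect_outputs(request)
# boxes, labels = face_results["boxes"], face_results["labels"]
```

Blocking with `wait(-1)` also replaces the polling loop with `sleep(1)`, which can add up to a second of latency per frame.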

Regards,

Aznie


Hi Lucho,

This thread will no longer be monitored since this issue has been resolved.

If you need any additional information from Intel, please submit a new question.

Regards,

Wan
