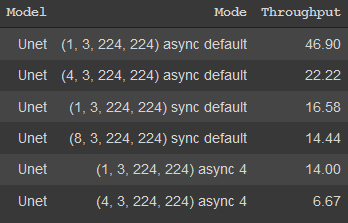

I'm using the benchmark app to find the best configuration for my two models: YoloV3 and Unet, both trained on custom data sets.

I ran two versions of the async configuration: one with -nthreads 4, the other with that option left unspecified.

Here's an example, ranked from best (top) to worst (bottom) throughput.

I'm trying to understand why the asynchronous runs with 4 threads are being beaten by the synchronous calls.

Hi gdioni,

Thanks for reaching out.

The throughput value depends on the batch size.

When you run the application in synchronous mode, it creates one infer request and executes the infer method. When you run it in asynchronous mode, it creates multiple infer requests. The asynchronous approach runs multiple inferences in a parallel pipeline, which is why its throughput is usually higher than that of the synchronous approach.
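To illustrate the difference, here is a minimal sketch in plain Python. It does not use the OpenVINO API: `fake_infer`, `run_sync`, and `run_async` are hypothetical names, and a `time.sleep` call stands in for a real inference. It only shows why keeping several requests in flight can raise throughput compared with issuing one request at a time.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_infer(latency_s: float) -> None:
    """Stand-in for one inference call (assumption: sleeps instead of computing)."""
    time.sleep(latency_s)


def run_sync(n_requests: int, latency_s: float) -> float:
    """Issue requests one after another; return throughput in requests per second."""
    start = time.perf_counter()
    for _ in range(n_requests):
        fake_infer(latency_s)
    return n_requests / (time.perf_counter() - start)


def run_async(n_requests: int, latency_s: float, n_parallel: int) -> float:
    """Keep up to n_parallel requests in flight; return throughput in requests per second."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=n_parallel) as pool:
        futures = [pool.submit(fake_infer, latency_s) for _ in range(n_requests)]
        for future in futures:
            future.result()  # wait for every request to complete
    return n_requests / (time.perf_counter() - start)


if __name__ == "__main__":
    print(f"sync : {run_sync(8, 0.02):.1f} requests/s")
    print(f"async: {run_async(8, 0.02, 4):.1f} requests/s")
```

Because the simulated work is only a sleep, the parallel requests here never compete for CPU cores. In a real benchmark they do, so the gain from asynchronous mode may shrink, or even disappear, when the number of CPU threads is restricted.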

Check out the following OpenVINO documentation for more information.

Regards,

Aznie

Hi gdioni,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
