Hello there,

I built OpenVINO 2022 from source for a Raspberry Pi 4 (32-bit OS, Python 3.7.3), following the steps in https://www.intel.com/content/www/us/en/support/articles/000057005/boards-and-kits.html

The installation works and all the libraries import correctly. However, I get a "Bus error" when I run the deblurring demo, as shown below:

```
pi@rpi1:~/openvino/open_model_zoo/demos/deblurring_demo/python $ python deblurring_demo.py --input blur.jpg --model model/deblurgan-v2.xml -d MYRIAD
[ INFO ] OpenVINO Runtime
[ INFO ] build: custom_master_862aebce714c61902531b097e90df6365f325a46
[ INFO ] Reading model model/deblurgan-v2.xml
[ DEBUG ] Reshape model from [1, 3, 844, 1200] to [1, 3, 864, 1216]
[ INFO ] Input layer: blur_image, shape: [1, 3, 864, 1216], precision: f32, layout: NCHW
[ INFO ] Output layer: deblur_image, shape: [1, 3, 864, 1216], precision: f32, layout:
Bus error
```
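The `[ DEBUG ] Reshape` line shows the demo enlarging the input from 844×1200 to 864×1216 (and, later in this thread, from 720×1280 to 736×1280). In every case the new height and width are the original values rounded up to the next multiple of 32, so the demo appears to pad the input to a block size of 32. That block size is inferred from these logs, not taken from the demo source. A minimal sketch of the rounding, reproducing both logged shapes:

```python
def round_up_to_multiple(value: int, block: int = 32) -> int:
    """Round a spatial dimension up to the nearest multiple of `block`."""
    # Ceiling division using integers only: -(-a // b) == ceil(a / b).
    return -(-value // block) * block


def padded_shape(nchw: list, block: int = 32) -> list:
    """Return an NCHW shape whose H and W are rounded up to `block`.

    The block size of 32 is an assumption inferred from the demo's logs.
    """
    n, c, h, w = nchw
    return [n, c, round_up_to_multiple(h, block), round_up_to_multiple(w, block)]


print(padded_shape([1, 3, 844, 1200]))  # [1, 3, 864, 1216]
print(padded_shape([1, 3, 720, 1280]))  # [1, 3, 736, 1280]
```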

Looking forward to hearing from you,

Cheers,

Y

Hi Y,

Thank you for reaching out to us.

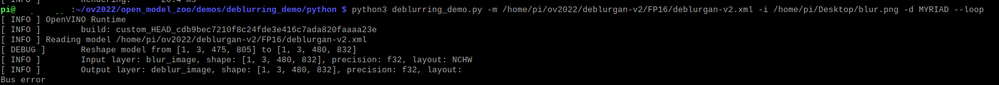

I have validated from my end by building OpenVINO™ 2022.1 from source for Raspbian OS on Raspberry Pi 4 Model B. I encountered similar error when running the Image Deblurring Python* Demo using FP16 model. The error message is as shown below:

We will escalate this issue to the relevant team for further action.

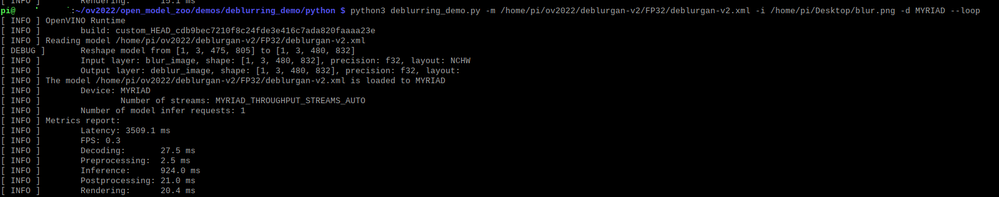

On another note, I was able to run the demo successfully when I use FP32 model. Please have a try running the demo using FP32 model.

Here are the results of using FP32 model for Image Deblurring Python* Demo:

Regards,

Megat

Hello Megat,

Thank you for your response; much appreciated.

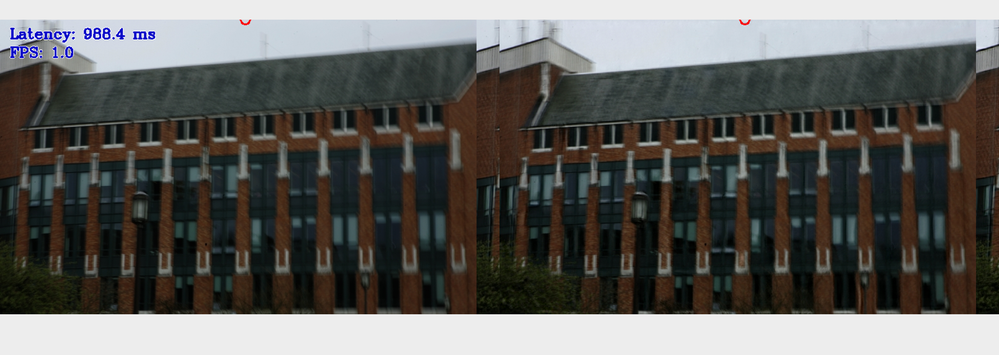

Yes, it works with FP32 precision. However, it is much slower than in your results, and an error occurs at the end of the run. The output is also not promising, as seen in the attached file.

```
pi@rpi1:~/openvino/open_model_zoo/demos/deblurring_demo/python $ python deblurring_demo.py --i blur.png --m deblurgan-v2.xml --d MYRIAD --output test.png
[ INFO ] OpenVINO Runtime
[ INFO ] build: custom_master_862aebce714c61902531b097e90df6365f325a46
[ INFO ] Reading model deblurgan-v2.xml
[ DEBUG ] Reshape model from [1, 3, 720, 1280] to [1, 3, 736, 1280]
[ INFO ] Input layer: blur_image, shape: [1, 3, 736, 1280], precision: f32, layout: NCHW
[ INFO ] Output layer: deblur_image, shape: [1, 3, 736, 1280], precision: f32, layout:
[ INFO ] The model deblurgan-v2.xml is loaded to MYRIAD
[ INFO ] Device: MYRIAD
[ INFO ] Number of streams: MYRIAD_THROUGHPUT_STREAMS_AUTO
[ INFO ] Number of model infer requests: 1
OpenCV: FFMPEG: tag 0x47504a4d/'MJPG' is not supported with codec id 7 and format 'image2 / image2 sequence'
[ INFO ] Metrics report:
[ INFO ] Latency: 89465.5 ms
[ INFO ] FPS: 0.0
[ INFO ] Decoding: 62.3 ms
[ INFO ] Preprocessing: 1.7 ms
[ INFO ] Inference: 2488.4 ms
[ INFO ] Postprocessing: 22.8 ms
[ INFO ] Rendering: 50.6 ms
python3: ../../libusb/io.c:2116: handle_events: Assertion `ctx->pollfds_cnt >= internal_nfds' failed.
```
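The command in this run passes `--i`, `--m` and `--d` instead of the full `--input`, `--model` and `--device` options. Python's `argparse` accepts any unambiguous prefix of a long option by default (`allow_abbrev=True`), which is likely why the shortened flags still worked, assuming the demo builds its command line with a standard `argparse` parser. The sketch below uses a hypothetical parser whose option names only mirror the ones seen in this thread; it is not the demo's actual argument definition:

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser: option names mirror this thread, not the demo source.
    parser = argparse.ArgumentParser(description="Sketch of a deblurring-demo CLI")
    parser.add_argument("-i", "--input", required=True)
    parser.add_argument("-m", "--model", required=True)
    parser.add_argument("-d", "--device", default="CPU")
    parser.add_argument("-o", "--output")
    return parser


# "--i", "--m" and "--d" are resolved as unambiguous prefixes of the long options.
args = build_parser().parse_args(
    ["--i", "blur.png", "--m", "deblurgan-v2.xml", "--d", "MYRIAD", "--output", "test.png"]
)
print(args.input, args.model, args.device, args.output)
# blur.png deblurgan-v2.xml MYRIAD test.png
```

Spelling out the full option names (or the single-dash short forms `-i`, `-m`, `-d`) avoids relying on prefix matching, which would fail with an "ambiguous option" error if another option sharing the prefix were ever added.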

Update to my previous reply:

I used the code from openvino_notebooks: https://github.com/openvinotoolkit/openvino_notebooks

It now works without any errors. However, inference on the Raspberry Pi with MYRIAD is still slow: it took 2.29 minutes to process one image, and the output is not what I expected (the image is still blurry).

Looking forward to hearing from you,

Cheers,

Yaser

Hi Yaser,

I got the same blurry result as you when running the demo on an Intel® Neural Compute Stick 2 (NCS2) with a Raspberry Pi 4 Model B. When I ran it on an Intel® Core™ i7-10610U CPU under Windows 10, the result was better. Here are my results:

NCS2:

CPU:

With the CPU, we observe a significant performance improvement, with latency reduced by approximately 95%. Therefore, we recommend using the CPU plugin for better results.
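Reading "approximately 95%" as a relative reduction in per-image latency (an interpretation; the thread does not state exactly how the figure was computed), the CPU run would take roughly one-twentieth of the NCS2 time:

$$
\frac{L_{\text{NCS2}} - L_{\text{CPU}}}{L_{\text{NCS2}}} \approx 0.95
\;\Longrightarrow\;
L_{\text{CPU}} \approx 0.05\,L_{\text{NCS2}},
\qquad
\frac{L_{\text{NCS2}}}{L_{\text{CPU}}} \approx \frac{1}{0.05} = 20
$$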

Regards,

Megat

Hello Megat,

Thank you for your response.

Yes, I know a CPU or GPU gives better results than MYRIAD. However, the whole point is to run it on a Raspberry Pi with fast inference and good results.

Regards,

Yaser

Hi Yaser,

Intel® Neural Compute Stick 2 (NCS2) is a plug-and-play AI device for deep learning inference at the edge. It can be used for prototyping with low-cost edge devices such as the Raspberry Pi 4 and other ARM host devices, and it gives access to deep learning inference without the need for large, expensive hardware.

However, the NCS2 generally performs below GPUs and high-end CPUs because it has less processing and computing power.

Regards,

Megat

Hi Yaser,

This thread will no longer be monitored since we have provided a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Megat
