Hello,

I recently ran benchmark_app on a new Intel CPU, and I have a question about the results.

The throughput table below is extracted from my test results as an example.

| Model | FP32 | BF16 |
| --- | --- | --- |
| EfficientDet D1 | 12.21 | 11.97 |
| MobileNet V3 Large | 578.91 | 584.98 |

As you can see, when I run the benchmark tool on a Core Ultra 5 125H, there is little difference in throughput between FP32 and BF16, although I expected a much higher number with BF16.

I see that total memory usage decreases when the data type is BF16 compared to FP32, whereas throughput is similar.

Could you help me resolve this issue or explain why I get these results?

Thanks in advance!

Hi Kyoungsoo_oh,

Thank you for reaching out to us.

We are investigating the throughput results you observed and will get back to you soon.

As for the BF16 memory usage, memory consumption is lower because each BF16 value is half the size of a 32-bit float. For more information, you can check out the Floating Point Data Types Specifics page.
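To illustrate the size difference, here is a minimal Python sketch. It assumes the standard BF16 layout (1 sign bit, 8 exponent bits, 7 mantissa bits), which matches the upper 16 bits of an IEEE 754 float32. The helper names are hypothetical, and the conversion uses plain truncation for simplicity; real hardware and libraries typically round to nearest even, so this is not how OpenVINO™ itself converts values.

```python
import struct


def float32_to_bf16_bits(value: float) -> int:
    """Return the 16-bit BF16 pattern obtained by truncating a float32."""
    (bits32,) = struct.unpack("<I", struct.pack("<f", value))
    # Keep the sign bit, the 8 exponent bits, and the top 7 mantissa bits.
    return bits32 >> 16


def bf16_bits_to_float32(bits16: int) -> float:
    """Expand a 16-bit BF16 pattern back to a float by zero-filling the low bits."""
    (value,) = struct.unpack("<f", struct.pack("<I", bits16 << 16))
    return value


x = 3.14159
bits = float32_to_bf16_bits(x)
print(f"float32 {x} -> bf16 bits {bits:#06x} -> {bf16_bits_to_float32(bits)}")

# Storage for one million values: 4 bytes each as FP32, 2 bytes each as BF16.
n_values = 1_000_000
print(f"FP32: {n_values * 4} bytes, BF16: {n_values * 2} bytes")
```

The same exponent range is kept, so only precision is lost, while the storage per value drops from 4 bytes to 2 bytes.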

Regards,

Megat

Thank you for investigating this issue.

I look forward to your reply.

Thanks

KyoungSoo

Hi KyoungSoo,

Thank you for your patience.

For your information, the maximum vector Instruction Set Architecture (ISA) of the Intel® Core™ Ultra 5 processor 125H is AVX2. AVX2 does not have any BF16 compute instructions so far. Platforms that natively support BF16 calculations have the AVX512_BF16 or AMX extension, as mentioned in the Floating Point Data Types Specifics section.

For more details, you can refer to the Intel® 64 and IA-32 Architectures Software Developer Manuals page.

Therefore, it is expected that OpenVINO™ does not apply BF16 inference precision on Meteor Lake CPUs, since they lack BF16 hardware acceleration.
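If you would like to check this on your own machine, the following is a minimal sketch for Linux only. It assumes the kernel reports the two extensions as `avx512_bf16` and `amx_bf16` in the `flags` line of `/proc/cpuinfo`; the function name is hypothetical, and on Windows you would need a different tool.

```python
from pathlib import Path

# Flag names as the Linux kernel lists them in /proc/cpuinfo (assumption: Linux host).
NATIVE_BF16_FLAGS = {"avx512_bf16", "amx_bf16"}


def native_bf16_flags(cpuinfo_text: str) -> set:
    """Return the native BF16 flags found in the given /proc/cpuinfo text."""
    found = set()
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Each "flags" line looks like "flags : fpu vme ... avx2 ...".
            _, _, flags = line.partition(":")
            found |= NATIVE_BF16_FLAGS & set(flags.split())
    return found


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        flags = native_bf16_flags(cpuinfo.read_text())
        if flags:
            print("Native BF16 extensions:", ", ".join(sorted(flags)))
        else:
            print("No AVX512_BF16 or AMX BF16 flag reported by this CPU")
```

An empty result means the CPU does not report either extension, which is consistent with the behavior described above.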

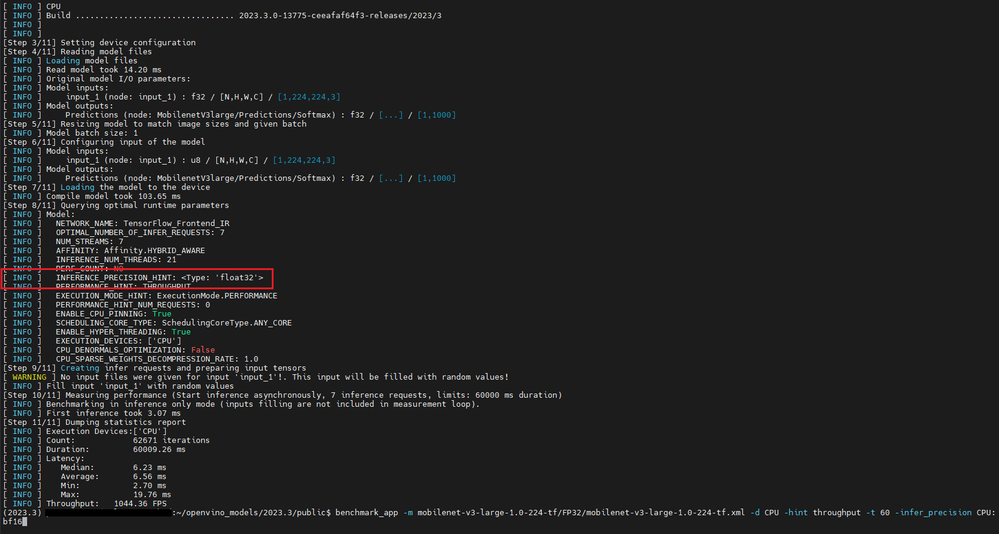

On my end, I ran the benchmark app on the Intel® Core™ Ultra 7 processor 155H and selected bf16 as the infer precision option. However, the selected inference precision hint remains float32 as the CPU lacks the BF16 hardware acceleration.
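You can also ask OpenVINO™ directly which precisions the CPU plugin reports. The sketch below assumes a recent `openvino` Python package and relies on the `OPTIMIZATION_CAPABILITIES` device property, which should include `BF16` on platforms with native support. The helper names are hypothetical, and the exact list returned depends on your hardware and OpenVINO™ version.

```python
def supports_bf16(capabilities) -> bool:
    """Return True if the reported capability list includes BF16."""
    return "BF16" in capabilities


def query_cpu_capabilities():
    """Ask the OpenVINO CPU plugin for its optimization capabilities."""
    import openvino as ov  # imported lazily; requires the openvino package

    core = ov.Core()
    return core.get_property("CPU", "OPTIMIZATION_CAPABILITIES")


if __name__ == "__main__":
    try:
        capabilities = query_cpu_capabilities()
    except ImportError:
        print("The openvino package is not installed")
    else:
        print("CPU capabilities:", capabilities)
        print("Native BF16 reported:", supports_bf16(capabilities))
```

If `BF16` is missing from the list, requesting bf16 as the inference precision is not expected to change the precision actually used on the CPU.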

Regards,

Megat

Hi Kyoungsoo_oh,

Thank you for your question. This thread will no longer be monitored since we have provided a suggestion. If you need any additional information from Intel, please submit a new question.

Regards,

Megat
