
Hello,

Have you seen this type of error before?

My customer ran into the following errors:

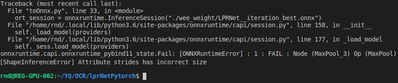

When they load the aforementioned ONNX model using OpenCV Inference Runtime, the following error occurs:

Using OpenVINO Deep Learning Workbench (Docker image openvino/workbench:2020.3), importing the ONNX model in Model Optimizer yields the following error:


Hi DarkHorse,

Can you share details of your environment? Which PyTorch model are you converting?

Please check which PyTorch models are supported by the OpenVINO toolkit on this page: https://docs.openvinotoolkit.org/2020.4/openvino_docs_MO_DG_prepare_model_convert_model_Convert_Model_From_ONNX.html#supported_pytorch_models_via_onnx_conversion

You can also find information on converting ONNX models on this page:

Regards,

Aznie


Hello Aznie,

Is there a way to view the code (the graph) inside the ONNX model?

I am sharing the customer's converted ONNX model so you can check whether you are familiar with this error:

https://www.dropbox.com/sh/zffreiakhxf8gzx/AADyEYEn0teuQF9CEm1rZ4f-a?dl=0

This seems to be a custom model shared on GitHub, so it is hard to determine whether it is actually supported by the OpenVINO toolkit.

https://github.com/sirius-ai/LPRNet_Pytorch

Do let me know your views and suggestions.

Thanks.


Hi DarkHorse,

The LPRNet_Pytorch model is not supported by the OpenVINO toolkit.

Please check the PyTorch models supported via ONNX conversion here.

Meanwhile, in your OpenVINO Workbench screenshot, I can see the error "Stopped shape/value propagation at "66" node". This error means that Model Optimizer cannot infer shapes or values for the specified node. It can happen because of a bug in the custom shape infer function, because the node inputs have incorrect values/shapes, or because the input shapes are incorrect.
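To illustrate why a single node stops the whole conversion, here is a simplified, hypothetical model of shape propagation: nodes are visited in topological order, each node's infer function computes its output shape from its input shapes, and the first failure aborts the walk and reports the node's name. This is not Model Optimizer's actual code; the node tuple layout and `ShapePropagationError` are assumptions made for the sketch.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Shape = Tuple[int, ...]
# Hypothetical node record: (node name, infer function, input tensor names, output tensor name)
Node = Tuple[str, Callable[..., Shape], Sequence[str], str]


class ShapePropagationError(RuntimeError):
    """Raised when a node's infer function cannot produce an output shape."""


def propagate_shapes(nodes: List[Node], input_shapes: Dict[str, Shape]) -> Dict[str, Shape]:
    """Infer every tensor shape, visiting nodes in (already topological) order."""
    shapes = dict(input_shapes)
    for name, infer, inputs, output in nodes:
        try:
            # A missing input shape (KeyError) or a failing infer function both stop propagation.
            shapes[output] = infer(*[shapes[i] for i in inputs])
        except Exception as exc:
            raise ShapePropagationError(
                f'Stopped shape/value propagation at "{name}" node: {exc}'
            ) from exc
    return shapes
```

Because every later node depends on the shapes computed before it, there is nothing sensible to continue with once one node fails, which is why the error names a single node.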

You can find information about input shapes on this page.

To proceed with the ONNX-to-OpenVINO IR conversion, you need a correct ONNX model that is supported by OpenVINO.

Regards,

Aznie


Hello Aznie,

Good day and thanks for the update.

I have managed to run the PyTorch model, and I am now trying to convert it to an ONNX model.

Is there a sample conversion script you could share to ease the conversion process?

I am trying to go the extra mile to help the customer in this area. Once I can reproduce the same issue with the converted ONNX model, I can close the loop with my customers.

Thanks.


Hi DarkHorse,

Unfortunately, we only support issues related to the OpenVINO toolkit, that is, once you have an ONNX model to convert with OpenVINO. My suggestion is to visit this page, which might help you. Hope that helps!

Regards,

Aznie


Hello Aznie,

Good day.

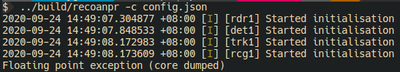

I have re-run the whole conversion procedure using the LPRNet PyTorch model from here:

https://github.com/sirius-ai/LPRNet_Pytorch

- Run the LPRNet_Pytorch model on Ubuntu 18.04

- Convert the LPRNet_Pytorch model to an ONNX model

- Run the converted ONNX file in ONNX Runtime

- Run the converted ONNX file through OpenVINO Model Optimizer to produce the IR (.xml and .bin files)

Could you check whether you are familiar with these error messages from OpenVINO?

I would like to close the loop with my customers once I have confirmed that the original PyTorch model is not suitable for the OpenVINO platform.

```
Model Optimizer version:
[ ERROR ] Cannot infer shapes or values for node "MaxPool_3".
[ ERROR ] operands could not be broadcast together with shapes (2,) (3,)
[ ERROR ]
[ ERROR ] It can happen due to bug in custom shape infer function <function Pooling.infer at 0x7f74d75987b8>.
[ ERROR ] Or because the node inputs have incorrect values/shapes.
[ ERROR ] Or because input shapes are incorrect (embedded to the model or passed via --input_shape).
[ ERROR ] Run Model Optimizer with --log_level=DEBUG for more information.
[ ERROR ] Exception occurred during running replacer "REPLACEMENT_ID" (<class 'extensions.middle.PartialInfer.PartialInfer'>): Stopped shape/value propagation at "MaxPool_3" node.
```
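The `operands could not be broadcast together with shapes (2,) (3,)` line is a NumPy broadcasting error, which suggests that the pooling shape inference combined a 2-element array with a 3-element one. One plausible cause, not verified against the customer's file: the LPRNet_Pytorch backbone appears to use `nn.MaxPool3d(kernel_size=(1, 3, 3), ...)` on 4D feature maps right after Conv, BatchNorm and ReLU, which would export as a MaxPool node numbered 3 with a 3-element `kernel_shape` on an input that has only 2 spatial dimensions. The hypothetical helper `pool_output_shape` below computes the output shape of an unpadded pooling node and rejects exactly that rank mismatch; checking the node's `kernel_shape` attribute in the exported model would confirm or rule out this reading.

```python
from typing import Sequence, Tuple


def pool_output_shape(input_shape: Sequence[int], kernel: Sequence[int],
                      strides: Sequence[int], ceil_mode: bool = False) -> Tuple[int, ...]:
    """Output shape of an unpadded pooling node over an (N, C, spatial...) input."""
    n, c, *spatial = input_shape
    if not (len(kernel) == len(strides) == len(spatial)):
        # In NumPy the same mismatch surfaces as a broadcast error, e.g.
        # np.array([22, 92]) - np.array([1, 3, 3]) raises
        # "operands could not be broadcast together with shapes (2,) (3,)".
        raise ValueError(
            f"kernel rank {len(kernel)} / stride rank {len(strides)} does not match "
            f"the {len(spatial)} spatial dims of input {tuple(input_shape)}"
        )
    out = []
    for size, k, s in zip(spatial, kernel, strides):
        span = size - k
        # floor((size - k) / s) + 1, or ceil((size - k) / s) + 1 when ceil_mode is set
        steps = -(-span // s) if ceil_mode else span // s
        out.append(steps + 1)
    return (n, c, *out)
```

With a 2-element kernel `(3, 3)` and stride 1, a 64x22x92 feature map becomes 64x20x90; the 3-element kernel `(1, 3, 3)` fails on the same 4D input.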


After installing OpenVINO, the following path contains a script for converting PyTorch models to ONNX:

`C:\Program Files (x86)\Intel\openvino_2021.2.185\deployment_tools\open_model_zoo\tools\downloader\pytorch_to_onnx.py`

Run `python pytorch_to_onnx.py -h` to see the parameters the script accepts.


Hi DarkHorse,

Can you please share your model so I can look into it further? Also, please provide the command you used to convert your model.

Regards,

Aznie


Hello Aznie,

Attached are the converted ONNX file and some error messages.

The Python command used with the OpenVINO toolkit is shown in the error message file:

```
root@allen-VirtualBox:/home/allen/Issue# python3 /opt/intel/openvino_2020.4.287/deployment_tools/model_optimizer/mo.py --input_model Final_LPRNet_model.onnx
```

Thanks for the great help.


Hi DarkHorse,

For now, the MaxPool_3 operation is not yet supported by the OpenVINO toolkit.

Although we cannot guarantee it will work, have you tried running your ONNX model directly with the ONNX Reader?

Also, I just found that a sample script is available for converting PyTorch models to ONNX.

You can find the script here: `C:\Program Files (x86)\Intel\openvino_2021\deployment_tools\tools\model_downloader\pytorch_to_onnx.py`

Regards,

Aznie


Hello Aznie,

I have tried running the converted ONNX model in ONNX Runtime. Below are the error messages, which are quite similar and also relate to MaxPool_3.

```
root@allen-VirtualBox:/home/allen/Issue# python3 temp_onnx.py
Traceback (most recent call last):
  File "temp_onnx.py", line 7, in <module>
    ort_session = onnxruntime.InferenceSession("Final_LPRNet_model.onnx")
  File "/usr/local/lib/python3.6/dist-packages/onnxruntime/capi/session.py", line 29, in __init__
    self._sess.load_model(path_or_bytes)
RuntimeError: [ONNXRuntimeError] : 10 : INVALID_GRAPH : Load model from Final_LPRNet_model.onnx failed:Node:MaxPool_3 Unrecognized attribute: ceil_mode for operator MaxPool
```

The pytorch_to_onnx.py script looks quite similar to the one I am using, which I have attached below:

```python
# --------load and convert network using pretrained model----------
import argparse
import os

import torch
import torch.onnx

from model.LPRNet import LPRNet

parser = argparse.ArgumentParser()
parser.add_argument('-w', '--trained_weight', type=str, required=True, help='path to trained LPRNet weights')
parser.add_argument('-out', '--output_folder', type=str, default='.', help='directory to save onnx')
parser.add_argument('-img_h', '--image_height', type=int, default=24, help='image height')
parser.add_argument('-img_w', '--image_width', type=int, default=94, help='image width')
parser.add_argument('-ncls', '--number_class', type=int, default=68, help='number of classes')
parser.add_argument('-img_ch', '--number_channel', type=int, default=3, help='input channel')
parser.add_argument('-batch', '--batch_size', type=int, default=1, help='input batch size')
args = parser.parse_args()

Net = LPRNet(lpr_max_len=8, phase=False, class_num=args.number_class, dropout_rate=0.5)

trained_model = args.trained_weight
batch_size = args.batch_size  # the batch size, not the weight path
folder_path = args.output_folder
base_name = os.path.splitext(os.path.basename(trained_model))[0]

# Load the weights onto the CPU regardless of the device they were saved from.
device = torch.device("cpu")
Net.load_state_dict(torch.load(trained_model, map_location=device))

# set the model to inference mode (disables dropout, uses stored batch-norm statistics)
Net.eval()

imgsz = (args.image_height, args.image_width)  # (24, 94)
img = torch.zeros((batch_size, args.number_channel) + imgsz)

# Sanity-check a forward pass before exporting.
with torch.no_grad():
    torch_out = Net(img)

os.makedirs(folder_path, exist_ok=True)
torch.onnx.export(Net, img, os.path.join(folder_path, base_name + '.onnx'), export_params=True,
                  opset_version=12, input_names=['input'], output_names=['output'])
```

Can we conclude that the original PyTorch model has a shape that is not compatible with the OpenVINO toolkit and ONNX Runtime?

Thanks for the help.


Hi DarkHorse,

Probably yes, since the MaxPool_3 operation is not yet supported by OpenVINO and you have already tested with ONNX Runtime too. The likely reason is that your PyTorch model contains an unsupported layer, and you get the shape error because the OpenVINO toolkit cannot recognize that layer.

Regards,

Aznie


Hello Aznie,

Thank you so much for the help.

I will get back to my customer on this.


Hi DarkHorse,

This thread will no longer be monitored since this issue has been resolved. If you need any additional information from Intel, please submit a new question.

Regards,

Aznie
