
Hi,

My issue is similar to the one described in the post below.

https://community.intel.com/t5/Intel-Distribution-of-OpenVINO/openVino-inference-using-onnx-model/m-p/1460146

I believe OpenVINO supports QuantizeLinear/DequantizeLinear layers and ONNX format models.

However, a model that uses QuantizeLinear/DequantizeLinear layers (Model1) gives different results on OpenVINO 2021.4 and OpenVINO 2022.3.

For comparison, a model that does not use QuantizeLinear/DequantizeLinear (Model2) gives the same results on OpenVINO 2021.4 and OpenVINO 2022.3.

As a further comparison, I also ran both models with a different inference framework (TensorRT).

The conditions are as follows.

- Model format: ONNX

- Environment: Windows 10 and C++

- Output size: 1000 (generic ImageNet classes)

The results are summarized below.

The table below lists the first 10 of the 1000 output values.

| Model | OpenVINO 2021.4 | OpenVINO 2022.3 | Other framework (TensorRT) |
|---|---|---|---|
| Model1 (uses QuantizeLinear/DequantizeLinear) | 1.82228 | 2.83418 | 1.84796 |
| | -5.29731 | -3.76114 | -5.31353 |
| | -2.57535 | -3.80294 | -2.56074 |
| | 0.815923 | 0.491049 | 0.816834 |
| | -5.83255 | -4.85166 | -5.85728 |
| | -3.84568 | -1.81016 | -3.84114 |
| | -4.65202 | -5.18312 | -4.6788 |
| | 3.07942 | 1.29188 | 3.09532 |
| | 2.51693 | 1.51579 | 2.54098 |
| | 10.021 | 6.2427 | 10.0298 |
| Model2 (does not use QuantizeLinear/DequantizeLinear) | 1.92688 | 1.92688 | 1.92688 |
| | -4.5917 | -4.5917 | -4.5917 |
| | -3.09134 | -3.09134 | -3.09134 |
| | 0.355102 | 0.355103 | 0.355103 |
| | -6.01955 | -6.01955 | -6.01955 |
| | -4.48821 | -4.48821 | -4.48821 |
| | -5.00235 | -5.00235 | -5.00235 |
| | 2.16591 | 2.16591 | 2.16591 |
| | 2.74276 | 2.74276 | 2.74276 |
| | 11.9108 | 11.9108 | 11.9108 |

Only the combination of the model using QuantizeLinear/DequantizeLinear and OpenVINO 2022.3 shows a large numerical difference.
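One way to put a number on this gap is the maximum absolute difference between output vectors. The snippet below is only a rough sketch: it uses the first 10 values from the table (not the full 1000 outputs), and `max_abs_diff` is a helper name made up for this illustration.

```python
import numpy as np

# First 10 of the 1000 output values, copied from the table in this post.
model1 = {
    "ov_2021_4": [1.82228, -5.29731, -2.57535, 0.815923, -5.83255,
                  -3.84568, -4.65202, 3.07942, 2.51693, 10.021],
    "ov_2022_3": [2.83418, -3.76114, -3.80294, 0.491049, -4.85166,
                  -1.81016, -5.18312, 1.29188, 1.51579, 6.2427],
    "tensorrt": [1.84796, -5.31353, -2.56074, 0.816834, -5.85728,
                 -3.84114, -4.6788, 3.09532, 2.54098, 10.0298],
}
model2 = {
    "ov_2021_4": [1.92688, -4.5917, -3.09134, 0.355102, -6.01955,
                  -4.48821, -5.00235, 2.16591, 2.74276, 11.9108],
    "ov_2022_3": [1.92688, -4.5917, -3.09134, 0.355103, -6.01955,
                  -4.48821, -5.00235, 2.16591, 2.74276, 11.9108],
}


def max_abs_diff(a, b):
    """Largest element-wise absolute difference between two output vectors."""
    return float(np.max(np.abs(np.asarray(a) - np.asarray(b))))


# Model1: 2021.4 stays close to TensorRT, while 2022.3 drifts by several units.
print("Model1 2021.4 vs 2022.3 :", max_abs_diff(model1["ov_2021_4"], model1["ov_2022_3"]))
print("Model1 2021.4 vs TensorRT:", max_abs_diff(model1["ov_2021_4"], model1["tensorrt"]))
# Model2: both OpenVINO versions agree to within rounding of the printed digits.
print("Model2 2021.4 vs 2022.3 :", max_abs_diff(model2["ov_2021_4"], model2["ov_2022_3"]))
```

Running the same comparison over all 1000 outputs would give a more reliable picture than this 10-value slice.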

Why is this?

Please let me know if you get similar results in your environment and if so what the problem is.

I wanted to attach the model and the input image, but it was not possible due to size restrictions. Please let me know if there is a better way.

Thank you for your support.


Hi North-man,

Thanks for reaching out to us.

Yes, QuantizeLinear and DequantizeLinear are supported as shown in ONNX Supported Operators in Supported Framework Layers.

Please share the required files with us via the following email so we can replicate the issue:

waix.fook.wan@intel.com

Regards,

Wan


Hi North-man,

Thanks for your information.

We've received the required files via email. We'll investigate this issue and we'll update you at the earliest.

Regards,

Wan


Hi North-man,

Thanks for your patience.

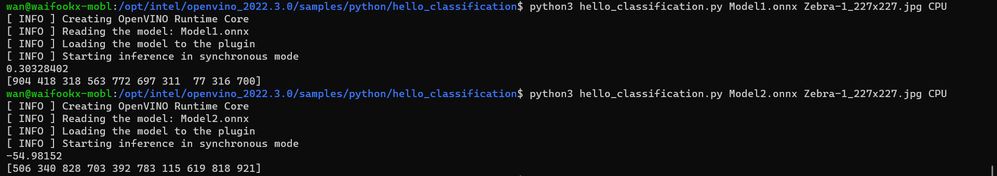

I've validated Model1 and Model2 ONNX models with Hello Classification Python Sample from OpenVINO™ toolkit 2021.4.2 and Hello Classification Python Sample from OpenVINO™ toolkit 2022.3 using Zebra-1_227x227.jpg as an input image.

Model1 ONNX model (uses QuantizeLinear and DequantizeLinear) :

Result when using OpenVINO™ toolkit 2021.4.2 : -1.9281068

Result when using OpenVINO™ toolkit 2022.3 : 0.30328402

Model2 ONNX model (does not use QuantizeLinear and DequantizeLinear):

Result when using OpenVINO™ toolkit 2021.4.2 : -54.98152

Result when using OpenVINO™ toolkit 2022.3 : -54.981552

We do notice the results are different when using the ONNX model that uses QuantizeLinear and DequantizeLinear.

On another note, could you please share your script with us so we can reproduce the same inference result on our end for further investigation? From your results, we would also like to know whether the values around 1.92688 are the correct (expected) results.

Regards,

Wan


Hi, Wan-Intel

Thank you for your response.

Some parts of my script cannot be published, so I will prepare a script that I can share and that reproduces the issue.

Please wait.

Regards,

North-man


Hi North-man,

Noted. Take your time preparing the files needed to replicate the issue so we can investigate it further.

Regards,

Wan


Hi Wan,

I have one question about your answer above.

> Model1 ONNX model (uses QuantizeLinear and DequantizeLinear):
>
> Result when using OpenVINO™ toolkit 2021.4.2: -1.9281068
>
> Result when using OpenVINO™ toolkit 2022.3: 0.30328402
>
> Model2 ONNX model (does not use QuantizeLinear and DequantizeLinear):
>
> Result when using OpenVINO™ toolkit 2021.4.2: -54.98152
>
> Result when using OpenVINO™ toolkit 2022.3: -54.981552

What does a number like -1.9281068 represent?

The sample below appears to display the Top 10.

Hello Classification Python Sample

Regards,

North-man


Hi North-man,

I've edited Hello Classification Python Sample for both OpenVINO™ toolkit 2021.4.2 and OpenVINO™ toolkit 2022.3 to display the results above. I've attached both Python scripts in this thread.

edited_hello_classification_2021

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# Copyright (C) 2018-2021 Intel Corporation
# SPDX-License-Identifier: Apache-2.0
import argparse
import logging as log
import sys

import cv2
import numpy as np
from openvino.inference_engine import IECore


def parse_args() -> argparse.Namespace:
    """Parse and return command line arguments"""
    parser = argparse.ArgumentParser(add_help=False)
    args = parser.add_argument_group('Options')
    # fmt: off
    args.add_argument('-h', '--help', action='help', help='Show this help message and exit.')
    args.add_argument('-m', '--model', required=True, type=str,
                      help='Required. Path to an .xml or .onnx file with a trained model.')
    args.add_argument('-i', '--input', required=True, type=str, help='Required. Path to an image file.')
    args.add_argument('-d', '--device', default='CPU', type=str,
                      help='Optional. Specify the target device to infer on; CPU, GPU, MYRIAD, HDDL or HETERO: '
                           'is acceptable. The sample will look for a suitable plugin for device specified. '
                           'Default value is CPU.')
    args.add_argument('--labels', default=None, type=str, help='Optional. Path to a labels mapping file.')
    args.add_argument('-nt', '--number_top', default=10, type=int, help='Optional. Number of top results.')
    # fmt: on
    return parser.parse_args()


def main():
    log.basicConfig(format='[ %(levelname)s ] %(message)s', level=log.INFO, stream=sys.stdout)
    args = parse_args()

    # ---------------------------Step 1. Initialize inference engine core--------------------------------------------------
    log.info('Creating Inference Engine')
    ie = IECore()

    # ---------------------------Step 2. Read a model in OpenVINO Intermediate Representation or ONNX format---------------
    log.info(f'Reading the network: {args.model}')
    # (.xml and .bin files) or (.onnx file)
    net = ie.read_network(model=args.model)

    if len(net.input_info) != 1:
        log.error('Sample supports only single input topologies')
        return -1
    if len(net.outputs) != 1:
        log.error('Sample supports only single output topologies')
        return -1

    # ---------------------------Step 3. Configure input & output----------------------------------------------------------
    log.info('Configuring input and output blobs')
    # Get names of input and output blobs
    input_blob = next(iter(net.input_info))
    out_blob = next(iter(net.outputs))

    # Set input and output precision manually
    net.input_info[input_blob].precision = 'U8'
    net.outputs[out_blob].precision = 'FP32'

    # Get a number of classes recognized by a model
    num_of_classes = max(net.outputs[out_blob].shape)

    # ---------------------------Step 4. Loading model to the device-------------------------------------------------------
    log.info('Loading the model to the plugin')
    exec_net = ie.load_network(network=net, device_name=args.device)

    # ---------------------------Step 5. Create infer request--------------------------------------------------------------
    # load_network() method of the IECore class with a specified number of requests (default 1) returns an ExecutableNetwork
    # instance which stores infer requests. So you already created Infer requests in the previous step.

    # ---------------------------Step 6. Prepare input---------------------------------------------------------------------
    original_image = cv2.imread(args.input)
    image = original_image.copy()
    _, _, h, w = net.input_info[input_blob].input_data.shape

    if image.shape[:-1] != (h, w):
        log.warning(f'Image {args.input} is resized from {image.shape[:-1]} to {(h, w)}')
        image = cv2.resize(image, (w, h))

    # Change data layout from HWC to CHW
    image = image.transpose((2, 0, 1))
    # Add N dimension to transform to NCHW
    image = np.expand_dims(image, axis=0)

    # ---------------------------Step 7. Do inference----------------------------------------------------------------------
    log.info('Starting inference in synchronous mode')
    res = exec_net.infer(inputs={input_blob: image})
    # print(res)

    # ---------------------------Step 8. Process output--------------------------------------------------------------------
    # Generate a label list
    # if args.labels:
    #     with open(args.labels, 'r') as f:
    #         labels = [line.split(',')[0].strip() for line in f]

    res = res[out_blob]
    print(res[0,0])
    # print(res)

    # Change a shape of a numpy.ndarray with results to get another one with one dimension
    probs = res.reshape(num_of_classes)
    # Get an array of args.number_top class IDs in descending order of probability
    top_n_idexes = np.argsort(probs)[-args.number_top :][::-1]
    print(top_n_idexes)

    # header = 'classid probability'
    # header = header + ' label' if args.labels else header

    # log.info(f'Image path: {args.input}')
    # log.info(f'Top {args.number_top} results: ')
    # log.info(header)
    # log.info('-' * len(header))

    # for class_id in top_n_idexes:
    #     probability_indent = ' ' * (len('classid') - len(str(class_id)) + 1)
    #     label_indent = ' ' * (len('probability') -
```

edited_hello_classification_2022

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
# Copyright (C) 2018-2022 Intel Corporation
# SPDX-License-Identifier: Apache-2.0
import logging as log
import sys

import cv2
import numpy as np
from openvino.preprocess import PrePostProcessor, ResizeAlgorithm
from openvino.runtime import Core, Layout, Type


def main():
    log.basicConfig(format='[ %(levelname)s ] %(message)s', level=log.INFO, stream=sys.stdout)

    # Parsing and validation of input arguments
    if len(sys.argv) != 4:
        log.info(f'Usage: {sys.argv[0]} <path_to_model> <path_to_image> <device_name>')
        return 1

    model_path = sys.argv[1]
    image_path = sys.argv[2]
    device_name = sys.argv[3]

    # --------------------------- Step 1. Initialize OpenVINO Runtime Core ------------------------------------------------
    log.info('Creating OpenVINO Runtime Core')
    core = Core()

    # --------------------------- Step 2. Read a model --------------------------------------------------------------------
    log.info(f'Reading the model: {model_path}')
    # (.xml and .bin files) or (.onnx file)
    model = core.read_model(model_path)

    if len(model.inputs) != 1:
        log.error('Sample supports only single input topologies')
        return -1
    if len(model.outputs) != 1:
        log.error('Sample supports only single output topologies')
        return -1

    # --------------------------- Step 3. Set up input --------------------------------------------------------------------
    # Read input image
    image = cv2.imread(image_path)
    # Add N dimension
    input_tensor = np.expand_dims(image, 0)

    # --------------------------- Step 4. Apply preprocessing -------------------------------------------------------------
    ppp = PrePostProcessor(model)

    _, h, w, _ = input_tensor.shape

    # 1) Set input tensor information:
    # - input() provides information about a single model input
    # - reuse precision and shape from already available `input_tensor`
    # - layout of data is 'NHWC'
    ppp.input().tensor() \
        .set_shape(input_tensor.shape) \
        .set_element_type(Type.u8) \
        .set_layout(Layout('NHWC'))  # noqa: ECE001, N400

    # 2) Adding explicit preprocessing steps:
    # - apply linear resize from tensor spatial dims to model spatial dims
    ppp.input().preprocess().resize(ResizeAlgorithm.RESIZE_LINEAR)

    # 3) Here we suppose model has 'NCHW' layout for input
    ppp.input().model().set_layout(Layout('NCHW'))

    # 4) Set output tensor information:
    # - precision of tensor is supposed to be 'f32'
    ppp.output().tensor().set_element_type(Type.f32)

    # 5) Apply preprocessing modifying the original 'model'
    model = ppp.build()

    # --------------------------- Step 5. Loading model to the device -----------------------------------------------------
    log.info('Loading the model to the plugin')
    compiled_model = core.compile_model(model, device_name)

    # --------------------------- Step 6. Create infer request and do inference synchronously -----------------------------
    log.info('Starting inference in synchronous mode')
    results = compiled_model.infer_new_request({0: input_tensor})
    # print(results)

    # --------------------------- Step 7. Process output ------------------------------------------------------------------
    predictions = next(iter(results.values()))
    # print(predictions)
    # print(dir(predictions))
    # print(type(predictions))
    # print(predictions.shape)
    print(predictions[0,0])

    # Change a shape of a numpy.ndarray with results to get another one with one dimension
    probs = predictions.reshape(-1)

    # Get an array of 10 class IDs in descending order of probability
    top_10 = np.argsort(probs)[-10:][::-1]
    print(top_10)

    # header = 'class_id probability'

    # log.info(f'Image path: {image_path}')
    # log.info('Top 10 results: ')
    # log.info(header)
    # log.info('-' * len(header))

    # for class_id in top_10:
    #     probability_indent = ' ' * (len('class_id') - len(str(class_id)) + 1)
    #     log.info(f'{class_id}{probability_indent}{probs[class_id]:.7f}')

    # log.info('')

    # ----------------------------------------------------------------------------------------------------------------------
    # log.info('This sample is an API example, for any performance measurements please use the dedicated benchmark_app tool\n')
    # return 0


if __name__ == '__main__':
    sys.exit(main())
```

Regards,

Wan


Hi Wan,

Thank you for your answer, I understand.

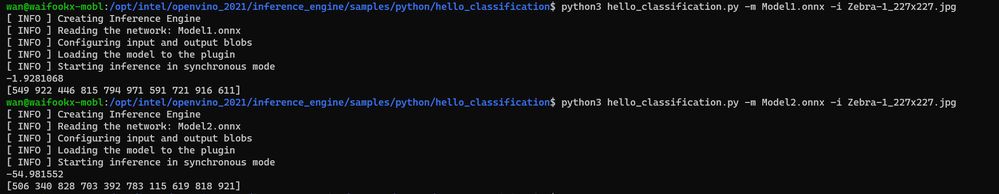

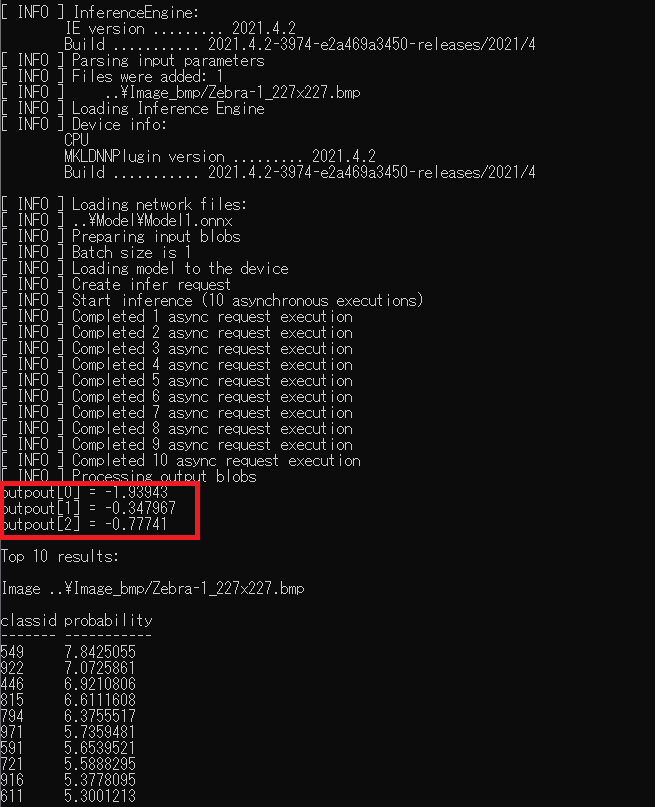

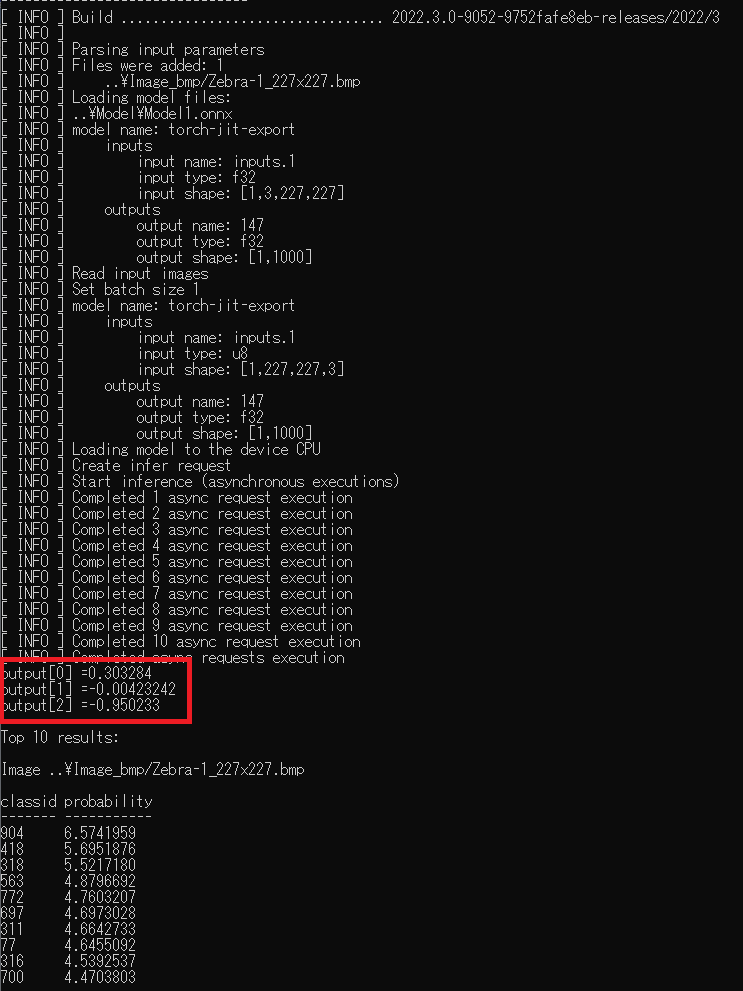

My environment is Windows 10 and C++, so I tried with the following C++ sample.

■OpenVINO 2021.4

C:\Program Files (x86)\Intel\openvino_2021.4.752\inference_engine\samples\cpp\classification_sample_async

■OpenVINO 2022.3.

C:\Program Files (x86)\Intel\openvino_2022.3.0\samples\cpp\classification_sample_async

The exact output values differ from those in my first post, but the trend is the same.

(I think the values differ from my first post because of differences in preprocessing, the order of the input images, the color channel order, and so on.)
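To illustrate why the color order alone can change the values, here is a small NumPy-only sketch. It assumes an HWC `uint8` image such as the one returned by `cv2.imread` (which loads channels in BGR order); the helper `to_nchw` and the tiny 2x2 image are made up for this example and are not part of either sample.

```python
import numpy as np


def to_nchw(image_hwc, swap_channels=False):
    """Convert an HWC image to a 1xCxHxW tensor, optionally reversing the channel order."""
    if swap_channels:
        # Reverse the last axis: BGR becomes RGB (or the other way round).
        image_hwc = image_hwc[:, :, ::-1]
    chw = image_hwc.transpose((2, 0, 1))   # HWC -> CHW
    return np.expand_dims(chw, axis=0)     # add the N dimension -> NCHW


# Hypothetical 2x2 image with a distinct constant value in each channel.
image = np.zeros((2, 2, 3), dtype=np.uint8)
image[..., 0] = 10
image[..., 1] = 20
image[..., 2] = 30

as_loaded = to_nchw(image)
swapped = to_nchw(image, swap_channels=True)

print(as_loaded.shape)            # tensor layout is N, C, H, W
print(as_loaded[0, :, 0, 0])      # channel values of the top-left pixel, as loaded
print(swapped[0, :, 0, 0])        # same pixel with the channel order reversed
```

The two tensors have the same shape but different contents, so a model fed one instead of the other will generally produce different output values.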

Model1 ONNX model (uses QuantizeLinear and DequantizeLinear) :

Result when using OpenVINO™ toolkit 2021.4 : -1.93943

Result when using OpenVINO™ toolkit 2022.3 : 0.303284

Model2 ONNX model (does not use QuantizeLinear and DequantizeLinear):

Result when using OpenVINO™ toolkit 2021.4 : -54.9816

Result when using OpenVINO™ toolkit 2022.3 : -54.9816

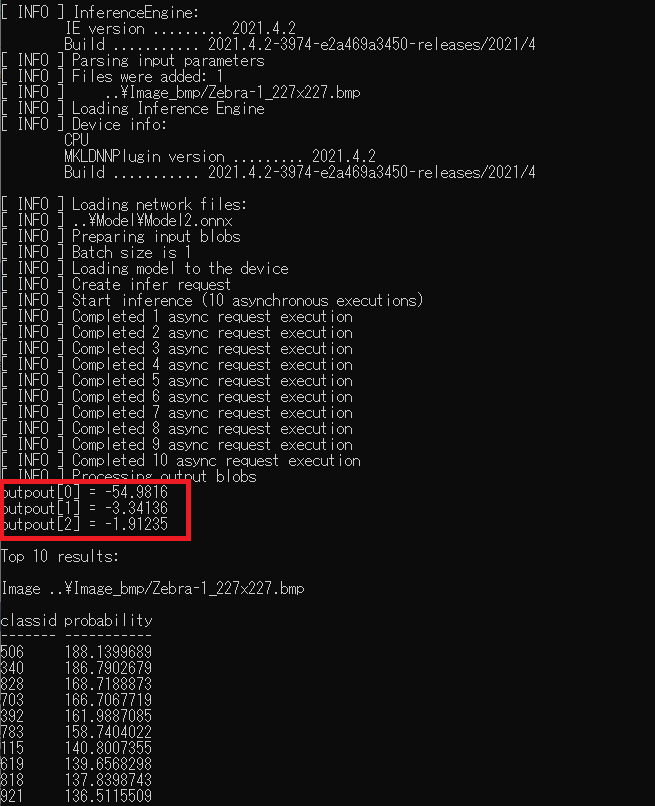

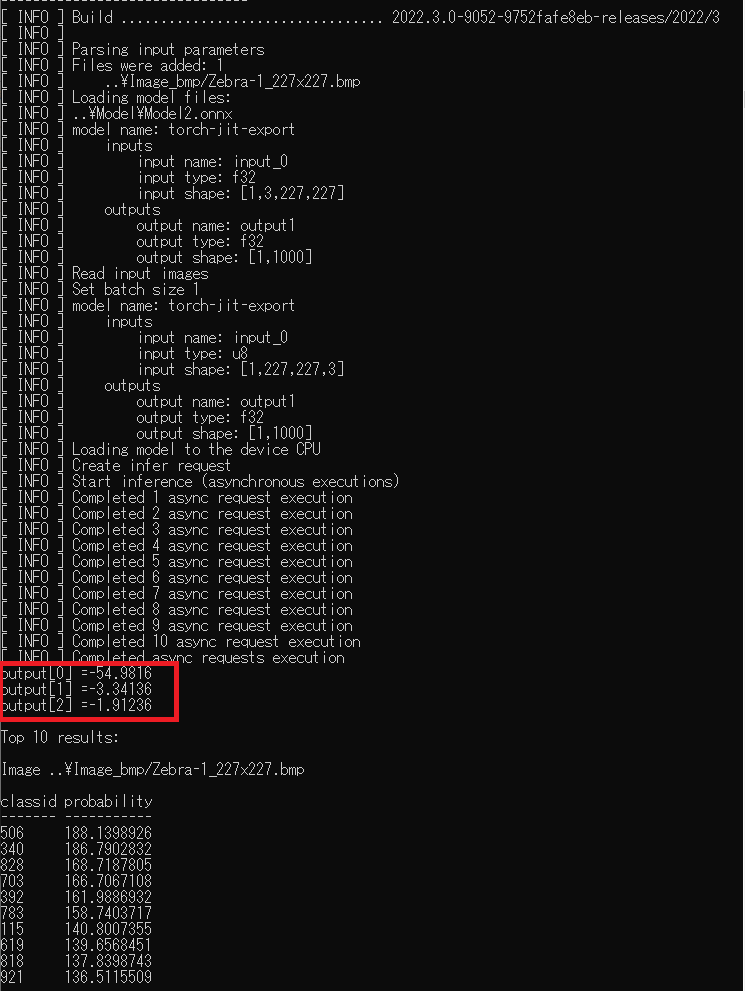

A reference image is attached below.

Added display of first three output values.

■OpenVINO 2021.4 and Model1

■OpenVINO 2022.3 and Model1

■OpenVINO 2021.4 and Model2

■OpenVINO 2022.3 and Model2

I may not be able to meet your request, but if you have any other requests, please let me know.

Regards,

North-man


Hi North-man,

Thanks for your information.

Let us check with our next level and we'll update you at the earliest.

Regards,

Wan


Hi North-man,

Thanks for your patience.

We've received feedback from our next-level team. May I know whether you installed OpenVINO™ toolkit on Windows from the installer, or built it from the GitHub source?

On another note, could you please share your hardware details and operating system with us? Our next-level team has requested this information to troubleshoot the issue further.

Regards,

Wan


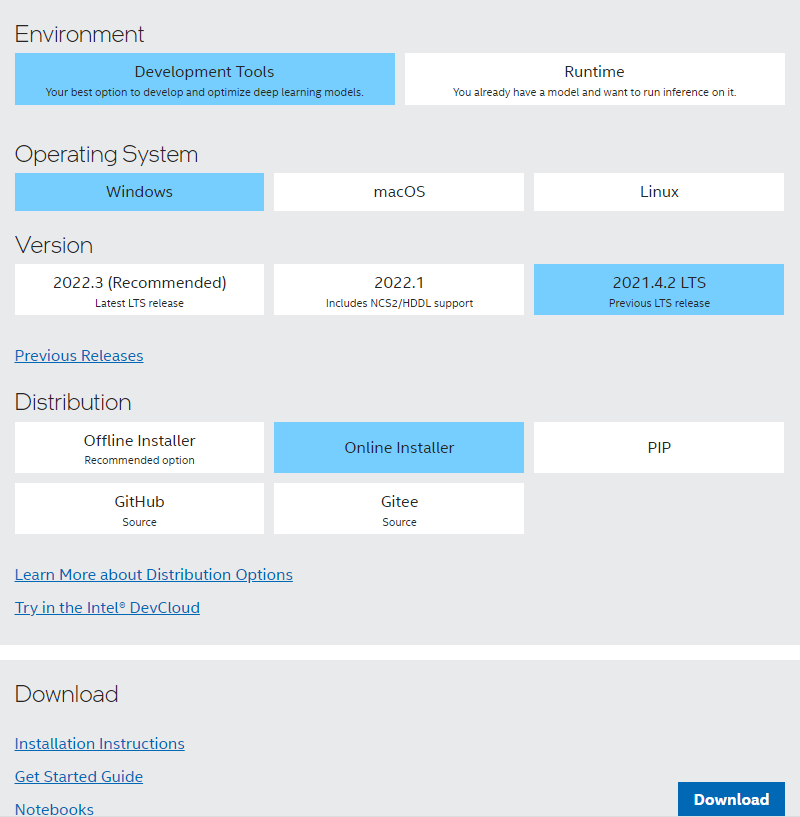

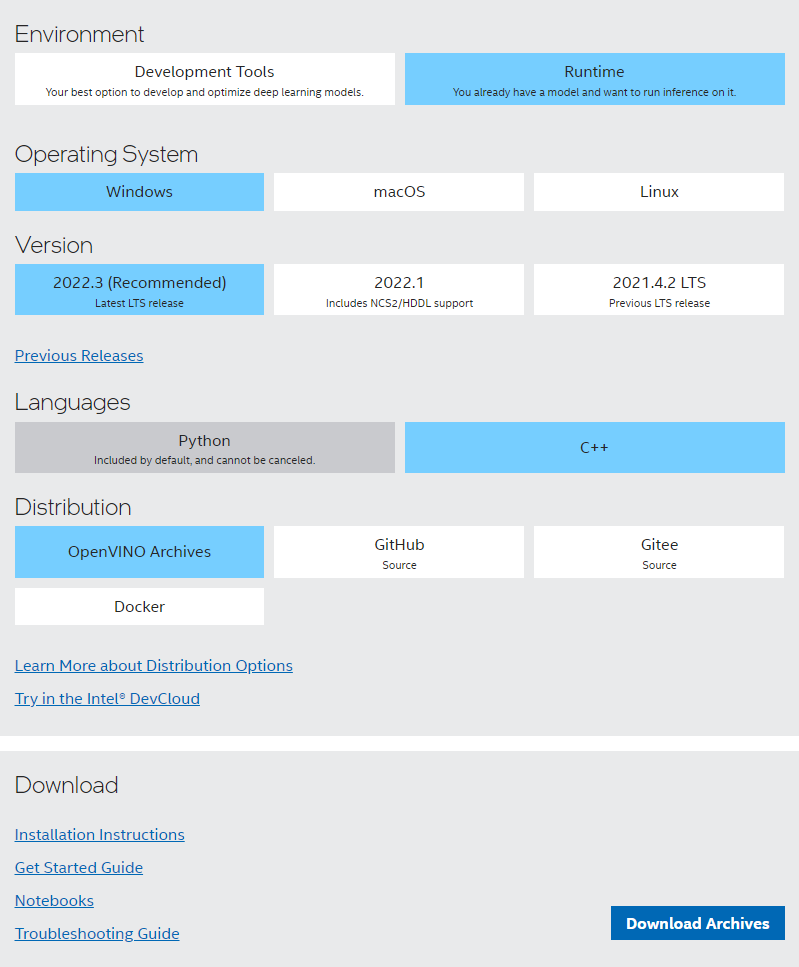

Hi Wan,

Thank you for your research.

I installed OpenVINO from the installer.

https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/download.html

■OpenVINO™ toolkit 2021.4.2

Both "Development Tools" and "Runtime" will download the same installer.

- w_openvino_toolkit_p_2021.4.752_online.exe

When I ran the installer, OpenVINO was installed below.

- C:\Program Files (x86)\Intel\openvino_2021.4.752

I used the following directories:

- deployment_tools\inference_engine\bin\intel64\Release

- deployment_tools\inference_engine\lib\intel64\Release

- deployment_tools\inference_engine\include

- deployment_tools\inference_engine\samples\cpp

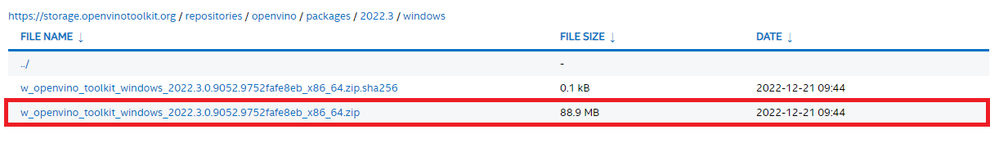

■OpenVINO™ toolkit 2022.3

I chose "Runtime" and then the zip archive on the next page.

Then I unpacked the zip file and got the following,

- runtime\bin\intel64\Release

- runtime\lib\intel64\Release

- runtime\include

- samples\cpp

My OS and Hardware (CPU) are below,

- OS : Windows 10 Enterprise (Version 21H2)

- CPU : Intel(R) Xeon(R) W-2145 CPU @ 3.70GHz 3.70 GHz

Do you have enough information? Please let me know if anything is missing.

Regards,

North-man


Hi North-man,

Thanks for your information.

Let me share the information with next level and we'll update progress here as soon as possible. Thanks.

Regards,

Wan


Hi North-man,

Thanks for your patience.

We've received feedback from our next level.

The results differ between OpenVINO™ Toolkit 2021.4 and OpenVINO™ Toolkit 2022.3 because in OpenVINO™ Toolkit 2021.4 the low precision transformations were not executed, as the input intervals were not constant.

This was fixed some time ago in the ONNX frontend https://github.com/openvinotoolkit/openvino/blob/master/src/frontends/onnx/frontend/src/op/quantize_linear.cpp#L91-L103.

Furthermore, the results from the quantized model are very different from the results of the non-quantized model. We are still checking whether this is expected behavior.

When low precision transformations are executed (as is the case for OpenVINO™ Toolkit 2022.3), numerical precision can be affected, because layers may then be executed with u8/i8 data types.
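As a rough illustration of the rounding involved, the sketch below follows the ONNX operator definitions (`QuantizeLinear`: `saturate(round(x / scale) + zero_point)`, `DequantizeLinear`: `(q - zero_point) * scale`) for a u8 tensor in NumPy. The scale, zero point, and input values are hypothetical and are not taken from the model in this thread, and this is not how the OpenVINO CPU plugin is implemented internally.

```python
import numpy as np


def quantize_linear(x, scale, zero_point, qmin=0, qmax=255):
    """ONNX-style QuantizeLinear for u8: round to nearest (ties to even), shift, saturate."""
    q = np.rint(x / scale) + zero_point
    return np.clip(q, qmin, qmax).astype(np.uint8)


def dequantize_linear(q, scale, zero_point):
    """ONNX-style DequantizeLinear: map the integer codes back to float32."""
    return (q.astype(np.float32) - zero_point) * scale


# Hypothetical quantization parameters covering roughly [-1, 1] with 256 levels.
scale = np.float32(2.0 / 255.0)
zero_point = 128

x = np.array([-0.99, -0.3, 0.0, 0.26, 0.9], dtype=np.float32)
q = quantize_linear(x, scale, zero_point)
x_hat = dequantize_linear(q, scale, zero_point)
error = np.abs(x - x_hat)

print("quantized codes :", q)
print("round-trip error:", error)
```

For values inside the representable range, each round trip loses at most about half a quantization step (`scale / 2`). When whole layers run in u8/i8, such errors can accumulate across the network, which is one plausible source of the differences discussed here.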

On another note, the probability results look suspicious. They should be within [0, 1] range but we get values like 188.1398773, etc. Is the model missing Softmax or something alike?

For example, if you run hello_classification with alexnet from the Open Model Zoo, the results look as follows:

[ INFO ] Top 10 results:

[ INFO ] class_id probability

[ INFO ] --------------------

[ INFO ] 340 0.9999918

[ INFO ] 292 0.0000066

[ INFO ] 282 0.0000012

[ INFO ] 293 0.0000004

[ INFO ] 288 0.0000001

[ INFO ] 83 0.0000000

[ INFO ] 290 0.0000000

[ INFO ] 102 0.0000000

[ INFO ] 63 0.0000000

[ INFO ] 287 0.0000000

Where 340 is the class id for zebra.

Regards,

Wan


Hi Wan,

Thank you for your research and feedback.

>This was fixed some time ago in the ONNX frontend ...

I will also check the ONNX frontend.

It may be hard to judge, but I would like to know which of the following is the case:

- 2021.4 was wrong (a bug), and 2022.3 fixed it

- 2021.4 was not wrong, but 2022.3 is better

>On another note, the probability results look suspicious. They should be within [0, 1] range but we get values like 188.1398773, etc.

>Is the model missing Softmax or something alike?

Yes, my model does not have softmax.

Without softmax, the output values are not in the [0, 1] range, but this does not affect classification performance, because the ranking of the classes stays the same.

If necessary, I can also prepare a model with softmax added.
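The point about softmax can be checked with a short NumPy sketch. It applies a numerically stable softmax to the first 10 Model2 output values from my first post. These are only 10 of the 1000 outputs, so the resulting probabilities are illustrative and not the real class probabilities of the model.

```python
import numpy as np


def softmax(logits):
    """Numerically stable softmax over a 1-D array of raw scores."""
    shifted = logits - np.max(logits)   # subtracting the max avoids overflow in exp
    exp = np.exp(shifted)
    return exp / exp.sum()


# First 10 Model2 outputs (OpenVINO 2021.4 column) from the first post.
logits = np.array([1.92688, -4.5917, -3.09134, 0.355102, -6.01955,
                   -4.48821, -5.00235, 2.16591, 2.74276, 11.9108])
probs = softmax(logits)

print("sum of probabilities:", probs.sum())
print("ranking by raw score  :", np.argsort(logits)[::-1])
print("ranking by probability:", np.argsort(probs)[::-1])
```

Softmax is strictly increasing in each input, so the two rankings are identical and the top class is the same with or without it. Only the scale of the numbers changes.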

Regards,

North-man


Hi North-man,

Thanks for your information.

Please send the model that includes softmax to the following email address so we can investigate the issue further.

waix.fook.wan@intel.com

Regards,

Wan


Hi North-man,

Thanks for your question.

Please submit a new question when additional information is needed as this thread will no longer be monitored.

Regards,

Wan


Hi Wan,

Thank you for your research.

Sorry for the late reply.

@Wan_Intel wrote:

> Hi North-man,
>
> Thanks for your question.
>
> Please submit a new question when additional information is needed as this thread will no longer be monitored.
>
> Regards,
>
> Wan

I will prepare a model with softmax added. Is it okay if I send it to your email address?

Regards,

North-man


Hi North-man.

Yes, you can send the model to the email address above.

We can continue our discussion here.

Regards,

Wan


Hi, Wan.

I understand.

I will email you when it is ready.

Please wait a little more.

Thank you.

Regards,

North-man


Hi North-man,

Thanks for sharing your information.

We've received your model and we'll further investigate the issue and update you as soon as possible.

Regards,

Wan
