Hi Babken,

Thank you for reaching out to us.

Could you please share the following information with us for further investigation?

· Version of the OpenVINO™ toolkit

· Hardware specifications

· Steps to reproduce
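The first two items can be gathered with a short script. The following is a minimal sketch, not an official diagnostic tool. The helper name `collect_environment_info` is hypothetical, and it assumes the 2021.4 Python API exposes `get_version()` in `openvino.inference_engine`. If that import fails, the OpenVINO entry is simply reported as `None`.

```python
import platform


def collect_environment_info():
    """Gather the details typically requested in a bug report."""
    info = {
        "os": platform.system(),                         # e.g. "Darwin" on macOS
        "os_release": platform.release(),                # kernel release string
        "macos_version": platform.mac_ver()[0] or None,  # None when not on macOS
        "machine": platform.machine(),                   # CPU architecture
        "python": platform.python_version(),
    }
    try:
        # Assumption: the 2021.4 Python API exposes get_version() here.
        from openvino.inference_engine import get_version
        info["openvino"] = get_version()
    except Exception:
        # OpenVINO is not installed or could not be imported.
        info["openvino"] = None
    return info


if __name__ == "__main__":
    for key, value in collect_environment_info().items():
        print(f"{key}: {value}")
```

Running the script on the affected machine and pasting its output into the thread covers the toolkit version and the basic hardware and OS details in one step.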

Regards,

Wan

Hi Wan,

- Version of the OpenVINO™ toolkit: 2021.04

- Hardware specification:

Device: iMacPro1,1

Device.family: MacOs 1.6.5

Release: .134.6

- Steps to reproduce:

Unfortunately, I have no reproducible example to share. It happened on our client side (10 clients reported the same issue on the same device).

The crash occurs when loading a model via the ov_impl.load(weight*,...) method.

Regards,

Babken

Hi Babken,

Thanks for your information.

Referring to System Requirements in Install and Configure Intel® Distribution of OpenVINO™ toolkit for macOS, the supported Operating System is macOS 10.15.

On another note, macOS 11.5 (64-bit) was validated for OpenVINO™ toolkit built from source. Please refer to Build OpenVINO™ Inference Engine on macOS* Systems.
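To check a client machine against these two versions, something like the sketch below could be used. The function names and the three labels are hypothetical. The only versions encoded are the two mentioned in this reply, so this is not an exhaustive support matrix.

```python
import platform

# Versions taken from the reply above; this is not an exhaustive support list.
BINARY_SUPPORTED = (10, 15)  # macOS 10.15 for the prebuilt distribution
SOURCE_VALIDATED = (11, 5)   # macOS 11.5 for a build from source


def parse_major_minor(version):
    """Turn a string such as '10.15.7' into the tuple (10, 15)."""
    parts = version.split(".")
    major = int(parts[0])
    minor = int(parts[1]) if len(parts) > 1 else 0
    return (major, minor)


def classify_macos(version):
    """Return 'binary', 'source', or 'unvalidated' for a macOS version string."""
    major_minor = parse_major_minor(version)
    if major_minor == BINARY_SUPPORTED:
        return "binary"
    if major_minor == SOURCE_VALIDATED:
        return "source"
    return "unvalidated"


if __name__ == "__main__":
    current = platform.mac_ver()[0]  # empty string when not running on macOS
    if current:
        print(current, "->", classify_macos(current))
    else:
        print("Not running on macOS")
```

A result of "unvalidated" does not mean the toolkit cannot run there, only that the version is not one of the two named above.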

Regards,

Wan

Hi Wan,

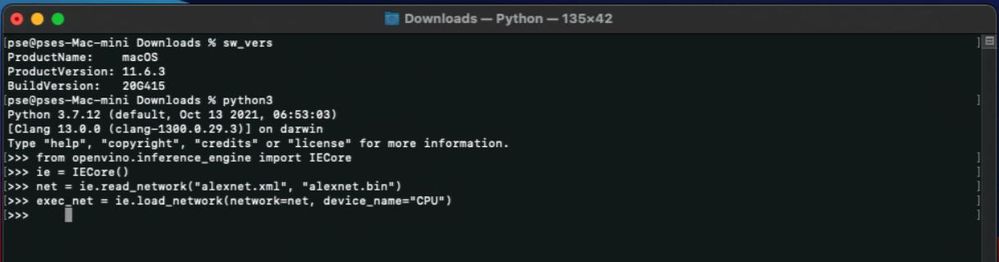

Hi Babken,

I noticed that your client loads the network using the ov_impl.load(weight*,...) method.

For your information, you can use the load_network() method to load the network.

For example:

from openvino.inference_engine import IECore

ie = IECore()

net = ie.read_network("alexnet.xml", "alexnet.bin")

exec_net = ie.load_network(network=net, device_name="CPU")
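When the failure happens on a client machine, it can help to rule out missing or empty model files before the Inference Engine is called. The wrapper below is a minimal sketch under that assumption. `load_network_checked` is a hypothetical helper, not part of the OpenVINO™ API, and it only wraps the `read_network()` and `load_network()` calls shown above.

```python
from pathlib import Path


def load_network_checked(xml_path, bin_path, device_name="CPU"):
    """Validate the IR files, then read and load the network."""
    xml_file, bin_file = Path(xml_path), Path(bin_path)
    for path in (xml_file, bin_file):
        if not path.is_file():
            raise FileNotFoundError(f"Model file not found: {path}")
        if path.stat().st_size == 0:
            raise ValueError(f"Model file is empty: {path}")

    # Imported here so the path checks work even without OpenVINO installed.
    from openvino.inference_engine import IECore

    ie = IECore()
    net = ie.read_network(model=str(xml_file), weights=str(bin_file))
    return ie.load_network(network=net, device_name=device_name)
```

For example, `exec_net = load_network_checked("alexnet.xml", "alexnet.bin")` raises a Python exception for a missing or empty file before any native code is called.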

Regards,

Wan

Hi Wan,

Thanks for your reply.

Do you think this (loading the network within ov_impl.load) is what leads to the reported crash? If so, could you elaborate a little?

Warm Wishes,

Babken

Hi Babken,

For your information, OpenVINO™ Toolkit uses the Inference Engine to read Intermediate Representation (IR) and ONNX models and to execute them on the device.

According to the OpenVINO™ Toolkit 2021.4 Inference Engine Python API, there is no ov_impl.load() method.

Therefore, you can use the load_network() method from OpenVINO™ Toolkit 2021.4 Inference Engine Python API to load the network.

For more information on the typical Inference Engine API workflow, please refer to Integrate Inference Engine with Your C++ Application.

Regards,

Wan

Hi Wan,

Thanks for the materials.

The steps on our end are as follows:

pInferPlugin = std::make_unique<Core>(XmlPath);

network = pInferPlugin->ReadNetwork(model);

pInferPlugin->LoadNetwork(network, ...);

The crash then occurs (the backtrace is attached).

The hardware/software info is also provided.

Can you please let me know what could be the reason for the crash, and what I am doing incorrectly?

Let me know if more info is required

Regards,

Babken

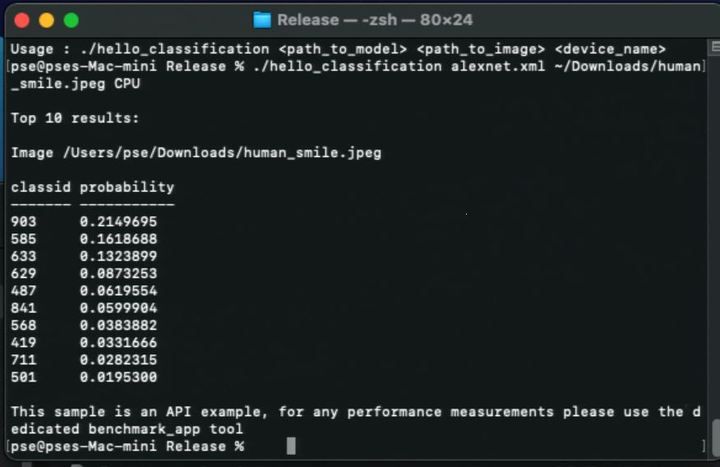

Hi 1985,

I have validated the Hello Classification C++ Sample from OpenVINO™ Toolkit 2021.4.1 LTS on macOS Big Sur 11.6.3.

For your information, the error you encountered, “EXC_BAD_ACCESS”, is an exception raised when a process accesses invalid memory. Please refer to here for more information.
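If the problem can be reproduced through the Python API rather than the C++ application, the standard library's `faulthandler` module can record where the Python code was when a fatal signal arrived. On macOS, EXC_BAD_ACCESS usually surfaces as SIGSEGV or SIGBUS. The sketch below is only an illustration: `enable_crash_trace` and the log file name are hypothetical, and it does not help with a crash inside a pure C++ process.

```python
import faulthandler


def enable_crash_trace(log_path="crash_trace.log"):
    """Dump Python tracebacks to log_path if the process receives a fatal signal."""
    log_file = open(log_path, "w")
    # faulthandler covers SIGSEGV, SIGFPE, SIGABRT, SIGBUS and SIGILL.
    faulthandler.enable(file=log_file, all_threads=True)
    return log_file  # keep a reference so the file stays open
```

Calling `enable_crash_trace()` once at startup, before the model is loaded, is enough. The returned file object has to stay open for as long as the handler is enabled.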

On another note, could you please run the Hello Classification C++ Sample on your end to check whether you encounter the same error with this sample?

Regards,

Wan