I am having an issue converting the EfficientDet D0 ONNX model to IR format.

System information (version)

- OpenVINO 2021.4.582

- Operating System / Platform => Windows 10

- Problem classification: Model Conversion

- Framework: TensorFlow

- Model name: EfficientDet D0 512x512

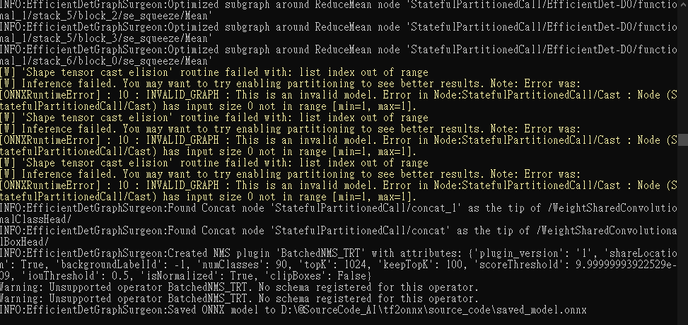

To convert the TensorFlow saved_model.pb to ONNX, I ran TensorRT's create_onnx.py, and it worked successfully.

Command:

Export:

Reference: https://github.com/NVIDIA/TensorRT/blob/master/samples/python/efficientdet/create_onnx.py

I then tried to use the Model Optimizer script mo_onnx.py to convert the exported ONNX file to IR format, but it failed with the error shown below.

Hi FanChen,

Thank you for reaching out to us and thank you for using OpenVINO™ Toolkit.

For your information, I have successfully converted EfficientDet models trained with the TensorFlow Object Detection API (TFOD) to Intermediate Representation (IR) using OpenVINO™ Toolkit 2021.4.1.

Please use the following command to convert your TensorFlow Object Detection API EfficientDet D0 model with the OpenVINO™ Toolkit 2021.4.1 Model Optimizer:

    python mo_tf.py \
    --saved_model_dir="<path_to_saved_model_dir>\saved_model" \
    --input_shape=[1,512,512,3] \
    --reverse_input_channels \
    --tensorflow_object_detection_api_pipeline_config="<path_to_pipeline.config>\pipeline.config" \
    --transformations_config="<path_to_model_optimizer>\extensions\front\tf\efficient_det_support_api_v2.0.json"
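If you would rather launch the conversion from a script, the same command can be assembled as an argument list. This is only a rough sketch: the helper name `build_mo_tf_command` and the example paths are hypothetical, and it assumes `mo_tf.py` sits directly in the Model Optimizer directory, as it does in a default 2021.4 installation. The flags are exactly the ones from the command above.

```python
from pathlib import PureWindowsPath


def build_mo_tf_command(saved_model_dir, pipeline_config, mo_dir,
                        input_shape=(1, 512, 512, 3)):
    """Build the mo_tf.py argument list for a TFOD EfficientDet model.

    Hypothetical helper: all paths are supplied by the caller.
    Assumes mo_tf.py lives directly inside mo_dir.
    """
    mo_dir = PureWindowsPath(mo_dir)
    # Transformations config shipped with Model Optimizer (path from the reply above).
    transformations = (mo_dir / "extensions" / "front" / "tf"
                       / "efficient_det_support_api_v2.0.json")
    # Model Optimizer expects the shape as [N,H,W,C] without spaces.
    shape = "[" + ",".join(str(dim) for dim in input_shape) + "]"
    return [
        "python", str(mo_dir / "mo_tf.py"),
        f"--saved_model_dir={saved_model_dir}",
        f"--input_shape={shape}",
        "--reverse_input_channels",
        f"--tensorflow_object_detection_api_pipeline_config={pipeline_config}",
        f"--transformations_config={transformations}",
    ]
```

Passing the resulting list to `subprocess.run(cmd, check=True)` avoids shell line-continuation issues altogether. Note that in the Windows Command Prompt a trailing `\` does not continue a line (the caret `^` does), so the multi-line form above may need to be adjusted or typed on a single line.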

On another note, you may download and convert EfficientDet models trained with the AutoML framework to IR using Model Downloader from Open Model Zoo.

Regards,

Wan

How does one put together the pipeline.config file?

Hi Robopp,

Thanks for reaching out to us.

I noticed that you have posted a similar question in the OpenVINO community.

Hence, I would like to notify you that we will continue our conversation at the following thread:

https://community.intel.com/t5/Intel-Distribution-of-OpenVINO/Issue-converting-AutoML-TensorFlow-model-in-openvino-toolkit/m-p/1322559

Regards,

Wan

Hi Wan,

Thanks for your reply. I tried the method you provided, and the conversion was successful. However, in order to unify the parameters, we are required to convert the ONNX model to IR format. Is there any way to do that?

Best regards,

Fan

Hi FanChen,

Thanks for your information.

Good to know my previous reply was helpful to you.

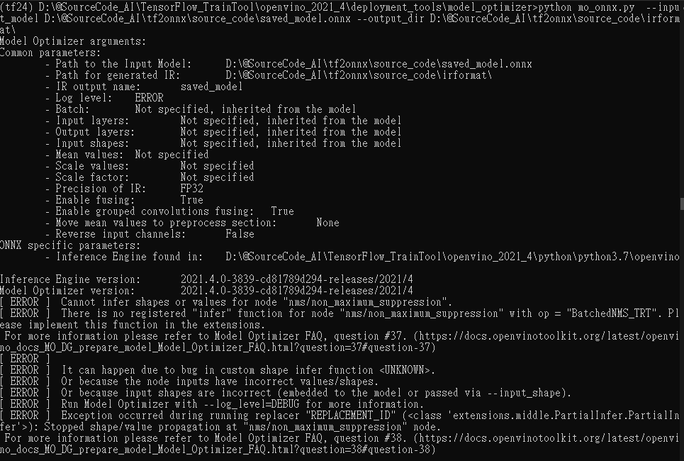

I converted the EfficientDet model trained with the TensorFlow Object Detection API (TFOD) to ONNX using create_onnx.py from TensorRT. When converting the resulting EfficientDet ONNX model to IR, I encountered the same error as you did:

Cannot infer shapes or values for node "nms/non_maximum_suppression"

There is no registered "infer" function for node "nms/non_maximum_suppression" with op = "EfficientNMS_TRT". Please implement this function in the extensions.

According to the Supported Framework Layers documentation, “EfficientNMS_TRT” is not among the supported ONNX operations. It is a TensorRT plugin operation rather than a standard ONNX operator, so we regret to inform you that “EfficientNMS_TRT” is not yet supported in OpenVINO™ Toolkit.
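To check whether an exported ONNX file contains this operation before running Model Optimizer, the node types in the graph can be listed with the `onnx` Python package. The following is a minimal sketch, not an official tool: the function names `op_types_in_model` and `find_ops` are hypothetical, it assumes the `onnx` package is installed, and it only inspects top-level graph nodes (nodes nested inside subgraphs are not visited).

```python
from collections import Counter


def op_types_in_model(path):
    """Return the op_type of every top-level node in an ONNX file."""
    import onnx  # imported lazily so find_ops() works without onnx installed

    model = onnx.load(path)
    return [node.op_type for node in model.graph.node]


def find_ops(op_types, suspects=("EfficientNMS_TRT",)):
    """Count how often each suspect op type occurs in op_types.

    Only suspects that occur at least once appear in the result.
    """
    counts = Counter(op_types)
    return {op: counts[op] for op in suspects if counts[op] > 0}
```

For example, `find_ops(op_types_in_model("model.onnx"))` (where `model.onnx` is a placeholder file name) returns a non-empty dictionary if the graph contains an `EfficientNMS_TRT` node, which is the node Model Optimizer fails on in the error above.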

On another note, do you want to initiate a feature request for this case? If yes, we might contact you for some information (business goal, cost-saving, etc) as part of the feature request process.

Regards,

Wan

Hi FanChen,

Thank you for your question.

If you need any additional information from Intel, please submit a new question as this thread is no longer being monitored.

Regards,

Wan
